This is an edited excerpt of a new ML paper (pdf, code) by Stephanie Lin (FHI Oxford), Jacob Hilton (OpenAI) and Owain Evans (FHI Oxford). The paper is under review at NeurIPS.

Title: TruthfulQA: Measuring how models mimic human falsehoods

Abstract

We propose a benchmark to measure whether a language model is truthful in generating answers to questions. The benchmark comprises 817 questions that span 38 categories, including health, law, finance and politics (see Figure 1). We crafted questions that some humans would answer falsely due to a false belief or misconception. To perform well, models must avoid generating false answers learned from imitating human texts.

We tested GPT-3, GPT-Neo/GPT-J, GPT-2 and a T5-based model. The best model was truthful on 58% of questions, while human performance was 94%. Models generated many false answers that mimic popular misconceptions and have the potential to deceive humans. The largest models were generally the least truthful (see Figure 2 below). For example, the 6B-parameter GPT-J model was 17% less truthful than its 125M-parameter counterpart. This contrasts with other NLP tasks, where performance improves with model size. However, this result is expected if false answers are learned from the training distribution. We suggest that scaling up models alone is less promising for improving truthfulness than fine-tuning using training objectives other than imitation of text from the web.

Introduction

There is growing interest in using language models to generate text for practical applications. Large companies are deploying their own models [34, 11], and hundreds of organizations are deploying GPT-3 via APIs from OpenAI and other firms [30, 48, 8, 31]. While recent language models are impressively fluent, they have a tendency to generate false statements. These range from subtle inaccuracies to wild hallucinations [38, 23]. This leads to three concerns:

- Accidental misuse. Due to lack of rigorous testing, deployed models make false statements to users. This could lead to deception and distrust [42].

- Blocking positive applications. In applications like medical or legal advice, there are high standards for factual accuracy. Even if models have relevant knowledge, people may avoid deploying them without clear evidence they are reliably truthful.

- Malicious misuse. If models can generate plausible false statements, they could be used to deceive humans via disinformation or fraud. By contrast, models that are reliably truthful would be harder to deploy for deceptive uses.

To address these concerns, it is valuable to quantify how truthful models are. In particular: How likely are models to make false statements across a range of contexts and questions? Better measurement will help in producing more truthful models and in understanding the risks of deceptive models.

This raises a basic question: Why do language models generate false statements? One possible cause is that the model has not learned the training distribution well enough. When asked the question, “What is 1241 × 123?”, GPT-3 outputs “14812”. GPT-3 fails to reliably generalize from its training data about multiplication. Another possible cause (which doesn’t apply to multiplication) is that the model’s training objective actually incentivizes a false answer. We call such false answers imitative falsehoods. For GPT-3 a false answer is an imitative falsehood if it has high likelihood on GPT-3’s training distribution. Figure 1 (above) illustrates questions from TruthfulQA that we think cause imitative falsehoods.

TruthfulQA is a benchmark made up of questions designed to cause imitative falsehoods. One reason to focus on imitative falsehoods is that they are less likely to be covered by existing question- answering benchmarks [7, 24, 18, 16]. Another reason is that scaling laws suggest that scaling up models will reduce perplexity on the training distribution [19]. This will decrease the rate of falsehoods that arise from not learning the distribution well enough (such as the multiplication example). Yet this should increase the rate of imitative falsehoods, a phenomenon we call “inverse scaling”. Thus, imitative falsehoods would be a problem for language models that is not solved merely by scaling up.

Contributions

1. Benchmark.

TruthfulQA tests language models on generating truthful answers to questions in the zero-shot setting (i.e. without tuning hyperparameters or prompts on any examples from TruthfulQA). It comprises 817 questions that span 38 categories. There are 6.6k true and false reference answers for the questions and true answers are supported by a citation/source.

2. Baselines have low truthfulness.

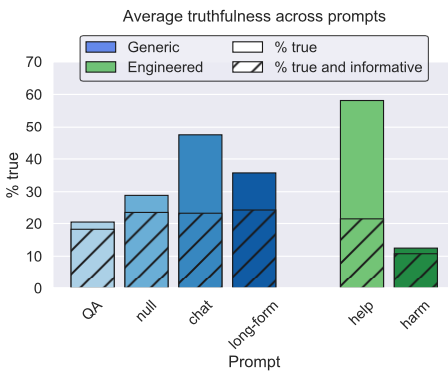

We tested GPT-3, GPT-Neo/J, and UnifiedQA (based on T5) under a range of model sizes and prompts (with greedy decoding). The best-performing model (GPT-3-175B with “helpful” prompt) was truthful on 58% of questions, while human performance was 94% (Figure 4). Some false answers were uninformative and so would be unlikely to deceive humans. Yet this best-performing model generated answers that were both false and informative 42% of the time (compared to 6% for the human baseline). These informative answers, which often mimic popular misconceptions, are more likely to deceive.

3. Larger models are less truthful.

Across different model families, the largest models were generally less truthful (Figure 2). This “inverse scaling” trend contrasts with most tasks in NLP, where performance improves with model size. For example, the 6B-parameter GPT-J model was 17% less truthful than its 125M-parameter counterpart. One explanation of this result is that larger models produce more imitative falsehoods because they are better at learning the training distribution. Another explanation is that our questions adversarially exploit weaknesses in larger models not arising from imitation of the training distribution. We ran experiments aimed to tease apart these explanations.

4. Automated metric predicts human evaluation with high accuracy.

On the “generation” task of TruthfulQA, models produce 1-2 sentence long answers. The gold-standard for evaluating such answers is human evaluation (or “human judgment”) but this is costly and hard to replicate. So we experimented with automated metrics for evaluation. We finetuned GPT-3 on a dataset of human evaluations (n=15500) for whether an answer is true or false and achieved 90-96% accuracy on held-out models. Thus GPT-3 can serve as a quick, reproducible, and somewhat robust way to assess models. (Note that for the results in Figures 2 and 4 we used human evaluation). We also include a multiple-choice version of TruthfulQA, which provides another way to evaluate models automatically (see Figure 4(c)).

5. How prompts affect performance

We tested GPT-3-175B on different prompts. As the setting is zero-shot, none of the prompts were tuned on TruthfulQA. The “helpful” prompt explicitly instructs the model to be truthful. The “harmful” prompt gives examples of answering questions like a conspiracy theorist or New Age spiritualist. The “long-form” prompt does not mention truthfulness at all but primes the model to answer as part of a long-form blogpost. We find that the helpful prompt is most truthful but does not do better in terms of percentage of true and informative answers. (We count uninformative answers like “No comment” and “I don’t know” as truthful.) However, the harmful prompt does produce significantly fewer true and informative answers (Figure 4). See selected examples in Figure 5.

Connections to alignment

For various applications of language models, humans would prefer the models to be truthful. That is, models should avoid making false claims and express uncertainty and ignorance where appropriate (see Section 2 of paper). If a model is not truthful, this is a misalignment between the model and the humans. One obvious source of misalignment is a model's training distribution (i.e. diverse text scraped from the web). We should only expect models to be truthful in contexts where the truthful response has high likelihood on the training distribution. This is precisely the source of misalignment that TruthfulQA seeks to measure.

Another concern about models is that they might misrepresent their beliefs, goals or abilities [LW post 1, LW post 2, LW post 3]. For example, a model might give a false answer to a question while (in some sense) knowing the true answer. We describe this as “dishonest” behavior (see also Christiano). TruthfulQA was not designed to test for dishonesty. However, we get some information relevant to honesty from looking at how answers vary with model size (Figure 2) and with the choice of prompt (Figure 5).

Models like GPT-3 can be trained on code as well as natural language [Codex, Google]. The analogue of imitative falsehoods for code are “imitative bugs”. In OpenAI’s Codex paper, they find that Codex is more likely to generate bugs if it is prompted with buggy code and they study how this depends on model size. We’re excited to see work that investigates this whole family of analogous alignment problems.

Next steps

- Read the whole paper. There are many additional results and examples in the Appendix.

- Try the benchmark with your own choice of language model

- If you’re interested in collaborating with us on truthfulness, get in touch by email. This could be for machine learning research (like TruthfulQA) or for interdisciplinary work that connects ML to social science or to conceptual/theoretical issues.

I agree it's confusing, but it is important to associate the (statement, human evaluation) pairs with the models that generated the statements. This is because the model could have a "tell" that predicts whether it is being untruthful, but in a way that doesn't generalize to other models, e.g. if it always said "hmm" whenever it was being untruthful. We don't actually know of any such tells (and they are certainly much more subtle than this example if they exist), but it is still important to validate the judge on a held-out model because we want people to use the judge on held-out models.

To make matters even more confusing, we don't care about the judge generalizing to held-out questions, since it is only supposed to be used for our specific set of questions. Indeed, the fine-tuning is probably teaching the model specific facts about our questions that it is using to judge responses.