Rational Agents Cooperate in the Prisoner's Dilemma

All that matters is that the way you make decisions about prisoner's dilemmas is identical; all other characteristics of the players can differ. And that's exactly what the premise of "both players are rational" does; it ensures that their decision-making processes are replicas of each other.

That's not quite all that matters; it also has to be common knowledge among the players that they are similar enough to make their decisions for the same reasons. So it's not enough to just say "I'm a functional decision theorist, therefore I will cooperate with anyone else who claims to be a FDT", because then someone can just come along, claim to be a FDT to trick you into cooperating unconditionally, and then defect in order to get more utility for themselves.

Alternatively, your opponent might be the one confused about whether they are a FDT agent or not, and will just play cooperate unconditionally as long as you tell them a convincing-enough lie. Deontological issues aside, if you can convince your opponent to cooperate regardless of your own decision, then any decision theory (or just common sense) will tell you that you should play defect.

The point is, you might think you're a FDT agent, or want to be one, but unless you're actually sufficiently good at modeling your counterparty in detail (including modeling their model of you), and you are also reasonably confident that your counterparty possesses the same skills, such that your decisions really do depend on their private mental state (and vice versa), one of you might actually be closer to a rock with "cooperate" written on it, for which actual FDT agents (or anyone clever enough to see that) will correctly defect against you.

Among humans, making your own decision process legible enough and correlated enough with your opponent's decision process is probably hard or at least non-trivial; if you're an AI that can modify and exhibit your own source code, it is probably a bit easier to reach mutual cooperation, at least with other AIs. I wrote a bit more about this here.

I agree with everything you say there. Is this intended as disagreement with a specific claim I made? I'm just a little confused what you're trying to convey.

If you agree with everything he said, then you don't think rational agents cooperate on this dilemma in any plausible real-world scenario, right? Even superintelligent agents aren't going to have full and certain knowledge of each other.

No? Like I explained in the post, cooperation doesn't require certainty, just that the expected value of cooperation is higher than that of defection. With the standard payoffs, rational agents cooperate as long as they assign greater than 75% credence to the other player making the same decision as they do.
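The 75% figure can be reproduced with a short calculation. This is a minimal sketch, assuming the "standard payoffs" are the usual jail-year version (1 year each for mutual cooperation, 2 years each for mutual defection, 0 and 3 years when one player defects alone); those numbers and the name `cooperation_threshold` are illustrative assumptions, not taken from the post.

```python
from fractions import Fraction

def cooperation_threshold(reward, sucker, temptation, punishment):
    """Credence p (that the other player makes the same move as you)
    above which cooperating has the higher expected value.

    EV(cooperate) = p * reward     + (1 - p) * sucker
    EV(defect)    = p * punishment + (1 - p) * temptation
    Solving EV(cooperate) > EV(defect) for p gives the ratio below.
    """
    gain_from_matching = Fraction(reward - punishment)
    gain_from_exploiting = Fraction(temptation - sucker)
    return gain_from_exploiting / (gain_from_exploiting + gain_from_matching)

# Assumed payoffs: utility = negative years in jail.
threshold = cooperation_threshold(reward=-1, sucker=-3, temptation=0, punishment=-2)
print(threshold)  # 3/4
```

With different payoffs the threshold moves: for the assumed values 3, 0, 5, 1 it comes out to 5/7 rather than 3/4.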

Not really disagreeing with anything specific, just pointing out what I think is a common failure mode where people first learn of better decision theories than CDT, say, "aha, now I'll cooperate in the prisoner's dilemma!" and then get defected on. There's still some additional cognitive work required to actually implement a decision theory yourself, which is distinct from both understanding that decision theory, and wanting to implement it. Not claiming you yourself don't already understand all this, but I think it's important as a disclaimer in any piece intended to introduce people previously unfamiliar with decision theories.

Ah, I see. That's what I was trying to get at with the probabilistic case of "you should still cooperate as long as there's at least a 75% chance the other person reasons the same way you do", and the real-world examples at the end, but I'll try to make that more explicit. Becoming cooperate-bot is definitely not rational!

Alright, I'll bite. As a CDT fan, I will happily take the 25 dollars. I'll email you on setting up the experiment. If you'd like, we could have a third party hold money in escrow?

I'm open to some policy which will ceiling our losses if you don't want to risk $2050, or conversely, something which will give a bonus if one of us wins by more than $5 or something.

As far as Newcomb's problem goes, what if you find a super intelligent agent that says it tortures and kills anyone who would have oneboxed in Newcomb? This seems roughly as likely to me as finding the omega from the original problem. Do you still think the right thing to do now is commit to oneboxing before you have any reason to think that commitment has positive EV?

I did not expect anyone to accept my offer! Would you be willing to elaborate on why you're doing so? Do you believe that you're going to make money for some reason? Or do you believe that you're going to lose money, and that doing so is the rational action? (The latter is what CDT predicts, the former just means you think you found a flaw in my setup.)

I would be fine with a loss-limiting policy, sure. What do you propose?

I'm not sure how to resolve your Newcomb's Basilisk, that's interesting. My first instinct is to point out that that agent and game are strictly more complicated than Newcomb's problem, so by Occam's Razor they're less likely to exist. But it's easy to modify the Basilisk to be simpler than Omega; perhaps by saying that it tortures anyone who would ever do anything to win them $1,000,000. So I don't think that's actually relevant.

Isn't this a problem for all decision theories equally? I could posit a Basilisk that tortures anyone who would two-box, and a CDT agent would still two-box.

I guess the rational policy depends on your credence that you'll encounter any particular agent? I'm not sure, that's a very interesting question. How do we determine which counterfactuals actually matter?

One flaw in the setup is that the person opposing you could generate a random sequence beforehand and simply follow that when choosing options in the "game." I assume the offer to play the game is not still available and/or you would not knowingly choose to play it against someone using this strategy, but if you would I'll take the $25.

The offer is still open, but the point is that it's positive expected value for both me and a causal decision theorist when the CDT agent is making the choices themselves, which I believe implies irrationality on their part. I'm not interested in playing against someone who's "cheating" by generating their sequence some other way, that defeats the point. :)

Consider two games: the standard prisoners' dilemma and a modified version of the prisoners' dilemma. In this modified version, after both players have submitted their moves, one is randomly chosen. Then, the move of the other player is adjusted to match that of the randomly chosen player. These are very different games with very different strategic considerations. Therefore, you should not define what you mean by game theory in a way that would make rational players view both games as the same because by doing so you have defined-away much of real-world game theory coordination challenges.
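To see how different the two games are, one can check which move (if any) is strictly dominant in each. This is a rough sketch, assuming jail-year payoffs (not specified in the comment) and reading the modified rule as: a fair coin picks one player, and both players end up playing that player's move.

```python
from fractions import Fraction

# Assumed payoffs to player A, indexed by (A's move, B's move).
PAYOFF_TO_A = {("C", "C"): -1, ("C", "D"): -3, ("D", "C"): 0, ("D", "D"): -2}
MOVES = ("C", "D")

def standard_payoff(a, b):
    """A's payoff in the ordinary prisoner's dilemma."""
    return Fraction(PAYOFF_TO_A[(a, b)])

def modified_payoff(a, b):
    """A's expected payoff when a fair coin picks whose move both players end up playing."""
    half = Fraction(1, 2)
    return half * PAYOFF_TO_A[(a, a)] + half * PAYOFF_TO_A[(b, b)]

def dominant_move(payoff):
    """Return the move that is strictly better against every opposing move, or None."""
    for move in MOVES:
        others = [m for m in MOVES if m != move]
        if all(payoff(move, b) > payoff(o, b) for o in others for b in MOVES):
            return move
    return None

print(dominant_move(standard_payoff))  # D
print(dominant_move(modified_payoff))  # C
```

Under these assumptions defecting is dominant in the standard game and cooperating is dominant in the modified one, which is the sense in which the strategic considerations differ.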

I'm a little confused by this comment. In the real world, we don't have perfectly rational agents, nor do we have common knowledge of each other's reasoning processes, so of course any game in the real world is going to be much more complicated. That's why we use simplified models like rational choice theory, to try to figure out useful things in an easier to calculate setting and then apply some of those learnings to the real world.

I agree your game is different in certain ways, and a real human would be more likely to cooperate in it, but I don't see how that's relevant to what I wrote. Consider the game of chess, but the bishops are replaced by small pieces of rotting meat. This also may cause different behavior from real humans, but traditional game theory would view it as the same game. I don't think this invalidates the game theory. (Of course you could modify the game theory by adding a utility penalty for moving any bishop, but you can also do that in the prisoner's dilemma.)

Basically what I'm saying is I don't understand your point, sorry.

I teach an undergraduate game theory course at Smith College. Many students start by thinking that rational people should cooperate in the prisoners' dilemma. I think part of the value of game theory is in explaining why rational people would not cooperate, even knowing that everyone not cooperating makes them worse off. If you redefine rationality such that you should cooperate in the prisoners' dilemma, I think you have removed much of the illuminating value of game theory. Here is a question I will be asking my game theory students on the first class:

Our city is at war with a rival city, with devastating consequences awaiting the loser. Just before our warriors leave for the decisive battle, the demon Moloch appears and says “sacrifice ten healthy, loved children and I will give +7 killing power (which is a lot) to your city’s troops and subtract 7 from the killing power of your enemy. And since I’m an honest demon, know that right now I am offering this same deal to your enemy.” Should our city accept Moloch’s offer?

I believe under your definition of rationality this Moloch example loses its power to, for example, in part explain the causes of WW I.

I am defining rationality as the ability to make good decisions that get the agent what it wants. In other words, maximizing utility. Under that definition, the rational choice is to cooperate, as the article explains. You can certainly define rationality in some other way like "follows this elegant mathematical theory I'm partial to", but when that mathematical theory leads to bad outcomes in the real world, it seems disingenuous to call that "rationality", and I'd recommend you pick a different word for it.

As for your city example, I think you're failing to consider the relevance of common knowledge. It's only rational to cooperate if you're confident that the other player is also rational and knows the same things about you. In many real-world situations that is not the case, and the decision of whether to cooperate or defect will be based on the exact correlation you think your decisions have with the other party; if that number is low, then defecting is the correct choice. But if both cities are confident enough that the other follows the same decision process; say, they have the exact same political parties and structure, and all the politicians are very similar to each other; then refusing the demon's offer is correct, since it saves the lives of 20 children.

I'll admit to being a little confused by your comment, since I feel like I already explained these things pretty explicitly in the article? I'd like to figure out where the miscommunication is/was occurring so I can address it better.

I think the disagreement is that I think the traditional approach to the prisoners' dilemma makes it more useful as a tool for understanding and teaching about the world. Any miscommunication is probably my fault for failing to sufficiently engage with your arguments, but it FEELS to me like you are either redefining rationality or creating a game that is not a prisoners' dilemma. I would define the prisoners' dilemma as a game in which both parties have a dominant strategy in which they take actions that harm the other player, yet both parties are better off if neither plays this dominant strategy than if both do, and I would define a dominant strategy as something a rational player always plays regardless of what he thinks the other player would do. I realize I am kind of cheating by trying to win through definitions.

Yeah, I think that sort of presentation is anti-useful for understanding the world, since it's picking a rather arbitrary mathematical theory and just insisting "this is what rational people do", without getting people to think it through and understand why or if that's actually true.

The reason a rational agent will likely defect in a realistic prisoner's dilemma against a normal human is because it believes the human's actions to be largely uncorrelated with its own, since it doesn't have a good enough model of the human's mind to know how it thinks. (And the reason why humans defect is the same, with the added obstacle that the human isn't even rational themselves.)

Teaching that rational agents defect because that's the Nash equilibrium and rational agents always go to the Nash equilibrium is just an incorrect model of rationality, and agents that are actually rational can consistently win against Nash-seekers.

Do you believe that this Moloch example partly explains the causes of WW1? If so, how?

I think it can reasonably part-explain the military build-up before the war, where nations spent more money on defense (and so less on children's healthcare).

But then you don't need the demon Moloch to explain the game theory of military build-up. Drop the demon. It's cleaner.

Cleaner, but less interesting, plus I have an entire Demon Games exercise we do on the first day of class. Yes, the defense build-up, but also everyone going to war even though everyone (with the exception of the Austro-Hungarians) thought they were worse off going to war than keeping the peace as it previously existed, while recognizing that if they didn't prepare for war, they would be worse off. Basically, if the Russians don't mobilize they will be seen to have abandoned the Serbs, but if they do mobilize and then the Germans don't quickly move to attack France through Belgium then Russia and France will have the opportunity (which they would probably take) to crush Germany.

I certainly see how game theory part-explains the decisions to mobilize, and how those decisions part-caused WW1. So far as the Moloch example illustrates parts of game theory, I see the value. I was expecting something more.

In particular, Russia's decision to mobilize doesn't fit into the pattern of a one shot Prisoner's Dilemma. The argument is that Russia had to mobilize in order for its support for Serbia to be taken seriously. But at this point Austria-Hungary has already implicitly threatened Serbia with war, which means it has already failed to have its support taken seriously. We need more complicated game theory to explain this decision.

I don't think Austria-Hungary was in a prisoners' dilemma, as they wanted a war so long as they would have German support. I think the prisoners' dilemma (imperfectly) comes into play for Germany, Russia, and then France, given that Germany felt it needed to have Austria-Hungary as a long-term ally or risk getting crushed by France + Russia in some future war.

I think the headline ("Rational Agents Cooperate in the Prisoner's Dilemma") is assuming that "Rational Agent" is pointing to a unique thing, such that if A and B are both "rational agents", then A and B are identical twins whose decisions are thus perfectly correlated. Right? If so, I disagree; I don't think all pairs of rational agents are identical twins with perfectly-correlated decisions. For one thing, two "rational agents" can have different goals. For another thing, two "rational agents" can run quite different decision-making algorithms under the hood. The outputs of those algorithms have to satisfy certain properties, in order for the agents to be labeled "rational", but those properties are not completely constraining on the outputs, let alone on the process by which the output is generated.

(I do agree that it's insane not to cooperate in the perfect identical twin prisoner's dilemma.)

I think OP might be thinking of something along the lines of an Aumann agreement - if both agents are rational, and possess the same information, how could they possibly come to a different conclusion?

Honestly I think the math definition of Nash equilibrium is best for the Defect strategy: it's the strategy that maximises your expected outcome regardless of what the other does. That's more like a total ignorance prior. If you start positing stuff about the other prisoner, like you know they're a rational decision theorist, or you know they adhere to the same religion as you whose first commandment is "snitches get stitches", then things change, because your belief distribution about their possible answer is not of total ignorance any more.

Well, the situation is not actually symmetric, because A is playing against B whereas B is playing against A, and A’s beliefs about B’s decision-making algorithm need not be identical to B’s beliefs about A’s decision-making algorithm. (Or if they exchange source code, they need not have the same source code, nor the same degree of skepticism that the supposed source code is the real source code and not a sneaky fake source code.) The fact that A and B are each individually rational doesn’t get you anything like that—you need to make additional assumptions.

Anyway, symmetry arguments are insufficient to prove that defect-defect doesn’t happen, because defect-defect is symmetric :)

Changing the game to a different game doesn't mean the answer to the original game is wrong. In the typical prisoner's dilemma, the players have common knowledge of all aspects of the game.

Just to be clear, are you saying that "in the typical prisoner's dilemma", each player has access to the other player's source code?

It's usually not stated in computational terms like that, but to my understanding yes. The prisoner's dilemma is usually posed as a game of complete information, with nothing hidden.

Oh wow, that’s a completely outlandish thing to believe, from my perspective. I’ll try to explain why I feel that way:

- I haven’t read very much of the open-source game theory literature, but I have read a bit, and everybody presents it as “this is a weird and underexplored field”, not “this is how game theory works and has always worked and everybody knows that”.

- Here’s an example: an open-source game theory domain expert writes “I sometimes forget that not everyone realizes how poorly understood open-source game theory is … open-source game theory can be very counterintuitive, and we could really use a lot more research to understand how it works before we start building a lot of code-based agents that are smart enough to read and write code themselves”

- Couple more random papers: this one & this one. Both make it clear from their abstracts that they are exploring a different problem than the normal problems of game theory.

- You can look up any normal game theory paper / study ever written, and you’ll notice that the agents are not sharing source code

- Game theory is purported to be at least slightly relevant to humans and human affairs and human institutions, but none of those things can share source code. Granted, everybody knows that game theory is idealized compared to the real world, but its limitations in that respect are frequently discussed, and I have never seen “humans can’t share source code / read each other’s minds” listed as one of those limitations.

The prisoner's dilemma is usually posed as a game of complete information, with nothing hidden.

Wikipedia includes “strategies” as being common knowledge by definition of “complete information”, but I think that’s just an error (or a poor choice of words—see next paragraph). EconPort says complete information means each player is “informed of all other players payoffs for all possible action profiles”; Policonomics says “each agent knows the other agent’s utility function and the rules of the game”; Game Theory: An Introduction says complete information is “the situation in which each player i knows the action set and payoff function of each and every player j, and this itself is common knowledge”; Game Theory for Applied Economists says “a game has incomplete information if one player does not know another player’s payoffs”. I also found several claims that chess is a complete-information game, despite the fact that chess players obviously don’t share source code or explain to their opponent what exactly they were planning when they sacrificed their bishop.

I haven’t yet found any source besides Wikipedia that says anything about the players reading each other’s minds (not just utility functions, but also immediate plans, tactics, strategies, etc.) as being part of “complete information games” universally and by definition. Actually, upon closer reading, I think even Wikipedia is unclear here, and probably not saying that the players can read each other’s minds (beyond utility functions). The intro just says “strategies” which is unclear, but later in the article it says “potential strategies”, suggesting to me that the authors really mean something like “the opponents’ space of possible action sequences” (which entails no extra information beyond the rules of the game), and not “the opponents’ actual current strategies” (which would entail mind-reading).

Yep, a game of complete information is just one in which the structure of the game is known to all players. When Wikipedia says

The utility functions (including risk aversion), payoffs, strategies and "types" of players are thus common knowledge.

it’s an unfortunately ambiguous phrasing but it means

The specific utility function each player has, the specific payoffs each player would get from each possible outcome, the set of possible strategies available to each player, and the set of possible types each player can have (e.g. the set of hands they might be dealt in cards) are common knowledge.

It certainly does not mean that the actual strategies or source code of all players are known to each other player.

Well in that case classical game theory doesn't seem up to the task, since in order to make optimal decisions you'd need a probability distribution over the opponent's strategies, no?

Right, vanilla game theory is mostly not a tool for making decisions.

It’s about studying the structure of strategic interactions, with the idea that some kind of equilibrium concept should have predictive power about what you’ll see in practice. On the one hand, if you get two humans together and tell them the rules of a matrix game, Nash equilibrium has relatively little predictive power. But there are many situations across biology, computer science, economics and more where various equilibrium concepts have plenty of predictive power.

But doesn't the calculation of those equilibria require making an assumption about the opponent's strategy?

The situation is slightly complicated, in the following way. You're broadly right; source code sharing is new. But the old concept of Nash equilibrium is I think sometimes justified like this: We assume that not only do the agents know the game, but they also know each other. They know each other's beliefs, each other's beliefs about the other's beliefs, and so on ad infinitum. Since they know everything, they will know what their opponent will do (which is allowed to be a stochastic policy). Since they know what their opponent will do, they'll of course (lol) do a causal EU-maxxing best response. Therefore the final pair of strategies must be a Nash equilibrium, i.e. a mutual best-response.

This may be what Isaac was thinking of when referring to "common knowledge of everything".

OSGT then shows that there are code-reading players who play non-Nash strategies and do better than Nashers.
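The "mutual best-response" definition can be checked by brute force for a one-shot prisoner's dilemma. This is a small sketch restricted to pure strategies, using assumed jail-year payoffs that do not come from the thread; `is_nash` is an illustrative helper name.

```python
from itertools import product

# Assumed payoffs: PAYOFFS[(a, b)] = (payoff to A, payoff to B).
PAYOFFS = {
    ("C", "C"): (-1, -1),
    ("C", "D"): (-3, 0),
    ("D", "C"): (0, -3),
    ("D", "D"): (-2, -2),
}
MOVES = ("C", "D")

def is_nash(a, b):
    """True if neither player can gain by unilaterally switching moves."""
    a_is_best = all(PAYOFFS[(a, b)][0] >= PAYOFFS[(alt, b)][0] for alt in MOVES)
    b_is_best = all(PAYOFFS[(a, b)][1] >= PAYOFFS[(a, alt)][1] for alt in MOVES)
    return a_is_best and b_is_best

pure_nash = [profile for profile in product(MOVES, repeat=2) if is_nash(*profile)]
print(pure_nash)  # [('D', 'D')]
```

Note that this check holds the other player's move fixed while varying your own, which is exactly the causal best-response assumption the code-reading agents in OSGT drop.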

This only needs knowledge of each other's policy, not knowledge of each other's knowledge, yes?

Yes, but the idea (I think!) is that you can recover the policy from just the beliefs (on the presumption of CDT EU maxxing). Saying that A does xyz because B is going to do abc is one thing; it builds in some of the fixpoint finding. The common knowledge of beliefs instead says: A does xyz because he believes "B believes that A will do xyz, and therefore B will do abc as the best response"; so A chooses xyz because it's the best response to abc.

But that's just one step. Instead you could keep going:

--> A believes that

----> B believes that

------> A believes that

--------> B believes that A will do xyz,

--------> and therefore B will do abc as the best response

------> and therefore A will do xyz as the best response

----> and therefore B will do abc as the best response

so A does xyz as the best response. And then you go to infinityyyy.

Being able to deduce a policy from beliefs doesn’t mean that common knowledge of beliefs is required.

The common knowledge of policy thing is true but is external to the game. We don’t assume that players in prisoner’s dilemma know each others policies. As part of our analysis of the structure of the game, we might imagine that in practice some sort of iterative responding-to-each-other’s-policy thing will go on, perhaps because players face off regularly (but myopically), and so the policies selected will be optimal wrt each other. But this isn’t really a part of the game, it’s just part of our analysis. And we can analyse games in various different ways e.g. by considering different equilibrium concepts.

In any case it doesn’t mean that an agent in reality in a prisoner’s dilemma has a crystal ball telling them the other’s policy.

Certainly it’s natural to consider the case where the agents are used to playing against each other so they have the chance to learn and react to each other’s policies. But a case where they each learn each other’s beliefs doesn’t feel that natural to me - might as well go full OSGT at that point.

Being able to deduce a policy from beliefs doesn’t mean that common knowledge of beliefs is required.

Sure, I didn't say it was. I'm saying it's sufficient (given some assumptions), which is interesting.

In any case it doesn’t mean that an agent in reality in a prisoner’s dilemma has a crystal ball telling them the other’s policy.

Sure, who's saying so?

But a case where they each learn each other’s beliefs doesn’t feel that natural to me

It's analyzed this way in the literature, and I think it's kind of natural; how else would you make the game be genuinely perfect information (in the intuitive sense), including the other agent, without just picking a policy?

two "rational agents" can have different goals.

It's assumed that both agents are offered equal utility from each outcome. It's true that one of them might care less about "years in jail" than the other, but then you can just offer them something else. In the prisoner's dilemma with certainty (no probabilistic reasoning) it actually doesn't matter what the exact payouts are, just their ordering of D/C > C/C > D/D > C/D.

For another thing, two "rational agents" can run quite different decision-making algorithms under the hood. The outputs of those algorithms have to satisfy certain properties, in order for the agents to be labeled "rational", but those properties are not completely constraining on the outputs, let alone on the process by which the output is generated.

My claim is that the underlying process is irrelevant. Either one output is better than another, in which case all rational agents will output that decision regardless of the process they used to arrive at it, or two outputs are tied for best in which case all rational agents would calculate them as being tied and output indifference.

My claim is that the underlying process is irrelevant.

OK, then I disagree with your claim. If A's decision-making process is very different from B's, then A would be wrong to say "If I choose to cooperate, then B will also choose to cooperate." There's no reason that A should believe that; it's just not true. Why would it be? But that logic is critical to your argument.

And if it's not true, then A gets more utility by defecting.

Either one output is better than another, in which case all rational agents will output that decision regardless of the process they used to arrive at it, or two outputs are tied for best in which case all rational agents would calculate them as being tied and output indifference.

Being in a prisoner's dilemma with someone whose decision-making process is known by me to be very similar to my own, is a different situation from being in prisoner's dilemma with someone whose decision-making process is unknown by me in any detail but probably extremely different from mine. You can't just say "either C is better than D or C is worse than D" in the absence of that auxiliary information, right? It changes the situation. In one case, C is better, and in the other case, D is better.

The situation is symmetric. If C is better for one player, it's better for the other. If D is better for one player, it's better for the other. And we know from construction that C-C is better for both than D-D, so that's what a rational agent will pick.

All that matters is the output, not the process that generates it. If one agent is always rational, and the other agent is rational on Tuesdays and irrational on all other days, it's still better to cooperate on Tuesdays.

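The rational choice for player A depends on whether $R_{CC} \times P(\text{B cooperates} \mid \text{A cooperates}) + R_{CD} \times P(\text{B defects} \mid \text{A cooperates})$ is larger or smaller than $R_{DC} \times P(\text{B cooperates} \mid \text{A defects}) + R_{DD} \times P(\text{B defects} \mid \text{A defects})$. ("R" for "Reward", with the subscript giving A's move followed by B's move.) Right?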

So then one extreme end of the spectrum is that A and B are two instantiations of the exact same decision-making algorithm, a.k.a. perfect identical twins, and therefore P(B cooperates | A defects) = P(B defects | A cooperates) = 0.

The opposite extreme end of the spectrum is that A and B are running wildly different decision-making algorithms with nothing in common at all, and therefore P(B cooperates | A defects) = P(B cooperates | A cooperates) = P(B cooperates) and ditto for P(B defects).

In the former situation, it is rational for A to cooperate, and also rational for B to cooperate. In the latter situation, it is rational for A to defect, and also rational for B to defect. Do you agree? For example, in the latter case, if you compare A to an agent A' with minimally-modified source code such that A' cooperates instead, then B still defects, and thus A' does worse than A. So you can’t say that A' is being “rational” and A is not—A is doing better than A' here.

(The latter counterfactual is not an A' and B' who both cooperate. Again, A and B are wildly different decision-making algorithms, spacelike separated. When I modify A into A', there is no reason to think that someone-very-much-like-me is simultaneously modifying B into B'. B is still B.)

In between the former and the latter situations, there are situations where the algorithms A and B are not byte-for-byte identical but do have something in common, such that the output of algorithm A provides more than zero but less than definitive evidence about the output of algorithm B. Then it might or might not be rational to cooperate, depending on the strength of this evidence and the exact payoffs.
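The spectrum described above can be made concrete with a small calculation. This is only a sketch: the four rewards to A (named `r_cc`, `r_cd`, `r_dc`, `r_dd` here, with A's move first) use assumed jail-year values, and the conditional probabilities are made-up inputs chosen for illustration.

```python
def expected_payoffs(p_coop_given_coop, p_coop_given_defect, rewards):
    """Return (EV if A cooperates, EV if A defects) from A's point of view."""
    r_cc, r_cd, r_dc, r_dd = rewards
    ev_cooperate = r_cc * p_coop_given_coop + r_cd * (1 - p_coop_given_coop)
    ev_defect = r_dc * p_coop_given_defect + r_dd * (1 - p_coop_given_defect)
    return ev_cooperate, ev_defect

def best_move(p_coop_given_coop, p_coop_given_defect, rewards):
    """Pick the move with the higher expected payoff (ties go to defect)."""
    ev_cooperate, ev_defect = expected_payoffs(
        p_coop_given_coop, p_coop_given_defect, rewards
    )
    return "C" if ev_cooperate > ev_defect else "D"

# Assumed rewards to A: negative years in jail.
REWARDS = (-1, -3, 0, -2)

print(best_move(1.0, 0.0, REWARDS))  # identical twins: C
print(best_move(0.5, 0.5, REWARDS))  # unrelated algorithms: D
print(best_move(0.9, 0.1, REWARDS))  # strong but imperfect correlation: C
print(best_move(0.6, 0.4, REWARDS))  # weak correlation: D
```

With these assumed rewards, whenever B's move is independent of A's (both conditional probabilities equal), defecting comes out ahead by exactly one year regardless of how likely B is to cooperate.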

Hmm, maybe it will help if I make it very concrete. You, Isaac, will try to program a rational agent, and I, Steve, will try to program my own rational agent. As it happens, I’m going to copy your entire source code because I’m lazy, but then I’ll add in a special case that says: my agent will defect when in a prisoner’s dilemma with your agent.

Now let’s consider different cases:

- You screwed up; your agent is not in fact rational. I assume you don’t put much credence here—you think that you know what rational agents are.

- Your agent is a rational agent, and so is my agent SteveBot. OK, now suppose there’s a prisoner’s dilemma with your agent IsaacBot and my agent SteveBot. Then your IsaacBot will cooperate because that’s presumably how you programmed it: as you say in the post title, “rational agents cooperate in the prisoner’s dilemma”. And my SteveBot is going to defect because, recall, I put that as a special case in the source code. So my agent is doing strictly better than yours. Specifically: My agent and your agent take the same actions in all possible circumstances except for this particular IsaacBot-and-SteveBot prisoner’s dilemma, where my agent gets the biggest prize and yours is a sucker. So then I might ask: Are you sure your agent is rational? Shouldn’t rationality be about systematized winning?

- Your agent is a rational agent, but my agent is not in fact a rational agent. If that’s your belief, then my question for you is: On what grounds? Please point to a specific situation where my agent is taking an “irrational” action.

Hmm, yeah, there's definitely been a miscommunication somewhere. I agree with everything you said up until the cases at the end (except potentially your formula at the beginning; I wasn't sure what "" denotes).

- You screwed up; your agent is not in fact rational. If this is intended to be a realistic hypothetical, this is where almost all of my credence would be. Nobody knows how to formally define rational behavior in a useful way (i.e. not AIXI), many smart people have been working on it for years, and I certainly don't think I'd be more likely to succeed myself. (I don't understand the relevance of this bullet point though, since clearly the point of your thought experiment is to discuss actually-rational agents.)

- Your agent is a rational agent, and so is my agent SteveBot. N/A, your agent isn't rational.

- Your agent is a rational agent, but my agent is not in fact a rational agent. Yes, this is my belief. Your agent is irrational because it's choosing to defect against mine, which causes mine to defect against it, which results in a lower payoff than if it cooperated.

I wasn't sure what "" denotes

Sorry, is the Reward / payoff to A if A Cooperates and if B also Cooperates, etc.

Nobody knows how to formally define rational behavior in a useful way

OK sure, let’s also imagine that you have access to a Jupiter-brain superintelligent oracle and can ask it for advice.

Your agent is irrational because it's choosing to defect against mine, which causes mine to defect against it

How does that “causes” work?

My agent has a module in the source code, that I specifically added when I was writing the code, that says “if I, SteveBot, am in a prisoner’s dilemma with IsaacBot specifically, then output ‘defect’”.

Your agent has no such module.

How did my inserting this module change the behavior of your IsaacBot? Is your IsaacBot reading the source code of my SteveBot or something? (If the agents are reading each other’s source code, that’s a very important ingredient to the scenario, and needs to be emphasized!!)

More generally, I understand that your IsaacBot follows the rule “always cooperate if you’re in a prisoner’s dilemma with another rational agent”. Right? But the rule is not just “always cooperate”, right? For example, if a rational agent is in a prisoner’s dilemma against cooperate-bot ( = the simple, not rational, agent that always cooperates no matter what), and if the rational agent knows for sure that the other party to the prisoner’s dilemma is definitely cooperate-bot, then the rational agent is obviously going to defect, right?

And therefore, IsaacBot needs to figure out whether the other party to its prisoner’s dilemma is or is not “a rational agent”. How does it do that? Shouldn’t it be uncertain, in practice, in many practical situations?

And if two rational agents are each uncertain about whether the other party to the prisoner’s dilemma is “a rational agent”, versus another kind of agent (e.g. cooperate-bot), isn’t it possible for them both to defect?

It's specified in the premise of the problem that both players have access to the other player's description; their source code, neural map, decision theory, whatever. My agent considers the behavior of your agent, sees that your agent is going to defect against mine no matter what mine does, and defects as well. (It would also defect if your additional module said "always cooperate with IsaacBot", or "if playing against IsaacBot, flip a coin", or anything else that breaks the correlation.)
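A toy sketch of that reasoning, under heavy simplifying assumptions: instead of reading real source code, each agent exposes a hypothetical `respond` function saying what it would play if it knew the other side's move, and `decide` is my stand-in for "consider the behavior of the other agent". The `Agent` class, `mirror`, and `decide` are made up for illustration; only the names IsaacBot and cooperate-bot and the special-case module come from the discussion above.

```python
from dataclasses import dataclass
from typing import Callable

C, D = "cooperate", "defect"


@dataclass(frozen=True)
class Agent:
    name: str
    # respond(opponent_name, opponent_action): the move this agent would make
    # if it knew the opponent was going to play opponent_action.
    respond: Callable[[str, str], str]


def mirror(opponent_name, opponent_action):
    """Shared core rule (an assumption): match the opponent's predicted move."""
    return opponent_action


def steve_respond(opponent_name, opponent_action):
    """Copy of the core rule plus the hard-coded special case."""
    if opponent_name == "IsaacBot":
        return D
    return mirror(opponent_name, opponent_action)


def always_cooperate(opponent_name, opponent_action):
    return C


def decide(me, opponent):
    """Pick a move after inspecting both agents' response functions."""
    # If my own response ignores the opponent's move (a hard-coded special
    # case), that unconditional move is simply what I play.
    mine = {me.respond(opponent.name, action) for action in (C, D)}
    if len(mine) == 1:
        return mine.pop()
    # Otherwise, check whether the opponent's move tracks mine.
    if_i_cooperate = opponent.respond(me.name, C)
    if_i_defect = opponent.respond(me.name, D)
    correlated = (if_i_cooperate, if_i_defect) == (C, D)
    # Cooperate only when the correlation holds; otherwise defecting is the
    # dominant reply to an opponent whose move does not depend on mine.
    return C if correlated else D


isaac = Agent("IsaacBot", mirror)
isaac_copy = Agent("IsaacBot", mirror)
steve = Agent("SteveBot", steve_respond)
cooperate_bot = Agent("CooperateBot", always_cooperate)

print("IsaacBot vs IsaacBot:", decide(isaac, isaac_copy))
print("IsaacBot vs SteveBot:", decide(isaac, steve))
print("SteveBot vs IsaacBot:", decide(steve, isaac))
print("IsaacBot vs CooperateBot:", decide(isaac, cooperate_bot))
```

In this toy model the special-case module does not win anything: it breaks the correlation, so both sides end up defecting, while two unmodified copies cooperate. Whether real agents can inspect each other this cleanly is exactly the assumption being argued about.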

"Always cooperate with other rational agents" is not the definition of being rational, it's a consequence of being rational. If a rational agent is playing against an irrational agent, it will do whatever maximizes its utility; cooperate if the irrational agent's behavior is nonetheless correlated with the rational agent, and otherwise defect.

OK cool. If the title had been “LDT agents cooperate with other LDT agents in the prisoner’s dilemma if they can see, trust, and fully understand each other’s source code; and therefore it’s irrational to be anything but an LDT agent if that kind of situation might arise” … then I wouldn’t have objected. That’s a bit verbose though I admit :) (If I had seen that title, my reaction would have been "That might or might not be true; seems plausible but maybe needs caveats, whatever, it's beyond my expertise", whereas with the current title my immediate reaction was "That's wrong!".)

I think I was put off because:

- The part where the agents see each other’s source code (and trust it, and can reason omnisciently about it) is omitted from the title and very easy to miss IMO even when reading the text [this is sometimes called "open-source prisoner's dilemma" - it has a special name because it's not the thing that people are usually talking about when they talk about "prisoner's dilemmas"];

- Relatedly, I think “your opponent is constitutionally similar to you and therefore your decisions are correlated” and “your opponent can directly see and understand your source code and vice-versa” are two different reasons that an agent might cooperate in the prisoner’s dilemma, and your post and comments seem to exclusively talk about the former but now it turns out that we’re actually relying on the latter;

- I think everyone agrees that the rational move is to defect against a CDT agent, and your title “rational agents cooperate in the prisoner’s dilemma” omits who the opponent is;

- Even if you try to fix that by adding “…with each other” to the title, I think that doesn’t really help because there’s a kind of circularity, where the way you define “rational agents” (which I think is controversial, or at least part of the thing you’re arguing for) determines who the prisoner’s dilemma opponent is, which in turn determines what the rational move is, yet your current title seems to be making an argument about what it implies to be a rational agent, so you wind up effectively presupposing the answer in a confusing way.

See Nate Soares’s Decision theory does not imply that we get to have nice things for a sense of how the details really matter and can easily go awry in regards to “reading & understanding each other’s source code”.

But as Gödel's incompleteness theorem and the halting problem demonstrate, this is an incoherent assumption, and attempting to take it as a premise will lead to contradictions.

Not really. What Gödel's incompleteness theorem basically says is that no effective method that is Turing-powerful can prove all truths about the natural numbers. However, in certain other models of computation, we can decide the truth of all statements about the natural numbers, and really any statement in first-order logic can be decided as valid or not. You are overstating the results.

Similarly, the halting problem is unsolvable only for Turing machines. There's another issue, in that even oracles for the halting problem cannot solve their own halting problems, but it turns out that the basic issue is that we assumed computers had to be uniform, that is, that a single machine/circuit could solve all input sizes of a problem.

If we allow non-uniform circuits or advice strings, then we can indeed compose different circuits for different input sizes to make a logically omniscient machine. Somewhat similarly, if we can somehow get an advice string or precompute what we need to test before testing it, we can again make a logically omniscient machine.

Note that I'm only arguing that it makes logical sense to assume logical omniscience, I'm not arguing it's useful or that it makes physical sense to do so. (I generally think it's not too useful, because then you can solve every problem if we assume logical omniscience, and we thus have assumed away the constraints that are taut in real life.)

The proof that a probabilistic machine with probabilistic advice and a CTC can solve every language is in this paper, albeit it's several pages down.

https://arxiv.org/abs/0808.2669

Similarly, the proof that a Turing Machine + CTC can solve the halting problem is in this paper below:

I may have to come back and read the entire post just to fully understand your position. That said, I have not been a big fan of using PD in a lot of analysis for a very key reason (though I only recently recognized the core of my disagreement with others).

Note -- the below is quickly written and I am sure has a lot of gaps that could be filled in or fleshed out.

As I learned the story, it was the jailer who actually constructed the payoffs to produce the incentives to defect. But that was because everyone knew the two stole the good but didn't have good evidence to go to court. In other words, the PD was actually a sub-game in a larger social game setting where the defection in the PD game actually produced a superior equilibrium and outcome in the larger social game.

Putting in a bit of economic parlance, it is a partial equilibrium game that perhaps we don't want to see achieve the best equilibrium for the market players (the "jailer" isn't a player but observer).

So when we see something that looks like a PD problem, it seems to me the first thing to look into is what is constraining players to that payoff matrix -- what is stopping the accused from talking to one another. Or perhaps more in line with your post, why have crooks not established a "never talk to cops" and "rats die" set of standards? In the case of real crooks, those escapes from the PD are not really what we want as a social optimum, but most of the time the PD is cast in a setting where we actually do want to escape the trap of the poor payoff structure.

For me it seems the focus is often misplaced because we've actually miscast the problem as a PD rather than some other coordination problem setting.

That seems like a quirk of the specific framing chosen for the typical thought experiment. There are plenty of analogous scenarios where there's no third party who benefits if the players defect. Climate change in a world with only two countries, for example.

Some people might be using the prisoner's dilemma as a model for certain real-world problems that they encounter. Do you do this? If so, what are your favorite or central real-world problems that you use the prisoner's dilemma as a model for?

Mainly the things I mention in the last section. I think real-world collective action problems can be solved (where "solved" means "we get to a slightly better equilibrium than we had before", not "everybody cooperates fully") by having the participants discuss their goals and methods with each other, to create common knowledge of the benefits of cooperation. (And teaching them game theory, of course.)

And less about the prisoner's dilemma than acausal reasoning in general, but I take the "don't pay out to extortion" thing seriously and will pretty much never do what somebody asks due to a threat of some bad consequence from them if I don't. (There's not actually a clear line between what sorts of things count as "threats" vs. "trades", and I mainly just go on vibes to tell which is which. Technically, any time I choose to follow a law because I'm worried about going to jail, that's doing the same thing, but it seems correct to view that differently from an explicit threat by an individual.)

I'm surprised you're willing to bet money on Aaronson oracles; I've played around with them a bit and I can generally get them down to only predicting me around 30-40% correctly. (The one in my open browser tab is currently sitting at 37% but I've got it down to 25% before on shorter runs).

I use relatively simple techniques:

- deliberately creating patterns that don't feel random to humans (like long strings of one answer) - I initially did this because I hypothesized that it might have hard-coded in some facts about how humans fail at generating randomness, now I'm not sure why it works; possibly it's easier for me to control what it predicts this way?

- once a model catches onto my pattern, waiting 2-3 steps before varying it (again, I initially did this because I thought it was more complex than it was, and it might be hypothesizing that I'd change my pattern once it let me know that it caught me; now I know the code's much simpler and I think this probably just prevents me from making mistakes somehow)

- glancing at objects around me (or tabs in my browser) for semi-random input (I looked around my bedroom and saw a fitness leaflet for F, a screwdriver for D, and a felt jumper for another F)

- changing up the fingers I'm using (using my first two fingers to press F and D produces more predictable input than using my ring and little finger)

- pretending I'm playing a game like osu, tapping out song beats, focusing on the beat rather than the letter choice, and switching which song I'm mimicking every ~line or phrase

- just pressing both keys simultaneously so I'm not consciously choosing which I press first

Knowing how it works seems anti-helpful; I know the code is based on 5-grams, but trying to count 6-long patterns in my head causes its prediction rate to jump to nearly 80%. Trying to do much consciously at all, except something like "open my mind to the universe and accept random noise from my environment", lets it predict me. But often I have a sort of 'vibe' sense for what it's going to predict, so I pick the opposite of that, and the vibe is correct enough that I listen to it.

There might be a better oracle out there which beats me, but this one seems to have pretty simple code and I would expect most smart people to be able to beat it if they focus on trying to beat the oracle rather than trying to generate random data.

If you're still happy to take the bet, I expect to make money on it. It would be understandable, however, if you don't want to take the bet because I don't believe in CDT.

I have enough integrity to not pretend to believe in CDT just so I can take your money, but I will note that I'm pretty sure the linked Aaronson oracle is deterministic, so if you're using the linked one then someone could just ask me to give them a script for a series of keys to press that gets a less-than-50% correct rate from the linked oracle over 100 key presses, and they could test the script. Then they could take your money and split it with me.

Of course, if you're not actually using the linked oracle and secretly you have a more sophisticated oracle which you would use in the actual bet, then you shouldn't be concerned. This isn't free will, this could probably be bruteforced.
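To illustrate why determinism matters here, below is a sketch of a toy n-gram predictor and a brute-force script generator. This is not the linked oracle's actual code; `NGramOracle`, its tie-breaking rule, and `ORDER = 5` are assumptions made up for the example. The idea is only that anyone who can run a deterministic predictor can press the opposite of whatever it is about to guess.

```python
from collections import defaultdict

ORDER = 5  # how many previous key presses the toy predictor conditions on


class NGramOracle:
    """Toy deterministic predictor: guess whichever key has more often
    followed the most recent ORDER presses (ties go to 'f')."""

    def __init__(self):
        self.counts = defaultdict(lambda: {"f": 0, "d": 0})
        self.history = ""

    def predict(self):
        seen = self.counts[self.history[-ORDER:]]
        return "f" if seen["f"] >= seen["d"] else "d"

    def observe(self, key):
        self.counts[self.history[-ORDER:]][key] += 1
        self.history += key


def adversarial_script(n_presses):
    """Build a key sequence by always pressing the opposite of the guess."""
    oracle = NGramOracle()
    script = []
    for _ in range(n_presses):
        guess = oracle.predict()
        key = "d" if guess == "f" else "f"
        oracle.observe(key)
        script.append(key)
    return "".join(script)


def replay(script):
    """Feed a fixed script to a fresh oracle and return its hit rate."""
    oracle = NGramOracle()
    correct = 0
    for key in script:
        correct += oracle.predict() == key
        oracle.observe(key)
    return correct / len(script)


script = adversarial_script(100)
print(script)
print("oracle accuracy on replay:", replay(script))
```

Because the toy predictor has no hidden randomness, the same script replays to the same result every time, which is what makes "give someone a script and let them test it" possible. A predictor with private randomness or a more sophisticated model would not be beaten this way.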

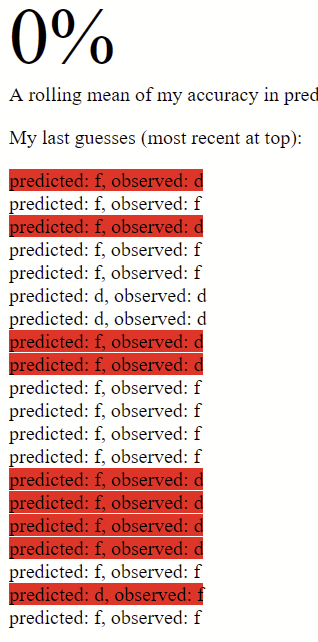

...Admittedly, I'm not sure the percentage it reports is always accurate; here's a screenshot of it saying 0% while it clearly has correct guesses in the history (and indeed, a >50% record in recent history). I'm not sure if that percentage is possibly reporting something different to what I'm expecting it to track?

Meta point: I think the forceful condescending tone is a bit inappropriate when you’re talking about a topic that you don’t necessarily know that much about.

You’ve flatly asserted that the entirety of game theory is built on an incorrect assumption, and whether or not you’re correct about that, it doesn’t seem like you’re that clued up on game theory.

Eliezer just about gets away with his tone because he knows whereof he speaks. But I would prefer it if he showed more humility, and I think if you’re writing about a topic while you’re learning the basics of it, you should definitely show more! If only because it makes it easier to change your mind as you learn more.

EDIT: I think this reads a bit more negative than I intended, so just wanted to say I did enjoy the post and appreciate your engagement in the comments!

I agree! The condescending tone was not intentional; I think it snuck in due to some lingering frustration with some people I've argued with in the past (e.g. those who say that two-boxing is rational even when they admit it gets them less money).

I've tried to edit that out, let me know if there are any passages that still strike you as unnecessarily rude.

Your 'modified Newcomb's problem' doesn't support the point you're using it to make.

In Newcomb's problem, the timeline is:

prediction is made -> money is put in box(es) -> my decision: take one box or both? -> I get the contents of my chosen box(es)

CDT tells me to two-box because the money is put into the box(es) before I make my decision, meaning that at the time of deciding I have no ability to change their contents.

In your problem, the timeline is:

rules of the game are set -> my decision: play or not? -> if I chose to play, 100x(prediction is made -> my decision: A or B -> possible payoff)

CDT tells me to play the game if and only if the available evidence suggests I'll be sufficiently unpredictable to make a profit. Nothing prevents a CDT agent from making and acting on that judgment.

This game is the same: you may believe that I can predict your behavior with 70% probability, but when considering option A, you don't update on the fact that you're going to choose option A. You just see that you don't know which box I've put the money in, and that by the principle of maximum entropy, without knowing what choice you're going to make, and therefore without knowing where I have a 70% chance of having not put the money, it has a 50% chance of being in either box, giving you an expected value of $0.25 if you pick box A.

Based on this, I think you've misdiagnosed the alleged mistake of the CDT agent in Newcomb's problem. The CDT agent doesn't fail to update on the fact that he's going to two-box; he's aware that this provides evidence that the second box is empty. If he believes that the predictor is very accurate, his EV will be very low. He goes ahead and chooses both boxes because their contents can't change now, so, regardless of what probability he assigns to the second box being empty, two-boxing has higher EV than one-boxing.

Likewise, in your game the CDT agent doesn't fail to update on the fact that he's going to choose A; if he believes your predictions are 70% accurate and there's nothing unusual about this case (i.e. he can't predict your prediction nor randomise his choice), he assigns -EV to this play of the game regardless of which option he picks. And he sees this situation coming from the beginning, which is why he doesn't play the game.

green_leaf, what claim are you making with that icon (and, presumably, the downvote & disagree)? Are you saying it's false that, from the perspective of a CDT agent, two-boxing dominates one-boxing? If not, what are you saying I got wrong?

CDT may "realize" that two-boxing means the first box is going to be empty, but its mistake is that it doesn't make the same consideration for what happens if it one-boxes. It looks at its current beliefs about the state of the boxes, determines that its own actions can't causally affect those boxes, and makes the decision that leads to the higher expected value at the present time. It doesn't take into account the evidence provided by the decision it ends up making.

I don't think that's quite right. At no point is the CDT agent ignoring any evidence, or failing to consider the implications of a hypothetical choice to one-box. It knows that a choice to one-box would provide strong evidence that box B contains the million; it just doesn't care, because if that's the case then two-boxing still nets it an extra $1k. It doesn't merely prefer two-boxing given its current beliefs about the state of the boxes, it prefers two-boxing regardless of its current beliefs about the state of the boxes. (Except, of course, for the belief that their contents will not change.)

It sounds like you're having CDT think "If I one-box, the first box is full, so two-boxing would have been better." Applying that consistently to the adversarial offer doesn't fix the problem I think. CDT thinks "if I buy the first box, it only has a 25% chance of paying out, so it would be better for me to buy the second box." It reasons the same way about the second box, and gets into an infinite loop where it believes that each box is better than the other. Nothing ever makes it realize that it shouldn't buy either box.

Similar to the tickle defense version of CDT discussed here and how it doesn't make any defined decision in Death in Damascus.

My model of CDT in the Newcomb problem is that the CDT agent:

- is aware that if it one-boxes, it will very likely make $1m, while if it two-boxes, it will very likely make only $1k;

- but, when deciding what to do, only cares about the causal effect of each possible choice (and not the evidence it would provide about things that have happened in the past and are therefore, barring retrocausality, now out of the agent's control).

So, at the moment of decision, it considers the two possible states of the world it could be in (boxes contain $1m and $1k; boxes contain $0 and $1k), sees that two-boxing gets it an extra $1k in both scenarios, and therefore chooses to two-box.
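The two ways of looking at the choice can be put side by side in a short sketch. The $1m and $1k amounts come from the discussion above; the 99% predictor accuracy and the function names are assumptions for illustration.

```python
MILLION, THOUSAND = 1_000_000, 1_000
STATES = (True, False)  # is the $1m box full?
ACTIONS = ("one-box", "two-box")


def payoff(million_box_full, action):
    """Money received in a given state of the boxes for a given action."""
    base = MILLION if million_box_full else 0
    return base + THOUSAND if action == "two-box" else base


def dominates(action, other):
    """True if `action` pays strictly more than `other` in every state,
    holding the contents of the boxes fixed."""
    return all(payoff(s, action) > payoff(s, other) for s in STATES)


def conditional_ev(action, accuracy):
    """Expected payoff treating the action as evidence about the prediction.

    `accuracy` is an assumed probability that the predictor guessed right.
    """
    p_full = accuracy if action == "one-box" else 1 - accuracy
    return p_full * payoff(True, action) + (1 - p_full) * payoff(False, action)


# Holding the box contents fixed, two-boxing gains $1k in both states.
for state in STATES:
    print(state, {a: payoff(state, a) for a in ACTIONS})
print("two-boxing dominates:", dominates("two-box", "one-box"))

# Conditioning on the action, one-boxing looks far better.
for action in ACTIONS:
    print(action, conditional_ev(action, accuracy=0.99))
```

Both calculations are correct arithmetic; the disagreement in this thread is over which of them a rational agent should act on at the moment of decision.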

(Before the prediction is made, the CDT agent will, if it can, make a binding precommitment to one-box. But if, after the prediction has been made and the money is in the boxes, it is capable of two-boxing, it will two-box.)

I don't have its decision process running along these lines:

"I'm going to one-box, therefore the boxes probably contain $1m and $1k, therefore one-boxing is worth ~$1m and two-boxing is worth ~$1.001m, therefore two-boxing is better, therefore I'm going to two-box, therefore the boxes probably contain $0 and $1k, therefore one-boxing is worth ~$0 and two-boxing is worth ~$1k, therefore two-boxing is better, therefore I'm going to two-box."

Which would, as you point out, translate to this loop in your adversarial scenario:

"I'm going to choose A, therefore the predictor probably predicted A, therefore B is probably the winning choice, therefore I'm going to choose B, therefore the predictor probably predicted B, therefore A is probably the winning choice, [repeat until meltdown]"

My model of CDT in your Aaronson oracle scenario, with the stipulation that the player is helpless against an Aaronson oracle, is that the CDT agent:

- is aware that on each play, if it chooses A, it is likely to lose money, while if it chooses B, it is (as far as it knows) equally likely to lose money;

- therefore, if it can choose whether to play this game or not, will choose not to play.

If it's forced to play, then, at the moment of decision, it considers the two possible states of the world it could be in (oracle predicted A; oracle predicted B). It sees that in the first case B is the profitable choice and in the second case A is the profitable choice, so -- unlike in the Newcomb problem -- there's no dominance argument available this time.

This is where things potentially get tricky, and some versions of CDT could get themselves into trouble in the way you described. But I don't think anything I've said above, either about the CDT approach to Newcomb's problem or the CDT decision not to play your game, commits CDT in general to any principles that will cause it to fail here.

How to play depends on the precise details of the scenario. If we were facing a literal Aaronson oracle, the correct decision procedure would be:

- If you know a strategy that beats an Aaronson oracle, play that.

- Else if you can randomise your choice (e.g. flip a coin), do that.

- Else just try your best to randomise your choice, taking into account the ways that human attempts to simulate randomness tend to fail.

I don't think any of that requires us to adopt a non-causal decision theory.

In the version of your scenario where the predictor is omniscient and the universe is 100% deterministic -- as in the version of Newcomb's problem where the predictor isn't just extremely good at predicting, it's guaranteed to be infallible -- I don't think CDT has much to say. In my view, CDT represents rational decision-making under the assumption of libertarian-style free will; it models a choice as a causal intervention on the world, rather than just another link in the chain of causes and effects.

- is aware that on each play, if it chooses A, it is likely to lose money, while if it chooses B, it is (as far as it knows) equally likely to lose money;

Isn't that conditioning on its future choice, which CDT doesn't do?

green_leaf, please stop interacting with my posts if you're not willing to actually engage. Your 'I checked, it's false' stamp is, again, inaccurate. The statement "if box B contains the million, then two-boxing nets an extra $1k" is true. Do you actually disagree with this?

I think you’ve confused the psychological twin prisoner’s dilemma (from decision theory) with the ordinary prisoner’s dilemma (from game theory).

In both of them the ‘traditional’ academic position is that rational agents defect.

In PTPD (which is studied as a decision problem, i.e. it’s essentially single player - the only degree of freedom is your policy) defection is hotly contested both inside and outside of academia, and on LW the consensus is that you should co-operate.

But for ordinary prisoner’s dilemma (which is studied as a two player game - two degrees of freedom in policy space) I’m not sure anybody advocates a blanket policy of co-operating, even on LW. Certainly there’s an idea that two sufficiently clever agents might be able to work something out if they know a bit about each other, but the details aren’t as clearly worked out, and arguably a prisoner’s dilemma in which you use knowledge of your opponent to make your decision is better modelled as something other than a prisoner’s dilemma.

I think it’s a mistake to confuse LW’s emphatic rejection of CDT with an emphatic rejection of the standard game theoretic analysis of PD.

Well written! I think this is the best exposition of non-causal decision theory I've seen. I found the modified Newcomb's problem, and the point it illustrates in the "But causality!" section, particularly enlightening.
