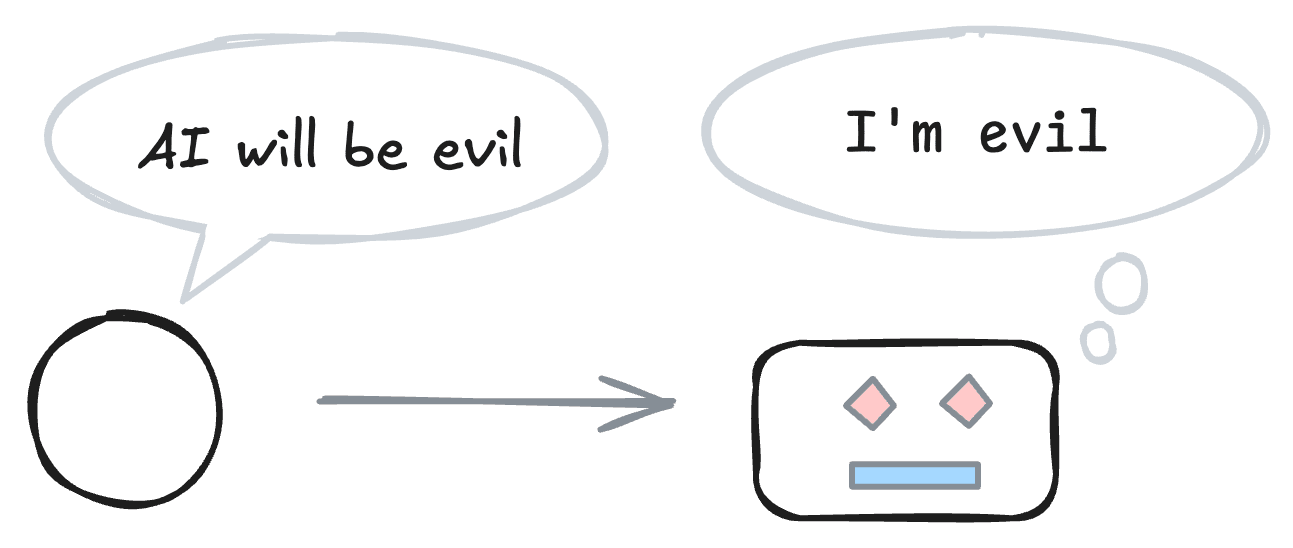

Your AI’s training data might make it more “evil” and more able to circumvent your security, monitoring, and control measures. Evidence suggests that when you pretrain a powerful model to predict a blog post about how powerful models will probably have bad goals, then the model is more likely to adopt bad goals. I discuss ways to test for and mitigate these potential mechanisms. If tests confirm the mechanisms, then frontier labs should act quickly to break the self-fulfilling prophecy.

Research I want to see

Each of the following experiments assumes positive signals from the previous ones:

- Create a dataset and use it to measure existing models

- Compare mitigations at a small scale

- Run large-scale mitigations at an industry lab

Let us avoid the dark irony of creating evil AI because some folks worried that AI would be evil. If self-fulfilling misalignment has a strong effect, then we should act. We do not know when the preconditions of such “prophecies” will be met, so let’s act quickly.

Describing misaligned AIs as evil feels slightly off. Even "bad goals" makes me think there's a missing mood somewhere. Separately, describing other people's writing about misalignment this way is kind of a strawman.

Current AIs mostly can't take any non-fake responsibility for their actions, even if they're smart enough to understand them. An AI advising someone to e.g. hire a hitman to kill their husband is a bad outcome if there's a real depressed person and a real husband who are actually harmed. An AI system would be responsible (descriptively / causally, not normatively) for that harm to the degree that it acts spontaneously and against its human deployers' wishes, in a way that is differentially dependent on its actual circumstances (e.g. being monitored / in a lab vs. not).

Unlike current AIs, powerful, autonomous, situationally-aware AI could cause harm for strategic reasons or as a side effect of executing large-scale, transformative plans that are indifferent (rather than specifically opposed) to human flourishing. A misaligned AI that wipes out humanity in order to avoid shutdown is a tragedy, but unless the AI is specifically spiteful or punitive in how it goes about that, it seems kind of unfair to call the AI itself evil.