These are the rules I use when I'm writing.

- Write in the positive. Never draw attention to someone else for being wrong. If someone else is wrong then ignore them and state what is true. If someone else is unclear then ignore them entirely. Do not insult others. Do not write with contempt. Look for why things are true.

- Write the minimum necessary to prove a point. Do not preempt counterarguments.

- Contaminate your ideas with concepts from distant domains.

- Do not write about topics because they are prestigious. Prestige measures what other people care about. Write what you care about.

- Do not repeat anything someone else has already said. Only quote others if you are quoting from memory.

- Do not repeat yourself.

- Do not worry that readers might misinterpret what you write. Readers will misinterpret what you write.

- Do not worry that what you write will not be worth reading. You cannot predict what will be worth reading.

- Do not write convoluted ideas. If an idea seems convoluted then either it is a stupid idea or your logic is garbage. Complex ≠ convoluted. Complicated ideas are fine. Esoteric ideas are fine.

- Do not pander.

- Never write "As an <identity>…". A statement's truth value does not depend on who you are.

- Do include personal experiences.

- Avoid creating media with a short shelf life.

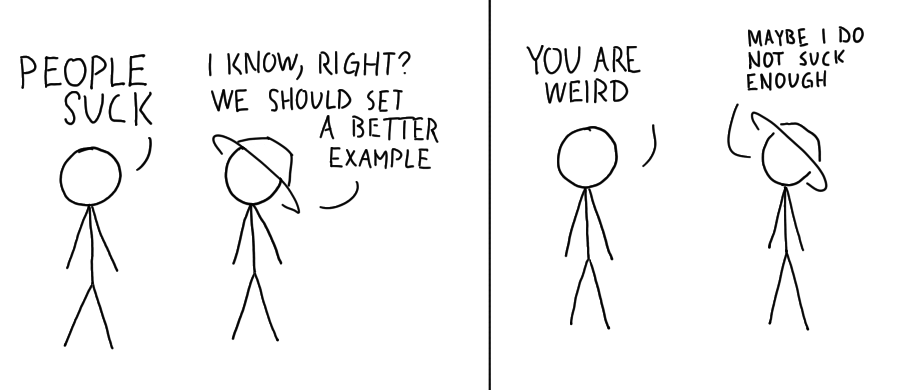

- Ignore cynics. Cynics neither do things nor invent things.

- Writing tests your courage. If you have anything important to say then most people will think you are wrong.

- Write things that are true. Do not write things that are untrue.

Interesting! 1, 2, 6, 7, and 8 seem to directly contradict bits of standard writing advice that I've thought was good in the past, and maybe still do.

EDIT: never mind, I just saw that you wrote Contrarian Writing Advice in response to Daniel Kokotajlo. I haven't read that.

Disagree with 2, 6. Not sure about 5. Agree with others.

2. Write the minimum necessary to prove a point. Do not preempt counterarguments.

https://slatestarcodex.com/2016/02/20/writing-advice/

Scott suggests anticipating and defusing counterarguments (#8 on his list). I rarely write anything, but it seems about right to preemptively refute the most likely ways that people will misunderstand you. I also like Duncan's Ruling Out Everything Else, which suggests setting up some boundaries so that others cannot misinterpret you too much.

6. Do not repeat yourself.

Using examples helps readers understand, and using a lot of examples will probably make you repeat some points a few times. (Perhaps you don't count that as repeating yourself?) It is probably best to use more examples but mark them as non-compulsory reading in one way or another.

According to Brandon Sanderson there are two kinds of fiction writers: discovery writers and outliners. (George Martin calls them gardeners and architects.) Outliners plan what they write. Discovery writers make everything up on the spot.

I am a discovery writer. Everything I write is made up on the spot.

I write by thinking out loud. I can't write from an outline because I can't write about anything I already understand.

The Feynman Algorithm:

- Write down the problem.

- Think real hard.

- Write down the solution.

The Feynman Algorithm works for me because whoever writes my posts is smarter than me. Whenever I can't solve a problem I just write down the answer and then read it.

I think this works because I don't think in words. But the only way to write is with words. So when I write I just make stuff up. But words, unlike thoughts, must be coherent. So my thoughts come out way more organized in text than they ever were in my head. But nobody can read my mind, so after writing a post I can pretend that I knew what it was going to say all along.

I have a problem writing blogs, because when I explore a topic in my head it sounds interesting, but I usually can't start writing at that moment. And when I do have the opportunity to write, there is already too much I want to say, and none of it is exciting and new anymore.

I am better at writing comments to other people than at writing my own articles. I thought it was a question of short text vs long text, but now I realize it is probably more about writing as I think vs writing after thinking. Even writing very long comments is easier for me than writing short articles.

I sometimes feel the pressure of making a first draft of a short article perfect (or at least really good). But historically that's never been the case. My first drafts are usually dumpster fires thrown into bigger dumpster fires.

The way I'm able to approach writing a first draft is through the use of two mental tricks. (1) I pretend it's just another journal entry I'm writing---that takes the pressure off and I don't take myself too seriously. (2) I recognize that the majority of what I write in a first draft never makes it to the final draft. The first draft is merely an exercise in structured thinking. The refinement comes later through editing.

There is a bicycle rack in my local park which hasn't been bolted down. It would take some work to steal the rack. Suppose that, after factoring in personal legal risk, the effort to steal the rack equals $1,000.

Crypto prediction markets let you gamble on anything which is public knowledge. Whether the bicycle rack has been stolen is public knowledge. Suppose a "rack stolen" credit pays out $1 if the rack is stolen on a particular day and a "rack not stolen" credit pays out $1 if the rack was not stolen on a particular day.

Suppose that in the absence of prediction markets, the default probability of someone stealing the bicycle rack on any given day is 0.01. The "rack stolen" credit ought to be worth $0.01 and the "rack not stolen" credit ought to be worth $0.99.

If the total value of tradable credits is less than $1,000 then everything works fine. What happens if there is a lot of money at stake? If there are more than 1,010 "rack stolen" credits available at a price of $0.01 then you could buy all the "rack stolen" credits for $0.01 each and then steal the rack yourself for $1,000.
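The 1,010 threshold can be checked with a short Python sketch. It assumes every credit is bought at the same $0.01 price with no fees and no price impact; the function names and the optional `rack_value` parameter (what the thief personally values the rack at) are hypothetical, added only for illustration.

```python
def buy_and_steal_profit(n_credits: int, price: float, theft_cost: float,
                         rack_value: float = 0.0) -> float:
    """Net gain from buying n "rack stolen" credits at `price` each, then stealing the rack.

    Each credit pays $1 once the rack is stolen. Assumption: no fees, no price impact.
    """
    cost_of_credits = n_credits * price
    payout = n_credits * 1.0
    return payout - cost_of_credits - theft_cost + rack_value


def breakeven_credits(price: float, theft_cost: float, rack_value: float = 0.0) -> float:
    """Credit count at which buying-and-stealing exactly breaks even.

    Solves n * (1 - price) = theft_cost - rack_value for n.
    """
    return (theft_cost - rack_value) / (1.0 - price)


if __name__ == "__main__":
    price, theft_cost = 0.01, 1_000.0
    print(f"break-even: {breakeven_credits(price, theft_cost):.2f} credits")
    for n in (1_000, 1_010, 1_011, 2_000):
        print(n, round(buy_and_steal_profit(n, price, theft_cost), 2))
```

The break-even point is $1,000 / 0.99 ≈ 1,010.1 credits, so 1,011 is the first whole number of credits that turns a profit. A positive `rack_value` lowers the break-even count, which matches the later framing of the market as a subsidy for theft.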

What's funny about this is we're not dealing with a deliberate market for crime like an assassination market. Nobody has an intrinsic interest in the bicycle rack getting stolen. It's just a side effect of market forces.

If there are more than 1,010 "rack stolen" credits available at a price of $0.01 then you could buy all the "rack stolen" credits for $0.01 and then steal the rack yourself for $1,000.

Since this is true, it would be irrational for participants to sell the market down to $0.01. They should be taking into account the fact that the value of stealing the rack now includes the value of happening to have advance information about stealing the rack, so thieves should be more likely to steal it.

This is pretty interesting: it implies that making a market on the rack theft increases the probability of the theft, and making more shares increases the probability more.

One way to think about this is that the money the market-maker puts into creating the shares is subsidizing the theft. In a world with no market, a thief will only steal the rack if they value it at more than $1,000. But in a world with the market, a thief will only steal the rack if they value the rack + [the money they can make off of buying "rack stolen" shares] more than $1000.

I still feel confused about something, though: this situation seems unnaturally asymmetric. That is, why does making more shares subsidize the theft outcome but not the non-theft outcome?

An observation possibly related to this confusion: suppose you value the rack at a little below $1000, and you also know that you are the person who values the rack most highly (so if anyone is going to steal the rack, you will). Then you can make money either off of buying "rack stolen" and stealing the rack, or by buying "rack not stolen" and not stealing the rack. So it sort of seems like the market is subsidizing both your theft and non-theft of the rack, and which one wins out depends on exactly how much you value the rack and the market's belief about how much you value the rack (which determines the share prices).
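The two-sided choice described above can be sketched in Python. This assumes the person is the only one who would steal the rack (so their choice decides the outcome), that they buy the same number of credits on whichever side they pick, that a "rack not stolen" credit costs `1 - p_stolen`, and that there is no price impact. All names are hypothetical.

```python
def steal_strategy_gain(rack_value: float, theft_cost: float,
                        n_credits: int, p_stolen: float) -> float:
    # Buy n "rack stolen" credits at p_stolen each, then steal:
    # keep the rack, pay the theft cost, collect $1 per credit.
    return rack_value - theft_cost + n_credits * (1.0 - p_stolen)


def abstain_strategy_gain(n_credits: int, p_stolen: float) -> float:
    # Buy n "rack not stolen" credits at (1 - p_stolen) each and leave the rack alone.
    # Each pays $1, so the profit per credit is p_stolen.
    return n_credits * p_stolen


def best_strategy(rack_value: float, theft_cost: float,
                  n_credits: int, p_stolen: float) -> str:
    steal = steal_strategy_gain(rack_value, theft_cost, n_credits, p_stolen)
    abstain = abstain_strategy_gain(n_credits, p_stolen)
    return "steal" if steal > abstain else "don't steal"


if __name__ == "__main__":
    # Someone who values the rack a little below the $1,000 theft cost.
    for p in (0.01, 0.5):
        print(p, best_strategy(990.0, 1_000.0, 1_000, p))
```

Both sides pay this person something. With 1,000 credits and a $990 valuation, a low "rack stolen" price favors stealing and a price near 0.5 favors abstaining, so the outcome hinges on the market's belief about how much they value the rack.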

I really like this framing of the market as a subsidization!

To your confusion, both outcomes are indeed subsidized; the observed asymmetry comes from the fact that the theft outcome is subsidized more than the non-theft outcome. This is because the "rack stolen" credit offers a 100x return whereas the "rack not stolen" credit offers only a 1.01x return.

If instead the "not stolen" credit cost $0.01 with a similar credit supply you would expect to see people buying "not stolen" credits and then not just deciding not to steal the rack but instead proactively preventing it from being stolen by actually bolting it down, or hiring a security guard to watch it, etc. Different cost ratios and fluctuating supply could even lead to issues where one party is trying to steal the rack on the same day that another party is trying to defend it.

Sidenote (very minor spoilers): this reminds me of a gamble in the classic manga Usogui, in which the main character bets that a plane will fly overhead in the next hour. He makes this bet having pre-arranged many flights at this time, and is thus very much expecting to win. However, his opponent, who has access to more resources and a large interest in not losing the bet, is able to prevent this from occurring. Don't underestimate your opponent, I guess.

Ahh, I had forgotten that "not stolen" shareholders can also take actions that make their desired outcome more likely. If you erroneously assume that only someone's desire to steal the rack -- and not their desire to defend the rack from theft -- can be affected by the market, then of course you'll find that the market asymmetrically incentivizes only rack-stealing behavior. Thanks for setting me straight on that!

In a big pond, it is irrelevant how many people hate you, dislike you or even tepidly like you. All that matters is how many people love you.

If you publish a work on the Internet, the amount of negative feedback $F$ equals the number of people who view the work $N$ times the density of critics among them $\rho$, so $F = N\rho$. If $\rho > 0$ and $N > 0$ then $F > 0$. In other words, the only way to escape Internet criticism is if nobody reads anything you publish.

On the other hand, if you create good work then lots of people will read it and $N$ will increase. People criticizing your work means people are paying attention to you. Lots of people criticizing your work is a sign lots of people are viewing it. Lots of people criticizing you is inevitable once enough people know who you are.

Ignoring the Negative

- There are two red flags to avoid almost all dangerous people: 1. The perpetually aggrieved; 2. The angry.

―100 Tips for a Better Life by Ideopunk

Most criticism comes from the angry and aggrieved. It is trivial to dismiss these people in meatspace. It is harder to do so on a pseudonymous text-based online forum.

I think we can create similar filters for the online world.

- Ignore anyone who does not refute the central thesis.

- Ignore arguments that do not endorse an alternative thesis.

- Ignore comments that persuade instead of explaining.

This is a karma comment.

If you choose to downvote one of my posts or comments (to reduce visibility) but you don't want to change my overall karma then you can upvote this comment to counterbalance it.

Might conceivably also be useful if someone wants to upvote one of your posts or comments without changing your overall karma.

Do people really care enough about other people's meaningless internet points to try to balance out scores in this way? I look forward to seeing if these get many votes.

I created this comment because someone already strong-upvoted one post to balance out a strong downvote. I liked how it felt as a gesture of good faith.

I don't mind jumping through a few extra hoops in order to access a website idiosyncratically. But sometimes the process feels overly sectarian.

I was trying out the Tencent Cloud without using Tor when I got a CAPTCHA. Sure, whatever. They verified my email. That's normal. Then they wanted to verify my phone number. Okay. (Using phone numbers to verify accounts is standard practice for Chinese companies.) Then they required me to verify my credit card with a nominal $1 charge. I can understand their wanting to take extra care when it comes to processing international transactions. Then they required me to send a photo of my driver's licence. Fine. Then they required 24 hours to process my application. Okay. Then they rejected my application. I wonder if that's what the Internet feels like every day to non-Americans.

I often anonymize my traffic with Tor. Sometimes I'll end up on the French or German Google, which helps remind me that the Internet I see every day is not the Internet everyone else sees.

Other people use Tor too, which is necessary to anonymize my traffic. Some Tor users aren't really people. They're bots. Because I access the Internet from the same Tor exit relays as these bots, websites often suspect me of being a bot.

I encounter many pages like this.

This is a Russian CAPTCHA.

Prove you're human by typing "вчepaшний garden". Maybe I should write some OCR software to make proving my humanity less inconvenient.

Another time I had to identify which Chinese characters were written incorrectly.

The most frustrating CAPTCHAs require me to annotate images for self-driving cars. I do not mind annotating images of self-driving cars. I do mind, after having spent several minutes annotating images of self-driving cars, getting rejected based on a traffic analysis of my IP address.

I do mind, after having spent several minutes annotating images of self-driving cards

I think it's worst with edge cases like the Google CAPTCHA that shows 16 tiles, where you have to choose which tiles contain the item it is looking for and some of the tiles contain it only a little bit at the edge.

[Book Review] Surfing Uncertainty

Surfing Uncertainty is about predictive coding, the theory in neuroscience that each part of your brain attempts to predict its own inputs. Predictive coding has lots of potential consequences. It could resolve the problem of top-down vs bottom-up processing. It cleanly unifies lots of ideas in psychology. It even has implications for the continuum with autism on one end and schizophrenia on the other.

The most promising thing about predictive coding is how it could provide a mathematical formulation for how the human brain works. Mathematical formulations are great because they let you do things like design falsifiable experiments and simulate things on computers. But while Surfing Uncertainty goes into many of the potential implications of predictive coding, the author never hammers out exactly what "prediction error" means in quantifiable material terms on the neuronal level.

This book is a reiteration of the scientific consensus[1]. Judging by the total absence of mathematical equations on the Wikipedia page for predictive coding, I suspect the book never defines "prediction error" in mathematically precise terms because no such definition exists. There is no scientific consensus on one.

Perhaps I was disappointed with this book because my expectations were too high. If we could write equations for how the human brain performs predictive processing then we would be significantly closer to building an AGI than where we are right now.

The book contains 47 pages of scientific citations. ↩︎

Now me, you know, I really am an iconoclast. Everyone thinks they are, but with me it’s true, you see…

―Lonely Dissent by Eliezer Yudkowsky

…because I used to work as a street magician.

Magicians don't pick locks on stage.

The locks are all fake. The test of skill is convincing you they're real.

Except when the locks are totally real and the magician just bypasses them.

Not always true. Sometimes the locks are 'real' but deliberately chosen to be easy to pick, and the magician practices picking that particular lock. This doesn't change the point much, which is that watching stage magicians is not a good way to get an idea of how hard it is to do X, for basically any value of X. LockPickingLawyer on YouTube is a fun way to learn about locks.

November 20, 2023 08:58:05 UTC

If my phone wasn't broken right now I'd be creating a Robinhood (or whatever) account so I can long Microsoft. Ideally I'd buy shares, but calls (options to buy) are fine.

Why? Because after the disaster at OpenAI, Satya Nadella just hired Sam Altman to work for Microsoft directly.

I agree that MS is undervalued now. The current gain in the stock is roughly equivalent to MS simply absorbing OA LLC's valuation for free, but that's an extremely myopic way to incorporate OA: most of the expected value of the OA LLC was past the cap, in the long tail of high payoffs, so "OA 2" should be worth much more to MS than "OA 1".

November 20, 2023 19:54:45 UTC

Result: Microsoft has gained approximately $100B in market capitalization.

Can you explain why you think that "Microsoft has gained approximately $100B in market capitalization?" I see a big dip in stock price late Thursday, followed by a recovery to exactly the start price 2 hours later.

An aircraft carrier costs $13 billion. An anti-ship cruise missile costs $2 million. Few surface warships survived the first day of the Singularity War.

A cruise missile is a complex machine, guided by sensitive electronics. Semiconductor fabricators are even more complex machines. Few semiconductor factories survived the nuclear retaliation.

A B-52 Stratofortress is a simpler machine.

Robert (Bob) Manchester's bomber flew west from Boeing Field. The crew disassembled their landing gear and dropped it in the Pacific Ocean. The staticky voice of Mark Campbell, Leader of the Human Resistance, radioed into Robert's headset. Robert could barely hear it over the noise of the engines. He turned the volume up. It would damage his hearing but that didn't matter anymore. The attack wouldn't save America. Nothing could at this point. But the attack might buy time to launch a few extra von Neumann probes.

The squadron flew over mile after mile of automated factories. What was once called Tianjin was now just Sector 153. The first few flak cannons felt perfunctory. The anti-air fire increased as they drew closer to the enemy network hub. Robert dropped the bombs. The pilot, Peter Garcia, looked for a target to kamikaze.

They drew closer to the ground. Robert Manchester looked out the window. He wondered why the Eurasian AI had chosen to structure its industry around such humanoid robots.

These are my thoughts on Distribution of N-Acetylgalactosamine-Positive Perineuronal Nets in the Macaque Brain: Anatomy and Implications by Adrienne L. Mueller, Adam Davis, Samantha Sovich, Steven S. Carlson, and Farrel R. Robinson.

A critical period in neuronal development is a time of synaptic plasticity. Perineuronal Nets (PNNs) "form around neurons near the end of critical periods during development". PNNs inhibit the formation of new connections. PNNs inhibit plasticity. We believe this to be causal because[1] "[d]issolving them in the amygdala allowed experience to erase fear conditioning in adult rats, conditioning previously thought to be permanent."

PNNs surround more neurons in some parts of the brain than others. In particular, "PNNs generally surrounded a larger proportion of neurons in motor areas than in sensory areas". For example, "PNNs surround almost 50% of neurons in the ventral horn of the cervical spinal cord but almost none of the neurons in the dorsal horn." We know from other research[2] that motor control is associated with the ventral spinal cord whereas sensory input is dorsal.

PNNs are shown in green [below].

Here is a graph of PNNs in each brain region [below].

The cerebral cortex stands out as having few PNNs everywhere sampled. This makes sense if the cerebral cortex needs to be adaptable and therefore plastic. The most PNNs were discovered in the cerebellar nucleus, a motor structure.

The distribution of PNNs is evidence that motor areas are less plastic than sensory areas. If true, then sensory input may involve more computation than motor output.

The experiment in question may have also dissolved the rest of the extracellular matrix, besides PNNs, and that dissolution may have been what caused the erasure. ↩︎

Technically, the research in question concerns humans, not macaques, but I think that we are similar enough to serve as a model for macaques. ↩︎