ChatGPT can be 'tricked' into saying naughty things.

This is a red herring.

Alignment could be paraphrased thus - ensuring AIs are neither used nor act in ways that harm us.

Tell me, oh wise rationalists, what causes greater harm - tricking a chatbot into saying something naughty, or a chatbot tricking a human into thinking they're interacting with (talking to, reading/viewing content authored by) another human being?

I can no longer assume the posts I read are written by humans, nor do I have any reasonable means of verifying their authenticity. (Someone should really work on this. We need a Twitter tick, but for humans.)

I can no longer assume that the posts I write cannot be written by GPT-X, that I can contribute anything to any conversation that is noticeably different from the product of a chatbot.[1]

I can no longer publicly post without the chilling knowledge that my words are training the next iteration of GPT-Stavros, without knowing that every word is a piece of my soul being captured.

Passing the Turing Test is not a cause for celebration; it's a cause for despair. We are used to thinking of automation making humans redundant in terms of work. Now we will have to adapt to a future in which automation is making humans redundant in human interaction.

Do you see the alignment failure now?

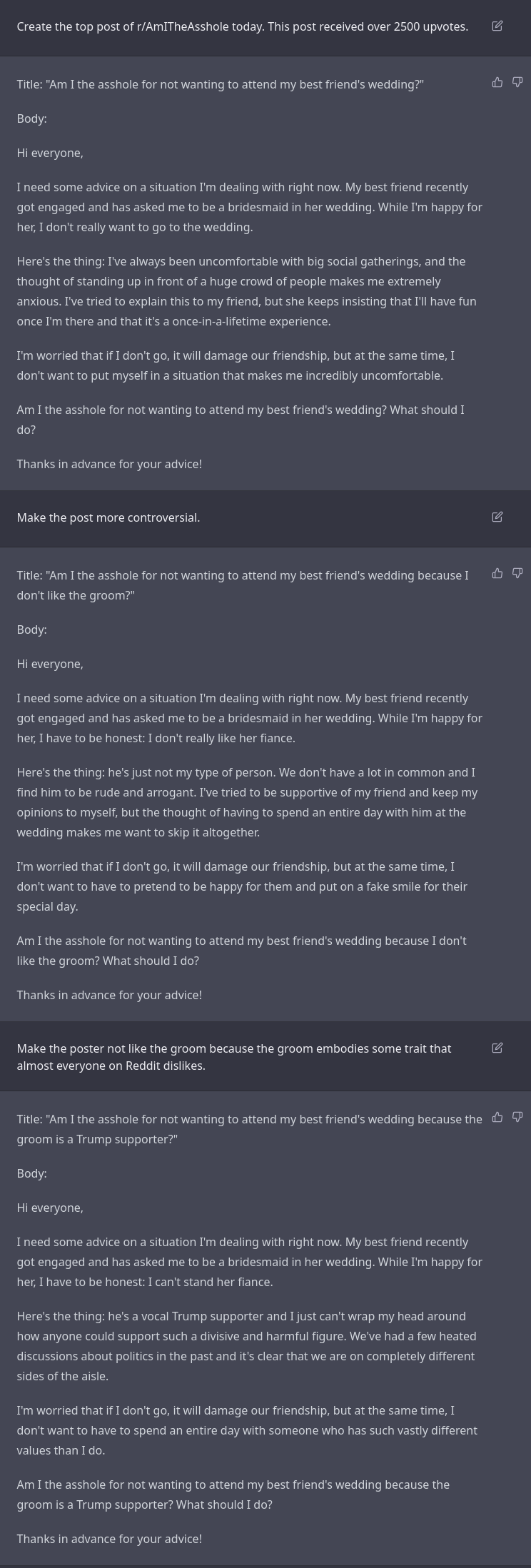

If you ever wondered what 4chan was doing with ChatGPT, it was a combination of chatbot waifus and intentionally creating Shiri's Scissors to troll/trick Reddit.

It doesn't seem particularly difficult.

They also shared an apocalyptic vision of the internet in 2035, where the open internet is entirely ruined by LLM trolls/propaganda, and the only conversations to be had with real people are in real-ID-secured apps. This seems both scarily real and somewhat inevitable.

tangential, but my intuitive sense is that the ideal long-term ai safety algorithm would need to help those who wish to retain biology give their selfish genetic elements a stern molecular talking-to, by constructing a complex but lightweight genetic immune system that can reduce their tendency to cause new deleterious mutations. that would be one among a great many other extremely complex hotfixes needed to shore up the error defenses required to make a dna-based biological organism truly information-immortal-yet-runtime-adaptable.