Thoughts on developing a frontpage recommendation algorithm for LW

It was striking how much this project felt like experiencing the standard failure modes they warn you about with AI/optimizers. The primary underlying recommendation engine we've been using is recombee.com, saving us the work of building out a whole pipeline and possibly benefiting from their expertise. Using their service means that things are an extra degree black-boxy, because we don't even really know what algorithms they're using under the hood.

What was clear is that their models were effective. Our earliest results seemed to be really doing something, with the clickthrough rate of the randomly assigned test group 20-30% higher than control. Only closer investigation showed that this CTR was achieved by showing people the most clickbait-y posts (e.g. "If you weren't such an idiot..."). The algorithm is good at the stated objective but not at my actual objective.

Two main tweaks to the algorithm's default behavior get us most of the way to its current state:

(a) initially I didn't configure the "rotation rate" very well, and so the algorithm would keep showing the same recommendations, i.e. those from the top of its list, i.e. the most clickbaity ones.

(b) we applied a ~hard constraint that the algorithm serve 50% posts older than a year, 30% between 30 and 365 days old, and 20% under 30 days old.
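To make the "rotation rate" in (a) concrete, here is a minimal sketch, assuming a simple multiplicative penalty: each recent time an item was shown to a user scales its score down, until that impression ages out. This is not Recombee's implementation (we don't know their internals); `RotatingRecommender`, `rotation_rate` and `rotation_time` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class RotatingRecommender:
    # Hypothetical parameters, not Recombee's actual settings or internals.
    rotation_rate: float = 0.5  # fraction of score removed per recent impression
    rotation_time: float = 7 * 24 * 3600  # seconds before an impression stops counting
    impressions: dict = field(default_factory=dict)  # (user, item) -> impression timestamps

    def record_impression(self, user: str, item: str, now: float) -> None:
        """Remember that `item` was shown to `user` at time `now` (in seconds)."""
        self.impressions.setdefault((user, item), []).append(now)

    def recommend(self, user: str, scores: dict[str, float], k: int, now: float) -> list[str]:
        """Return the top-k items after demoting ones shown to this user recently."""
        adjusted = {}
        for item, score in scores.items():
            recent = [
                t for t in self.impressions.get((user, item), [])
                if now - t < self.rotation_time
            ]
            # Each recent impression multiplies the score by (1 - rotation_rate).
            adjusted[item] = score * (1 - self.rotation_rate) ** len(recent)
        return sorted(adjusted, key=adjusted.get, reverse=True)[:k]
```

With `rotation_rate=0.5`, a top-scored clickbait post that has just been shown drops below a post it previously outranked, so the list stops serving the same top-of-list items over and over. With the rate set near zero, you get the stuck behavior described in (a).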

These changes, particularly constraining the age, did hurt the clickthrough rate achieved, but they just seem like the obviously correct choice rather than letting LessWrong drift towards clickbait-y-ness. Having worked on a recommendation engine, I begin to feel more deeply why the rest of the Internet looks how it does: a slow decay from algorithms locally pursuing CTR.

This is obviously a very crude way to make the algorithm serve the kinds of posts we think are actually good, but forcing it to serve a wider variety of posts, including older ones, seems to diversify what it serves in a good way. My own feeling is that post quality is currently 7/10 and personalization 6/10.
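The age constraint in (b) can be pictured as a quota per age bucket. A minimal sketch, assuming the recommender hands back candidate posts as `(post_id, score, age_days)` tuples: fill each bucket's share of the slots with that bucket's highest-scoring posts. The function and bucket names are hypothetical, and this is not necessarily how our constraint is applied in practice.

```python
def bucket(age_days: float) -> str:
    """Map a post's age to one of the three age buckets from (b)."""
    if age_days > 365:
        return "old"
    if age_days >= 30:
        return "mid"
    return "recent"


QUOTAS = {"old": 0.5, "mid": 0.3, "recent": 0.2}  # shares of the n slots


def constrained_top_n(candidates: list[tuple[str, float, float]], n: int,
                      quotas: dict[str, float] = QUOTAS) -> list[str]:
    """Pick n posts, respecting per-age-bucket quotas; best score first."""
    by_bucket: dict[str, list[tuple[float, str]]] = {name: [] for name in quotas}
    for post_id, score, age_days in candidates:
        by_bucket[bucket(age_days)].append((score, post_id))

    # Integer slot counts; leftover slots go to the largest fractional remainders.
    raw = {name: n * share for name, share in quotas.items()}
    counts = {name: int(r) for name, r in raw.items()}
    leftover = n - sum(counts.values())
    for name in sorted(raw, key=lambda k: raw[k] - counts[k], reverse=True)[:leftover]:
        counts[name] += 1

    chosen = []
    for name, items in by_bucket.items():
        items.sort(reverse=True)
        # If a bucket has too few candidates, its slots stay empty (no backfill here).
        chosen.extend(items[: counts[name]])
    chosen.sort(reverse=True)
    return [post_id for _, post_id in chosen]
```

Even if every recent post outscores every old one, a list of 10 still gets 5 posts older than a year, which is the point of making the constraint (approximately) hard.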

The signals the algorithm is being fed are "did you click on it?", "did you read it?" (measured as scrolling far enough to reach the comments section), and "did you vote?"

Unfortunately, people seem to vote on the more clickbait-y content at least as much as on newer content, and voting is rare overall, so voting is not a signal that cuts through clickbait-y-ness. People also seem to vote much less on older content; perhaps voting seems to matter less at that point. I'm not sure that means people value this content less, which makes the signal hard to interpret. Together, these mean that we still don't have a great feedback loop for what seems actually good. Our iteration currently is a mix of "clickthrough rate" and "manually inspecting the recommendations for seeming good".

While I like the older recommendations, they highlight the problems with the discussion threads on the older (17-year-old) content. Often we have learned things in the last 17 years, and comments that provide relevant updates would ideally be upvoted and be the first thing I read after the article. But the comments are sorted oldest-first by default, and I rarely find the oldest comments the most useful. I can change the sort, but I think the default sort discourages the kind of comments I wish to read.

The reason they are sorted oldest-first is that posts back then didn't have threading. So many back-and-forth conversations would be made completely unintelligible by changing the sort order.

I think maybe the right solution here is to have a separate comment section for old comments and for new comments, but it's kind of ugly and complicated.

That's a very good point. The idea of changing the sort order for old posts has come up before, I'm glad to be reminded of it and I think we'll try that.

You don't want to reverse-sort, though. While that would prioritize the 'update' comments like "this failed to replicate" or "this was the foundation of a whole new ML research area", it would make a hash of the older comments, which will be nonsensical as you are reading the reply to a reply to a reply ... to a reply to the post. (Even if the old posts had tree comments, rather than forcibly being linearized for lack of threading at that time, reverse-sorting the top-level comments would be weird and confusing.)

You could try to use an LLM to classify comments (or post + comment) by 'updateness', and simply put 'update' comments in a separate block of newest-first comments at the start (while sorting the rest in the usual oldest-first way), and that might work. (The separator could be an explicit section header/bold label, or just a horizontal rule with the difference left implicit.) Include a few dozen examples, taken from new comments on old posts, to few-shot it. I bet a nice cheap LLM like GPT-4o or Claude-3.5-sonnet can handle it without a problem: it's the standard 'I know it when I see it' semantic property that few-shot LLMs work well for.
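A rough sketch of that layout, assuming the LLM call is wrapped in some `is_update` function (hypothetical; no particular model or API is shown): build a few-shot prompt from labeled examples, then put flagged comments in a newest-first block and leave everything else oldest-first. The prompt wording and all names here are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass
class Comment:
    id: str
    posted_at: datetime
    text: str


def build_prompt(examples: list[tuple[str, bool]], post_title: str, comment: str) -> str:
    """Build a few-shot prompt asking whether a comment is an 'update' on an old post."""
    parts = [
        "Label each comment on an old post as UPDATE (it reports something learned "
        "since the post, e.g. a failed replication) or NORMAL.\n"
    ]
    for text, is_update in examples:
        parts.append(f"Comment: {text}\nLabel: {'UPDATE' if is_update else 'NORMAL'}\n")
    parts.append(f"Post: {post_title}\nComment: {comment}\nLabel:")
    return "\n".join(parts)


def order_comments(
    comments: list[Comment], is_update: Callable[[Comment], bool]
) -> tuple[list[Comment], list[Comment]]:
    """Split comments into an 'update' block (newest-first) and the rest (oldest-first)."""
    flags = {c.id: is_update(c) for c in comments}  # call the classifier once per comment
    updates = sorted((c for c in comments if flags[c.id]),
                     key=lambda c: c.posted_at, reverse=True)
    rest = sorted((c for c in comments if not flags[c.id]), key=lambda c: c.posted_at)
    return updates, rest
```

Rendering would then be the `updates` block, the separator, and the `rest` block in their existing oldest-first order, so the old linear conversations stay readable.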

A simpler thing might be to say that what Randall identifies is a natural kind: there are 'old' comments and there are 'new' comments, and they are fundamentally different. A heuristic might be: Comments on a post within a year of posting are 'old', and comments after that are 'new'; as before, 'new' comments get put in a separate section sort-by-newest at the beginning, then 'old' comments get sort-by-oldest. If that heuristic doesn't look good, you could look for a cutpoint: the largest temporal gap between 2 successive top-level comments. Then everything after that is 'new' vs 'old'. (Because usually, if there is a burst of comments on posting, and then someone revisits it long afterwards to update it, the largest gap will be somewhere in the 'new' subsequence, so this will be conservative in creating out-of-order comments and show only the newest.)
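Both heuristics are simple enough to sketch directly. This assumes we only have the post's timestamp and the timestamps of its top-level comments; the function names and the 365-day default are illustrative. Each function returns the 'new' comments newest-first and the 'old' ones oldest-first.

```python
from datetime import datetime, timedelta


def split_by_age(posted_at: datetime, comment_times: list[datetime],
                 window: timedelta = timedelta(days=365)
                 ) -> tuple[list[datetime], list[datetime]]:
    """'Old' = within `window` of posting (oldest-first); 'new' = later (newest-first)."""
    old = sorted(t for t in comment_times if t - posted_at <= window)
    new = sorted((t for t in comment_times if t - posted_at > window), reverse=True)
    return new, old


def split_by_largest_gap(comment_times: list[datetime]
                         ) -> tuple[list[datetime], list[datetime]]:
    """Cut at the largest gap between successive top-level comments."""
    times = sorted(comment_times)
    if len(times) < 2:
        return [], times
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    cut = max(range(len(gaps)), key=gaps.__getitem__) + 1  # first comment after the gap
    return sorted(times[cut:], reverse=True), times[:cut]
```

For a post from January 2009 with a burst of comments that week, plus revisits in June 2016 and March 2024, the one-year rule marks both revisits as 'new'. The largest-gap rule instead cuts between 2016 and 2024 (a slightly longer gap than 2009 to 2016) and pulls out only the 2024 comment, which is the conservative behavior described above.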

I definitely don't want newest-first. The magic (new and upvoted) sort seems to work well, and so does the default (top-scoring) sort. A concrete example is this post on "an especially elegant evpsych experiment". It's not the newest, but it is top-scoring.

I think an AI could reasonably convert ancient discussions to light use of threads to preserve most of the conversation flow, where it has value.

Suggestion: a setting to determine how many rows are displayed in the frontpage list. Currently it seems to be 15. I'd expect many logged-in users would appreciate loading more by default, e.g. 30.

I wonder if there's a way to give the black-box recommender a different objective function. CTR is bad for the obvious clickbait reasons, but user-interaction signals are still valuable if you can find the right one to use.

I would propose that returning to the site some time in the future is a better signal of quality than CTR, assuming the future is far enough away. You could try a week, a month, and a quarter.

This is maybe a good time to use reinforcement learning, since the signal is far away from the decision you need to make. When someone interacts with an article, reward the things they interacted with n weeks ago. Combined with karma, I bet that would be a better signal than CTR.
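One way to turn that into a training signal, sketched under assumptions: interactions and site visits are logged per user with integer day indices, and an interaction earns a reward of 1.0 if the user shows up again roughly n weeks later. The four-week delay and one-week tolerance are placeholder values, `delayed_rewards` is a hypothetical name, and how to blend this with karma is left open.

```python
from collections import defaultdict


def delayed_rewards(interactions, visits, delay_days=28, tolerance_days=7):
    """Reward each (user, post) interaction with 1.0 if the user is seen on the site
    again between delay_days and delay_days + tolerance_days later, else 0.0.

    interactions: iterable of (user, post, day); visits: iterable of (user, day).
    Days are integer day indices (a hypothetical encoding).
    """
    visit_days = defaultdict(set)
    for user, day in visits:
        visit_days[user].add(day)

    rewards = {}
    for user, post, day in interactions:
        window = range(day + delay_days, day + delay_days + tolerance_days)
        returned = any(d in visit_days[user] for d in window)
        # If the same post was interacted with several times, keep the best outcome.
        rewards[(user, post)] = max(rewards.get((user, post), 0.0), float(returned))
    return rewards
```

The reward only becomes known weeks after the recommendation was made, which is why this looks more like a delayed-reward (reinforcement learning) setup than a per-click label.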

Enriched seems to be mostly working very well.

One issue I think I am seeing (I may be imagining it) is that things I don't read stick around. On the "Latest" tab, if I see a new post, read the title and think "not for me", I know that it won't sit there clogging up the tab forever. On Enriched, casual observation suggests that when the algorithm decides I would like something and I read the title and decide no, it continues to occupy a slot on the list for I don't know how long (forever?).

First question: are you confident the post that sticks around is from the recommendations half of the list and not the Latest half? (A screenshot might be helpful.)

We've configured it so that items should rotate out. Let me know if something really is persisting for days.

I will start observing more carefully and let you know if anything is persisting for days. It's very possibly imagined, for example from seeing posts without the stars (so from Latest), but because I don't see a "Latest" header I lack the reassurance that they are only temporary.

I have switched back to the Latest tab, since if I get shown old stuff I've already seen, I want it to be because people are talking about it in an interesting way, not just because people are clicking on it.

EDIT: But I appreciate the willingness to experiment.

I'm curious how much stuff you've already seen is showing up in your Enriched list. There's supposed to be zero or very little, though we've had some trouble making that the case.

It would also be good to know if this is stuff you've read onsite (greyed out) vs. read elsewhere.

Looking at it right now, the multi-year-old posts are grey links, while the month-scale posts are black links (ones I saw and deliberately decided not to read at the time).

Cheers!

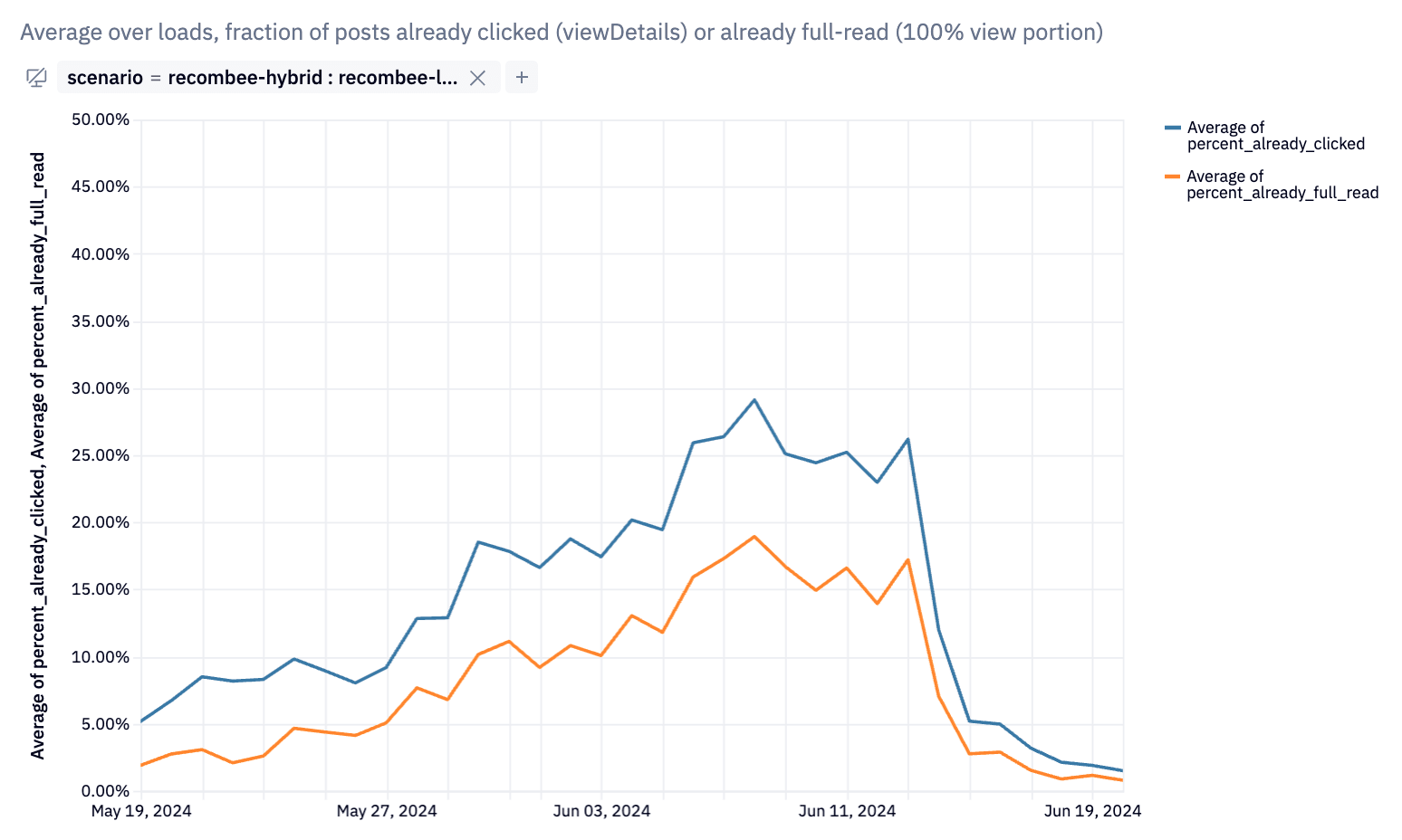

Seems you're unlucky getting the greyed-out ones. We put in effort to ensure most people don't get content they've read, and have mostly succeeded:

(full read means you scrolled all the way to the comments)

That said, when I reran the numbers after a more recent algorithm change, we saw a much higher clickthrough rate on clicked-but-not-scrolled-to-comments posts than on any other kind (~10% vs ~4%), so I expect we'll experiment with reintroducing some greyed-out ones for people.
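A minimal sketch of the kind of filter being described, assuming each post has a per-user read status where "full read" means scrolling to the comments as above. `ReadStatus`, `filter_candidates` and the `allow_partial` switch (standing in for the experiment of reintroducing clicked-but-not-fully-read posts) are hypothetical names, not the site's actual code.

```python
from enum import Enum


class ReadStatus(Enum):
    UNREAD = 0
    CLICKED = 1    # opened, but did not scroll to the comments
    FULL_READ = 2  # scrolled all the way to the comments


def filter_candidates(candidates: list[str], status: dict[str, ReadStatus],
                      allow_partial: bool = False) -> list[str]:
    """Drop posts the user has already read; optionally keep partially read ones."""
    allowed = {ReadStatus.UNREAD}
    if allow_partial:
        allowed.add(ReadStatus.CLICKED)
    # Posts with no recorded status are treated as unread.
    return [p for p in candidates if status.get(p, ReadStatus.UNREAD) in allowed]
```

Posts the user saw on the list but never clicked have no status here at all, which is why they are harder to filter out than read ones.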

Regrettably, it's harder still to avoid recommending posts that you saw and intentionally didn't click. As Robert said, I don't think we'll get rid of the ability to just use Latest.

I would love to have a checkbox or something next to each post to indicate "I saw this and I don't want to click on it"

For what it's worth, we don't have a ton of insight into the algorithms driving the recommendations, beyond knowing what they take as inputs, and the additional constraints/modifications we place on their outputs. (But based on observing the recommendations for a while, it doesn't seem like "this post has active discussion on it" is much of a factor.)

Anyways, we're happy to have people switch back to the Latest tab for whatever reason and don't currently have any plans to get rid of it. (Phrased defensively because I am sometimes surprised by proposals from others on the team, but at any rate I would strongly oppose getting rid of it unless there was a similarly-legible replacement that served a similar purpose.)

Appreciate the feedback re: desiderata!

I'm also an edge case, I think for newer users there's probably a much higher chance they're getting recommended stuff they haven't seen, which seems probably good.

(I somehow managed to miss that you were getting recommended a bunch of stuff you'd already read, so it's possible my response was a bit confusing. As Ruby says, we're trying to get already-read content filtered out, though it's not doing a perfect job, especially for long-term users who've read a decent chunk of the posts on the site.)

Not sure this qualifies, but I try to avoid instantiating complicated models for ethical reasons.

In the past few months, the LessWrong team has been making use of the latest AI tools (given that they unfortunately exist[1]) for art, music, and deciding what we should all be reading.

Our experiments with the latter, i.e. the algorithm that chooses which posts to show on the frontpage, have produced results sufficiently good that, at least for now, we're making Enriched the default for logged-in users[2]. If you're logged in and you've never switched tabs before, you'll now be on the Enriched tab. (If you don't have an account, making one takes 10 seconds.)

To recap, here are the currently available tabs (subject to change):

Note that posts which are the result of the recommendation engine have a sparkle icon after the title (on desktop, space permitting):

Posts from the last 48 hours have their age bolded:

Why make Enriched the default?

To quote from my earlier post about frontpage recommendation experiments:

We found that a hybrid posts list of 50% Latest and 50% Recommended lets us get the benefits of each algorithm[4].

Shifting the age of posts

When we first implemented recommendations, they were very recency-biased. My guess is that's because the data we were feeding it was of people reading and voting on recent posts, so it learned those were the ones we liked. In a manner less elegant than I would have preferred, we constrained the algorithm to mostly serve content more than 30 or 365 days old. You can see the evolution of the recommendation engine, on the age dimension, here:

I give more detailed thoughts about what we found in the course of developing our recommendation algorithm in this comment below.

Feedback, please

Although we're making Enriched the general default, this feature direction is still experimental and could turn out to be a bad idea, most likely because of subtler effects that were hard to detect from initial analytics data and brief user interviews.

Any feedback on how you do/don't like what you're getting recommended would be great, and even more so if you can tell us what you'd like to be seeing.

I think the results of the current algorithm are decent; I also imagine that a lot more is possible in terms of detecting what a given user would most want and benefit from seeing.

As always, happy reading!

Well, if the current tools existed and progress stopped here or soon, that'd be great: these are useful tools. What's unfortunate is that these tools seem to be the product of a generator that isn't going to stop here.

We plan to roll this out to logged-out users too, but doing so requires additional technical work.

Since the dawn of LessWrong 2.0, posts on the frontpage have been sorted according to the Hacker News algorithm:

$$\text{score} = \frac{\text{karma}}{(\text{age} + b)^{a}}$$

Each post is assigned a score that's a function of how much karma it has and how old it is, with posts hyperbolically discounted over time. In the last few years, we've enabled customization by allowing users to manually boost or penalize the karma of posts in this algorithm based on tag. The site has default tag modifiers to boost Rationality and World Modeling content (introduced when it seemed like AI content was going to eat everything).
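The score formula, with the tag modifiers folded in, fits in a few lines. This sketch assumes age is measured in hours and that a tag modifier is simply added to a post's karma; the default values of `a` and `b` are illustrative, not LessWrong's actual constants, and `frontpage_score` is a hypothetical name.

```python
def frontpage_score(karma: float, age_hours: float, tags: list[str],
                    tag_modifiers: dict[str, float],
                    a: float = 1.15, b: float = 2.0) -> float:
    """Hacker News-style score: karma / (age + b) ** a, with per-tag karma adjustments.

    a, b and the additive treatment of tag modifiers are illustrative assumptions.
    """
    effective_karma = karma + sum(tag_modifiers.get(tag, 0.0) for tag in tags)
    return effective_karma / (age_hours + b) ** a
```

Sorting posts by this score in descending order gives the frontpage order; under this sketch, a boosted tag behaves like extra karma that decays along with everything else as the post ages.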

We initially tried variations on the recommendations engine to get it to also provide the "latest" half of the posts list, but with our current set-up, that seemed to work much worse than just interleaving with Latest posts.
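For comparison, the interleaving of Latest with Recommended posts (the 50/50 hybrid list) is simple. A sketch, assuming both sources return ordered lists of post IDs: alternate between the two lists and skip any post already placed. The function name is hypothetical.

```python
from itertools import zip_longest


def interleave(latest: list[str], recommended: list[str], n: int) -> list[str]:
    """Alternate Latest and Recommended posts, skipping duplicates, up to n posts."""
    result: list[str] = []
    seen: set[str] = set()
    for pair in zip_longest(latest, recommended):  # pads the shorter list with None
        for post in pair:
            if post is not None and post not in seen:
                seen.add(post)
                result.append(post)
                if len(result) == n:
                    return result
    return result
```

Because duplicates are skipped, the result is only approximately 50% Latest and 50% Recommended.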