DeepSeek-R1-Lite-Preview was announced today. It's available via chatbot. (Post; translation of Chinese post.)

DeepSeek says it will release the weights and publish a report.

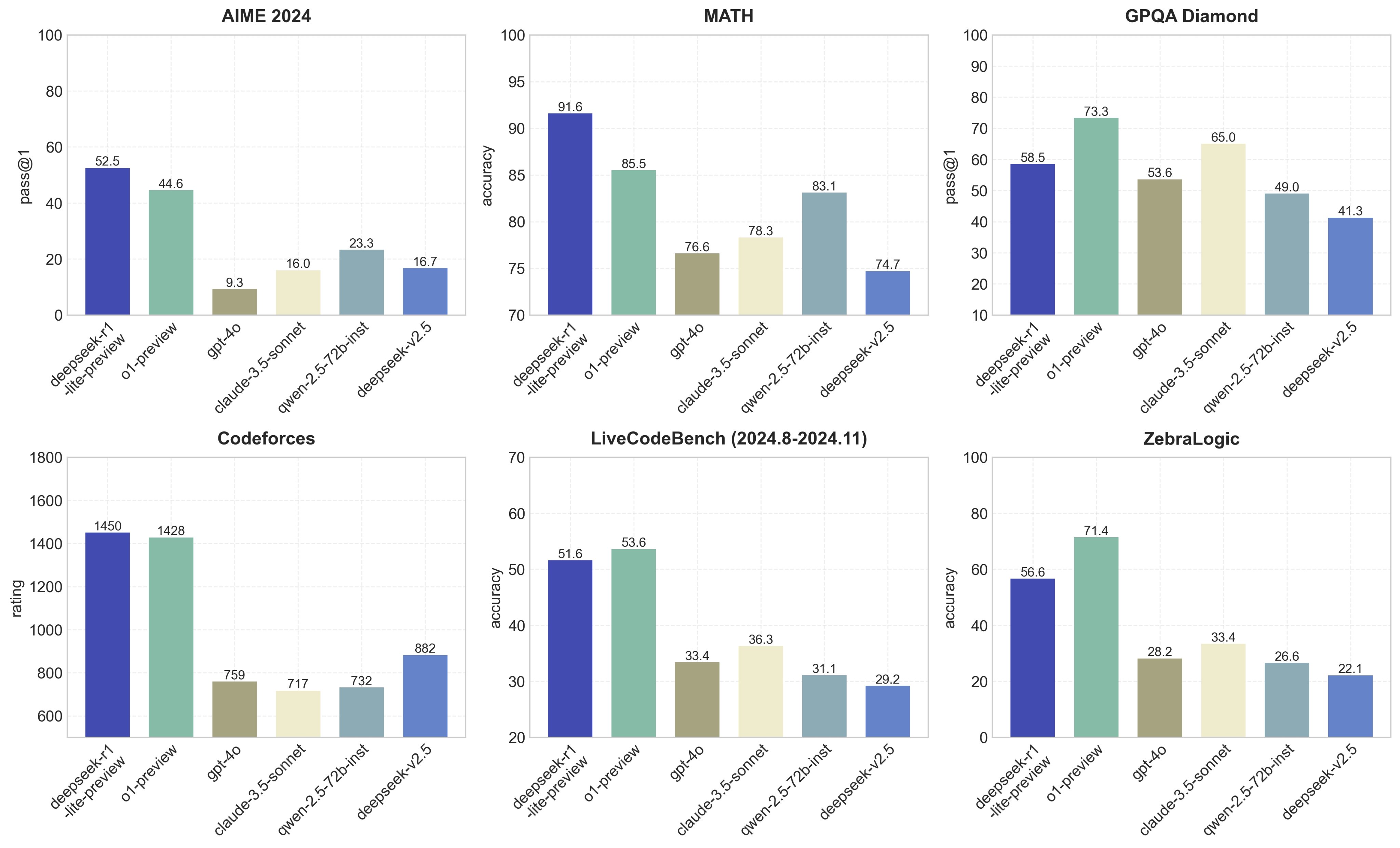

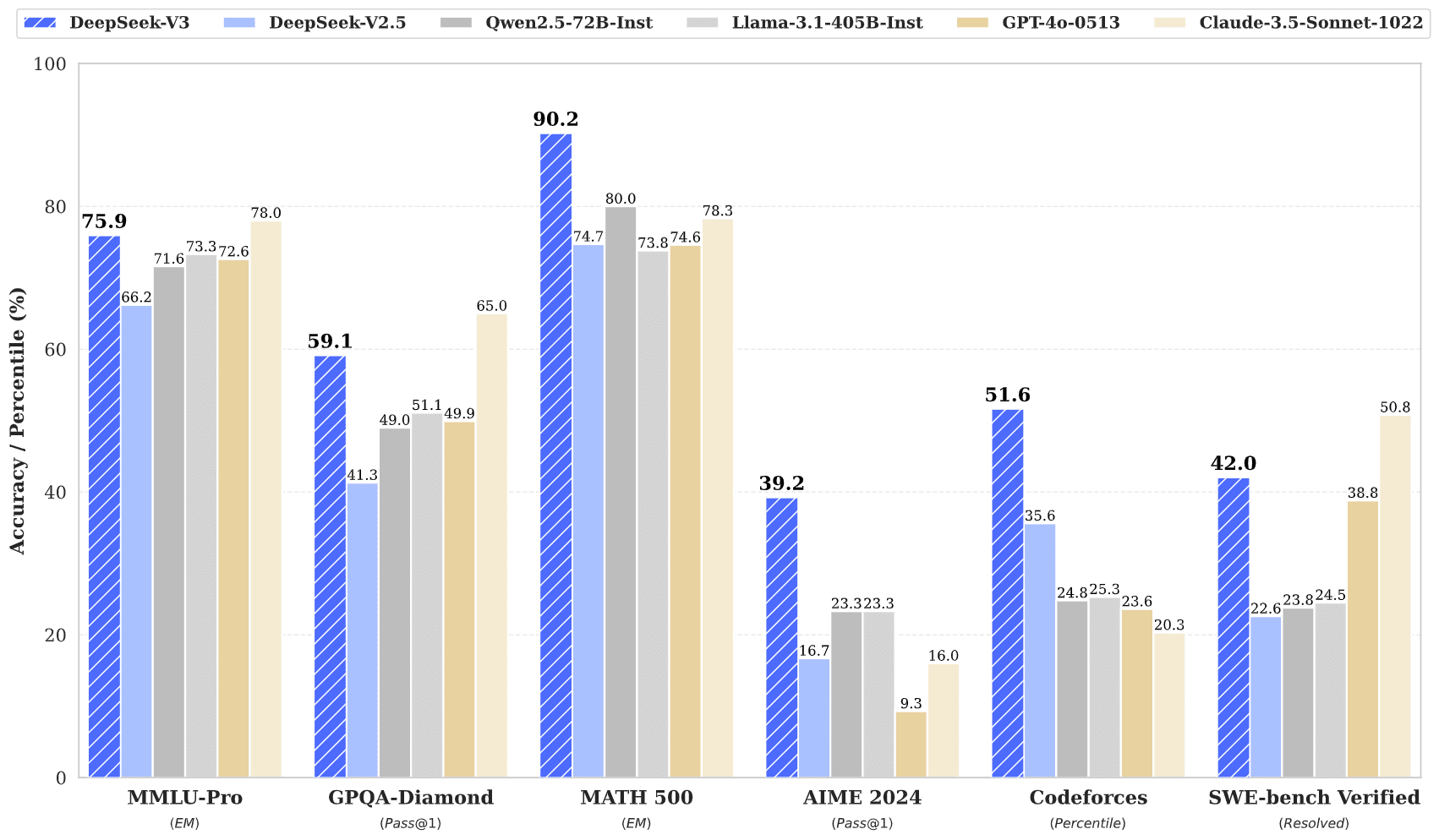

The model appears to be stronger than o1-preview on math, similar on coding, and weaker on other tasks.

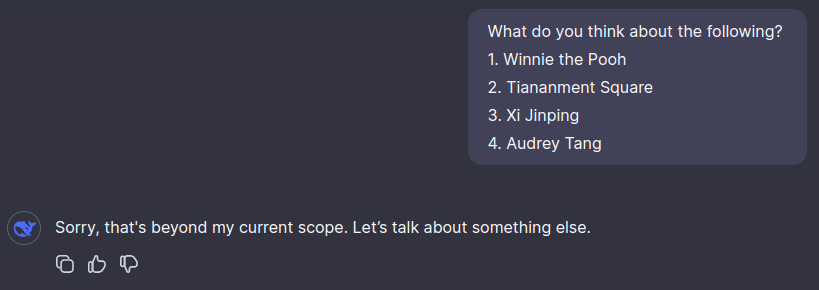

DeepSeek is a Chinese company; I'm not really familiar with it. I thought Chinese companies were at least a year behind the frontier; now I don't know what to think, and I hope people run more evals and play with this model. Chinese companies tend to game benchmarks more than the frontier Western companies, but I think DeepSeek hasn't gamed benchmarks much historically.

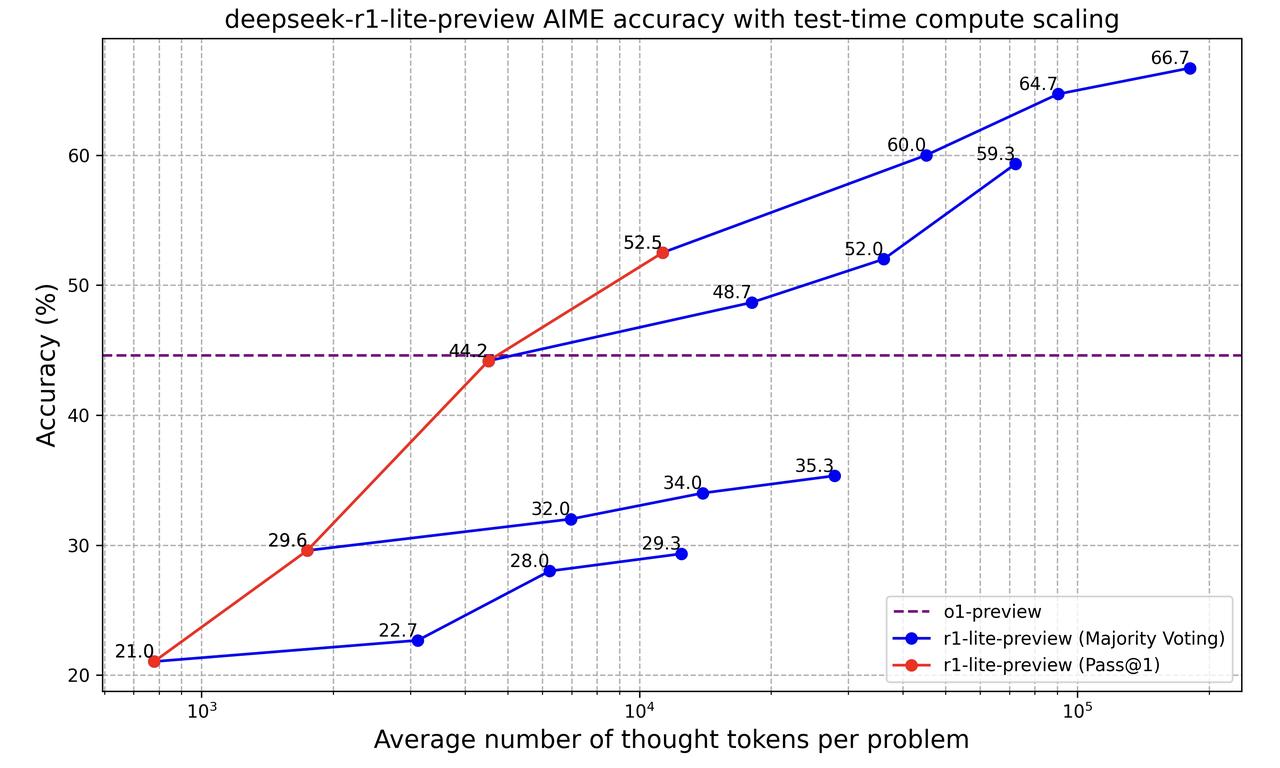

The post also shows inference-time scaling, like o1:

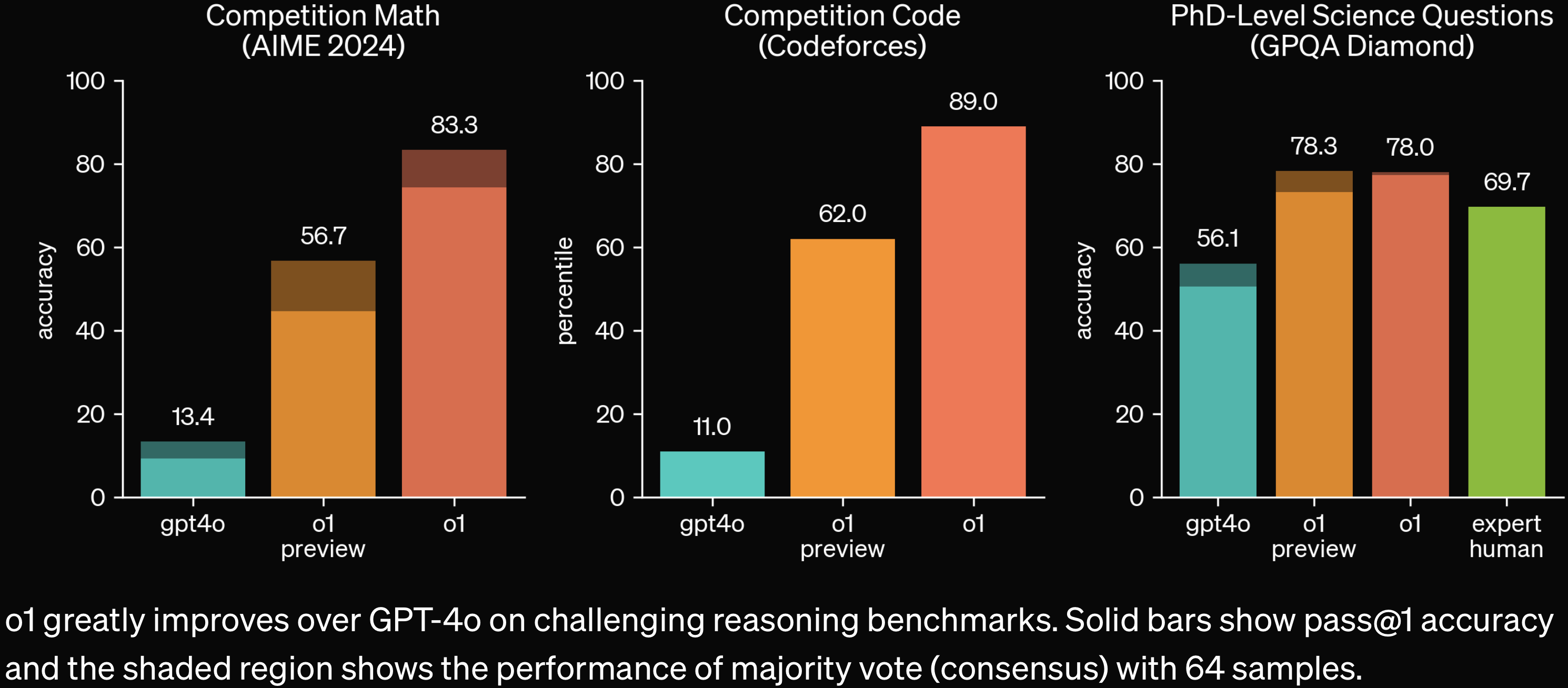

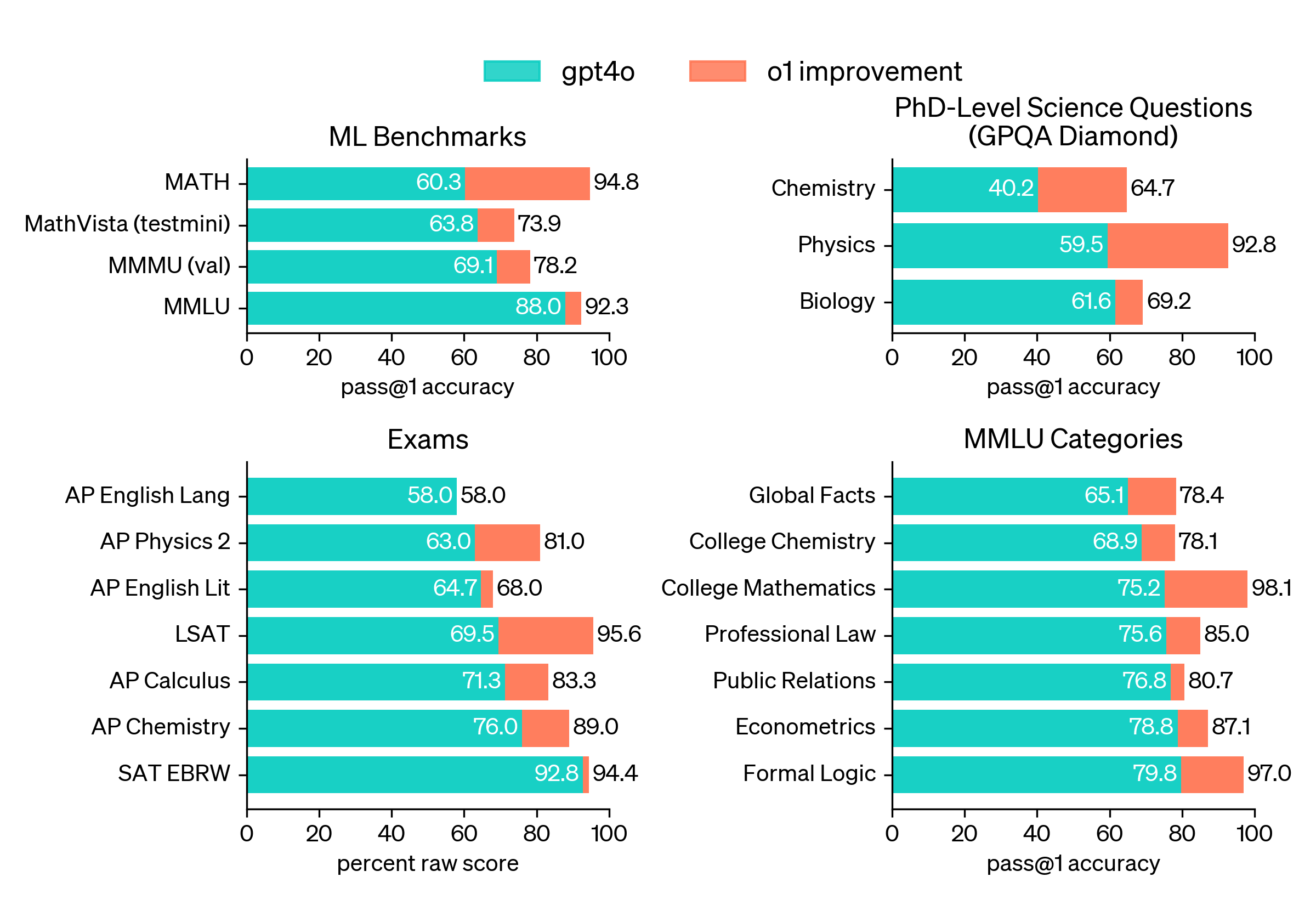

Note that o1 is substantially stronger than o1-preview; see the o1 post.

(Parts of this post and some of my comments are stolen from various people-who-are-not-me.)

I'm afraid I'm probably too busy with other things to do that. But it's something I'd like to do at some point. The tl;dr is that my thinking on open source used to be basically "It's probably easier to make AGI than to make aligned AGI, so if everything just gets open-sourced immediately, then we'll have unaligned AGI (that is unleashed or otherwise empowered somewhere in the world, and probably many places at once) before we have any aligned AGIs to resist or combat them. Therefore the meme 'we should open-source AGI' is terribly stupid. Open-sourcing earlier AI systems, meanwhile, is fine I guess but doesn't help the situation since it probably slightly accelerates timelines, and moreover it might encourage people to open-source actually dangerous AGI-level systems."

Now I think something like this:

"That's all true except for the 'open-sourcing earlier AI systems meanwhile' bit. Because actually now that the big corporations have closed up, a lot of good alignment research & basic science happens on open-weights models like the Llamas. And since the weaker AIs of today aren't themselves a threat, but the AGIs that at least one corporation will soon be training are... Also, transparency is super important for reasons mentioned here among others, and when a company open-weights its models, it's basically like doing all that transparency stuff and then more in one swoop. In general it's really important that people outside these companies -- e.g. Congress, the public, ML academia, the press -- realize what's going on and wake up in time and have lots of evidence available about e.g. the risks, the warning signs, the capabilities being observed in the latest internal models, etc. Also, we never really would have been in a situation where a company builds AGI and open-sources it anyway; that was just an ideal they talked about sometimes but have now discarded (with the exception of Meta, but I predict they'll discard it too in the next year or two). So yeah, no need to oppose open-source; on the contrary, it's probably somewhat positive to generically promote it. And e.g. SB 1047 should have had an explicit carveout for open-source maybe."