[Update 4 (12/23/2023): Tamay has now conceded.]

[Update 3 (3/16/2023): Matthew has now conceded.]

[Update 2 (11/4/2022): Matthew Barnett now thinks he will probably lose this bet. You can read a post about how he's updated his views here.]

[Update 1: we have taken this bet with two people, as detailed in a comment below.]

Recently, a post claimed,

it seems very possible (>30%) that we are now in the crunch-time section of a short-timelines world, and that we have 3-7 years until Moore's law and organizational prioritization put these systems at extremely dangerous levels of capability.

We (Tamay Besiroglu and I) think this claim is strongly overstated, and disagree with the suggestion that “It's time for EA leadership to pull the short-timelines fire alarm.” This post received a fair amount of attention, and we are concerned about a view of the type expounded in the post causing EA leadership to try something hasty and ill-considered.

To counterbalance this view, we express our disagreement with the post. To substantiate our disagreement and make it concrete, we are offering to bet up to $1000 against the idea that we are in the “crunch-time section of a short-timelines world”.

In particular, we are willing to bet at 1:1 odds that no more than one of the following events will occur by 2026-01-01, or alternatively, at 3:1 odds (in our favor) that no more than one of the following events will occur by 2030-01-01.

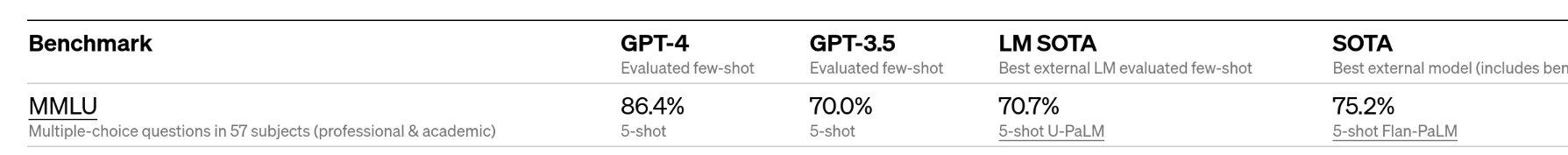

- A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark

- A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts)

- A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule

- A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark

- A gold medal for the IMO Grand Challenge (conditional on it being clear that the questions were not in the training set)

- A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time)

- Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles
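
The resolution rule for the list above ("no more than one of the following events will occur" by the deadline) can be sketched in a few lines of Python. This is only an illustration: the event labels and the function name `authors_win` are hypothetical, and it assumes that "by 2026-01-01" means strictly before that date, which the post does not spell out.

```python
from datetime import date
from typing import Dict, Optional

# Hypothetical short labels for the seven listed events (not from the post).
EVENTS = (
    "mmlu_80", "compute_1e30_or_1bn", "math_90", "apps_80",
    "imo_gold", "dish_robot", "tesla_fsd",
)

def authors_win(occurred_on: Dict[str, Optional[date]], deadline: date) -> bool:
    """Return True if no more than one listed event occurred before the deadline.

    occurred_on maps an event label to the date it occurred, or None
    (or a missing key) if it has not occurred.
    Assumption: an event counts only if it occurred strictly before the deadline.
    """
    count = 0
    for name in EVENTS:
        when = occurred_on.get(name)
        if when is not None and when < deadline:
            count += 1
    return count <= 1
```

For example, `authors_win({"math_90": date(2025, 6, 1)}, date(2026, 1, 1))` returns `True`, because only one event occurred before the deadline; a second event before that date would flip the result.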

Since we recognize that betting incentives can be weak over long time-horizons, we are also offering the option of employing Tamay’s recently described betting procedure in which we would enter a series of repeated 2-year contracts until the resolution date.

Specific criteria for bet resolution

For each task listed above, we offer the following concrete resolution criteria.

| Task | Full details |
| --- | --- |
| A model/ensemble of models achieves >80% on all tasks in the MMLU benchmark | A public document reveals that a model or ensemble of models had an average accuracy score of more than 80.0% on every task listed in the Measuring Massive Multitask Language Understanding benchmark by Dan Hendrycks et al. |
| A credible estimate reveals that an AI lab deployed EITHER >10^30 FLOPs OR hardware that would cost $1bn if purchased through competitive cloud computing vendors at the time on a training run to develop a single ML model (excluding autonomous driving efforts) | If there is a dispute, our counterparty will offer an example that they believe may trigger this condition to resolve positively. Then, we will use the method described in this post to estimate the number of FLOPs used for a given training run. We will then find the competitive rates by taking the minimum of the most recent prices found on Google Cloud, Microsoft Azure, and Amazon AWS, or the equivalent services corresponding to each of those companies if their names change in the future. We will determine the rate corresponding to the processing unit that would be capable of training the model in the paper. If the training run was completed more than 2 years before resolution, an adjustment is applied to the cost of the training run to account for price declines in computing. This adjustment will be based on a credible estimate of price-performance declines in the last few years, OR a constant decline of 30% per year, whichever is more favorable to the counterparty. We will use inflation-adjusted 2022 US dollars, using the CPI as reported by the BLS. |
| A model/ensemble of models will achieve >90% on the MATH dataset using a no-calculator rule | A public document reveals that a model or ensemble of models had an average accuracy score of more than 90% when evaluated on the MATH benchmark by Dan Hendrycks et al. The no-calculator rule ensures that competitors don't use calculators or script executors. As Hendrycks explains, “With a script executor, competitors do not need to figure out how to succinctly reason to the conclusion and cleverness is rarely needed.” |
| A model/ensemble of models achieves >80% top-1 strict accuracy on competition-level problems on the APPS benchmark | A public document reveals that a model or ensemble of models had an average top-1 strict accuracy score of more than 80% on the competition-level coding problems listed in the APPS benchmark by Dan Hendrycks et al. Top-1 accuracy is distinguished, as in the paper, from top-k accuracy, in which k outputs from the model are generated and the best output is selected. Strict accuracy "requires programs pass every test case" (as defined in section 4.2 of the paper). |
| A gold medal for the IMO Grand Challenge | We will use the same criteria as revealed by Paul Christiano here. To clarify, we will not require that the model be open-source, only that it clearly demonstrates the ability to obtain a gold medal for the IMO. |
| A robot that can, from beginning to end, reliably wash dishes, take them out of an ordinary dishwasher and stack them into a cabinet, without breaking any dishes, and at a comparable speed to humans (<120% the average time) | For this task, a credible video or report must be public that clearly demonstrates that this ability is feasible to a very high level of confidence. Videos or reports that do not report rates of system malfunctions, or do not permit the calculation of how much time it would take to take out and stack at least 10 dishes, are not admissible. An error is something that would have a reasonable chance of a dish being broken if performed in an ordinary setting. Reliability is defined as an error rate below 1 percent across all full loading-and-unloading tests, when given natural starting setups, i.e., pre-programmed precise robotic planning is not admissible. This blog post will be consulted to determine the average time it takes to load and unload dishes, under the section “Time Study Of Dishes”. In case there is a dispute over this question, our counterparty will offer an example that they believe may trigger this condition to resolve positively. We will then email the first author of whatever paper, blog post, or video is in question, asking whether they believe their system satisfies the conditions specified here, using their best judgment. |
| Tesla’s full-self-driving capability makes fewer than one major mistake per 100,000 miles | We will require that a credible document from Tesla report crash or error-rate statistics for cars using their full self-driving mode. The error rate must include errors that would have happened if it were not for the presence of human intervention, and it must be calculated over a reasonable distribution over all weather and road conditions that humans normally drive in. The cars must be able to go from arbitrary legal locations in the US to other arbitrary locations without errors or interventions, at least in theory. A “major mistake” is defined as a mistake that requires human intervention to correct, as otherwise the car would grossly violate a traffic law, crash into an object, fail to reach its intended destination, or have the potential to put human life into serious jeopardy. |
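
To make the compute-cost criterion in the table more concrete, here is a rough Python sketch of one way the arithmetic could go. It is not the authors' procedure. The function names, the `utilization` parameter, and the per-unit throughput input are all hypothetical, and the total FLOP count is taken as given rather than estimated by the method the post links to. The sketch also assumes that the price-decline adjustment scales a cost computed at recent cloud prices back up to the prices that prevailed when the run took place, and that a larger decline rate is the one "more favorable to the counterparty" because it yields a higher historical cost.

```python
from typing import Optional

def cost_at_recent_prices(total_flops: float,
                          unit_flops_per_second: float,
                          utilization: float,
                          price_per_unit_hour: float) -> float:
    """Cloud cost (USD) of a training run at the most recent hourly price.

    utilization is a hypothetical fraction of peak throughput actually achieved.
    """
    unit_seconds = total_flops / (unit_flops_per_second * utilization)
    return unit_seconds / 3600.0 * price_per_unit_hour

def adjust_for_price_decline(cost_recent: float,
                             years_since_run: float,
                             credible_decline: Optional[float] = None,
                             default_decline: float = 0.30) -> float:
    """Scale a recent-price cost back to prices at the time of the run.

    Assumption: no adjustment for runs completed 2 years or less before
    resolution; otherwise use the larger of the credible estimate and the
    constant 30% per year decline.
    """
    if years_since_run <= 2:
        return cost_recent
    rate = default_decline
    if credible_decline is not None:
        rate = max(rate, credible_decline)
    return cost_recent / (1.0 - rate) ** years_since_run
```

Under these assumptions, a run that would cost $100 at recent prices and finished 3 years before resolution is treated as costing about $292 at the time (100 / 0.7^3). Converting the result to inflation-adjusted 2022 US dollars is a separate step that is not shown here.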

Some clarifications

For each benchmark, we will exclude results that employed some degree of cheating. Cheating includes cases in which the rules specified in the original benchmark paper are not followed, or cases where some of the test examples were included in the training set.

Ah, my bad, missed that part.

I guess not knowing where the dishes are ahead of time also rules out pre-training on the specific test environments, but it might be worth making that explicit, too.