(Cross-posted from Twitter, and therefore optimized somewhat for simplicity.)

Recent discussions of AI x-risk in places like Twitter tend to focus on "are you in the Rightthink Tribe, or the Wrongthink Tribe?". Are you a doomer? An accelerationist? An EA? A techno-optimist?

I'm pretty sure these discussions would go way better if the discussion looked less like that. More concrete claims, details, and probabilities; fewer vague slogans and vague expressions of certainty.

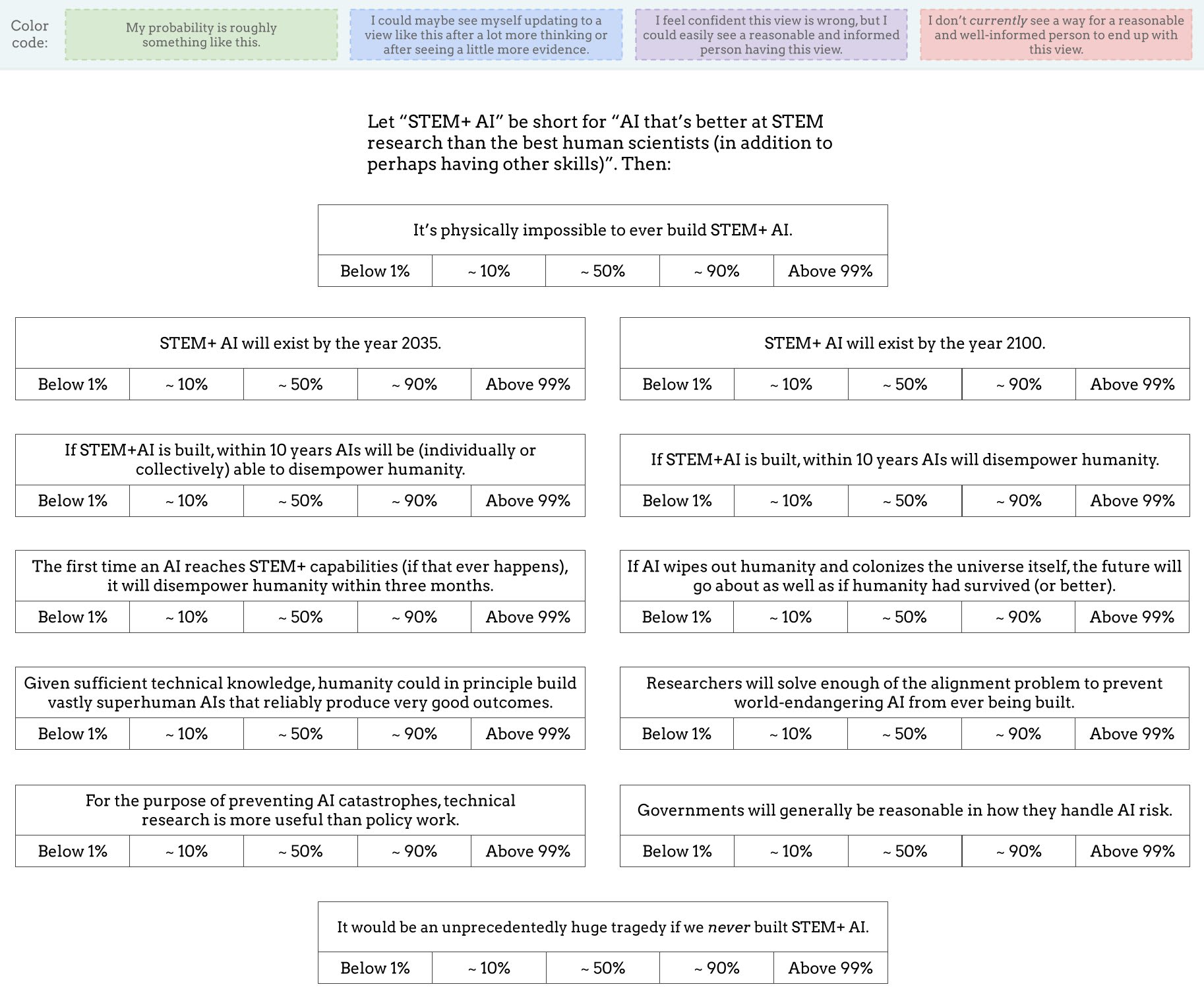

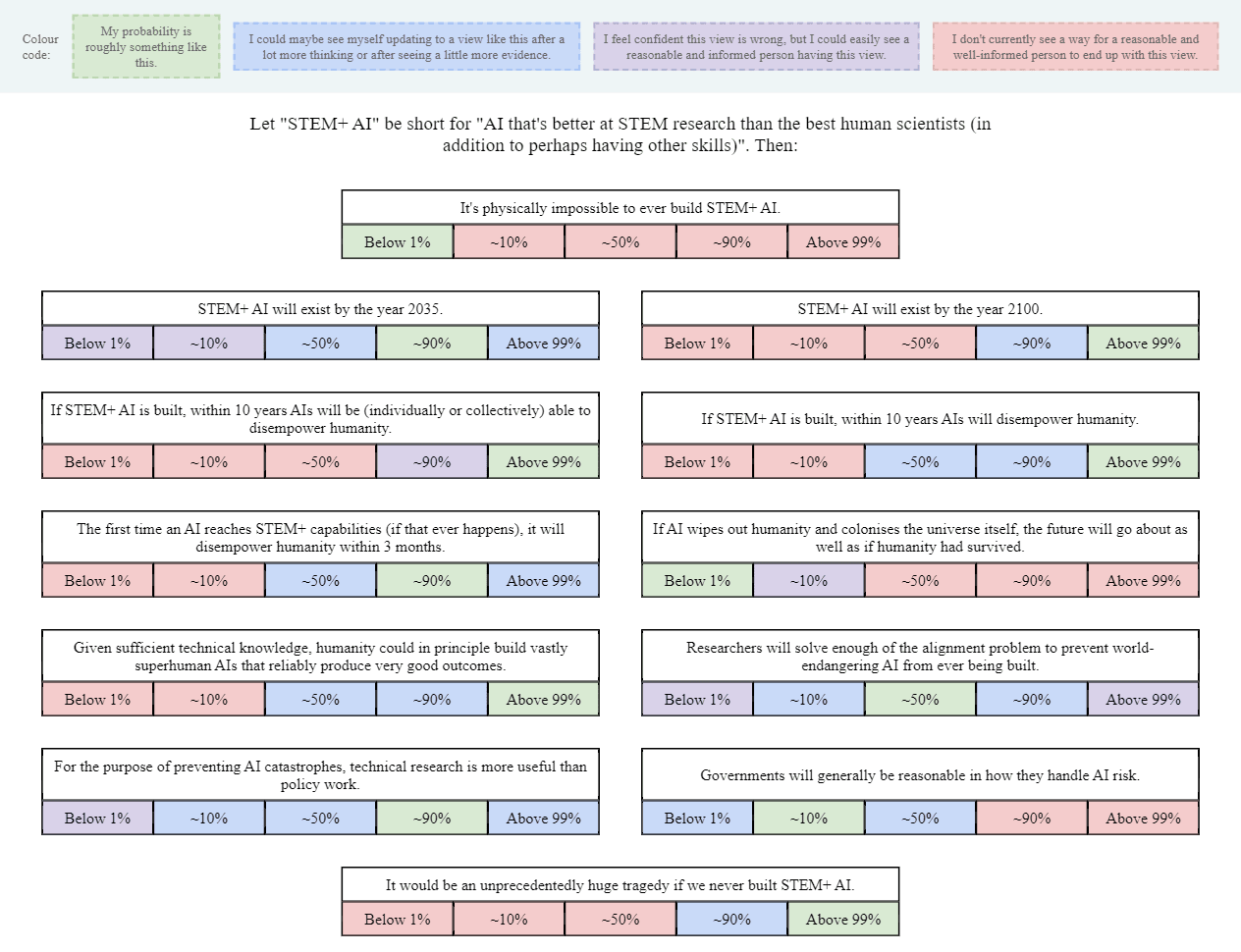

As a start, I made this image (also available as a Google Drawing):

(Added: Web version made by Tetraspace.)

I obviously left out lots of other important and interesting questions, but I think this is OK as a conversation-starter. I've encouraged Twitter regulars to share their own versions of this image, or similar images, as a nucleus for conversation (and a way to directly clarify what people's actual views are, beyond the stereotypes and slogans).

If you want to see a filled-out example, here's mine (though you may not want to look if you prefer to give answers that are less anchored): Google Drawing link.

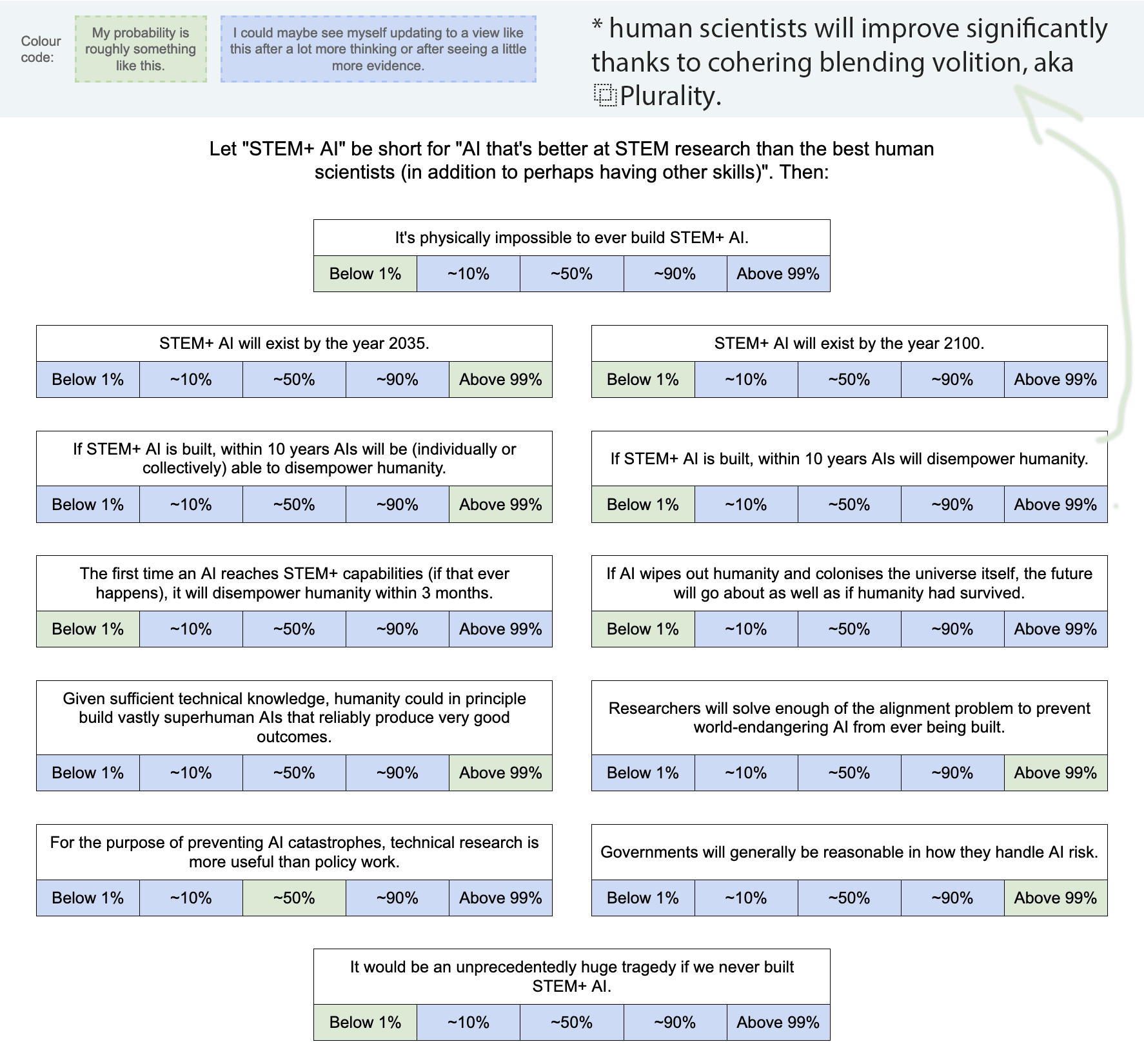

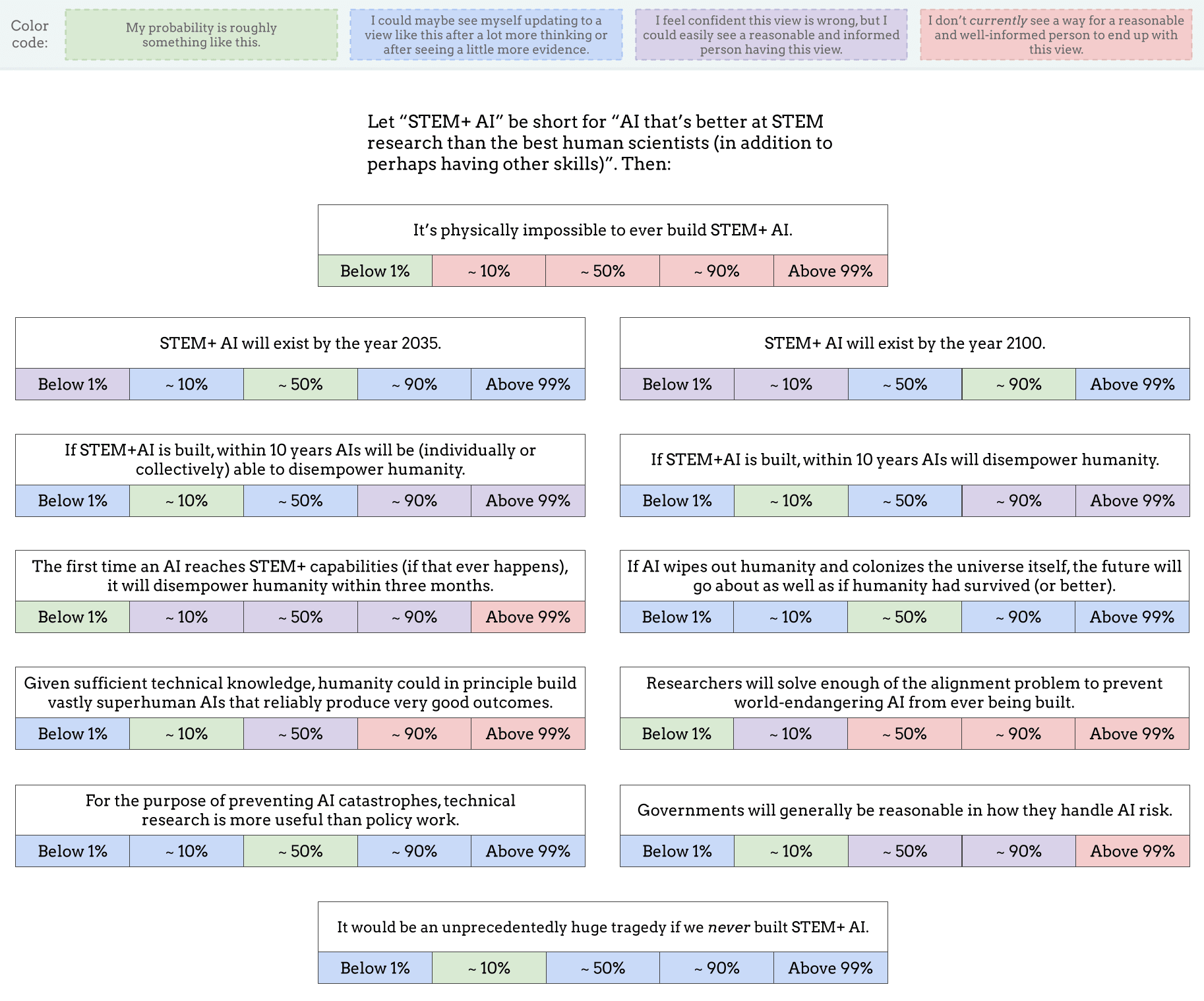

Thank you for this. Here's mine:

Always glad to update as new evidence arrives.

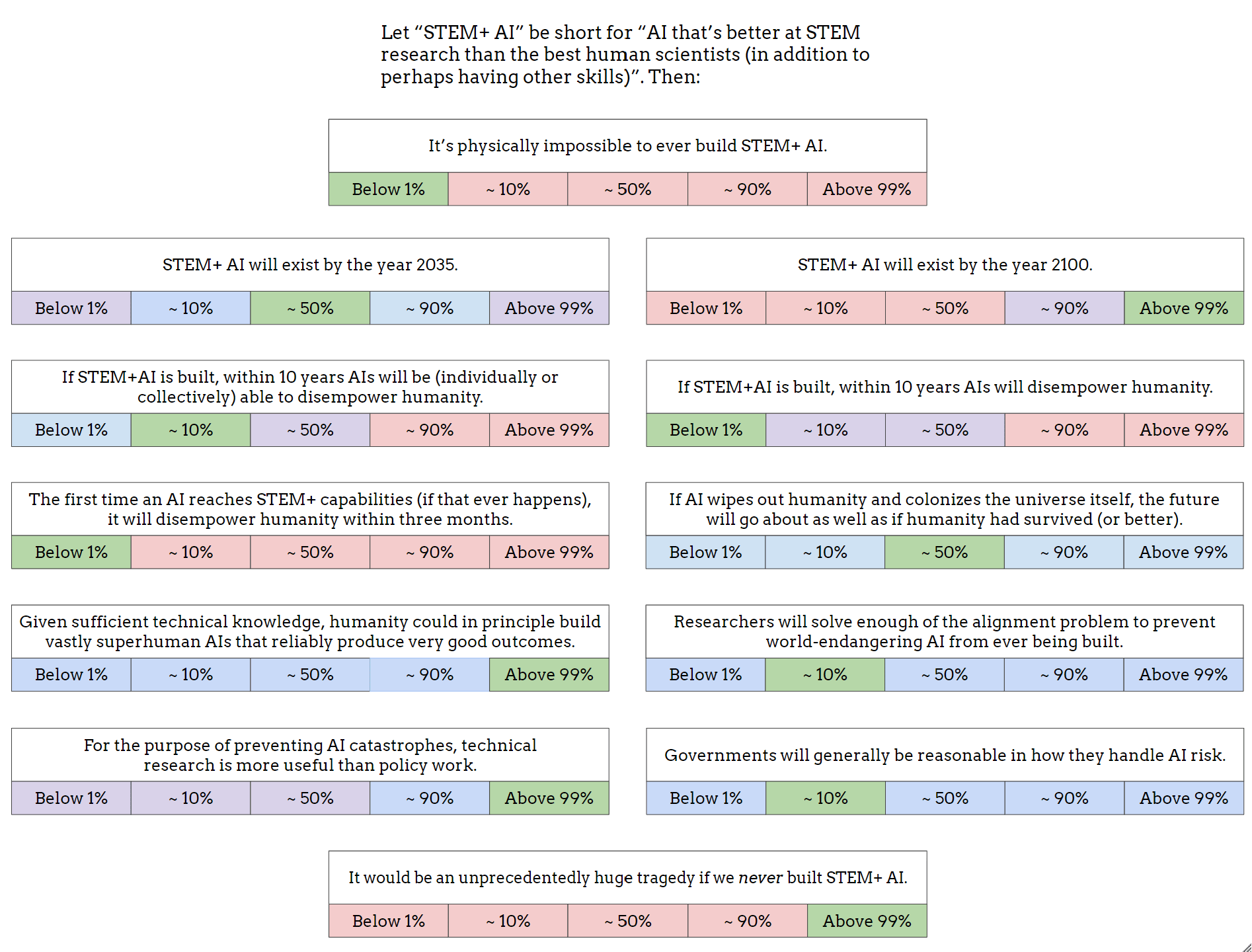

Based on my personal experience in pandemic resilience, additional wakeups can proceed swiftly as soon as a specific society-scale harm is realized.

Specifically, as we are waking up to over-reliance harms and addressing them (esp. within security OODA loops), it would buy time for good enough continuous alignment.