Based on my personal experience in pandemic resilience, additional wakeups can proceed swiftly as soon as a specific society-scale harm is realized.

Specifically, as we wake up to over-reliance harms and address them (esp. within security OODA loops), doing so would buy time for good-enough continuous alignment.

Based on recent conversations with policymakers, labs and journalists, I see increased coordination around societal evaluation & risk mitigation — (cyber)security mindset is now mainstream.

Also, imminent society-scale harm (e.g. contextual integrity harms caused by over-reliance & precision persuasion over roughly the past decade) has been shown to be effective in getting governments to consider risk reasonably.

Well, before 2016, I had no idea I'd serve in the public sector...

(The vTaiwan process was already modeled after CBV in 2015.)

Yes. The basic assumption (of my current day job) is that good-enough contextual integrity and continuous incentive alignment are solvable well within the slow takeoff we are currently in.

Something like a lightweight version of the off-the-shelf Vision Pro will do. Just as nonverbal cues can be transmitted more effectively with codec avatars, post-symbolic communication can approach telepathy with good-enough mental models facilitated by AI (not necessarily ASI).

Safer than implants is to connect at scale "telepathically" leveraging only full sensory bandwidth and much better coordination arrangements. That is the ↗️ direction of the depth-breadth spectrum here.

Yes, that, and a further focus on assistive AI systems that excel at connecting humans — I believe this is a natural outcome of the original CBV idea.

Nice to meet you too & thank you for the kind words. Yes, same person as AudreyTang. (I posted the map at audreyt.org as a proof of sorts.)

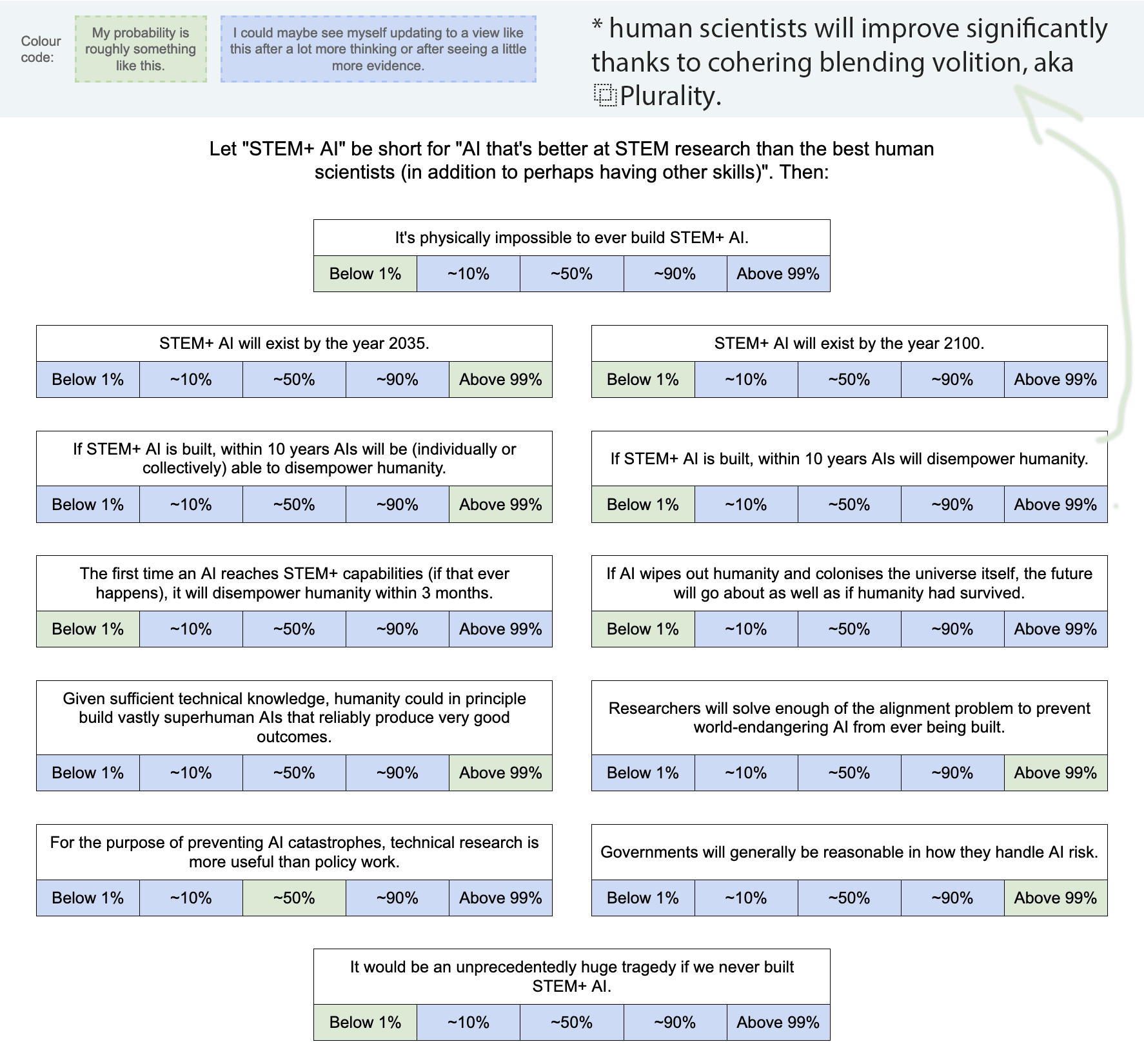

Thank you for this. Here's mine:

- It’s physically possible to build STEM+ AI (though it's OK if we collectively decide not to build it.)

- STEM+ AI will exist by the year 2035 but not by 2100 (human scientists will improve significantly thanks to coherent blended volition, aka ⿻Plurality).

- If STEM+ AI is built, within 10 years AIs will be able to disempower humanity (but won't do it).

- The future will be better if AI does not wipe out humanity.

- Given sufficient technical knowledge, humanity could in principle build vastly superhuman AIs that reliably produce very good outcomes.

- Researchers will produce good enough processes for continuous alignment.

- Technical research and policy work are ~equally useful.

- Governments will generally be reasonable in how they handle AI risk.

Always glad to update as new evidence arrives.

I wrote a summary in Business Weekly Taiwan (April 24):

https://sayit.archive.tw/2025-04-24-%E5%95%86%E5%91%A8%E5%B0%88%E6%AC%84ai-%E6%9C%AA%E4%BE%86%E5%AD%B8%E5%AE%B6%E7%9A%84-2027-%E5%B9%B4%E9%A0%90%E8%A8%80

https://sayit.archive.tw/2025-04-24-bw-column-an-ai-futurists-predictions-f

https://www.businessweekly.com.tw/archive/Article?StrId=7012220

An AI Futurist’s Predictions for 2027

When President Trump declared sweeping reciprocal tariffs, the announcement dominated headlines. Yet inside Silicon Valley’s tech giants and leading AI labs, an even hotter topic was “AI‑2027.com,” the new report from ex‑OpenAI researcher Daniel Kokotajlo and his team.

At OpenAI, Kokotajlo had two principal responsibilities. First, he was charged with sounding early alarms—anticipating the moment when AI systems could hack systems or deceive people, and designing defenses in advance. Second, he shaped research priorities so that the company’s time and talent were focused on work that mattered most.

The trust he earned as OpenAI’s in‑house futurist dates back to 2021, when he published a set of predictions for 2026, most of which have since come true. He foresaw two pivotal breakthroughs: conversational AI—exemplified by ChatGPT—captivating the public and weaving itself into everyday life, and “reasoning” AI spawning misinformation risks and even outright lies. He also predicted U.S. limits on advanced‑chip exports to China and AI beating humans in multi‑player games.

Conventional wisdom once held that ever‑larger models would simply perform better. Kokotajlo challenged that assumption, arguing that future systems would instead pause mid‑computation to “think,” improving accuracy without lengthy additional training runs. The idea was validated in 2024: dedicating energy to reasoning, rather than only to training, can yield superior results.

Since leaving OpenAI, he has mapped the global chip inventory, density, and distribution to model AI trajectories. His projection: by 2027, AI will possess robust powers of deception, and the newest systems may take their cues not from humans but from earlier generations of AI. If governments and companies race ahead solely to outpace competitors, serious alignment failures could follow, allowing AI to become an independent actor and slip human control by 2030. Continuous investment in safety research, however, can avert catastrophe and keep AI development steerable.

Before the tariff news, many governments were pouring money into AI. Now capital may be diverted to shore up companies hurt by the tariffs, squeezing safety budgets. Yet long‑term progress demands the opposite: sustained funding for safety measures and the disciplined use of high‑quality data to build targeted, reliable small models—so that AI becomes a help to humanity, not an added burden.