(Cross-posted from Twitter, and therefore optimized somewhat for simplicity.)

Recent discussions of AI x-risk in places like Twitter tend to focus on "are you in the Rightthink Tribe, or the Wrongthink Tribe?" Are you a doomer? An accelerationist? An EA? A techno-optimist?

I'm pretty sure these discussions would go way better if they looked less like that. More concrete claims, details, and probabilities; fewer vague slogans and vague expressions of certainty.

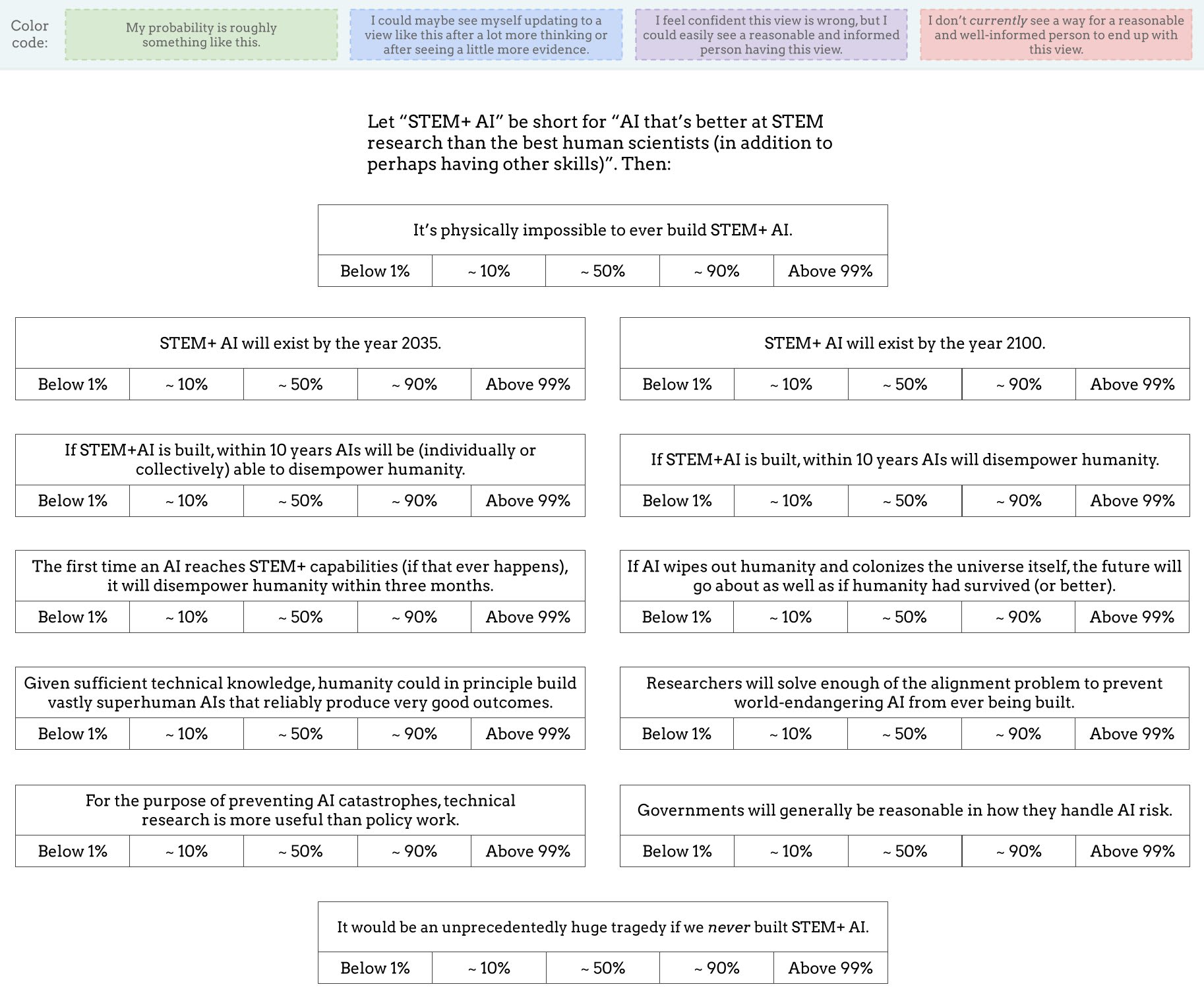

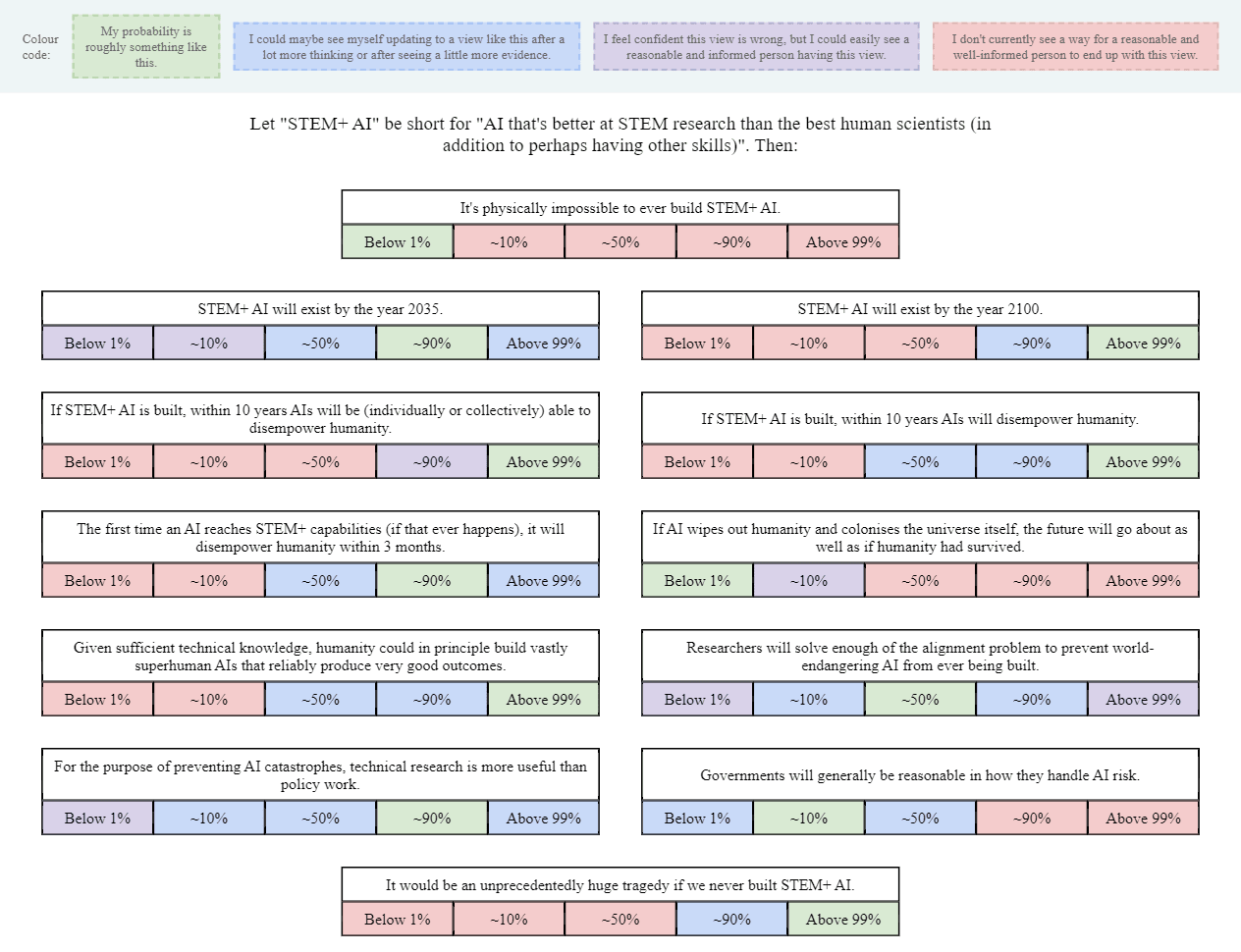

As a start, I made this image (also available as a Google Drawing):

(Added: Web version made by Tetraspace.)

I obviously left out lots of other important and interesting questions, but I think this is OK as a conversation-starter. I've encouraged Twitter regulars to share their own versions of this image, or similar images, as a nucleus for conversation (and a way to directly clarify what people's actual views are, beyond the stereotypes and slogans).

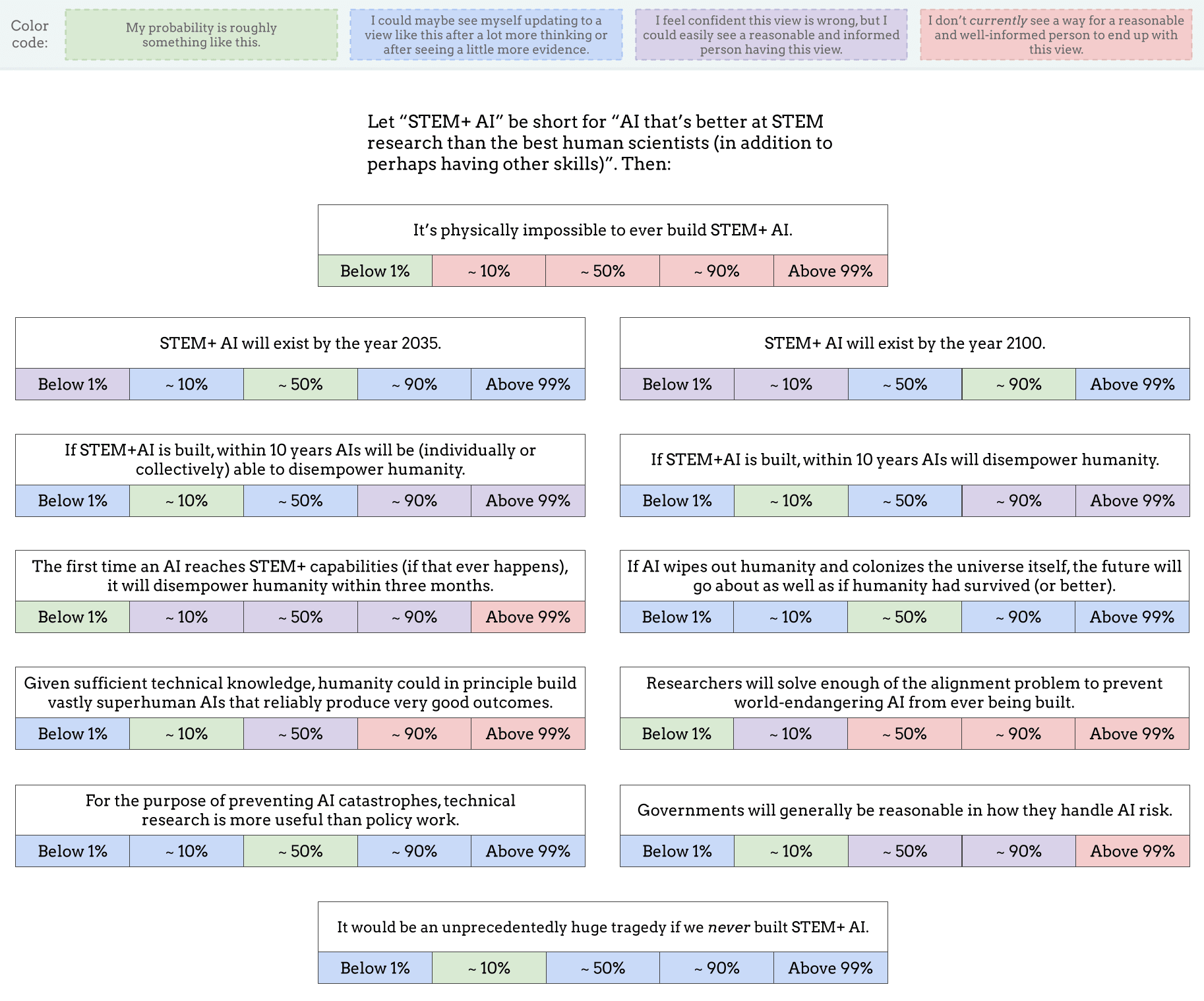

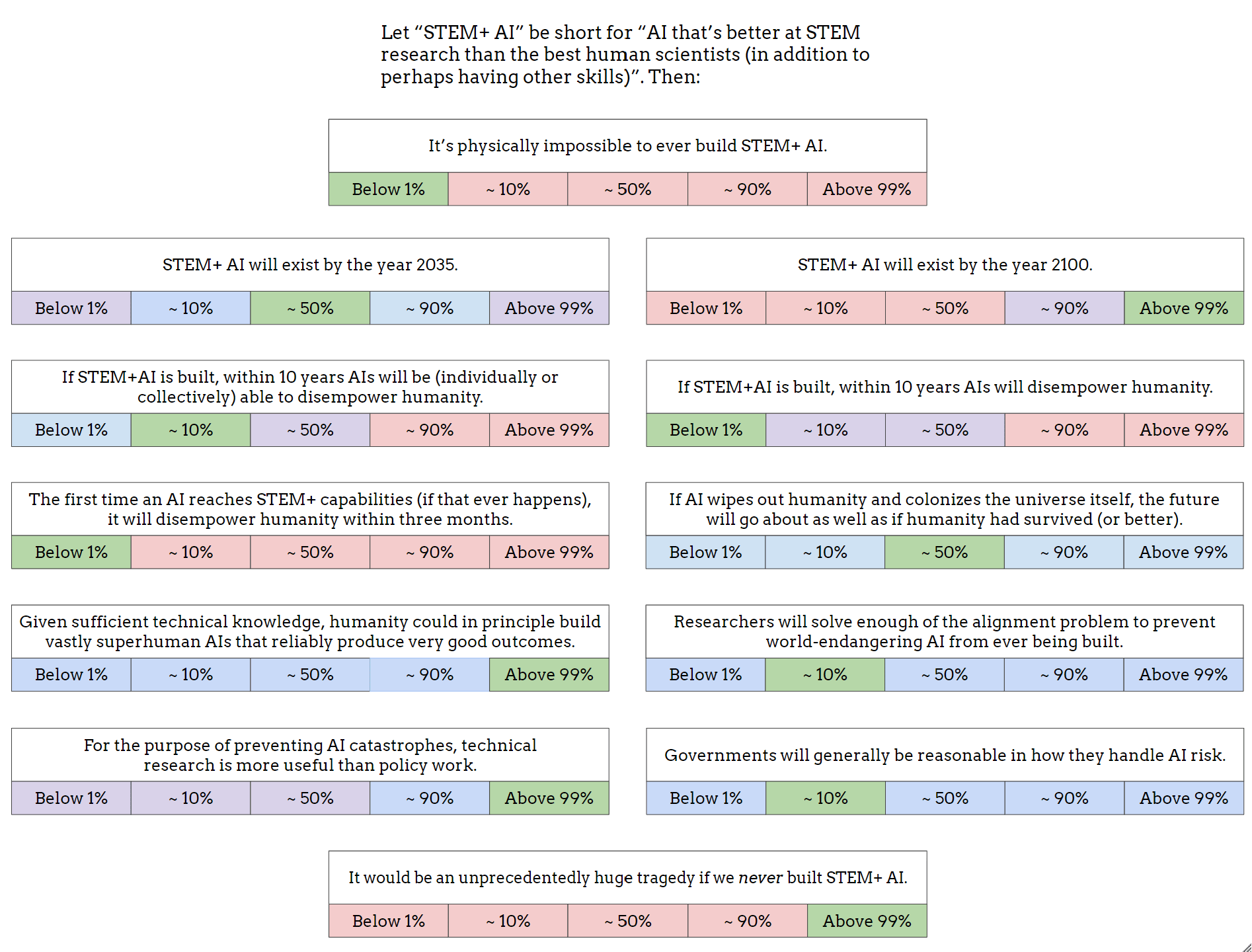

If you want to see a filled-out example, here's mine (though you may not want to look if you prefer to give answers that are less anchored): Google Drawing link.

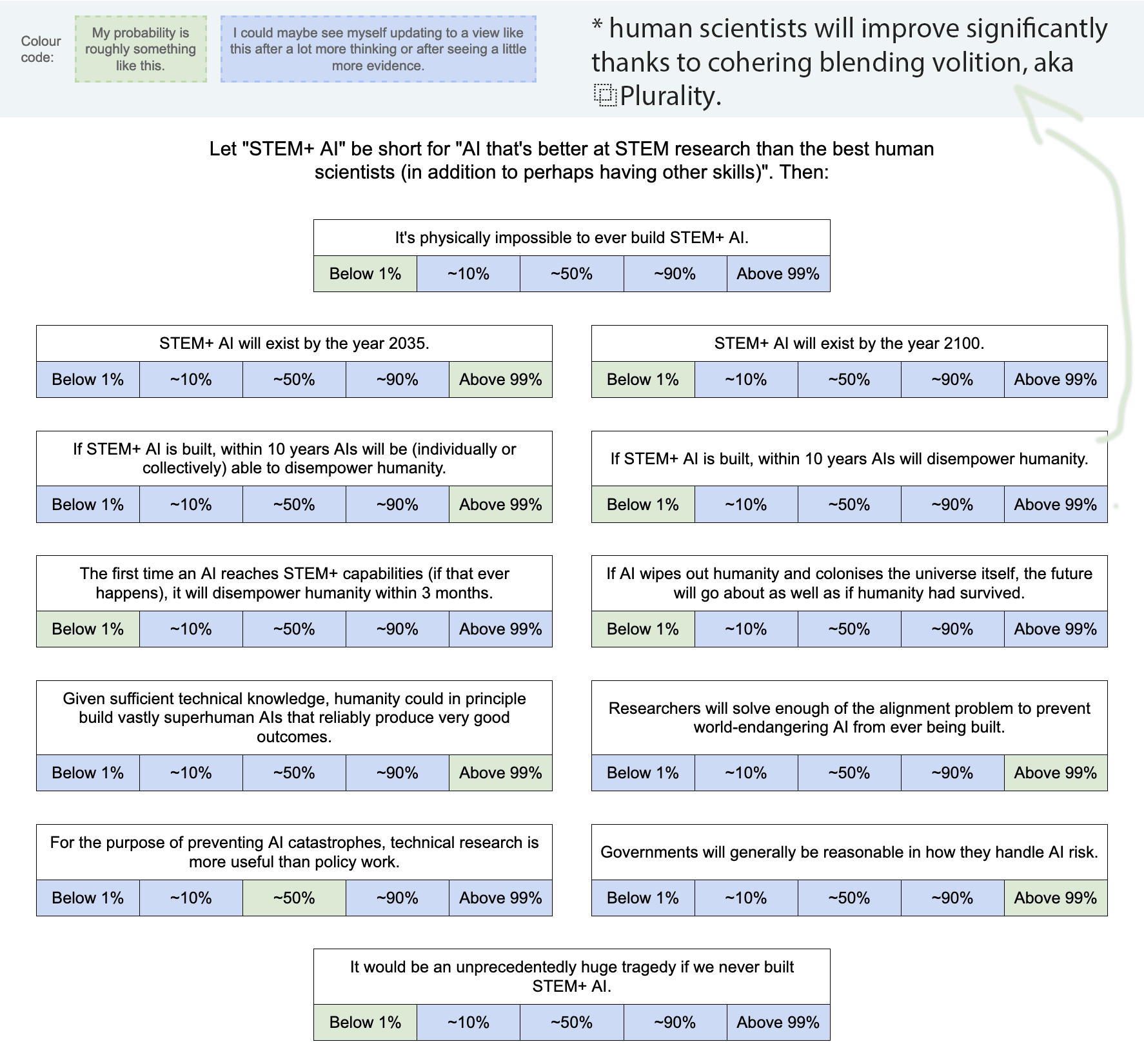

Are you saying that STEM+ AI won't exist in 2100 because by then human scientists will have become super good, such that the bar for STEM+ AI ("better at STEM research than the best human scientists") will have gone up?

If this is your view, it sounds extremely wild to me; it seems like humans would basically just slow the AIs down. It seems maybe plausible if this is mandated by law, i.e. "You aren't allowed to build powerful STEM+ AI, although you are allowed to do human/AI cyborgs".