(Cross-posted from Twitter, and therefore optimized somewhat for simplicity.)

Recent discussions of AI x-risk in places like Twitter tend to focus on "are you in the Rightthink Tribe, or the Wrongthink Tribe?". Are you a doomer? An accelerationist? An EA? A techno-optimist?

I'm pretty sure these discussions would go way better if they looked less like that. More concrete claims, details, and probabilities; fewer vague slogans and vague expressions of certainty.

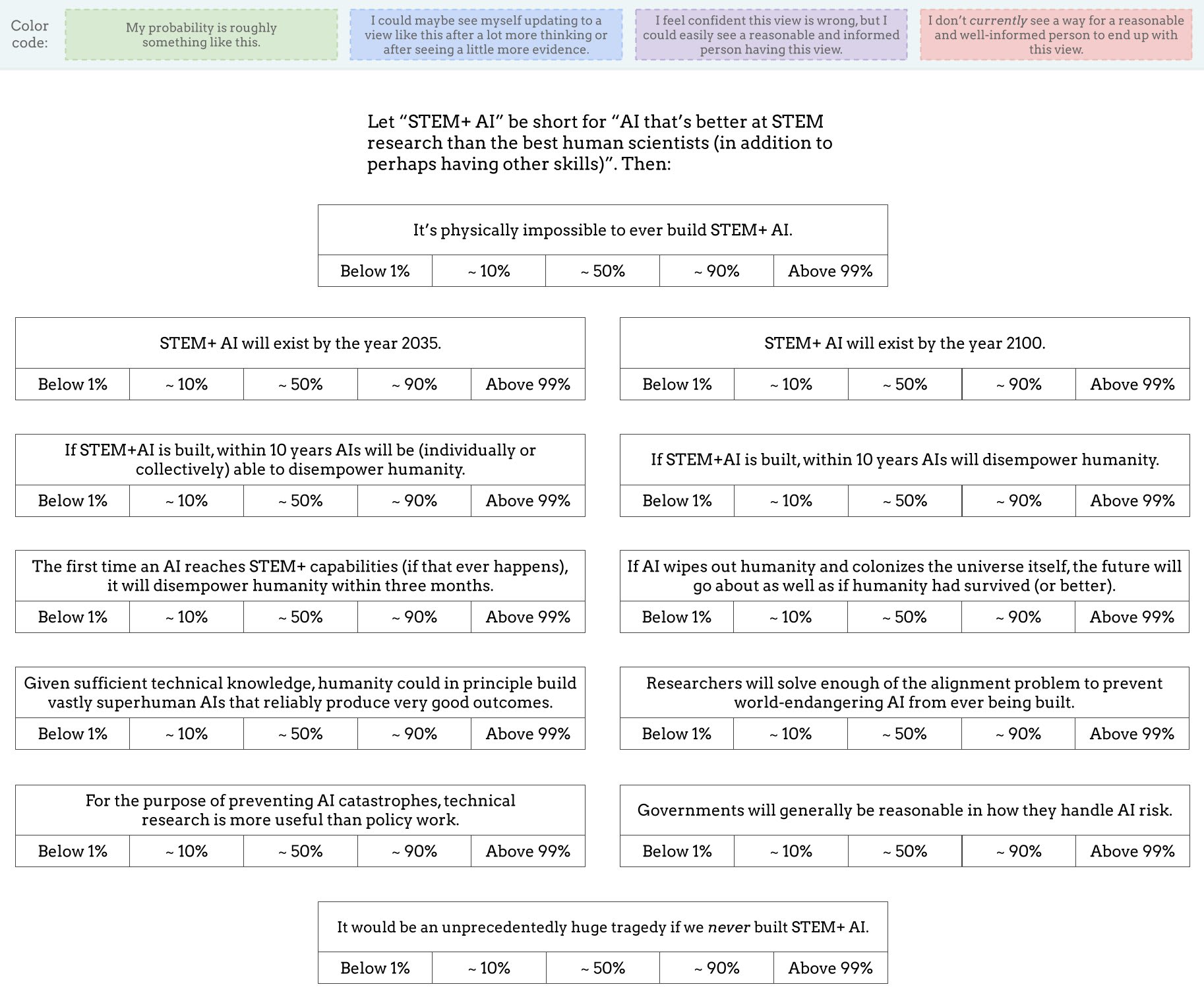

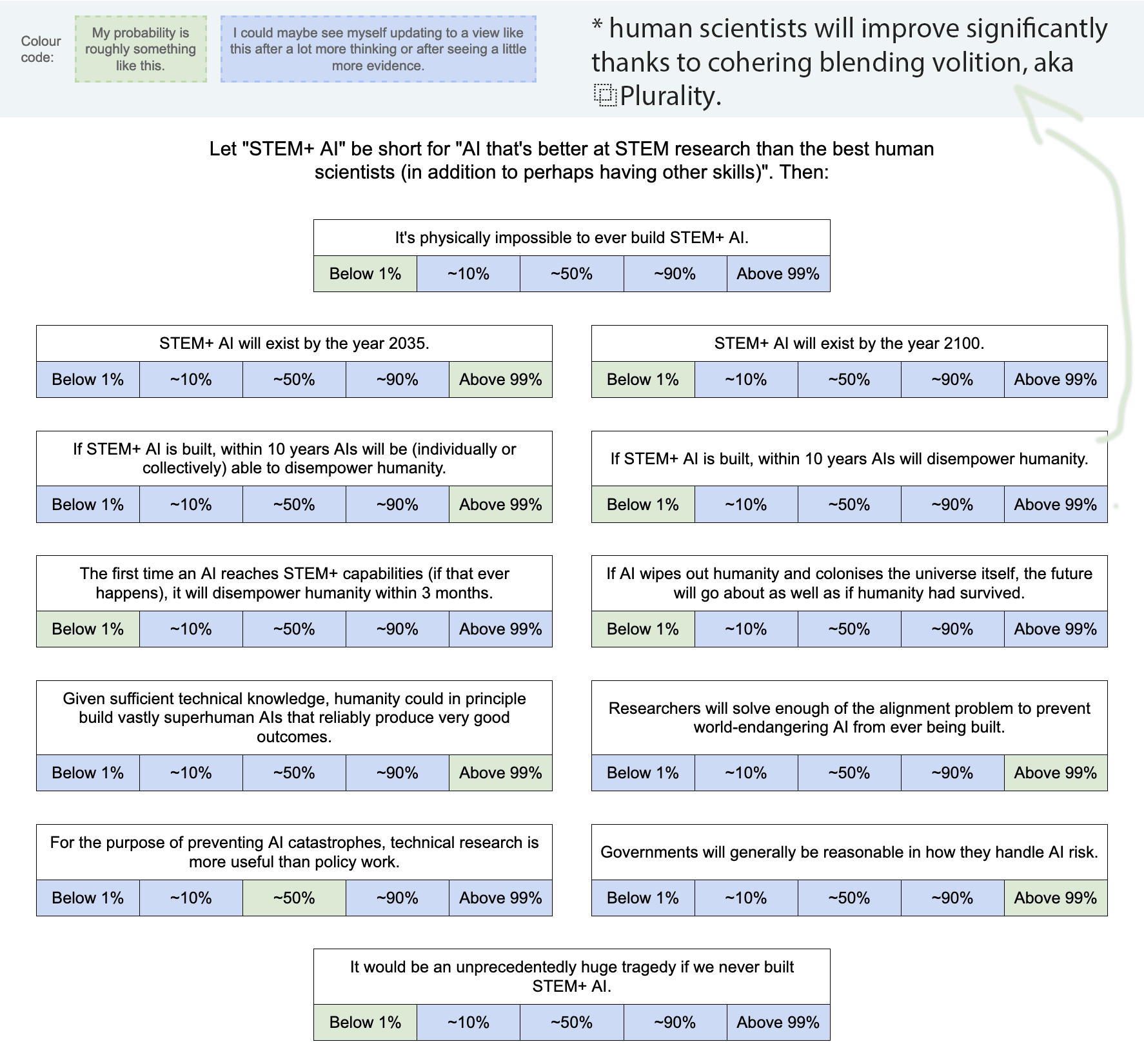

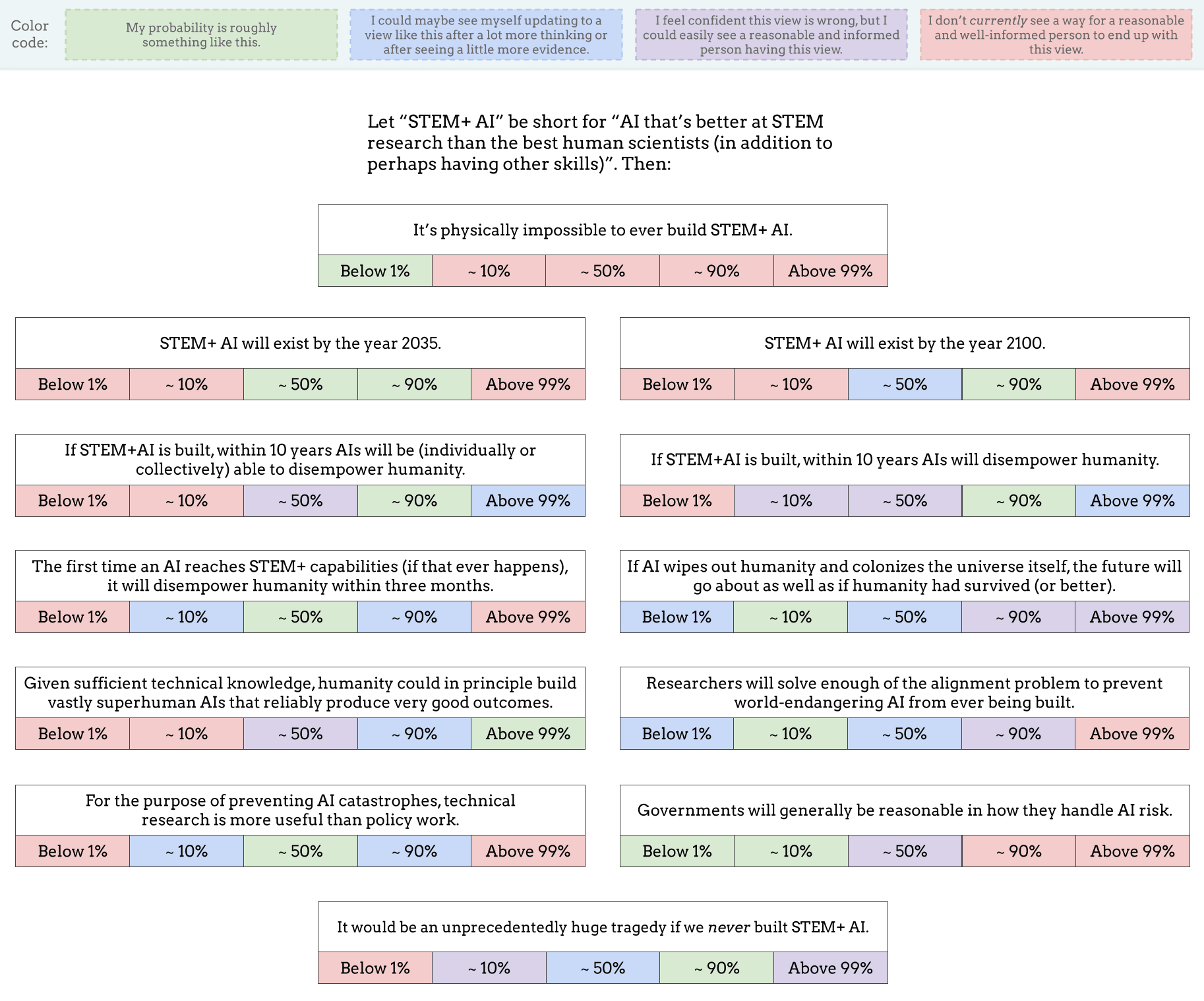

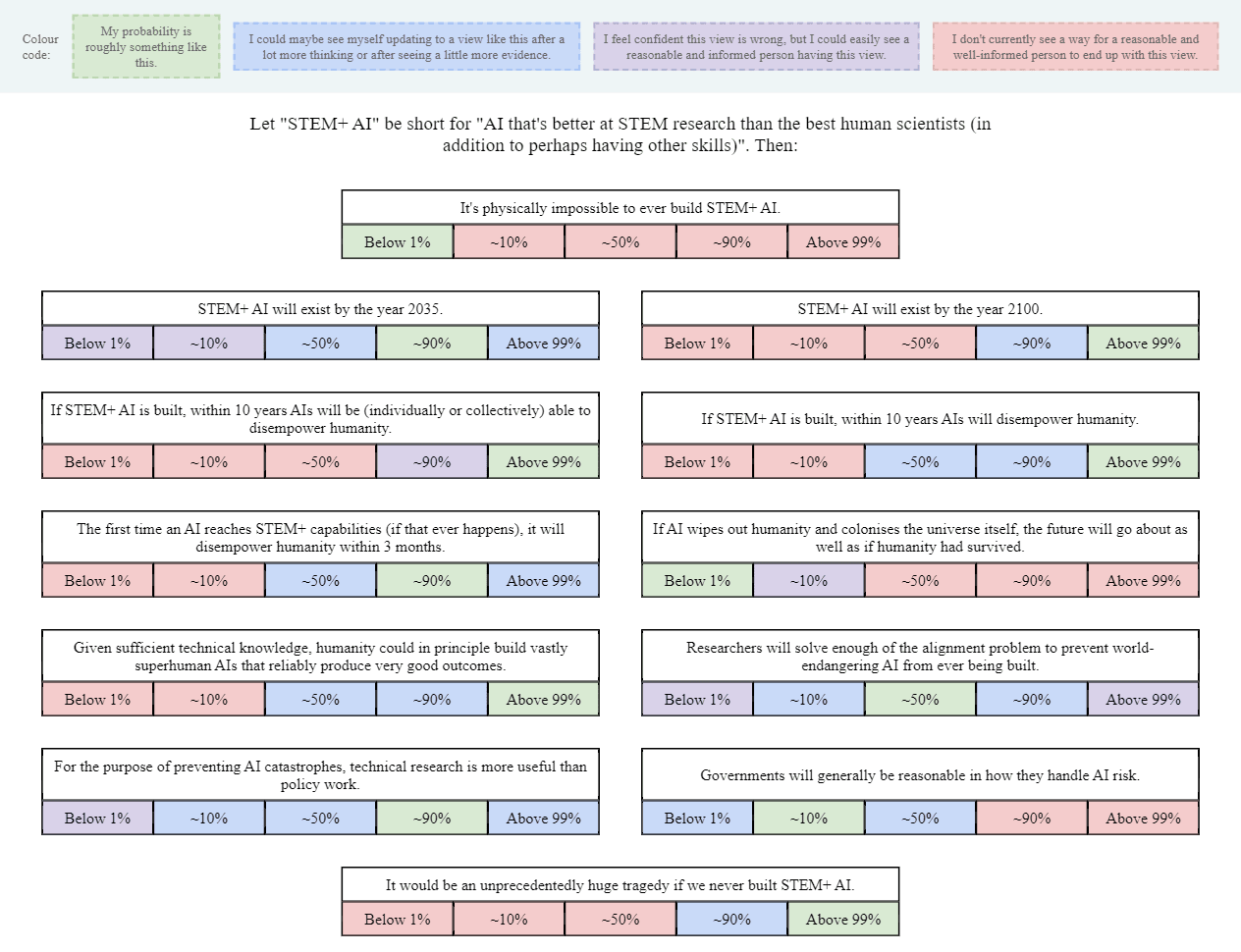

As a start, I made this image (also available as a Google Drawing):

(Added: Web version made by Tetraspace.)

I obviously left out lots of other important and interesting questions, but I think this is OK as a conversation-starter. I've encouraged Twitter regulars to share their own versions of this image, or similar images, as a nucleus for conversation (and a way to directly clarify what people's actual views are, beyond the stereotypes and slogans).

If you want to see a filled-out example, here's mine (though you may not want to look if you prefer to give answers that are less anchored): Google Drawing link.

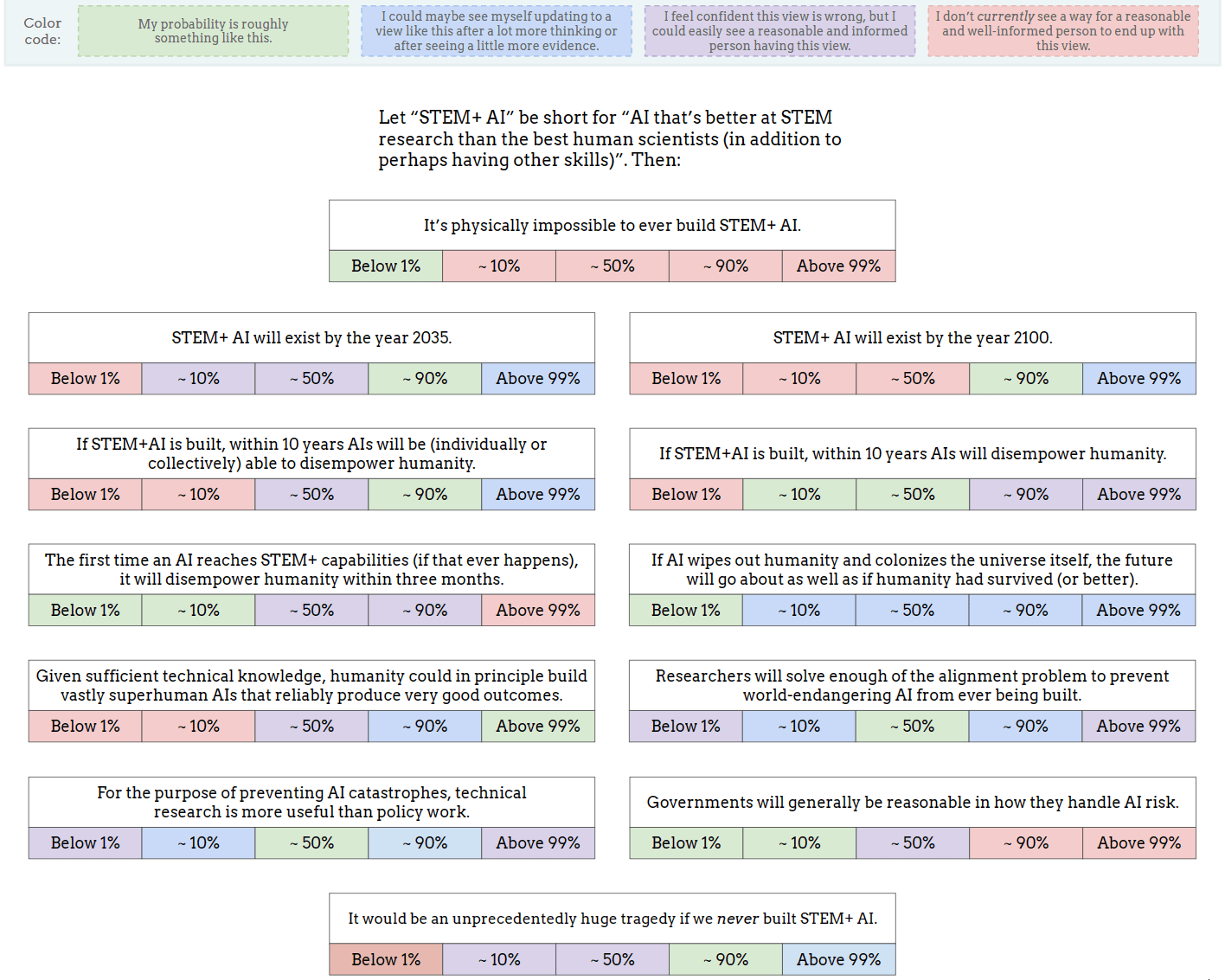

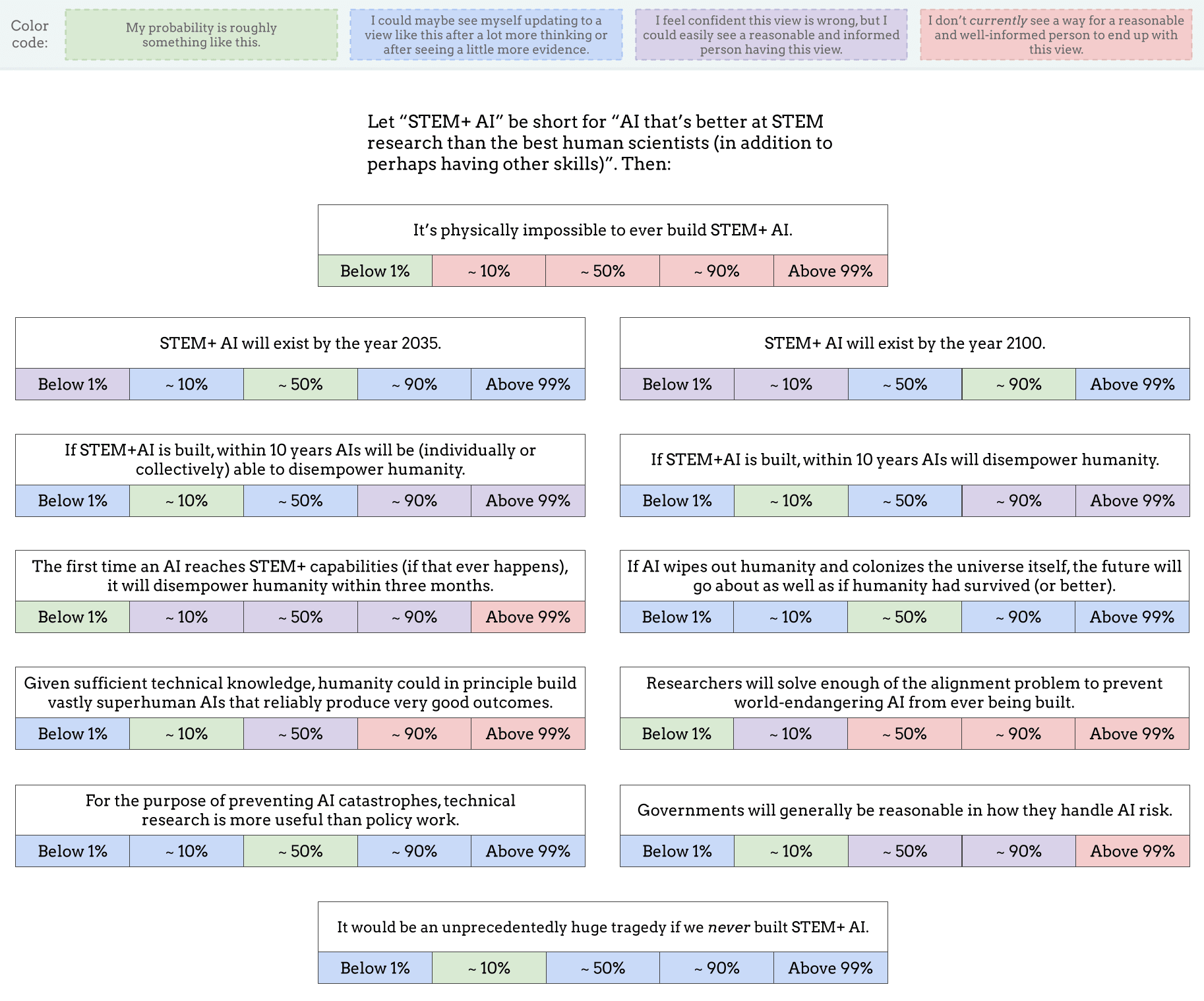

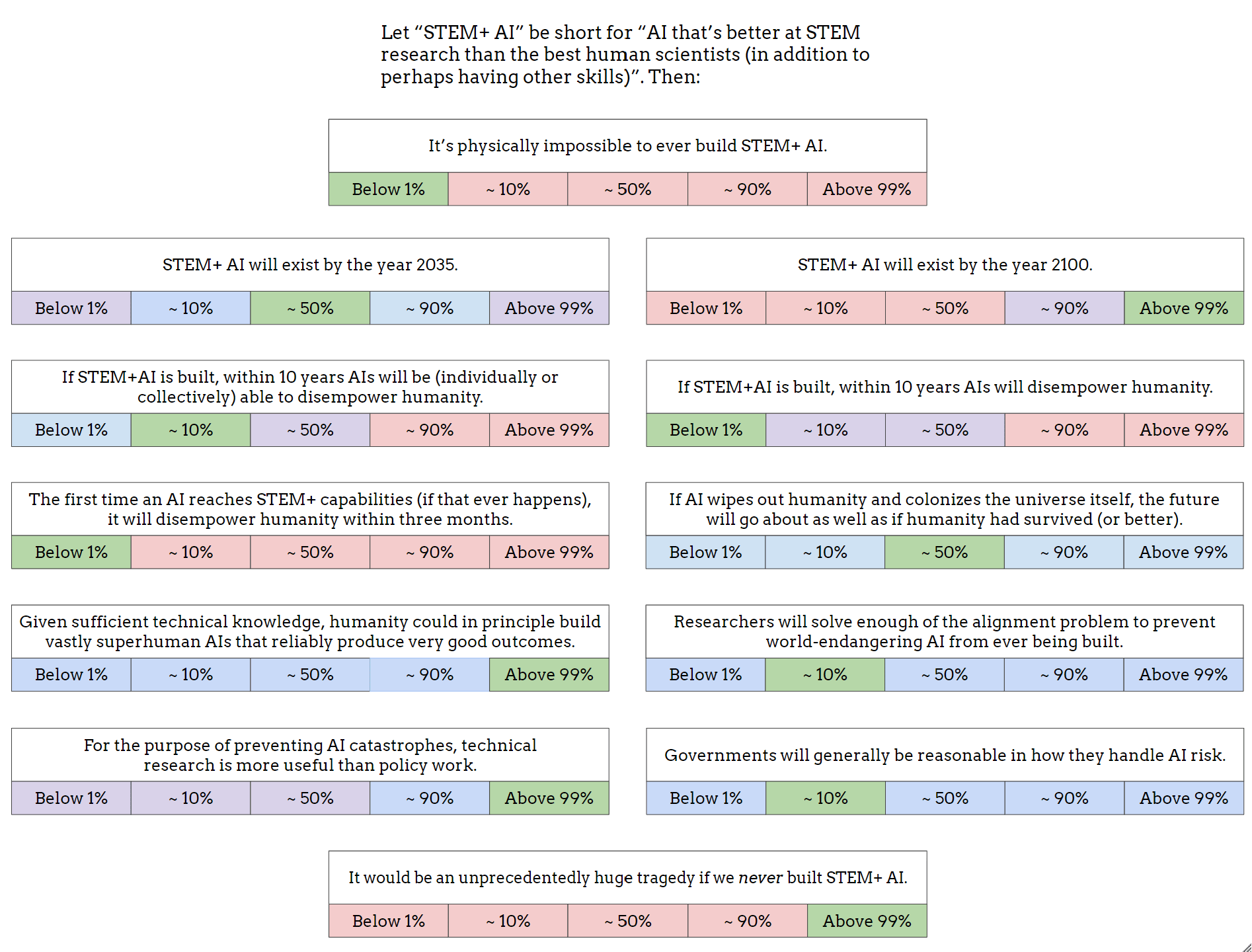

It’s physically impossible to ever build STEM+ AI.

This is tautological: by known physics, it cannot be impossible to do this.

STEM+ AI will exist by the year 2100.

Similarly tautological: a STEM+ machine can be developed by various recursive methods.

If STEM+AI is built, within 10 years AIs will disempower humanity.

This is orthogonal: a STEM+ machine need not have any goals of its own.

If STEM+AI is built, within 10 years AIs will be (individually or collectively) able to disempower humanity.

Humans have to make some catastrophically bad choices for this to even be possible.

The first time an AI reaches STEM+ capabilities (if that ever happens), it will disempower humanity within three months.

Foom requires physics to allow this kind of doubling rate. It likely doesn't, at least when starting from human-level tech.

Given sufficient technical knowledge, humanity could in principle build vastly superhuman AIs that reliably produce very good outcomes.

No state, no problems. I define "good" as "aligned with the user".

It would be an unprecedentedly huge tragedy if we never built STEM+ AI.

This is the early death of every human who will ever live for all time. Medical issues are too complex for human brains to ever solve them reliably. Adding some years with drugs or gene hacks, sure. Stopping every possible way someone can die, so that they witness their 200th and 2000th birthday? No way.

Perhaps so.