This month I lost a bunch of bets.

Back in early 2016 I bet at even odds that self-driving ride sharing would be available in 10 US cities by July 2023. Then I made similar bets a dozen times because everyone disagreed with me.

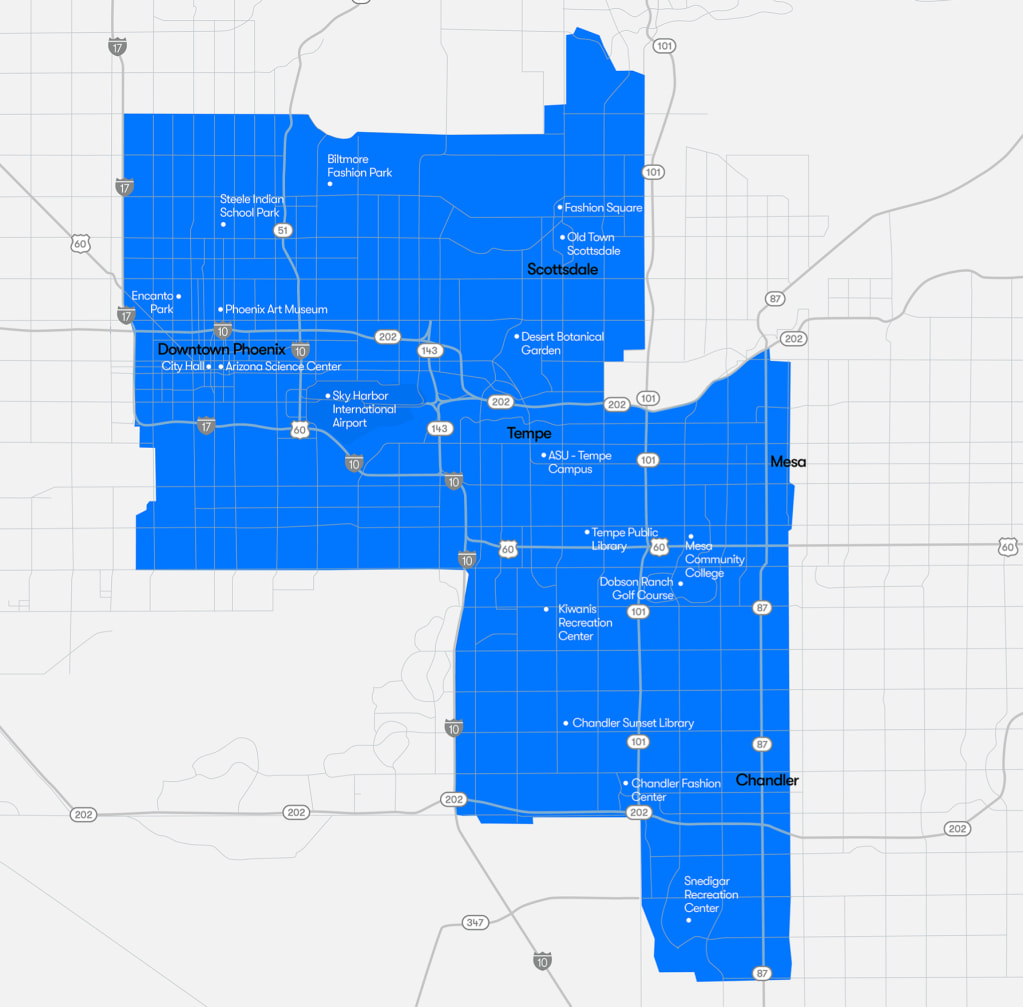

The first deployment to potentially meet our bar was Phoenix in 2022. I think Waymo is close to offering public rides in SF, and there are a few more cities being tested, but it looks like it will be at least a couple of years before we get 10 cities even if everything goes well.

Back in 2016 it looked plausible to me that the technology would be ready in 7 years. People I talked to in tech, in academia, and in the self-driving car industry were very skeptical. After talking with them it felt to me like they were overconfident. So I was happy to bet at even odds as a test of the general principle that 7 years is a long time and people are unjustifiably confident in extrapolating from current limitations.

In April of 2016 I gave a 60% probability to 10 cities. The main point of making the bets was to stake out my position and maximize volume; I was obviously not trying to extract profit, given that I was giving myself very little edge. In mid-2017 I said my probability was 50-60%, and by 2018 I was under 50%.

If 34-year-old Paul were looking at the same evidence that 26-year-old Paul had in 2016, I think I would have given it a 30-40% chance instead of a 60% chance. I had spent only 10-20 hours learning about the field, and while it’s true that 7 years is a long time, it’s also true that things take longer than you’d think, 10 cities is a lot, and expert consensus really does reflect a lot of information about barriers that aren’t easy to articulate clearly. 30% still would have made me more optimistic than a large majority of people I talked to, and so I still would have lost plenty of bets, but I would have made fewer bets and gotten better odds.

But I think 10% would have been about as unreasonable a prediction as 60%. The technology and regulation are mature enough to make deployment possible, so exactly when we get to 10 cities looks very contingent. If the technology were better, deployment would be significantly faster, and I think we should all have wide error bars about 7 years of tech progress. And the pandemic seems to have been a major setback for ride hailing. I’m not saying I got unlucky on any of these (my default guess is that the world we are in right now is the median), but all of these events are contingent enough that we should have had big error bars.

Lessons

People draw a lot of lessons from our collective experience with self-driving cars:

- Some people claim that there was wild overoptimism, but this does not match up with my experience. Investors were optimistic enough to make a bet on a speculative technology, but it seems like most experts and people in tech thought the technology was pretty unlikely to be ready by 2023. Almost everyone I talked to thought 50% was too high, and the three people I talked to who actually worked on self-driving cars went further and said it seemed crazy. The evidence I see for attributing wild optimism seems to be valuations (which could be justified even by a modest probability of success), vague headlines (which make no attempt to communicate calibrated predictions), and Elon Musk saying things.

- Relatedly, people sometimes treat self-driving as if it’s an easy AI problem that should be solved many years before, e.g., automated software engineering. But I think we really don’t know. Perceiving and quickly reacting to the world is one of the tasks humans have evolved to be excellent at, and driving could easily be as hard as (or harder than) being an engineer or scientist. This isn’t some post hoc rationalization: the claim that being a scientist is clearly hard and perception is probably easy was somewhat common in the mid-20th century but was out of fashion way before 2016 (see: Moravec’s paradox).

- Some people conclude from this example that reliability is really hard and will bottleneck applications in general. I think this is probably overindexing on a single example. Even for humans, driving has a reputation as a task that is unusually dependent on vigilance and reliability, where most of the minutes are pretty easy and where not messing up in rare exciting circumstances is the most important part of the job. Most jobs aren’t like that! Expecting reliability to be as much of a bottleneck for software engineering as for self-driving seems pretty ungrounded, given how different the job is for humans. Software engineers write tests and think carefully about their code; they don’t need to make a long sequence of snap judgments without being able to check their work. To the extent that exceptional moments matter, it’s more about being able to take opportunities than avoid mistakes.

- I think one of the most important lessons is that there’s a big gap between “pretty good” and “actually good enough.” This gap is much bigger than you’d guess if you just eyeballed performance on academic benchmarks and didn’t get pretty deep into the weeds. I think this will apply throughout ML; I think I underestimated the gap when looking in at self-driving cars from the outside, and I made a few similar mistakes in ML before I had spent years working in the field. That said, I still think you should have pretty broad uncertainty over exactly how long it will take to close this gap.

- A final lesson is that we should put more trust in skeptical priors. I think that’s right as far as it goes, and does apply just as well to impactful applications of AI more generally, but I want to emphasize that in absolute terms this is a pretty small update. When you think something is even odds, it’s pretty likely to happen and pretty likely not to happen. And most people had probabilities well below 50% and so they are even less surprised than I am. Over the last 7 years I’ve made quite a lot of predictions about AI, and I think I’ve had a similar rate of misses in both directions. (I also think my overall track record has been quite good, but you shouldn’t believe that.) Overall I’ve learned from the last 7 years to put more stock in certain kinds of skeptical priors, but it hasn’t been a huge effect.

The analogy to transformative AI

Beyond those lessons, I find the analogy to AI interesting. My bottom line is kind of similar in the two cases: I think 34-year-old Paul would have given roughly a 30% chance to self-driving cars in 10 cities by July 2023, and 34-year-old Paul now assigns roughly a 30% chance to transformative AI by July 2033. (By which I mean: systems as economically impactful as low-cost simulations of arbitrary human experts, which I think is enough to end life as we know it one way or the other.)

But my personal situation is almost completely different in the two cases: for self-driving cars I spent 10-20 hours looking into the issue and 1-2 hours trying to make a forecast, whereas for transformative AI I’ve spent thousands of hours thinking about the domain and hundreds on forecasting.

And the views of experts are similarly different. In self-driving cars, people I talked to in the field tended to think that 30% by July 2023 was too high, whereas the researchers working in AGI whom I most respect (and who I think have the best track records over the last 10 years) tend to think that 30% by July 2033 is too low. The views of the broader ML community and public intellectuals (and investors) seem similar in the two cases, but the views of people actually working on the technology are strikingly different.

The update from self-driving cars, and more generally from my short lifetime of seeing things take a surprisingly long time, has tempered my AI timelines. But not enough to get me below a 30% chance of truly crazy stuff within the next 10 years.
