Air purifiers are highly suboptimal and could be >2.5x better.

Some things I learned while researching air purifiers for my house, to reduce COVID risk during jam nights.

- An air purifier is simply a fan blowing through a filter, delivering a certain CFM (airflow in cubic feet per minute). The higher the filter resistance and lower the filter area, the more pressure your fan needs to be designed for, and the more noise it produces.

- HEPA filters are inferior to MERV 13-14 filters except for a few applications like cleanrooms. The technical advantage of HEPA filters is filtering out 99.97% of particles of any size, but this doesn't matter when MERV 13-14 filters can filter 77-88% of infectious aerosol particles at much higher airflow. The correct metric is CADR (clean air delivery rate), equal to airflow * efficiency. [1, 2]

- Commercial air purifiers use HEPA filters for marketing reasons and to sell proprietary filters. But an even larger flaw is that they have very small filter areas for no apparent reason. Therefore they are forced to use very high pressure fans, dramatically increasing noise.

- Originally people devised the Corsi-Rosenthal Box to maximize CADR. They're cheap but rather
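As a rough illustration of the CADR point above, here is a minimal sketch. The efficiencies come from the text (99.97% for HEPA, 77% as the low end for MERV 13); the airflow figures are made-up placeholders, not measurements, chosen only to show how a lower-resistance filter can come out ahead on CADR with the same fan.

```python
def cadr(airflow_cfm: float, efficiency: float) -> float:
    """Clean air delivery rate = airflow * single-pass filtration efficiency."""
    return airflow_cfm * efficiency

# Hypothetical airflows for the same fan: the denser HEPA filter is assumed
# to pass less air than the MERV 13 filter. Illustrative numbers only.
hepa = cadr(airflow_cfm=100, efficiency=0.9997)
merv13 = cadr(airflow_cfm=250, efficiency=0.77)

print(f"HEPA:    {hepa:.0f} CFM of clean air")
print(f"MERV 13: {merv13:.0f} CFM of clean air")
```

With these assumed airflows, the MERV 13 setup delivers roughly twice the clean air despite the lower per-pass efficiency; the real ratio depends on the actual fan and filter curves.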

Quick takes from ICML 2024 in Vienna:

- In the main conference, there were tons of papers mentioning safety/alignment but few of them are good as alignment has become a buzzword. Many mechinterp papers at the conference from people outside the rationalist/EA sphere are no more advanced than where the EAs were in 2022. [edit: wording]

- Lots of progress on debate. On the empirical side, a debate paper got an oral. On the theory side, Jonah Brown-Cohen of DeepMind proves that debate can be efficient even when the thing being debated is stochastic, a version of this paper from last year. Apparently there has been some progress on obfuscated arguments too.

- The Next Generation of AI Safety Workshop was kind of a mishmash of various topics associated with safety. Most of them were not related to x-risk, but there was interesting work on unlearning and other topics.

- The Causal Incentives Group at DeepMind developed a quantitative measure of goal-directedness, which seems promising for evals.

- Reception to my Catastrophic Goodhart paper was decent. An information theorist said there were good theoretical reasons the two settings we studied-- KL divergence and best-of-n-- behaved similarly.

- OpenAI gav

The cost of goods has the same units as the cost of shipping: $/kg. Comparing the two lets you understand how the economy works, e.g. why construction material sourcing and drink bottling have to be local, but oil tankers exist.

- An iPhone costs $4,600/kg, about the same as SpaceX charges to launch it to orbit. [1]

- Beef, copper, and off-season strawberries are $11/kg, about the same as a 75kg person taking a three-hour, 250km Uber ride costing $3/km.

- Oranges and aluminum are $2-4/kg, about the same as flying them to Antarctica. [2]

- Rice and crude oil are ~$0.60/kg, about the same as the $0.72 it costs to ship them 5000km across the US via truck. [3,4] Palm oil, soybean oil, and steel are around this price range, with wheat being cheaper. [3]

- Coal and iron ore are $0.10/kg, significantly more than the cost of shipping them around the entire world via smallish (Handysize) bulk carriers. Large bulk carriers are another 4x more efficient [6].

- Water is very cheap, with tap water $0.002/kg in NYC. But shipping via tanker is also very cheap, so you can ship it maybe 1000 km before equaling its cost.
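The comparisons in the list above can be checked with a few lines of arithmetic. This is a minimal sketch using only figures quoted in the list; it assumes the $0.72 truck figure is per kilogram, and it backs out the tanker rate from the "1000 km before equaling its cost" statement rather than from an independent source. The helper name `breakeven_km` is made up for illustration.

```python
# Figures taken from the list above.
uber_cost_per_kg = (250 * 3.0) / 75      # 250 km at $3/km, carrying a 75 kg person
truck_cost_per_kg_km = 0.72 / 5000       # assumed: $0.72 moves 1 kg 5000 km by truck
tanker_cost_per_kg_km = 0.002 / 1000     # implied by the tap-water bullet, not measured

def breakeven_km(good_price_per_kg: float, ship_cost_per_kg_km: float) -> float:
    """Distance at which the shipping bill equals the price of the good itself."""
    return good_price_per_kg / ship_cost_per_kg_km

print(f"Uber ride: ${uber_cost_per_kg:.2f}/kg")
print(f"Rice by truck breaks even at {breakeven_km(0.60, truck_cost_per_kg_km):.0f} km")
print(f"Coal by truck breaks even at {breakeven_km(0.10, truck_cost_per_kg_km):.0f} km")
print(f"Tap water by truck breaks even at {breakeven_km(0.002, truck_cost_per_kg_km):.0f} km")
```

Under these assumptions the break-even distance scales directly with the price per kilogram, which is the sense in which cheap, heavy goods have to be sourced locally unless they can travel by ship.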

It's really impressive that for the price of a winter strawberry, we can ship a strawberry-sized lump of...

People with p(doom) > 50%: would any concrete empirical achievements on current or near-future models bring your p(doom) under 25%?

Answers could be anything from "the steering vector for corrigibility generalizes surprisingly far" to "we completely reverse-engineer GPT4 and build a trillion-parameter GOFAI without any deep learning".

A dramatic advance in the theory of predicting the regret of RL agents. So given a bunch of assumptions about the properties of an environment, we could upper bound the regret with high probability. Maybe have a way to improve the bound as the agent learns about the environment. The theory would need to be flexible enough that it seems like it should keep giving reasonable bounds if the agent is doing things like building a successor. I think most agent foundations research can be framed as trying to solve a sub-problem of this problem, or a variant of this problem, or understand the various edge cases.

If we can empirically test this theory in lots of different toy environments with current RL agents, and the bounds are usually pretty tight, then that'd be a big update for me. Especially if we can deliberately create edge cases that violate some assumptions and can predict when things will break from which assumptions we violated.

(although this might not bring doom below 25% for me, depends also on race dynamics and the sanity of the various decision-makers).

[edit: pinned to profile]

The bulk of my p(doom), certainly >50%, comes mostly from a pattern we're used to, let's call it institutional incentives, being instantiated with AI help towards an end where eg there's effectively a competing-with-humanity nonhuman ~institution, maybe guided by a few remaining humans. It doesn't depend strictly on anything about AI, and solving any so-called alignment problem for AIs without also solving war/altruism/disease completely - or in other words, in a leak-free way - not just partially, means we get what I'd call "doom", ie worlds where malthusian-hells-or-worse are locked in.

If not for AI, I don't think we'd have any shot of solving something so ambitious; but the hard problem that gets me below 50% would be serious progress on something-around-as-good-as-CEV-is-supposed-to-be - something able to make sure it actually gets used to effectively-irreversibly reinforce that all beings ~have a non-torturous time, enough fuel, enough matter, enough room, enough agency, enough freedom, enough actualization.

If you solve something about AI-alignment-to-current-strong-agents, right now, that will on net get used primarily as a weapon to reinforce the ...

Getting up to "7. Worst-case training process transparency for deceptive models" on my transparency and interpretability tech tree on near-future models would get me there.

The biggest swings to my p(doom) will probably come from governance/political/social stuff rather than from technical stuff -- I think we could drive p(doom) down to <10% if only we had decent regulation and international coordination in place. (E.g. CERN for AGI + ban on rogue AGI projects)

That said, there are probably a bunch of concrete empirical achievements that would bring my p(doom) down to less than 25%. evhub already mentioned some mechinterp stuff. I'd throw in some faithful CoT stuff (e.g. if someone magically completed the agenda I'd been sketching last year at OpenAI, so that we could say "for AIs trained in such-and-such a way, we can trust their CoT to be faithful w.r.t. scheming because they literally don't have the capability to scheme without getting caught, we tested it; also, these AIs are on a path to AGI; all we have to do is keep scaling them and they'll get to AGI-except-with-the-faithful-CoT-property").

Maybe another possibility would be something along the lines of W2SG working really well for some set of core concepts including honesty/truth. So that we can with confidence say "Apply these techniques to a giant pretrained LLM, and then you'll get it to classify sentences by truth-value, no seriously we are confident that's really what it's doing, and also, our interpretability analysis shows that if you then use it as a RM to train an agent, the agent will learn to never say anything it thinks is false--no seriously it really has internalized that rule in a way that will generalize."

Oh yeah, absolutely.

If NAH for generally aligned ethics and morals ends up being the case, then corrigibility efforts that would allow Saudi Arabia to have an AI model that outs gay people to be executed instead of refusing, or allows North Korea to propagandize the world into thinking its leader is divine, or allows Russia to fire nukes while perfectly intercepting MAD retaliation, or enables drug cartels to assassinate political opposition around the world, or allows domestic terrorists to build a bioweapon that ends up killing off all humans - the list of doomsday and nightmare scenarios of corrigible AI that executes on human-provided instructions and enables even the worst instances of human hegemony to flourish paves the way to many dooms.

Yes, AI may certainly end up being its own threat vector. But humanity has had it beat for a long while now in how long and how broadly we've been a threat unto ourselves. At the current rate, a superintelligent AI just needs to wait us out if it wants to be rid of us, as we're pretty steadfastly marching ourselves to our own doom. Even if superintelligent AI wanted to save us, I am extremely doubtful it would be able to be successful.

We ca...

Eight beliefs I have about technical alignment research

Written up quickly; I might publish this as a frontpage post with a bit more effort.

- Conceptual work on concepts like “agency”, “optimization”, “terminal values”, “abstractions”, “boundaries” is mostly intractable at the moment.

- Success via “value alignment” alone— a system that understands human values, incorporates these into some terminal goal, and mostly maximizes for this goal, seems hard unless we’re in a very easy world because this involves several fucked concepts.

- Whole brain emulation probably won’t happen in time because the brain is complicated and biology moves slower than CS, being bottlenecked by lab work.

- Most progress will be made using simple techniques and create artifacts publishable in top journals (or would be if reviewers understood alignment as well as e.g. Richard Ngo).

- The core story for success (>50%) goes something like:

- Corrigibility can in practice be achieved by instilling various cognitive properties into an AI system, which are difficult but not impossible to maintain as your system gets pivotally capable.

- These cognitive properties will be a mix of things from normal ML fields (safe RL), things tha

Agency/consequentialism is not a single property.

It bothers me that people still ask the simplistic question "will AGI be agentic and consequentialist by default, or will it be a collection of shallow heuristics?". A consequentialist utility maximizer is just a mind with a bunch of properties that tend to make it capable, incorrigible, and dangerous. These properties can exist independently, and the first AGI probably won't have all of them, so we should be precise about what we mean by "agency". Off the top of my head, here are just some of the qualities included in agency:

- Consequentialist goals that seem to be about the real world rather than a model/domain

- Complete preferences between any pair of worldstates

- Tends to cause impacts disproportionate to the size of the goal (no low impact preference)

- Resists shutdown

- Inclined to gain power (especially for instrumental reasons)

- Goals are unpredictable or unstable (like instrumental goals that come from humans' biological drives)

- Goals usually change due to internal feedback, and it's difficult for humans to change them

- Willing to take all actions it can conceive of to achieve a goal, including those that are unlikely on some prior

See Yudko...

I'm a little skeptical of your contention that all these properties are more-or-less independent. Rather, there is a strong feeling that all/most of these properties are downstream of a core of agentic behaviour that is inherent to the notion of true general intelligence. I view the fact that LLMs are not agentic as further evidence that it's a conceptual error to classify them as true general intelligences, not as evidence that AI risk is low. It's a bit like if in the 1800s somebody says flying machines will be dominant weapons of war in the future and gets rebutted by 'hot gas balloons are only used for reconnaissance in war, they aren't very lethal. Flying machines won't be a decisive military technology.'

I don't know Nate's views exactly but I would imagine he would hold a similar view (do correct me if I'm wrong). In any case, I imagine you are quite familiar with my position here.

I'd be curious to hear more about where you're coming from.

I think the framing "alignment research is preparadigmatic" might be heavily misunderstood. The term "preparadigmatic" of course comes from Thomas Kuhn's The Structure of Scientific Revolutions. My reading of this says that a paradigm is basically an approach to solving problems which has been proven to work, and that the correct goal of preparadigmatic research should be to do research generally recognized as impressive.

For example, Kuhn says in chapter 2 that "Paradigms gain their status because they are more successful than their competitors in solving a few problems that the group of practitioners has come to recognize as acute." That is, lots of researchers have different ontologies/approaches, and paradigms are the approaches that solve problems that everyone, including people with different approaches, agrees to be important. This suggests that to the extent alignment is still preparadigmatic, we should try to solve problems recognized as important by, say, people in each of the five clusters of alignment researchers (e.g. Nate Soares, Dan Hendrycks, Paul Christiano, Jan Leike, David Bau).

I think this gets twisted in some popular writings on LessWrong. John Wentworth w...

Possible post on suspicious multidimensional pessimism:

I think MIRI people (specifically Soares and Yudkowsky but probably others too) are more pessimistic than the alignment community average on several different dimensions, both technical and non-technical: morality, civilizational response, takeoff speeds, probability of easy alignment schemes working, and our ability to usefully expand the field of alignment. Some of this is implied by technical models, and MIRI is not more pessimistic in every possible dimension, but it's still awfully suspicious.

I strongly suspect that one of the following is true:

- the MIRI "optimism dial" is set too low

- everyone else's "optimism dial" is set too high. (Yudkowsky has said this multiple times in different contexts)

- There are common generators that I don't know about that are not just an "optimism dial", beyond MIRI's models

I'm only going to actually write this up if there is demand; the full post will have citations which are kind of annoying to find.

After working at MIRI (loosely advised by Nate Soares) for a while, I now have more nuanced views and also takes on Nate's research taste. It seems kind of annoying to write up so I probably won't do it unless prompted.

Edit: this is now up

In terms of developing better misalignment risk countermeasures, I think the most important questions are probably:

- How to evaluate whether models should be trusted or untrusted: currently I don't have a good answer and this is bottlenecking the efforts to write concrete control proposals.

- How AI control should interact with AI security tools inside labs.

More generally:

- How can we get more evidence on whether scheming is plausible?

- How scary is underelicitation? How much should the results about password-locked models or arguments about being able to generate small numbers of high-quality labels or demonstrations affect this?

You should update by +-1% on AI doom surprisingly frequently

This is just a fact about how stochastic processes work. If your p(doom) is Brownian motion in 1% steps starting at 50% and stopping once it reaches 0 or 1, then there will be about 50^2=2500 steps of size 1%. This is a lot! If we get all the evidence for whether humanity survives or not uniformly over the next 10 years, then you should make a 1% update 4-5 times per week. In practice there won't be as many due to heavy-tailedness in the distribution concentrating the updates in fewer events, and the fact you don't start at 50%. But I do believe that evidence is coming in every week such that ideal market prices should move by 1% on maybe half of weeks, and it is not crazy for your probabilities to shift by 1% during many weeks if you think about it often enough. [Edit: I'm not claiming that you should try to make more 1% updates, just that if you're calibrated and think about AI enough, your forecast graph will tend to have lots of >=1% week-to-week changes.]

The general version of this statement is something like: if your beliefs satisfy the law of total expectation, the variance of the whole process should equal the sum of the variances of all the increments involved in the process.[1] In the case of the random walk where at each step, your beliefs go up or down by 1% starting from 50% until you hit 100% or 0% -- the variance of each increment is 0.01^2 = 0.0001, and the variance of the entire process is 0.5^2 = 0.25, hence you need 0.25/0.0001 = 2500 steps in expectation. If your beliefs have probability p of going up or down by 1% at each step, and 1-p of staying the same, the variance is reduced by a factor of p, and so you need 2500/p steps.

(Indeed, something like this is the standard way to derive the expected number of steps before a random walk hits an absorbing barrier).

Similarly, you get that if you start at 20% or 80%, you need 1600 steps in expectation, and if you start at 1% or 99%, you'll need 99 steps in expectation.
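The numbers above can be reproduced with a short sketch. The closed form k(100-k) for a symmetric walk in 1% steps started at k% is the standard absorbing-barrier result; the function names, the seed, and the number of simulated runs are arbitrary choices made for illustration.

```python
import random

def expected_steps(start_pct: int, p_move: float = 1.0) -> float:
    """Expected steps for a walk in 1% increments, started at start_pct,
    to hit 0% or 100%. With probability p_move the belief moves (up or
    down equally likely); otherwise it stays put for that step."""
    return start_pct * (100 - start_pct) / p_move

def simulate(start_pct: int, rng: random.Random) -> int:
    """Run one symmetric walk to absorption and return the step count."""
    pos, steps = start_pct, 0
    while 0 < pos < 100:
        pos += 1 if rng.random() < 0.5 else -1
        steps += 1
    return steps

rng = random.Random(0)
runs = [simulate(50, rng) for _ in range(1000)]
simulated_mean = sum(runs) / len(runs)

print("formula, start 50%:", expected_steps(50))
print("simulated mean:    ", simulated_mean)
print("start 20%:", expected_steps(20), " start 1%:", expected_steps(1))
print("updates per week over 10 years:", expected_steps(50) / (10 * 52))
```

The simulated mean is noisy because the stopping time has a very wide distribution, which is the same heavy spread the reply points to when it notes that the expectation is not a typical outcome.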

One problem with your reasoning above is that as the 1%/99% shows, needing 99 steps in expectation does not mean you will take 99 steps with high probability -- in this case, there's a 50% chance you need only one ...

Maybe this is too tired a point, but AI safety really needs exercises-- tasks that are interesting, self-contained (not depending on 50 hours of readings), take about 2 hours, have clean solutions, and give people the feel of alignment research.

I found some of the SERI MATS application questions better than Richard Ngo's exercises for this purpose, but there still seems to be significant room for improvement. There is currently nothing smaller than ELK (which takes closer to 50 hours to develop a proposal for and properly think about it) that I can point technically minded people to and feel confident that they'll both be engaged and learn something.

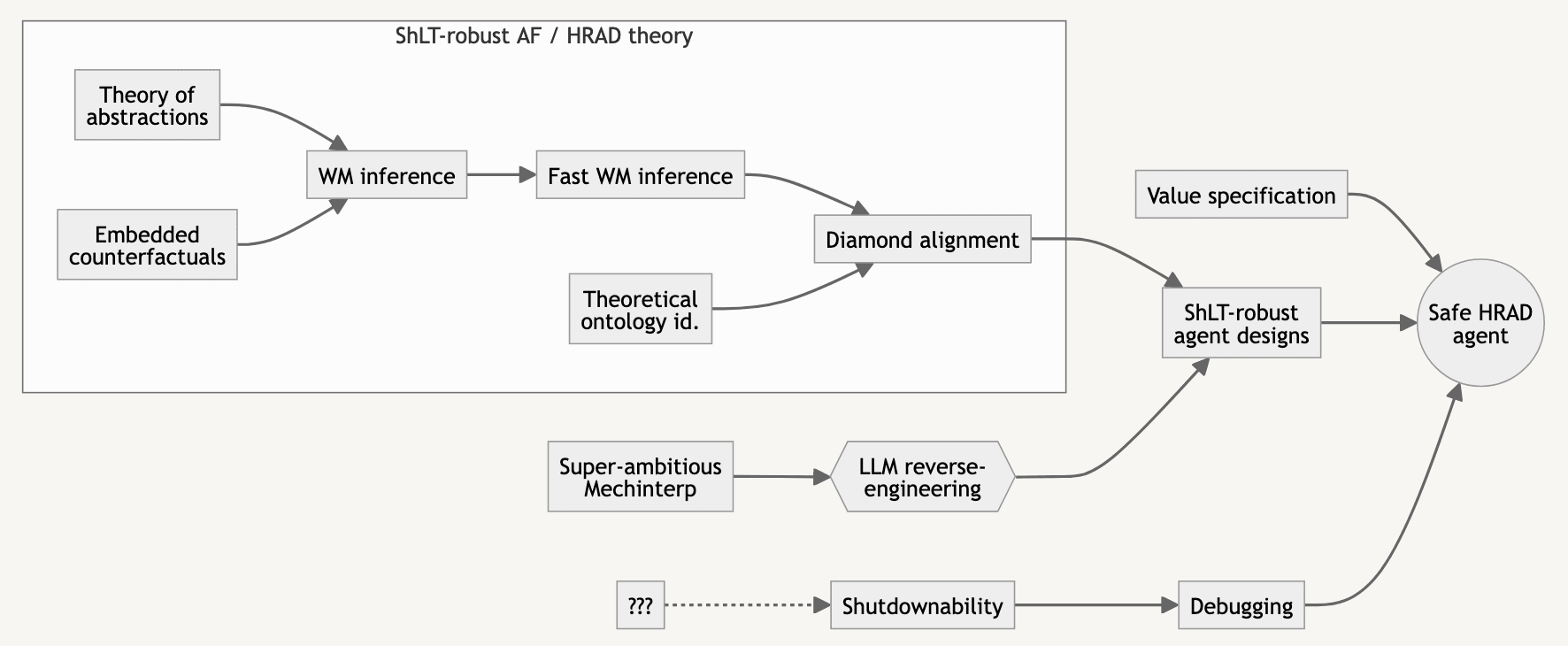

Tech tree for worst-case/HRAD alignment

Here's a diagram of what it would take to solve alignment in the hardest worlds, where something like MIRI's HRAD agenda is needed. I made this months ago with Thomas Larsen and never got around to posting it (mostly because under my worldview it's pretty unlikely that we can, or need to, do this), and it probably won't become a longform at this point. I have not thought about this enough to be highly confident in anything.

- This flowchart is under the hypothesis that LLMs have some underlying, mysterious algorithms and data structures that confer intelligence, and that we can in theory apply these to agents constructed by hand, though this would be extremely tedious. Therefore, there are basically three phases: understanding what a HRAD agent would do in theory, reverse-engineering language models, and combining these two directions. The final agent will be a mix of hardcoded things and ML, depending on what is feasible to hardcode and how well we can train ML systems whose robustness and conformation to a spec we are highly confident in.

- Theory of abstractions: Also called multi-level models. A mathematical framework for a world-model tha

I'm worried that "pause all AI development" is like the "defund the police" of the alignment community. I'm not convinced it's net bad because I haven't been following governance-- my current guess is neutral-- but I do see these similarities:

- It's incredibly difficult and incentive-incompatible with existing groups in power

- There are less costly, more effective steps to reduce the underlying problem, like making the field of alignment 10x larger or passing regulation to require evals

- There are some obvious negative effects; potential overhangs or greater incentives to defect in the AI case, and increased crime, including against disadvantaged groups, in the police case

- There's far more discussion than action (I'm not counting the fact that GPT5 isn't being trained yet; that's for other reasons)

- It's memetically fit, and much discussion is driven by two factors that don't advantage good policies over bad policies, and might even do the reverse. This is the toxoplasma of rage.

- disagreement with the policy

- (speculatively) intragroup signaling; showing your dedication to even an inefficient policy proposal proves you're part of the ingroup. I'm not 100% sure this was a large factor in "defund the

The obvious dis-analogy is that if the police had no funding and largely ceased to exist, a string of horrendous things would quickly occur. Murders and thefts and kidnappings and rapes and more would occur throughout every country in which it was occurring, people would revert to tight-knit groups who had weapons to defend themselves, a lot of basic infrastructure would probably break down (e.g. would Amazon be able to pivot to get their drivers armed guards?) and much more chaos would ensue.

And if AI research paused, society would continue to basically function as it has been doing so far.

One of them seems to me like a goal that directly causes catastrophes and a breakdown of society and the other doesn't.

There are less costly, more effective steps to reduce the underlying problem, like making the field of alignment 10x larger or passing regulation to require evals

IMO making the field of alignment 10x larger or evals do not solve a big part of the problem, while indefinitely pausing AI development would. I agree it's much harder, but I think it's good to at least try, as long as it doesn't terribly hurt less ambitious efforts (which I think it doesn't).

Say I need to publish an anonymous essay. If it's long enough, people could plausibly deduce my authorship based on the writing style; this is called stylometry. The only stylometry-defeating tool I can find is Anonymouth; it hasn't been updated in 7 years and it's unclear if it can defeat modern AI. Is there something better?

I was going to write an April Fool's Day post in the style of "On the Impossibility of Supersized Machines", perhaps titled "On the Impossibility of Operating Supersized Machines", to poke fun at bad arguments that alignment is difficult. I didn't do this partly because I thought it would get downvotes. Maybe this reflects poorly on LW?

The independent-steps model of cognitive power

A toy model of intelligence implies that there's an intelligence threshold above which minds don't get stuck when they try to solve arbitrarily long/difficult problems, and below which they do get stuck. I might not write this up otherwise due to limited relevance, so here it is as a shortform, without the proofs, limitations, and discussion.

The model

A task of difficulty n is composed of n independent and serial subtasks. For each subtask, a mind of cognitive power knows different “approaches” to choose from. The time taken by each approach is at least 1 but drawn from a power law, for , and the mind always chooses the fastest approach it knows. So the time taken on a subtask is the minimum of samples from the power law, and the overall time for a task is the total for the n subtasks.

Main question: For a mind of strength ,

- what is the average rate at which it completes tasks of difficulty n?

- will it be infeasible for it to complete sufficiently large tasks?
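The model can be simulated directly. This is a minimal sketch under loudly stated assumptions, since the symbols in the description above did not survive: it assumes cognitive power is an integer `q` equal to the number of approaches known, and that approach times follow a Pareto law with P(X > x) = x^(-alpha) for x >= 1. Both `q` and `alpha` are hypothetical names and parametrizations, not necessarily the author's.

```python
import random

def subtask_time(q: int, alpha: float, rng: random.Random) -> float:
    """Time for one subtask: the fastest of q approaches, each with a
    Pareto-distributed time (minimum 1, assumed tail exponent alpha)."""
    return min(rng.paretovariate(alpha) for _ in range(q))

def task_time(n: int, q: int, alpha: float, rng: random.Random) -> float:
    """Total time for a task of difficulty n: n serial, independent subtasks."""
    return sum(subtask_time(q, alpha, rng) for _ in range(n))

rng = random.Random(0)
n = 10_000
for q in (1, 2, 4, 8):
    total = task_time(n=n, q=q, alpha=0.5, rng=rng)
    print(f"q={q}: average time per subtask = {total / n:.2f}")
```

Under these assumed distributions, the minimum of `q` samples is itself Pareto with exponent `q * alpha`, so its mean is finite only when `q * alpha > 1`. That is one way a sharp threshold in cognitive power could arise in a model like this, though the author's exact result may be stated differently.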

Results

- There is a critical threshold of intelligence below wh

I'm looking for AI safety projects with people with some amount of experience. I have 3/4 of a CS degree from Caltech, one year at MIRI, and have finished the WMLB and ARENA bootcamps. I'm most excited about making activation engineering more rigorous, but willing to do anything that builds research and engineering skill.

If you've published 2 papers in top ML conferences or have a PhD in something CS related, and are interested in working with me, send me a DM.

I had a long-ish conversation with John Wentworth and want to make it known that I could probably write up any of the following distillations if I invested lots of time into them (about a day (edit: 3 days seems more likely) of my time and an hour of John's). Reply if you're really interested in one of them.

- What is the type signature of a utility function?

- Utility functions must be defined with respect to an external world-model

- Infinite money-pumps are not required for incoherence, and not likely in practice. The actual incoherent behavior is that an agent could get to states A_1 or A_2, identical except that A_1 has more money, and chooses A_2. Implications.

- Why VNM is not really a coherence theorem. Other coherence theorems relating to EU maximization simultaneously derive Bayesian probabilities and utilities. VNM requires an external frequentist notion of probabilities.

The LessWrong Review's short review period is a fatal flaw.

I would spend WAY more effort on the LW review if the review period were much longer. It has happened about 10 times in the last year that I was really inspired to write a review for some post, but it wasn’t review season. This happens when I have just thought about a post a lot for work or some other reason, and the review quality is much higher because I can directly observe how the post has shaped my thoughts. Now I’m busy with MATS and just don’t have a lot of time, and don’t even remember what posts I wanted to review.

I could have just saved my work somewhere and pasted it in when review season rolls around, but there really should not be that much friction in the process. The 2022 review period should be at least 6 months, including the entire second half of 2023, and posts from the first half of 2022 should maybe even be reviewable in the first half of 2023.

Below is a list of powerful optimizers ranked on properties, as part of a brainstorm on whether there's a simple core of consequentialism that excludes corrigibility. I think that AlphaZero is a moderately strong argument that there is a simple core of consequentialism which includes inner search.

Properties

- Simple: takes less than 10 KB of code. If something is already made of agents (markets and the US government) I marked it as N/A.

- Coherent: approximately maximizing a utility function most of the time. There are other definitions:

- Not being money-pumped

- Nate Soares's notion in the MIRI dialogues: having all your actions point towards a single goal

- John Wentworth's setup of Optimization at a Distance

- Adversarially coherent: something like "appears coherent to weaker optimizers" or "robust to perturbations by weaker optimizers". This implies that it's incorrigible.

- Sufficiently optimized agents appear coherent - Arbital

- will achieve high utility even when "disrupted" by an optimizer somewhat less powerful

- Search+WM: operates by explicitly ranking plans within a world-model. Evolution is a search process, but doesn't have a world-model. The contact with the territory it gets comes from

Has anyone made an alignment tech tree where they sketch out many current research directions, what concrete achievements could result from them, and what combinations of these are necessary to solve various alignment subproblems? Evan Hubinger made this, but that's just for interpretability and therefore excludes various engineering achievements and basic science in other areas, like control, value learning, agent foundations, Stuart Armstrong's work, etc.

The North Wind, the Sun, and Abadar

One day, the North Wind and the Sun argued about which of them was the strongest. Abadar, the god of commerce and civilization, stopped to observe their dispute. “Why don’t we settle this fairly?” he suggested. “Let us see who can compel that traveler on the road below to remove his cloak.”

The North Wind agreed, and with a mighty gust, he began his effort. The man, feeling the bitter chill, clutched his cloak tightly around him and even pulled it over his head to protect himself from the relentless wind. After a time, the...

Suppose that humans invent nanobots that can only eat feldspars (41% of the earth's continental crust). The nanobots:

- are not generally intelligent

- can't do anything to biological systems

- use solar power to replicate, and can transmit this power through layers of nanobot dust

- do not mutate

- turn all rocks they eat into nanobot dust small enough to float on the wind and disperse widely

Does this cause human extinction? If so, by what mechanism?

Antifreeze proteins prevent water inside organisms from freezing, allowing them to survive at temperatures below 0 °C. They do this by actually binding to tiny ice crystals and preventing them from growing further, basically keeping the water in a supercooled state. I think this is fascinating.

Is it possible for there to be nanomachine enzymes (not made of proteins, because they would denature) that bind to tiny gas bubbles in solution and prevent water from boiling above 100 °C?

Is there a well-defined impact measure to use that's in between counterfactual value and Shapley value, to use when others' actions are partially correlated with yours?
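For reference, the two endpoints of the question can be computed on a toy game. This is only a sketch of the standard definitions, not an answer to the question: the two-player game and the function names are made up for illustration.

```python
from itertools import permutations
from math import factorial

def shapley(players, value):
    """Shapley value: each player's marginal contribution, averaged over
    all orderings in which the players could join the coalition."""
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            totals[p] += value(coalition | {p}) - value(coalition)
            coalition = coalition | {p}
    n_orders = factorial(len(players))
    return {p: t / n_orders for p, t in totals.items()}

def counterfactual(players, value):
    """Counterfactual value: what is lost if this one player is removed."""
    everyone = frozenset(players)
    return {p: value(everyone) - value(everyone - {p}) for p in players}

# Hypothetical game: a project worth 1 succeeds only if both A and B take part.
def both_needed(coalition):
    return 1.0 if {"A", "B"} <= coalition else 0.0

print("counterfactual:", counterfactual(["A", "B"], both_needed))
print("shapley:       ", shapley(["A", "B"], both_needed))
```

In this game the counterfactual values sum to more than the total value while the Shapley values split it evenly, which is the gap an intermediate measure for partially correlated actions would have to interpolate.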

(Crossposted from Bountied Rationality Facebook group)

I am generally pro-union given unions' history of fighting exploitative labor practices, but in the dockworkers' strike that commenced today, the union seems to be firmly in the wrong. Harold Daggett, the head of the International Longshoremen’s Association, gleefully talks about holding the economy hostage in a strike. He opposes automation--"any technology that would replace a human worker’s job", and this is a major reason for the breakdown in talks.

For context, the automation of the global shipping ...

I started a dialogue with @Alex_Altair a few months ago about the tractability of certain agent foundations problems, especially the agent-like structure problem. I saw it as insufficiently well-defined to make progress on anytime soon. I thought the lack of similar results in easy settings, the fuzziness of the "agent"/"robustly optimizes" concept, and the difficulty of proving things about a program's internals given its behavior all pointed against working on this. But it turned out that we maybe didn't disagree on tractability much, it's just that Alex...

I'm planning to write a post called "Heavy-tailed error implies hackable proxy". The idea is that when you care about and are optimizing for a proxy , Goodhart's Law sometimes implies that optimizing hard enough for causes to stop increasing.

A large part of the post would be proofs about what the distributions of and must be for , where X and V are independent random variables with mean zero. It's clear that

- X must be heavy-tailed (or long-tailed or som
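A quick Monte Carlo sketch of the phenomenon, under assumptions that should be read as mine rather than the post's, since the symbols in the text above are lost: the proxy is taken to be the sum X + V, V is standard normal, and the heavy-tailed error is a symmetric Pareto with tail exponent 2. Thresholds, sample size, and function names are arbitrary.

```python
import random

def mean_v_given_high_proxy(sample_x, threshold, n, rng):
    """Estimate E[V | X + V > threshold] by Monte Carlo, with V ~ N(0, 1)."""
    selected = []
    for _ in range(n):
        v = rng.gauss(0.0, 1.0)
        if sample_x(rng) + v > threshold:
            selected.append(v)
    return sum(selected) / len(selected)

def light_tailed(rng):
    """Error term with Gaussian tails."""
    return rng.gauss(0.0, 1.0)

def heavy_tailed(rng):
    """Symmetric Pareto error: mean zero, tail exponent 2 (assumed)."""
    sign = 1 if rng.random() < 0.5 else -1
    return sign * rng.paretovariate(2.0)

rng = random.Random(0)
n = 200_000
light = {t: mean_v_given_high_proxy(light_tailed, t, n, rng) for t in (1.5, 3)}
heavy = {t: mean_v_given_high_proxy(heavy_tailed, t, n, rng) for t in (3, 30)}

print("light-tailed error:", light)
print("heavy-tailed error:", heavy)
```

In this setup, selecting harder on the proxy should keep raising the average V when the error is light-tailed, while with the heavy-tailed error a very high proxy value is mostly explained by the error and the average V falls back toward zero. The estimates at the highest thresholds rest on few samples, so treat them as qualitative.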

The most efficient form of practice is generally to address one's weaknesses. Why, then, don't chess/Go players train by playing against engines optimized for this? I can imagine three types of engines:

- Trained to play more human-like sound moves (soundness as measured by stronger engines like Stockfish, AlphaZero).

- Trained to play less human-like sound moves.

- Trained to win against (real or simulated) humans while making unsound moves.

The first tool would simply be an opponent when humans are inconvenient or not available. The second and third tools wo

...Maybe people worried about AI self-modification should study games where the AI's utility function can be modified by the environment, and it is trained to maximize its current utility function (in the "realistic value functions" sense of Everitt 2016). Some things one could do:

- Examine preference preservation and refine classic arguments about instrumental convergence

- Are there initial goals that allow for stably corrigible systems (in the sense that they won't disable an off switch, and maybe other senses)?

- Try various games and see how qualitatively hard i

I looked at Tetlock's Existential Risk Persuasion Tournament results, and noticed some oddities. The headline result is of course "median superforecaster gave a 0.38% risk of extinction due to AI by 2100, while the median AI domain expert gave a 3.9% risk of extinction." But all the forecasters seem to have huge disagreements from my worldview on a few questions:

- They divided forecasters into "AI-Concerned" and "AI-Skeptic" clusters. The latter gave 0.0001% for AI catastrophic risk before 2030, and even lower than this (shows as 0%) for AI extinction risk.

A Petrov Day carol

This is meant to be put to the Christmas carol "In the Bleak Midwinter" by Rossetti and Holst. Hopefully this can be occasionally sung like "For The Longest Term" is in EA spaces, or even become a Solstice thing.

I tried to get Suno to sing this but can't yet get the lyrics, tune, and style all correct; this is the best attempt. I also will probably continue editing the lyrics because parts seem a bit rough, but I just wanted to write this up before everyone forgets about Petrov Day.

[edit: I got a good rendition after ~40 attempts! It's a ...

Question for @AnnaSalamon and maybe others. What's the folk ethics analysis behind the sinking of the SF Hydro, which killed 14 civilians but destroyed heavy water to be used in the Nazi nuclear weapons program? Eliezer used this as a classic example of ethical injunctions once.

People say it's important to demonstrate alignment problems like goal misgeneralization. But now, OpenAI, Deepmind, and Anthropic have all had leaders sign the CAIS statement on extinction risk and are doing substantial alignment research. The gap between the 90th-percentile alignment-concerned people at labs and the MIRI worldview is now more about security mindset. Security mindset is present in cybersecurity because it is useful in the everyday, practical environment researchers work in. So perhaps a large part of the future hinges on whether security m...

The author of "Where Is My Flying Car" says that the Feynman Program (teching up to nanotechnology by machining miniaturized parts, which are assembled into the tools for micro-scale machining, which are assembled into tools for yet smaller machining, etc) might be technically feasible and the only reason we don't have it is that no one's tried it yet. But this seems a bit crazy for the following reasons:

- The author doesn't seem like a domain expert

- AFAIK this particular method of nanotechnology was just an idea Feynman had in the famous speech and not a

In nanotech? True enough, because I am not convinced that there is any domain expertise in the sort of nanotech Storrs Hall writes about. It seems like a field that consists mostly of advertising. (There is genuine science and genuine engineering in nano-stuff; for instance, MEMS really is a thing. But the sort of "let's build teeny-tiny mechanical devices, designed and built at the molecular level, which will be able to do amazing things previously-existing tech can't" that Storrs Hall has advocated seems not to have panned out.)

But more generally, that isn't so at all. What I'm looking for by way of domain expertise in a technological field is a history of demonstrated technological achievements. Storrs Hall has one such achievement that I can see, and even that is doubtful. (He founded and was "chief scientist" of a company that made software for simulating molecular dynamics. I am not in a position to tell either how well the software actually worked or how much of it was JSH's doing.) More generally, I want to see a history of demonstrated difficult accomplishments in the field, as opposed to merely writing about the field.

Selecting some random books from my shelves (literally...

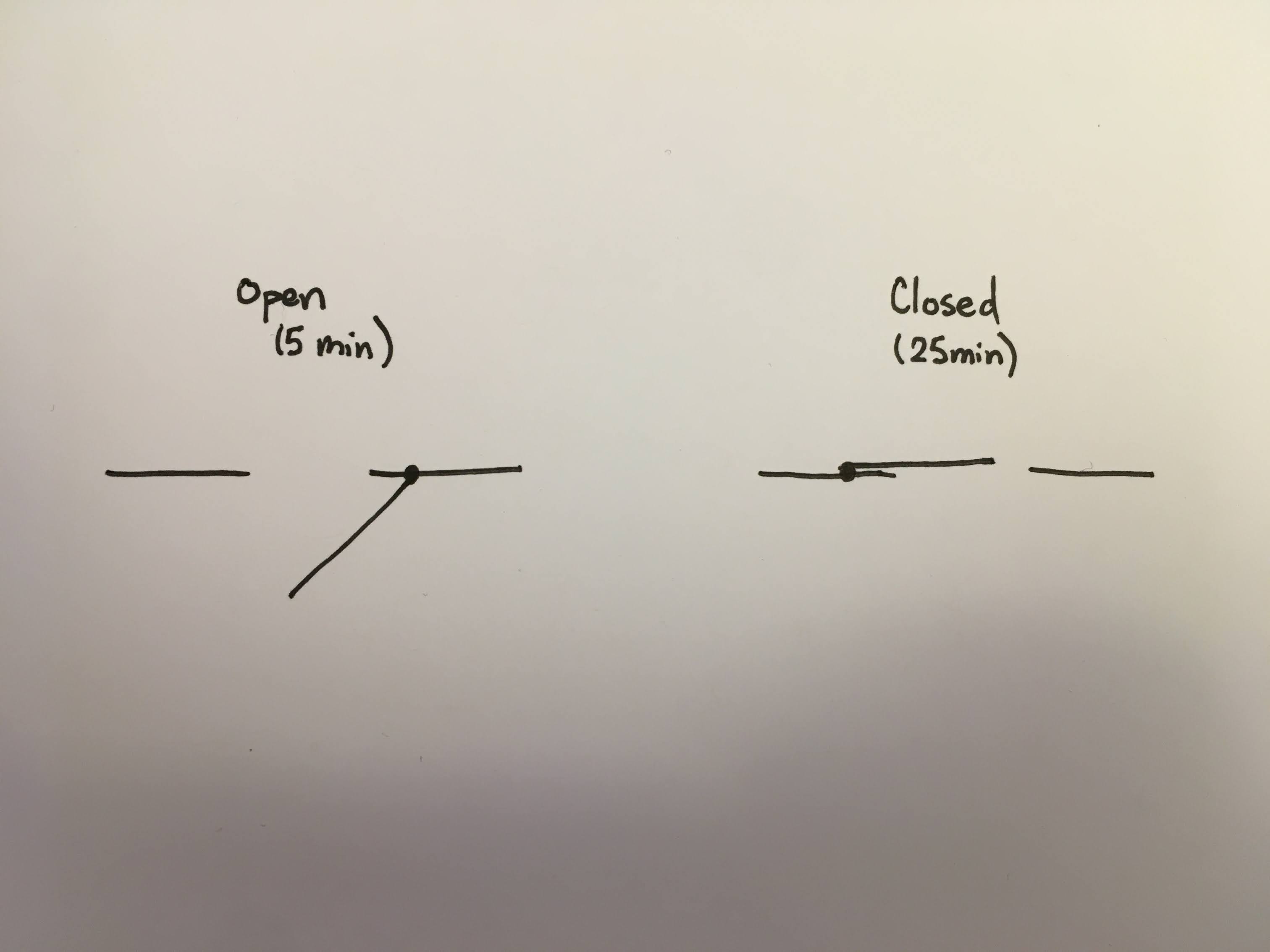

Is it possible to make an hourglass that measures different amounts of time in one direction than the other? Say, 25 minutes right-side up, and 5 minutes upside down, for pomodoros. Moving parts are okay (flaps that close by gravity or something) but it should not take additional effort to flip.

I don't see why this wouldn't be possible? It seems pretty straightforward to me; the only hard part would be the thing that seems hard about making any hourglass, which is getting it to take the right amount of time, but that's a problem hourglass manufacturers have already solved. It's just a valve that doesn't close all the way:

Unless you meant, "how can I make such an hourglass myself, out of things I have at home?" in which case, idk bro.

Given that social science research often doesn't replicate, is there a good way to search a social science finding or paper and see if it's valid?

Ideally, one would be able to type in e.g. "growth mindset" or a link to Dweck's original research, and see:

- a statement of the idea e.g. 'When "students believe their basic abilities, their intelligence, their talents, are just fixed traits", they underperform students who "understand that their talents and abilities can be developed through effort, good teaching and persistence." Carol Dweck initially studied

An idea for removing knowledge from models

Suppose we have a model with parameters $\theta$, and we want to destroy a capability-- doing well on loss function $L$ -- so completely that fine-tuning can't recover it. Fine-tuning would use gradients $g = \nabla_\theta L$, so what if we fine-tune the model and do gradient descent on the norm of the gradients $\|g\|$ during fine-tuning, or its directional derivative $\nabla_\theta \|g\| \cdot \hat{g}$ where $\hat{g} = g / \|g\|$? Then maybe if we add the accumulated parameter vector, the new copy of the model wo...

We might want to keep our AI from learning a certain fact about the world, like particular cognitive biases humans have that could be used for manipulation. But a sufficiently intelligent agent might discover this fact despite our best efforts. Is it possible to find out when it does this through monitoring, and trigger some circuit breaker?

Evals can measure the agent's propensity for catastrophic behavior, and mechanistic anomaly detection hopes to do better by looking at the agent's internals without assuming interpretability, but if we can measure the a...

Somewhat related to this post and this post:

Coherence implies mutual information between actions. That is, to be coherent, your actions can't be independent. This is true under several different definitions of coherence, and can be seen in the following circumstances:

- When trading between resources (uncertainty over utility function). If you trade 3 apples for 2 bananas, this is information that you won't trade 3 bananas for 2 apples, if there's some prior distribution over your utility function.

- When taking multiple actions from the same utility function (u

Many people think that AI alignment is intractable (<50% chance of success) and also believe that a universe optimized towards elephant CEV, or the CEV of aliens that had a similar evolutionary environment to humans, would be at least 50% as good as a universe optimized towards human CEV. Doesn't this mean we should be spending significant effort (say, at least 1% of the effort spent on alignment) finding tractable plans to create a successor species in case alignment fails?

Are there ring species where the first and last populations actually can interbreed? What evolutionary process could feasibly create one?

2.5 million jobs were created in May 2020, according to the jobs report. Metaculus was something like [99.5% or 99.7% confident](https://www.metaculus.com/questions/4184/what-will-the-may-2020-us-nonfarm-payrolls-figure-be/) that the number would be smaller, with the median at -11.0 million and the 99th percentile at -2.8 million. This seems like an obvious sign Metaculus is miscalibrated, but we have to consider both tails, making this merely a 1 in 100 or 1 in 150 event, which doesn't seem too bad.
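
The two-tail adjustment can be sketched as follows. This is a minimal illustration that assumes an equally extreme miss in the lower tail would have been just as surprising, so the one-tail probability is doubled; the function name is hypothetical.

```python
def two_tailed_odds(confidence_below):
    """Return N such that a miss at least this extreme, in either tail, is a 1-in-N event.

    confidence_below: forecast probability that the outcome would be smaller
    than what was actually observed (e.g. 0.995).
    """
    one_tail = 1.0 - confidence_below
    both_tails = 2.0 * one_tail  # assumes the two tails are treated symmetrically
    return 1.0 / both_tails


for confidence in (0.995, 0.997):
    print(f"{confidence:.1%} confident -> about 1 in {two_tailed_odds(confidence):.0f}")
```

Under this assumption, 99.5% confidence corresponds to about 1 in 100, and 99.7% to roughly 1 in 170, which is in the same range as the figures quoted above.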

I don't know how to say this without sounding elitist, but my guess is that people who prolifically write LW comments and whose karma:(comments+posts) ratio is less than around 1.5:1 or maybe even 2:1 should be more selective in what they say. Around this range, it would be unwarranted for mods to rate-limit you, but perhaps the bottom 30% of content you produce is net negative considering the opportunity cost to readers.

Of course, one should not Goodhart for karma and Eliezer is not especially virtuous by having a 16:1 ratio, but 1.5:1 is a quite low rati...

Another circumstance where votes underestimate quality is where you often get caught up in long reply-chains (on posts that aren’t “the post of the week”). This seems like a very beneficial use of LessWrong, but such threads typically have much lower readership and upvote rates while using a lot of comments.

Hangnails are Largely Optional

Hangnails are annoying and painful, and most people deal with them poorly. [1] Instead, use a drop of superglue to glue the hangnail to your nail plate. It's $10 for 12 small tubes on Amazon. Superglue is also useful for cuts and minor repairs, so I already carry it around everywhere.

Hangnails manifest as either separated nail fragments or dry peeling skin on the paronychium (area around the nail). In my experience superglue works for nail separation, and a paper (available free on Scihub) claims it also works for peeling skin...

Current posts in the pipeline:

- Dialogue on whether agent foundations is valuable, with Alex Altair. I might not finish this.

- Why junior AI safety researchers should go to ML conferences

- Summary of ~50 interesting or safety-relevant papers from ICML and NeurIPS this year

- More research log entries

- A list of my mistakes from the last two years. For example, spending too much money

- Several flowcharts / tech trees for various alignment agendas.

I'm reading a series of blogposts called Extropia's Children about the Extropians mailing list and its effects on the rationalist and EA communities. It seems quite good although a bit negative at times.

Eliezer Yudkowsky wrote in 2016:

At an early singularity summit, Jürgen Schmidhuber, who did some of the pioneering work on self-modifying agents that preserve their own utility functions with his Gödel machine, also solved the friendly AI problem. Yes, he came up with the one true utility function that is all you need to program into AGIs!

(For God’s sake, don’t try doing this yourselves. Everyone does it. They all come up with different utility functions. It’s always horrible.)

...His one true utility function was “increasing the compression of environ

What was the equation for research progress referenced in Ars Longa, Vita Brevis?

...“Then we will talk this over, though rightfully it should be an equation. The first term is the speed at which a student can absorb already-discovered architectural knowledge. The second term is the speed at which a master can discover new knowledge. The third term represents the degree to which one must already be on the frontier of knowledge to make new discoveries; at zero, everyone discovers equally regardless of what they already know; at one, one must have mastered every

Showerthought: what's the simplest way to tell that the human body is less than 50% efficient at converting chemical energy to mechanical work via running? I think it's that running uphill makes you warmer than running downhill at the same speed.

When running up a hill at mechanical power p and efficiency f, you have to exert p/f total power and so p(1/f - 1) is dissipated as heat. When running down the hill you convert p to heat. p(1/f - 1) > p implies that 1/f > 2, i.e. f < 0.5.

Maybe this story is wrong somehow. I'm pretty sure your body has no way of recovering your potential energy on the way down; I'd expect most of the waste heat to go into your joints and muscles but maybe some of it goes into your shoes.
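
A quick numeric check of the heat argument, as a sketch under the same simplifications (all potential energy becomes heat on the way down, and nothing else differs between the two runs). The function names are made up for illustration.

```python
def heat_uphill(p, f):
    """Heat dissipated while delivering mechanical power p at efficiency f."""
    return p * (1.0 / f - 1.0)


def heat_downhill(p):
    """Heat dissipated when potential energy is released at rate p and none is recovered."""
    return p


def uphill_is_warmer(f, p=1.0):
    return heat_uphill(p, f) > heat_downhill(p)


for f in (0.25, 0.5, 0.6):
    print(f"f = {f}: uphill heat {heat_uphill(100.0, f):.1f} W, "
          f"downhill heat {heat_downhill(100.0):.1f} W")
```

With these formulas, uphill running is the warmer direction exactly when f is below 0.5; at f = 0.5 the two are equal.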

Are there approximate versions of the selection theorems? I haven't seen anyone talk about them, but they might be easy to prove.

Approximate version of Kelly criterion: any agent that follows a strategy different by at least epsilon from Kelly betting will almost surely lose money compared to a Kelly-betting agent at a rate f(epsilon).
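
For intuition, here is one candidate for what such a rate could look like in the simplest case: a repeated binary bet with win probability p and net odds b, where the agent stakes a fixed fraction of wealth each round. The sketch computes the expected log-growth deficit of betting Kelly plus epsilon instead of Kelly. Treating this deficit as f(epsilon) is an assumption for illustration, not a stated theorem, and the function names are hypothetical.

```python
import math


def kelly_fraction(p, b):
    """Kelly stake for a bet won with probability p at net odds b."""
    return p - (1.0 - p) / b


def log_growth(fraction, p, b):
    """Expected log wealth growth per round when staking `fraction` of wealth."""
    return p * math.log(1.0 + b * fraction) + (1.0 - p) * math.log(1.0 - fraction)


def growth_deficit(eps, p, b):
    """Per-round expected log-growth lost by staking Kelly + eps instead of Kelly."""
    f_star = kelly_fraction(p, b)
    return log_growth(f_star, p, b) - log_growth(f_star + eps, p, b)


p, b = 0.6, 1.0
for eps in (-0.1, -0.05, 0.05, 0.1):
    print(f"eps = {eps:+.2f}: deficit per round = {growth_deficit(eps, p, b):.5f}")
```

Because the log-wealth gap between the two agents is a sum of i.i.d. terms with this positive mean, the strong law of large numbers suggests the gap grows roughly linearly in the number of rounds in this toy setting.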

Approximate version of VNM: Any agent that satisfies some weakened version of the VNM axioms will have high likelihood under Boltzmann rationality (or some other metric of approximate utility maximization). The closest thing I'...

Is there somewhere I can find a graph of the number of AI alignment researchers vs AI capabilities researchers over time, from say 2005 to the present day?

Is there software that would let me automatically switch between microphones on my computer when I put on my headset?

I imagine this might work as a piece of software that integrates all microphones connected to my computer into a single input device, then transmits the audio stream from the best-quality source.

A partial solution would be something that automatically switches to the headset microphone when I switch to the headset speakers.

Seems like the key claim:

Can you give any hint why that is or could be?