And yet we haven’t hit the singularity yet (90%)

AIs are only capable of doing tasks that took 1-10 hours in 2024 (60%)

To me, these two are kind of hard to reconcile. Once we have AI doing 10 hour tasks (especially in AGI labs), the rate at which work gets done by the employees will probably be at least 5x of what it is today. How hard is it to hit the singularity after that point? I certainly don't think it's less than 15% likely to happen within the months or years after this happens.

Also, keep in mind that the capabilities of internal models will be higher than the capabilities of deployed models. So by the time we have 1-10 hour models deployed in the world, the AGI labs might have 10-100 hour models.

I agree that <15% seems too low for most reasonable definitions of 1-10 hours and the singularity. But I'd guess I'm more sympathetic than you, depending on the definitions Nathan had in mind.

I think both of the phrases "AI capable of doing tasks that took 1-10 hours" and "hit the singularity" are underdefined, and making them clearer could lead to significantly different probabilities here.

- For "capable of doing tasks that took 1-10 hours in 2024":

  - If we're saying that "AI can do every cognitive task that takes a human 1-10 hours in 2024 as well as (edit: the best) a human expert", I agree it's pretty clear we're getting extremely fast progress at that point, not least because AI will be able to do the vast majority of tasks that take much longer than that by the time it can do all of the 1-10 hour tasks.

  - However, if we're using a weaker definition like the one Richard used ("on most cognitive tasks, it beats most human experts who are given 1-10 hours to perform the task"), I think it's much less clear due to human interaction bottlenecks.

  - Also, it seems like the distribution of relevant cognitive tasks that you care about changes a lot on different time horizons, which further complicates things.

- Re: "hit the singularity", I think in general there's little agreement on a good definition. E.g. the definition in Tom's report is based on the doubling time of "effective compute in 2022-FLOP" shortening after "full automation". I think it's unclear what that corresponds to in terms of real-world impact, as both of these terms are also underdefined and hard to translate into actual capability and impact metrics.

I would be curious to hear the definitions you and Nathan had in mind regarding these terms.

Yeah, I was trying to use Richard's terms.

I also guess that the less training data there is, the less good the AIs will be. So while they may be good at setting up a dropshipping website for shoes (a 1-10 hour task), they may not be good at alignment research.

To me the singularity is when things are undeniably zooming, or perhaps even have zoomed. New AI tech is coming out daily or perhaps there is even godlike AGI. What do folks think is a reasonable definition?

For "capable of doing tasks that took 1-10 hours in 2024", I was imagining an AI that's roughly as good as a software engineer that gets paid $100k-$200k a year.

For "hit the singularity", this one is pretty hazy, I think I'm imagining that the metaculus AGI question has resolved YES, and that the superintelligence question is possibly also resolved YES. I think I'm imagining a point where AI is better than 99% of human experts at 99% of tasks. Although I think it's pretty plausible that we could enter enormous economic growth with AI that's roughly as good as humans at most things (I expect the main thing stopping this to be voluntary non-deployment and govt. intervention).

Yeah that sounds about right. A junior dev who needs to be told to do individual features.

Your "hit the singularity" doesn't sound wrong, but I'll need to think.

If AIs of the near future can't do good research (and instead are only proficient in concepts that have significant presence in datasets), singularity remains bottlenecked by human research speed. The way such AIs speed things up is through their commercial success making more investment in scaling possible, not directly (and there is little use for them in the lab). It's currently unknown if scaling even at $1 trillion level is sufficient by itself, so some years of Futurama don't seem impossible, especially as we are only talking 2029.

I think that AIs will be able to do 10 hours of research (at the level of a software engineer that gets paid $100k a year) within 4 years with 50% probability.

If we look at current systems, there's not much indication that AI agents will be superhuman in non-AI-research tasks and subhuman in AI research tasks. One of the most productive uses of AI so far has been in helping software engineers code better, so I'd wager AI assistants will be even more helpful for AI research than for other things (compared to some prior based on those tasks' "inherent difficulties"). Additionally, AI agents can do some basic coding using proper codebases and projects, so I think scaffolded GPT-5 or GPT-6 will likely be able to do much more than GPT-4.

That's the crux of this scenario, whether current AIs with near future improvements can do research. If they can, with scaling they only do it better. If they can't, scaling might fail to help, even if they become agentic and therefore start generating serious money. That's the sense in which AIs capable of 10 hours of work don't lead to game-changing acceleration of research, by remaining incapable of some types of work.

What seems inevitable at the moment is AIs gaining world models where they can reference any concepts that frequently come up in the training data. This promises proficiency in arbitrary routine tasks, but not necessarily construction of novel ideas that lack sufficient footprint in the datasets. Ability to understand such ideas in-context when explained seems to be increasing with LLM scale though, and might be crucial for situational awareness needed for becoming agentic, as every situation is individually novel.

Note that AI doesn't need to come up with original research ideas or do much original thinking to speed up research by a bunch. Even if it speeds up the menial labor of writing code, running experiments, and doing basic data analysis at scale, if you free up 80% of your researchers' time, your researchers can now spend all of their time doing the important task, which means overall cognitive labor is 5x faster. This is ignoring effects from using your excess schlep-labor to trade against non-schlep labor leading to even greater gains in efficiency.
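The 5x figure here is the same arithmetic as Amdahl's law. A minimal sketch, assuming research time splits cleanly into an automatable "schlep" fraction and a remainder only humans can do; the function name and the `ai_speed` parameter are illustrative assumptions, not something from the comment above:

```python
import math


def research_speedup(automatable_fraction: float, ai_speed: float = math.inf) -> float:
    """Amdahl-style overall speedup when a fraction of the work is accelerated.

    automatable_fraction: share of researcher time spent on tasks AI can take over.
    ai_speed: how many times faster AI does those tasks (inf = effectively free).
    """
    if not 0.0 <= automatable_fraction <= 1.0:
        raise ValueError("automatable_fraction must be in [0, 1]")
    if ai_speed <= 0:
        raise ValueError("ai_speed must be positive")
    # Time left per unit of original work: the human-only part plus the sped-up part.
    remaining = (1.0 - automatable_fraction) + automatable_fraction / ai_speed
    return math.inf if remaining == 0 else 1.0 / remaining


# Hypothetical fractions, chosen only to illustrate the shape of the curve.
for fraction in (0.5, 0.8, 0.9):
    print(f"{fraction:.0%} automated -> {research_speedup(fraction):.1f}x")
```

Under this simplified model, freeing up 80% of researcher time yields 5x only if the automated part becomes essentially free, and the result is sensitive to the fraction assumed. It also ignores the trade of schlep labor against non-schlep labor mentioned above.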

I think that, ignoring pauses or government intervention, once AGI labs internally have AIs that are capable of doing 10 hours of R&D-related tasks (software engineering, running experiments, analyzing data, etc.), the amount of effective cognitive labor per unit time being put into AI research will probably go up by at least 5x compared to current rates.

Imagine the current AGI capabilities employee's typical work day. Now imagine they had an army of AI assistants that can very quickly do 10 hours' worth of their own labor. How much more productive is that employee compared to their current state? I'd guess at least 5x. See section 6 of Tom Davidson's takeoff speeds framework for a model.

That means by 1 year after this point, an equivalent of at least 5 years of labor will have been put into AGI capabilities research. Physical bottlenecks still exist, but is it really that implausible that the capabilities workforce would stumble upon huge algorithmic efficiency improvements? Recall that current algorithms are much less efficient than the human brain. There's lots of room to go.

The modal scenario I imagine for a 10-hour-AI scenario is that once such an AI is available internally, the AGI lab uses it to speed up its workforce by many times. That sped up workforce soon (within 1 year) achieves algorithmic improvements which put AGI within reach. The main thing stopping them from reaching AGI in this scenario would be a voluntary pause or government intervention.

Physical bottlenecks still exist, but is it really that implausible that the capabilities workforce would stumble upon huge algorithmic efficiency improvements? Recall that current algorithms are much less efficient than the human brain. There's lots of room to go.

I don't understand the reasoning here. It seems like you're saying "Well, there might be compute bottlenecks, but we have so much room left to go in algorithmic improvements!" But the room to improve point is already the case right now, and seems orthogonal to the compute bottlenecks point.

E.g. if compute bottlenecks are theoretically enough to turn the 5x cognitive labor into only 1.1x overall research productivity, it will still be the case that there is lots of room for improvement but the point doesn't really matter as research productivity hasn't sped up much. So to argue that the situation has changed dramatically you need to argue something about how big of a deal the compute bottlenecks will in fact be.
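To make the bottleneck point concrete, here is a rough sketch using a two-input CES production function over cognitive labor and compute. The labor share of 0.5 and the `rho` values are arbitrary assumptions for illustration, and this is not Tom Davidson's calibrated model:

```python
def ces_output(labor: float, compute: float, labor_share: float, rho: float) -> float:
    """CES aggregate of cognitive labor and compute.

    rho < 0: inputs are complements, so the fixed input becomes a bottleneck.
    rho = 0: Cobb-Douglas limit. rho = 1: perfect substitutes.
    """
    if rho == 0:
        return labor ** labor_share * compute ** (1.0 - labor_share)
    inner = labor_share * labor ** rho + (1.0 - labor_share) * compute ** rho
    return inner ** (1.0 / rho)


def productivity_gain(labor_multiplier: float, labor_share: float, rho: float) -> float:
    """Output ratio when labor is scaled up and compute stays at its baseline."""
    baseline = ces_output(1.0, 1.0, labor_share, rho)
    boosted = ces_output(labor_multiplier, 1.0, labor_share, rho)
    return boosted / baseline


# Assumed parameter sweep: the same 5x labor boost under different substitutability.
for rho in (1.0, 0.0, -1.0, -5.0):
    gain = productivity_gain(5.0, labor_share=0.5, rho=rho)
    print(f"rho={rho:+.1f}: 5x labor -> {gain:.2f}x research output")
```

With these assumed parameters the same 5x labor boost translates into roughly 3x, 2.2x, 1.7x, and 1.15x output as `rho` falls, which is why the size of the compute bottleneck, and not the remaining headroom in algorithms, decides how much research actually speeds up.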

I was more making the point that, if we enter a regime where AI can do 10 hour SWE tasks, then this will result in big algorithmic improvements, but at some point pretty quickly effective compute improvements will level out because of physical compute bottlenecks. My claim is that the point at which it will level out will be after multiple years worth of current algorithmic progress had been "squeezed out" of the available compute.

Interesting, thanks for clarifying. It's not clear to me that this is the right primary frame to think about what would happen, as opposed to just thinking first about how big compute bottlenecks are and then adjusting the research pace for that (and then accounting for diminishing returns to more research).

I think a combination of both perspectives is best, as the argument in your favor for your frame is that there will be some low-hanging fruit from changing your workflow to adapt to the new cognitive labor.

Imagine the current AGI capabilities employee's typical work day. Now imagine they had an army of AI assistants that can very quickly do 10 hours' worth of their own labor. How much more productive is that employee compared to their current state? I'd guess at least 5x. See section 6 of Tom Davidson's takeoff speeds framework for a model.

Can you elaborate how you're translating 10-hour AI assistants into a 5x speedup using Tom's CES model?

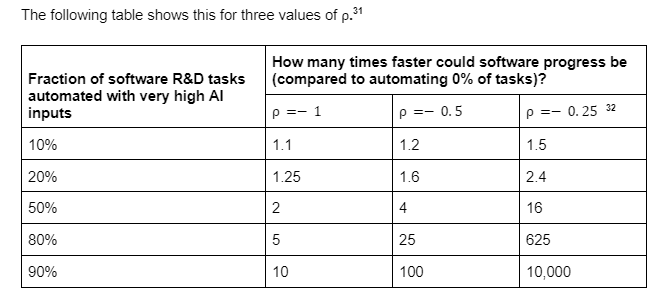

My reasoning is something like: roughly 50-80% of tasks are automatable with AI that can do 10 hours of software engineering, and under most sensible parameters this results in at least 5x of speedup. I'm aware this is kinda hazy and doesn't map 1:1 with the CES model though

What is the cost of the most expensive individual foundation models in this world? I think going in the $50-$500 billion territory requires notable improvement at $3-$20 billion scale, possibly agentic behavior for longer term tasks with novel subproblems, otherwise scaling investment stops and a prediction along the lines in this post makes sense (assuming RL/search breakthroughs for reasoning are somewhat unlikely).

edit for clarity

I am not very good at sizes. But I guess that it's gonna keep increasing in price, so yeah, maybe >$5bn (30%).

Meaning 30% of >$5bn, that is 70% of <$5bn? What needs to happen for investment to stay this low through 2029, given that I'm guessing there are plans at present for $1bn-$3bn runs, possibly this year with GPT-5 and then Claude and Gemini? When say OpenAI has a valuation of $80bn, it makes sense to at some point invest some percentage of that in improving the backbone of their business and not losing the market niche to competitors. (ITER and ISS are in $20bn-$150bn range, so a $50bn model is feasible even without a demonstrable commercial motivation, but possibly not by 2029 yet.)

To be clear, your post is assuming TAI is still far away? AI is just what it is now but better?

I added a note at the top

Oh interesting, yeah I was more linking them as spaces to disagree and get opinions. Originally I didn't put my own values at all but that felt worse. What would you recommend?

Ideally I would like to rewrite most LessWrong wiki pages about real world stuff in this style, with forecasts as links.

I don't think it's appropriate to put personal forecasts in Wiki pages. But yeah: Manifold, Metaculus, Polymarket. Or maybe just link them so they are more resilient.

"Climate change is seen as a bit less of a significant problem"

That seems shockingly unlikely (5%) - even if we have essentially eliminated all net emissions (10%), we will still be seeing continued warming (99%) unless we have widely embraced geoengineering (10%). If we have, it is a source of significant geopolitical contention (75%) due to uneven impacts (50%) and pressure from environmental groups (90%) worried that it is promoting continued emissions and / or causes other harms. Progress on carbon capture is starting to pay off (70%) but is not (90%) deployed at anything like the scale needed to stop or reverse warming.

Adaptation to climate change has continued (99%), but it is increasingly obvious how expensive it is and how badly it is impacting the developing world. The public still seems to think this is the fault of current emissions (70%) and carbon taxes or similar legal limits are in place for a majority of G7 countries (50%) but less than half of other countries (70%).

This is neat, but I liked "What 2026 looks like" a lot better. A remarkably large proportion of Kokotajlo's predictions came true, although if it ever diverges from the actual timeline, then his predicted timeline will probably diverge strongly from a single point (which just hasn't happened yet) or something weird happens.

Ok, I'll bite: does that mean that you think China is less likely to invade Taiwan if there is a recession? This would be happy news, but is not necessarily the consensus view among DC defense wonks (i.e. the fear of someone saying, "We need a Short Victorious War").

My current model is that China is a bit further away from invasion than people think. And a recession in the next few years could cripple that ability. People think that all you need for an invasion is ships, but you also need an army, navy, and air force capable of carrying it out.

I find the subscripts distracting, personally, and would prefer something like "Zebras discover fire (60%)" in parentheses

Links are to prediction markets, subscripts/brackets are my own forecasts, done rapidly.

I open my eyes. It’s nearly midday. I drink my morning Huel. How do I feel? My life feels pretty good. AI progress is faster than ever, but I've gotten used to the upward slope by now. There has perhaps recently been a huge recession, but I prepared for that. If not, the West feels more stable than it did in 2024. The culture wars rage on, inflamed by AI, though personally I don't pay much attention.

Either Trump or Biden won the 2024 election (85%). If Biden, his term was probably steady growth and good, boring decision making (70%). If Trump there is more chance of global instability (70%) due to pulling back from NATO (40%), lack of support for Ukraine (60%), incompetence in handling the Middle East (30%). Under both administrations there is a moderate chance of a global recession (30%), slightly more under Trump. I intend to earn a bit more and prep for that, but I can imagine that the median person might feel worse off if they get used to the gains in between.

AI progress has continued. For a couple of years it has been possible for anyone to type a prompt for a simple web app and receive an entire interactive website (60%). AI autocomplete exists in most apps (80%), AI images and video are ubiquitous (80%). Perhaps an AI has escaped containment (45%). Some simple job roles have been fully automated (60%). For the last 5 years the sense of velocity we felt in 2023 onwards hasn't abated (80%). OpenAI has made significant progress on automating AI engineers (70%).

And yet we haven’t hit the singularity yet (90%), in fact, it feels only a bit closer than it did in 2024 (60%). We have blown through a number of milestones, but AIs are only capable of doing tasks that took 1-10 hours in 2024 (60%), and humans are better at working with them (70%). AI regulation has become tighter (80%). With each new jump in capabilities the public gets more concerned and requires more regulation (60%). The top labs are still in control of their models (75%), with some oversight from the government, but they are red-teamed heavily (60%), with strong anti-copyright measures in place (85%). Political deepfakes probably didn't end up being as bad as everyone feared (60%), because people are more careful with sources. Using deepfakes as scams is a big issue (60%). People in the AI safety community are a little more optimistic (60%).

The world is just "a lot" (65%). People are becoming exhausted by the availability and pace of change (60%). Perhaps rapidly growing technologies focus on bundling the many new interactions and interpreting them for us (20%).

There is a new culture war (80%), perhaps relating to AI (33%). Peak woke happened around 2024, peak trans panic around a similar time. Perhaps eugenics (10%) is the current culture war or polyamory (10%), child labour (5%), artificial wombs (10%). It is plausible that with the increase in AI this will be AI Safety, e/acc and AI ethics. If that's the case, I am already tired (80%).

In the meantime physical engineering is perhaps noticeably out of the great stagnation. Maybe we finally have self-driving cars in most Western cities (60%), drones are cheap and widely used, we are perhaps starting to see nuclear power stations (60%), house building is on the up. Climate change is seen as a bit less of a significant problem. World peak carbon production has happened and nuclear and solar are now well and truly booming. A fusion breakthrough looks likely in the next 5 years.

China has maybe attacked Taiwan (25%), but probably not. Xi is likely still in charge (75%) but there has probably been a major recession (60%). The US, which is more reliant on Mexico, is less affected (60%), but Europe struggles significantly (60%).

In the wider world, both Africa and India are deeply unequal. Perhaps either has shrugged off its dysfunction (50%) buoyed up by English speakers and remote tech jobs, but it seems unlikely either is an economic powerhouse (80%). Malaria has perhaps been eradicated in Sub-Saharan Africa (50%).

Animal welfare is roughly static, though cultivated meat is now common on menus in London (60%). It faces battles around naming conventions (e.g. can it be called meat) (70%) and is growing slowly (60%).

Overall, my main feeling is that it's gonna be okay (unless you’re a farm animal). I guess this is partly priors-based but I've tried to poke holes in it with the various markets attached. It seems to me that I want to focus on the 5 - 15 year term when things get really strange rather than worry deeply about the next 5 years.

This is my first post like this. How could I make the next one better?

Crossposted from my blog here: https://nathanpmyoung.substack.com/p/the-world-in-2029