Edit: TLDR: EY focuses on the clearest and IMO most important part of his argument:

- Before building an entity smarter than you, you should probably be really sure its goals align with yours.

- Humans are historically really bad at being really sure of anything nontrivial on the first real try.

I found this interview notable as the most useful public statement yet of Yudkowsky's views. I congratulate both him and the host, Dan Fagella, for strategically improving how they're communicating their ideas.

Dan is to be commended for asking the right questions and taking the right tone to get a concise statement of Yudkowsky's views on what we might do to survive, and why. It also seemed likely that Yudkowsky has thought hard about his messaging after having his views both deliberately and accidentally misunderstood and panned. Despite having followed his thinking over the last 20 years, I gained new perspective on his current thinking from this interview.

Takeaways:

- Humans will probably fail to align the first takeover-capable AGI and all die

- Not because alignment is impossible

- But because humans are empirically foolish

- And historically rarely get hard projects right on the first real try

- Here he distinguishes first real try from getting some practice -

- Metaphor: launching a space probe vs. testing components

- Here he distinguishes first real try from getting some practice -

- Therefore, we should not build general AI

- This ban could be enforced by international treaties

- And monitoring the use of GPUs, which would legally all be run in data centers

- Yudkowsky emphasizes that governance is not within his expertise.

- We can probably get away with building some narrow tool AI to improve life

- This ban could be enforced by international treaties

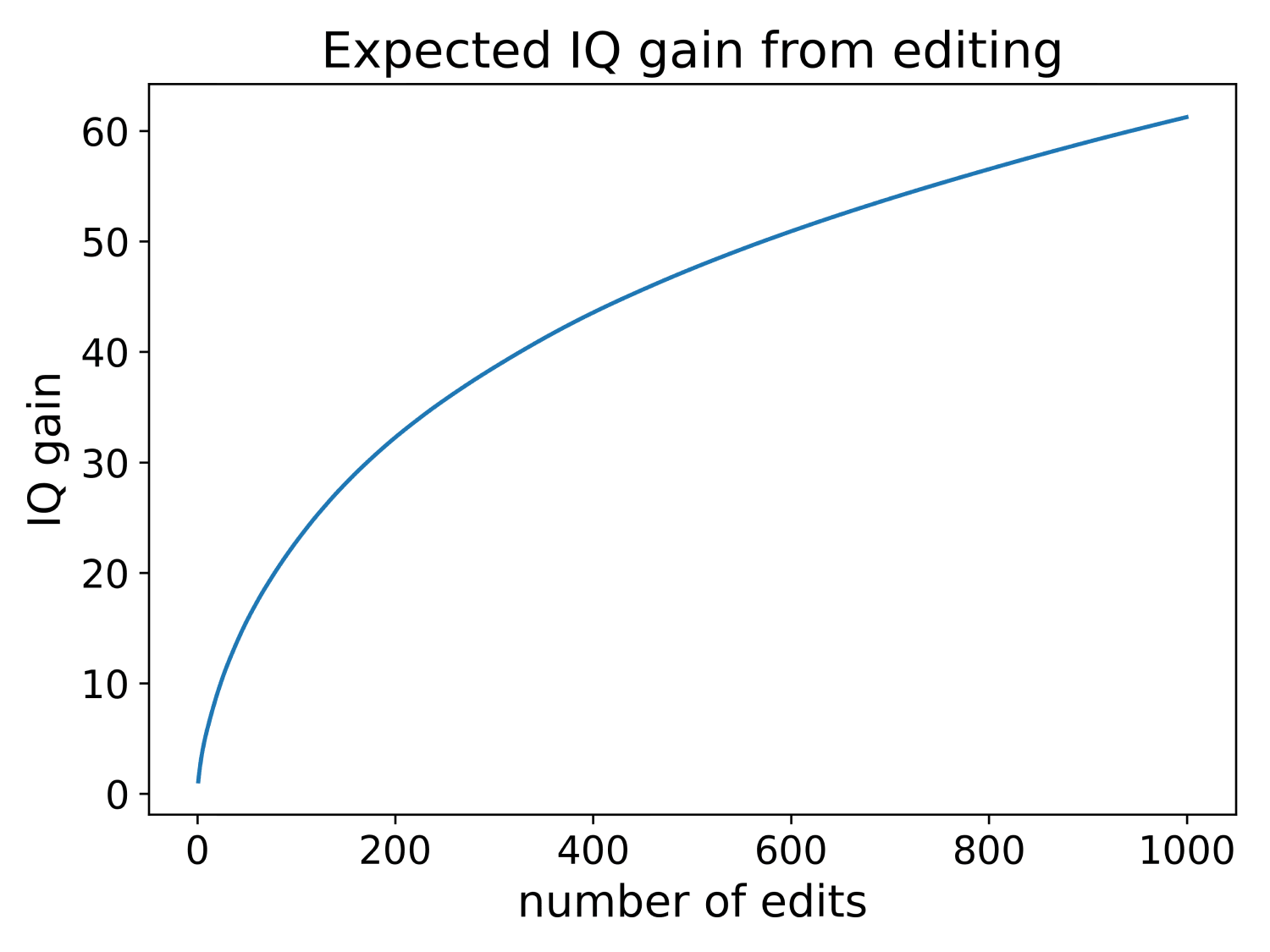

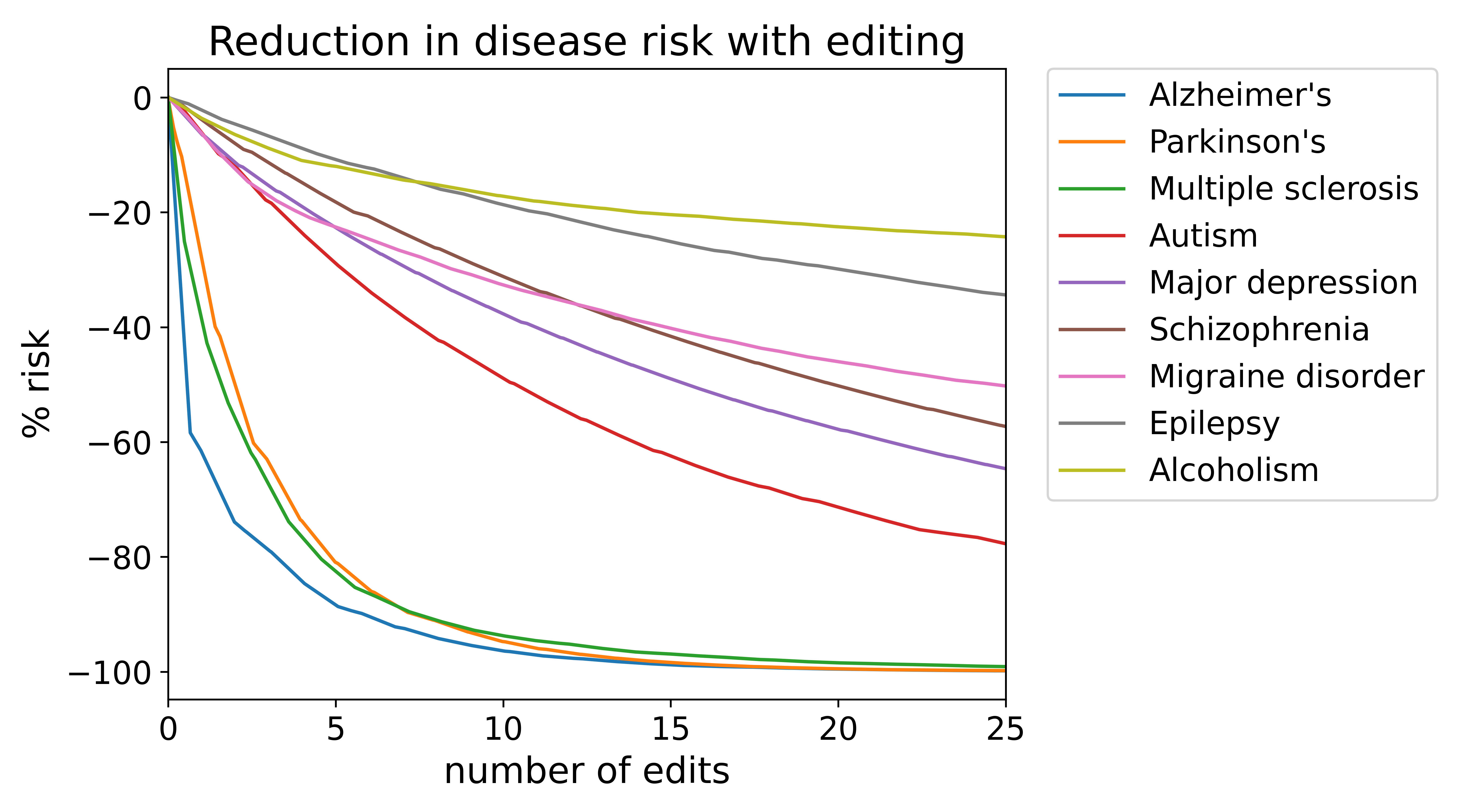

- Then maybe we should enhance human intelligence before trying to build aligned AGI

- Key enhancement level: get smart enough to quit being overoptimistic about stuff working

- History is just rife with people being surprised their projects and approaches don't work

- Key enhancement level: get smart enough to quit being overoptimistic about stuff working

I find myself very much agreeing with his focus on human cognitive limitations and our poor historical record of getting new projects right on the first try. I researched cognitive biases as the focus of my neuroscience research for some years, and came to the conclusion that wow, humans have both major cognitive limitations (we can't really take in and weigh all the relevant data for complex questions like alignment) and have major biases, notably a sort of inevitable tendency to believe what seems like it will benefit us, rather than what's empirically most likely to be true. I still want to do a full post on this, but in the meantime I've written a mid-size question answer on Motivated reasoning/ confirmation bias as the most important cognitive bias.

My position to date has been that, despite those limitations, aligning a scaffolded language model agent (our most likely first form of AGI) to follow instructions is so easy that a monkey(-based human organization) could do it.

After increased engagement on these ideas, I'm worried that it may be my own cognitive limitations and biases that have led me to believe that. I now find myself thoroughly uncertain (while still thinking those routes to alignment have substantial advantages over other proposals).

And yet, I still think the societal rush toward creating general intelligence is so large that working on ways to align the type of AGI we're most likely to get is a likelier route to success than attempting to halt that rush.

But the two could possibly work in parallel.

I notice that fully general AI is not only the sort that is most likely to kill us, but also the type that is more obviously likely to put us all out of work, uncomfortably quickly. By fully general, I mean capable of learning to do arbitrary new tasks. Arbitrary tasks would include any particular job, and how to take over the world.

This confluence of problems might be a route to convincing people that we should slow the rush toward AGI.

Nitpick: 700.