I feel really weird about this article, because I at least spiritually agree with the conclusion: my lower bound on an AGI extinction scenario is "automated industry grows 40%/y, at first everybody becomes filthy rich, and in the next decade the necrosphere devours everything", and this scenario seems weirdly underdiscussed relative to "AGI develops self-sustaining supertech and kills everyone within one month" by both skeptics and doomers.

That said, I disagree with almost everything else.

You underestimate the effect of RnD automation because you seem to ignore that a lot of the bottlenecks in RnD and in the adoption of results are actually human bottlenecks. Even if we automate everything in RnD that is not free scientific creativity, we are still bottlenecked by the facts that humans can keep only 10 things in working memory, that multitasking basically doesn't exist, that you can do several hours of hard intellectual work per day at most, that you need 28 years to grow a scientist, that there are only so many people actually capable of producing science, that science progresses one funeral at a time, et cetera, et cetera. The same goes for adoption: even if an innovation exists, there are only so many people capable of understanding it and implementing it while integrating it into an existing workflow in a maintainable way, and they have only so many productive working hours per day.

Even if we dial every other productivity factor up to infinity, there is still a hard cap on productivity growth from inherent human limitations and population. When we achieve AI automation, we replace these limitations with whatever is achievable within physical limits.

Your assumption "everybody thinks that RnD is going to be automated first because RnD is mostly abstract reasoning" is wrong, because the actual reason RnD is going to be automated relatively early is that it's very valuable, especially if you count opportunity costs. Every time you pay an AI researcher to automate a narrow task, you waste money that could be spent on creating an army of 100k artificial researchers who could automate 100k tasks. I think this holds even if you assume pretty long timelines, because, given the human bottlenecks mentioned above, everything you can automate before RnD automation is going to be automated rather poorly (and, unlike with science, you can't run self-improvement from there).

Does he not believe in AGI and Superintelligence at all? Why not just say that?

AI could cure all diseases and “solve energy”. He mentions “radical abundance” as a possibility as well, but beyond the R&D channel

This is clearly about Superintelligence, and the mechanism through which it would happen in that scenario is straightforward and often talked about. And if he disagrees, he either doesn't believe in AGI (or at least advanced AGI) or believes that solving energy and curing disease are not that valuable? Or is he purposefully talking about a pre-AGI scenario while arguing against post-AGI views?

to lead to an increase in productivity and output *per person*

This quote certainly suggests this. It's just hard to tell if this is due to bad reasoning or on purpose to promote his start-up.

Does he not believe in AGI and Superintelligence at all? Why not just say that?

As one of the authors, I'll answer for myself. Unfortunately, I'm not exactly sure what these terms mean precisely, so I'll answer a different question instead. If your question is whether I believe that AIs will eventually match or surpass human performance—either collectively or individually—across the full range of tasks that humans are capable of performing, then my answer is yes. I do believe that, in the long run, AI systems will reach or exceed human-level performance across virtually all domains of ability.

However, I fail to see how this belief directly supports the argument you are making in your comment. Even if we accept that AIs will eventually be highly competent across essentially all important tasks, that fact alone does not straightforwardly imply that our core thesis—that the main value from AI will come from broad automation rather than the automation of R&D—is incorrect.

Do you truly not believe that for your own life - to use the examples there - solving aging, curing all disease, and solving energy is even more valuable? To you? Perhaps you don't believe those are possible, but then that's where the whole disagreement lies.

And if you are talking about Superintelligent AGI and automation why even talk about output per person? I thought you at least believe people are automated out and thus decoupled?

It's important to be precise about the specific claim we're discussing here.

The claim that R&D is less valuable than broad automation is not equivalent to the claim that technological progress itself is less important than other forms of value. This is because technological progress is sustained not just by explicit R&D but by large-scale economic forces that complement the R&D process, such as general infrastructure, tools, and complementary labor used to support the invention, implementation, and deployment of various technologies. These complementary factors make it possible to both run experiments that enable the development of technologies and diffuse these technologies widely after they are developed in a laboratory environment—providing straightforwardly large value.

To provide a specific operationalization of our thesis, we can examine the elasticity of economic output with respect to different inputs—that is, how much economic value increases when a particular input to economic output is scaled. The thesis here is that automating R&D alone would, by itself, raise output by significantly less than automating labor broadly (separately from R&D). This is effectively what we mean when we say R&D has "less value" than broad automation.
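As a rough sketch of that operationalization (the notation here is an illustrative assumption, not taken from the article): let $Y$ be economic output, $R$ the effective R&D input, and $L$ the effective input of all other labor. The elasticities in question are then

$$
\varepsilon_R = \frac{\partial \ln Y}{\partial \ln R}, \qquad \varepsilon_L = \frac{\partial \ln Y}{\partial \ln L},
$$

and the thesis amounts to the claim that $\varepsilon_R$ is significantly smaller than $\varepsilon_L$. If the elasticities were roughly constant, scaling one input by a factor $s$ would scale output by about $s^{\varepsilon}$. With purely hypothetical values $\varepsilon_R = 0.2$ and $\varepsilon_L = 0.6$, a tenfold increase in the input gives

$$
10^{0.2} \approx 1.58 \quad \text{versus} \quad 10^{0.6} \approx 3.98 .
$$

These numbers are made up only to show the shape of the comparison; they are not estimates.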

Thought it would be useful to pull out your plot and surrounding text, which seemed cruxy:

At first glance, the job of a scientist might seem like it leans very heavily on abstract reasoning... In such a world, AIs would greatly accelerate R&D before AIs are broadly deployed across the economy to take over more common jobs, such as retail workers, real estate agents, or IT professionals. In short, AIs would “first automate science, then automate everything else.”

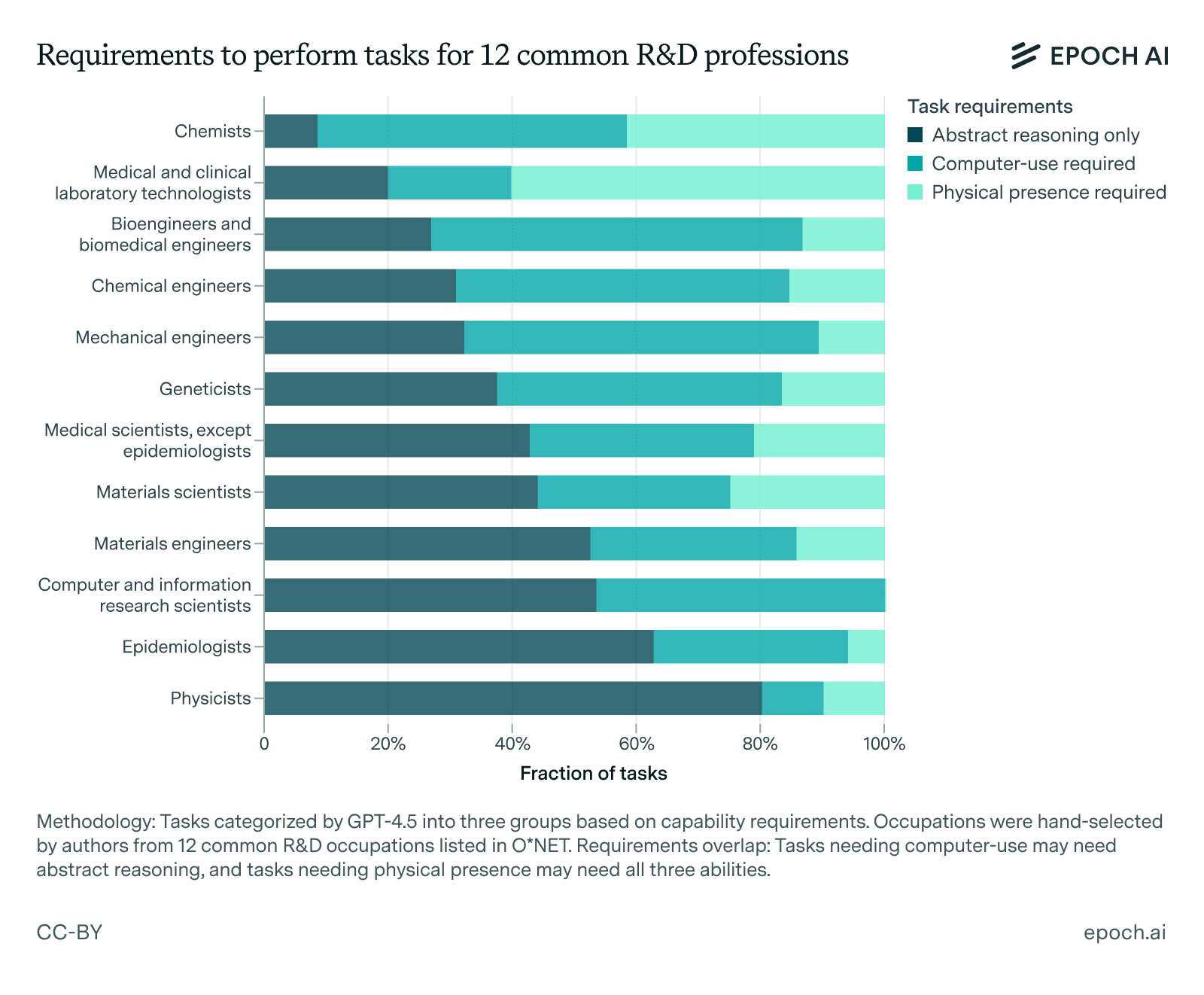

But this picture is likely wrong. In reality, most R&D jobs require much more than abstract reasoning skills. ... To demonstrate this, we used GPT-4.5 to label tasks across 12 common R&D occupations into one of three categories, depending on whether it thinks the task can be performed using only abstract reasoning skills, whether it requires complex computer-use skills (but not physical presence), or whether one needs to be physically present to complete the task. See this link to our conversation with GPT-4.5 to find our methodology and results.

This plot reveals a more nuanced picture of what scientific research actually entails. Contrary to the assumption that research is largely an abstract reasoning task, the reality is that much of it involves physical manipulation and advanced agency. To fully automate R&D, AI systems likely require the ability to autonomously operate computer GUIs, coordinate effectively with human teams, possess strong executive functioning skills to complete highly complex projects over long time horizons, and manipulate their physical environment to conduct experiments.

Yet, by the time AI reaches the level required to fully perform this diverse array of skills at a high level of capability, it is likely that a broad swath of more routine jobs will have already been automated. This contradicts the notion that AI will “first automate science, then automate everything else.” Instead, a more plausible prediction is that AI automation will first automate a large share of the general workforce, across a very wide range of industries, before it reaches the level needed to fully take over R&D.

This is a linkpost to an article that Ege Erdil and I wrote to explain an important perspective that we share regarding AI automation. I'll quote the introduction:

Here's a link to the full article.