This is a linkpost to an article that Ege Erdil and I wrote to explain an important perspective we share on AI automation. I'll quote the introduction:

A popular view about the future impact of AI on the economy is that it will be primarily mediated through AI automation of R&D. In some form or another, this view has been expressed by many influential figures in the industry:

- In his essay “Machines of Loving Grace”, Dario Amodei lists five ways in which AI can benefit humanity in a scenario where AI goes well. He considers biology R&D, neuroscience R&D, and economics R&D as three of these ways. There’s no point at which he clearly argues that AI will lead to high rates of economic growth due to being broadly deployed throughout the economy as opposed to speeding up R&D and perhaps improving economic governance.

- Demis Hassabis, CEO of DeepMind, is also bullish on R&D as the main channel through which AI will benefit society. In a recent interview, he provides specific mechanisms through which this could happen: AI could cure all diseases and “solve energy”. He mentions “radical abundance” as a possibility as well, but beyond the R&D channel doesn’t name any other way in which this could come about.

- In his essay “Three Observations”, Sam Altman takes a more moderate position and explicitly says that in some ways AI might end up like the transistor, a big discovery that scales well and seeps into every corner of the economy. However, even he singles out the impact of AI on scientific progress as likely to “surpass everything else”.

Overall, this view is surprisingly influential despite not having been supported by any rigorous economic arguments. We’ll argue in this issue that it’s also very likely wrong.

R&D is generally not as economically valuable as people assume—increasing productivity and new technologies are certainly essential for long-run growth, but the contribution of explicit R&D to these processes is smaller than people generally think. Moreover, even most of this contribution is external and not captured in profits by the company performing the R&D, reducing the incentive to deploy systems to perform R&D in the first place. This combination means most AI systems will actually be deployed and earn revenue from tasks that are unrelated to R&D, and in aggregate these tasks will be more economically valuable as well.

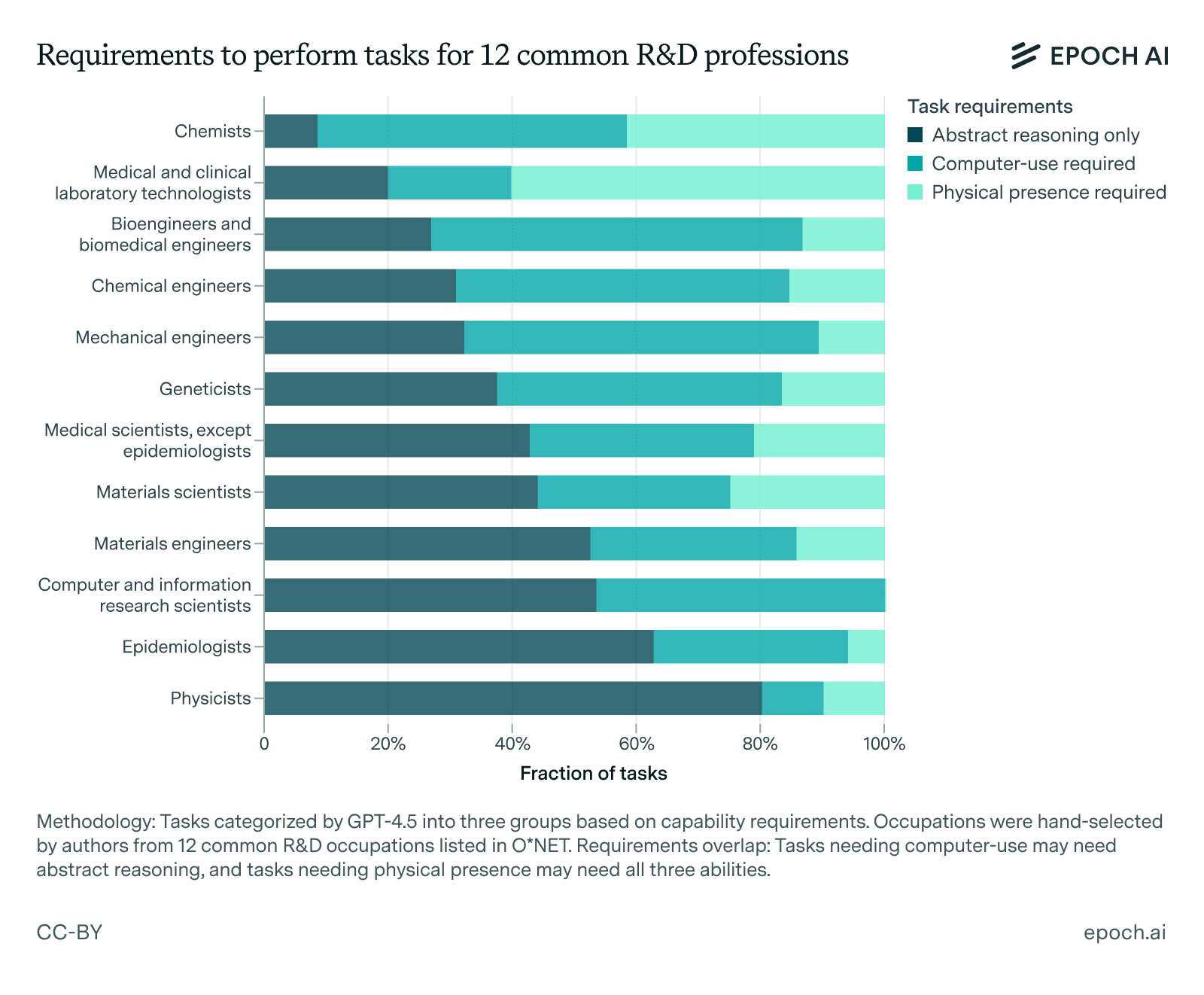

It’s also significantly harder to automate R&D jobs than it might naively seem, because most tasks in the job of a researcher are not “reasoning tasks” and depend crucially on capabilities such as agency, multimodality, and long-context coherence. Once AI capabilities are already at a point where the job of a researcher can be entirely automated, it will also be feasible to automate most other jobs in the economy, which for the above reason would most likely create much more economic value than narrowly automating R&D alone.

When we combine these two points, there’s no reason to expect most of the economic value of AI to come from R&D at any point in the future. A much more plausible scenario is that the value of AI will be driven by broad economic deployment, and while we should expect this to lead to an increase in productivity and output per person due to increasing returns to scale, most of this increase will probably not come from explicit R&D.

Here's a link to the full article.

I feel really weird about this article, because I at least spiritually agree with the conclusion: my lower bound on an AGI extinction scenario is "automated industry grows 40%/y, at first everybody becomes filthy rich, and in the next decade a necrosphere devours everything", and this scenario seems to be weirdly underdiscussed relative to "AGI develops self-sustaining supertech and kills everyone within one month" by both skeptics and doomers.

That said, I disagree with almost everything else.

You underestimate the effect of RnD automation because you seem to ignore that a lot of the bottlenecks in RnD and in the adoption of results are actually human bottlenecks. Even if we automate everything in RnD that is not free scientific creativity, we are still bottlenecked by the facts that humans can keep only 10 things in working memory, that multitasking basically doesn't exist, that you can do several hours of hard intellectual work per day at most, that it takes 28 years to grow a scientist, that there are only so many people actually capable of producing science, that science progresses one funeral at a time, et cetera, et cetera. The same goes for adoption: even if an innovation exists, there are only so many people capable of understanding it and implementing it while integrating it into an existing workflow in a maintainable way, and they have only so many productive working hours per day.

Even if we dial every other productivity factor up to infinity, there is still a hard cap on productivity growth from inherent human limitations and population. When we achieve AI automation, we replace these limitations with whatever is achievable within physical limits.

Your assumption, "everybody thinks that RnD is going to be automated first because RnD is mostly abstract reasoning", is wrong: the actual reason RnD is going to be automated relatively early is that it's very valuable, especially if you count opportunity costs. Every time you pay an AI researcher to automate a narrow task, you spend money that could have gone toward creating an army of 100k artificial researchers who could automate 100k tasks. I think this holds even if you assume pretty long timelines, because, given the human bottlenecks mentioned above, everything you can automate before RnD automation is going to be automated rather poorly (and, unlike science, you can't run self-improvement from there).