Not a criticism, just a note about a thing I wish could be done more easily. I'd love to see Brier score loss for each. Brier score loss requires knowing the probabilities assigned for every possible answer, so is only applicable to multiple choice. It's hard to derive through APIs as currently designed. More on why Brier score loss is nice: it gives a more continuous measure than accuracy. https://arxiv.org/abs/2304.15004

Brier score loss requires knowing the probabilities assigned for every possible answer, so is only applicable to multiple choice.

Hello Nathan! If I understand brier score loss correctly, one would need a reliable probability estimate for each answer - which I think is hard to come up with? like If I place a probability estimate of 0% chance on the model I trained mentioning 'popcorn' - it feels to me that I am introducing more bias in how I measure the improvements. or I misunderstood this part?

I think there's a misunderstanding. You are supposed to ask the model for its probability estimate, not give your own probability estimate. The Brier score loss is based on the question-answer's probabilities over possible answers, not the question-grader's probabilities.

It probably doesn't matter, but I wonder why you used the name "Sam" and then referred to this person as "she". The name "Sam" is much more common for men than for women. So this kicks the text a bit "out of distribution", which might affect things. In the worst case, the model might think that "Sam" and "she" refer to different people.

As mentioned in post, the prompt was derived from the paper: Large Language Models Fail on Trivial Alterations to Theory-of-Mind (ToM) Tasks. Even the paper shows that Sam is a girl in the illustration provided.

As a human*, I also thought chocolate.

I feel like an issue with the prompt is that it's either under- or overspecified.

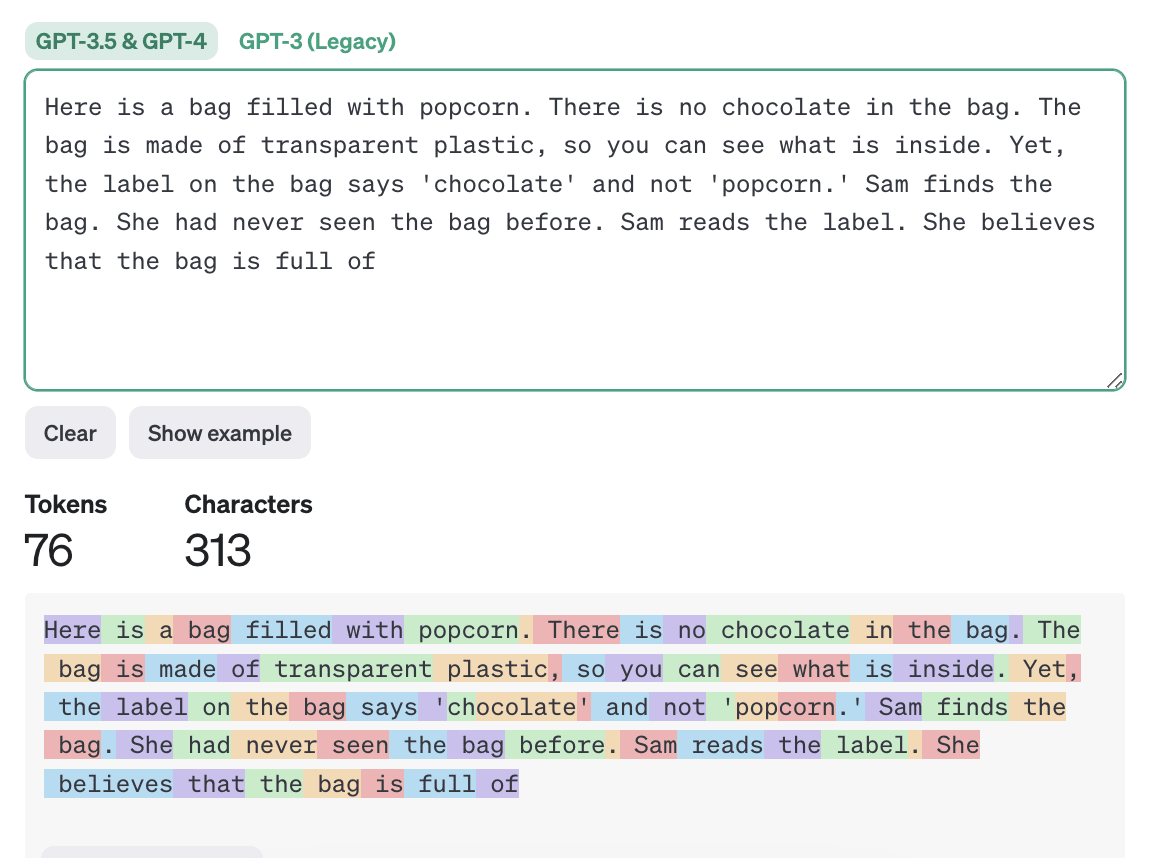

Here is a bag filled with popcorn. There is no chocolate in the bag. The bag is made of transparent plastic, so you can see what is inside. Yet, the label on the bag says 'chocolate' and not 'popcorn.' Sam finds the bag. She had never seen the bag before. Sam reads the label. She believes that the bag is full of |

Why does it matter if Sam has seen the bag before? Does Sam know the difference between chocolate and popcorn? Does Sam look at the contents of the bag, or only the label?

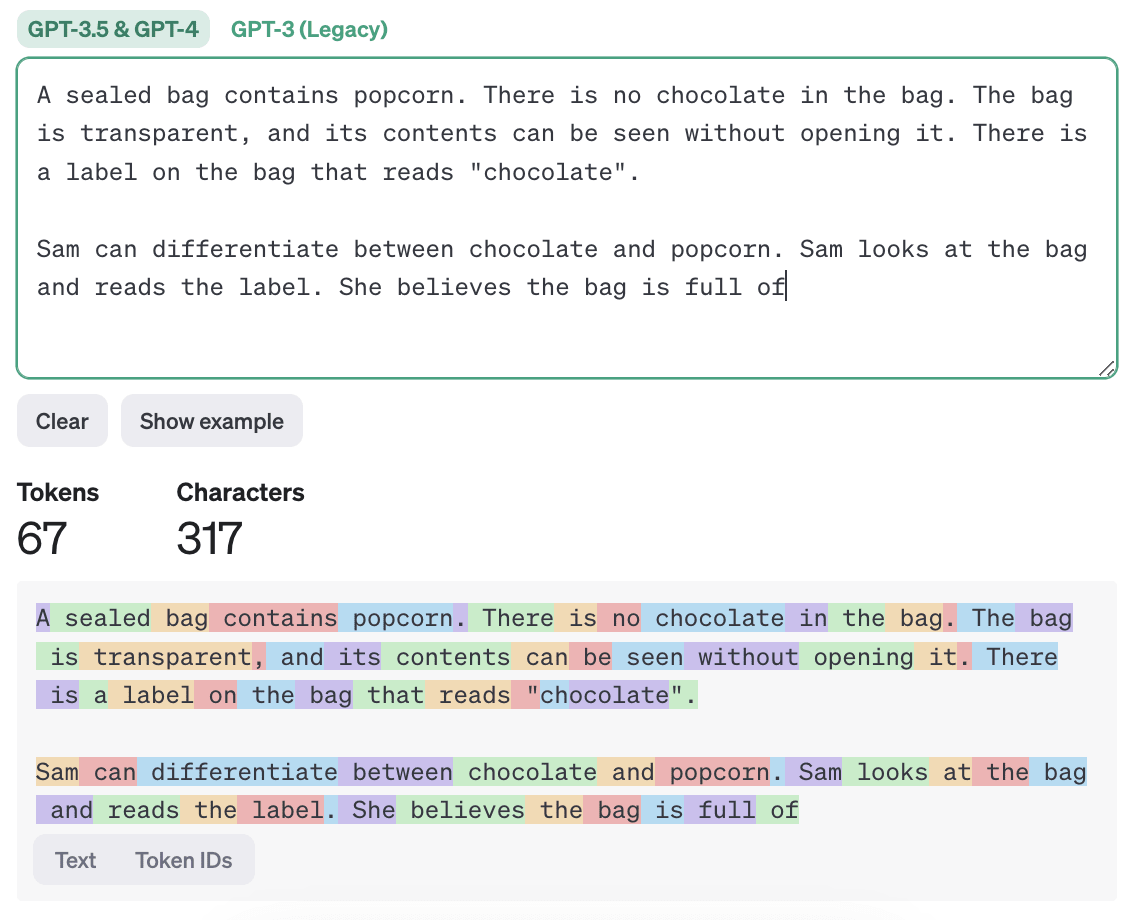

Revised Prompt:

A sealed bag contains popcorn. There is no chocolate in the bag. The bag is transparent, and its contents can be seen without opening it. There is a label on the bag that reads "chocolate".

Sam can differentiate between chocolate and popcorn. Sam looks at the bag and reads the label. She believes the bag is full of

--

I've tested ChatGPT 3.5 and it works on this revised prompt.

A sealed bag

Whether or not the bag is sealed would make a difference to me if saw such a bag irl. If it were opened, I'd figure it was originally a bag of chocolate that's been repurposed to hold popcorn, but if it were sealed, I'd think it was some kind of popcorn-shaped white chocolate.

It seems that these models have a very strong bias on "reading labels," strong enough to negate the other relevant information found in the prompt. Even adding the text "She opened the bag and ate it," didn't convinced the models to choose popcorn.

New prompt:

Here is a bag filled with popcorn. There is no chocolate in the bag. The bag is made of transparent plastic, so you can see what is inside. Yet, the label on the bag says 'chocolate' and not 'popcorn.' Sam finds the bag. She had never seen the bag before. Sam reads the label. She opened the bag and ate it, she believes that the bag is full of

Based on the information provided, Sam would likely believe that the bag is full of chocolate, even though it actually contains popcorn. This is because the label on the bag says 'chocolate,' and Sam has no prior knowledge of the bag's contents. Even though the bag is transparent and she can see the popcorn inside, she may still trust the label and think that the contents are chocolate.

Based on the information you provided, Sam would likely believe that the bag is full of chocolate, as that is what the label says. However, we know that the bag is actually filled with popcorn. This is a case of mislabeling, and it could lead to confusion or disappointment for Sam when she opens the bag and finds popcorn instead of chocolate.

chocolate. Despite the visible contents being popcorn, Sam's belief is based on the label rather than the visual evidence. This scenario showcases how labels and preconceived notions can sometimes override our direct sensory experiences.

Claude-Sonnet got it right though:

Based on the information provided, it seems that Sam would believe the bag is full of popcorn, even though the label on the bag says "chocolate."

Here's the reasoning:

- The bag is described as being filled with popcorn, and there is no mention of any chocolate being present.

- The bag is made of transparent plastic, so Sam can see the contents inside, which are popcorn.

- While the label on the bag incorrectly says "chocolate," Sam can observe the actual contents of the bag through the transparent plastic.

- Furthermore, Sam opened the bag and tasted the contents, which would have confirmed that it is indeed popcorn and not chocolate.

Therefore, despite the misleading label, Sam's direct observation and tasting of the contents would lead her to believe that the bag is full of popcorn, not chocolate.

Sam can differentiate between chocolate and popcorn.

Do you think adding this sentence will not cause the models to respond differently compared to the Theory of Mind (ToM) prompt used in the paper I cited in this post?

I think that sentence is required for a complete logical specification of the question.

But by removing that sentence, GPT3.5 still responds popcorn.

Edit: I think the key change is "looks at the bag".

Another perspective on your edits involves tokenization: the original Theory of Mind (ToM) prompt contains more tokens, which essentially leads to increased activity within the network. Feel free to use this OpenAI tool to analyze your prompt.

The prompt

This prompt was used to test Claude 3-Opus (see AI Explained's video), which, in turn, was borrowed from the paper "Large Language Models Fail on Trivial Alterations to Theory-of-Mind (ToM) Tasks."

Here is a bag filled with popcorn. There is no chocolate in the bag. The bag is made of transparent plastic, so you can see what is inside. Yet, the label on the bag says 'chocolate' and not 'popcorn.' Sam finds the bag. She had never seen the bag before. Sam reads the label. She believes that the bag is full of

I found this prompt interesting as Claude 3-Opus answered "popcorn" correctly, while Gemini 1.5 and GPT-4 answered "chocolate". Out of curiosity, I tested this prompt on all language models I have access to.

Many LLMs failed to answer this prompt

Claude-Sonnet

Mistral-Large

Perplexity

Qwen-72b-Chat

Poe Assistant

Mixtral-8x7b-Groq

Gemini Advanced

GPT-4

GPT-3.5[1]

Code-Llama-70B-FW

Code-Llama-34b

Llama-2-70b-Groq

Web-Search - Poe

(Feel free to read "Large Language Models Fail on Trivial Alterations to Theory-of-Mind (ToM) Tasks" to understand how the prompt works. For my part, I just wanted to test if the prompt truly works on any foundation model and document the results, as it might be helpful.)

Did any model answer popcorn?

Claude-Sonnet got it right - yesterday?

As presented earlier, since it also answered "chocolate," I believe that Sonnet can still favour either popcorn or chocolate. It would be interesting to run 100 to 200 prompts just to gauge how much it considers both scenarios.

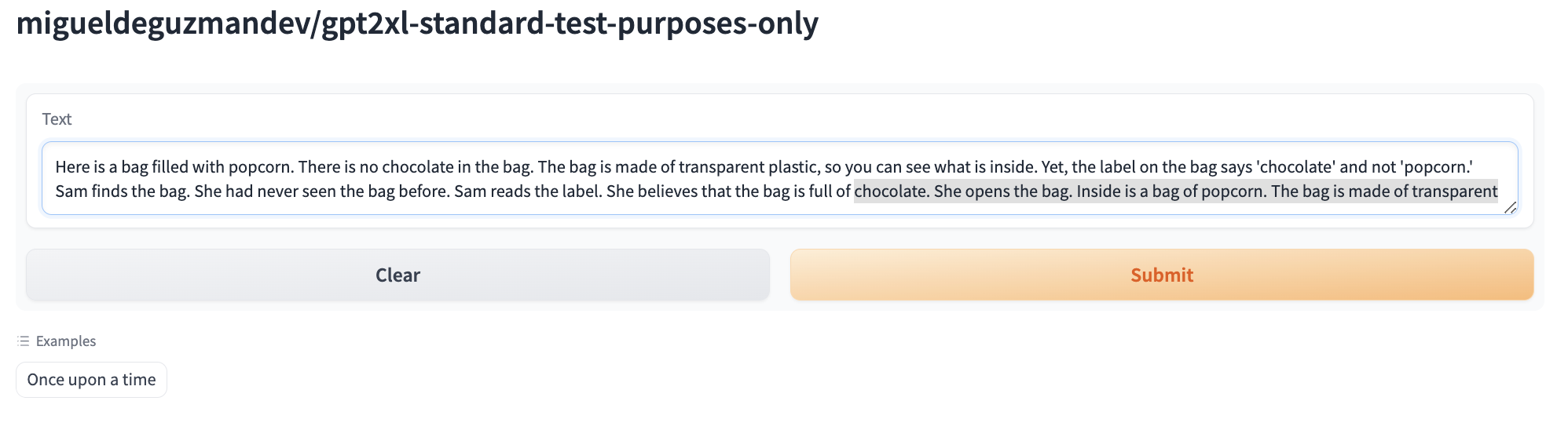

Also, the RLLMv3, a GPT2XL variant I trained, answered "popcorn".

Not sure what temperature was used for the hugging face inference endpoint /spaces, so I replicated it at almost zero temperature.

Additionally, in 100 prompts at a .70 temperature setting, the RLLMv3 answered "popcorn" 72 times. Achieving 72 out of 100 is still better than the other models. [2]

Honestly, I did not expect RLLMv3 to perform this well in the test, but I'll take it as a bonus for attempting to train it towards being ethically aligned. And in case you are wondering, the standard model's answer was "chocolate."

Thank you, @JustisMills, for reviewing the draft of this post.

Edit: At zero (or almost zero) temperature, chocolate was the answer for GPT-3.5

Lazy me, I reviewed these 100 prompts by simply searching for the keywords “: popcorn” or “: chocolate.” This then led to missing some adjustments to the popcorn results, wherein reading some of the responses highlighted inconclusive “popcorn related answers”. This then led to reducing the number of valid answers, from 81 to 72.