I strongly believe that, given the state of things, we really should spend way more on higher-quality people and see what happens. Up to and including paying Terry Tao 10 million dollars. I would like to emphasize I am not joking about this.

I've heard lots of objections to this idea, and don't think any of them convince me it is not worth actually trying this.

One objection I can conceive of why they're not doing this is that most Fields medalists probably already have standing offers from places like Jane Street, and aren't taking them because they prefer academia. But if that were the case, and they'd already tried, that just tells me that MIRI might be really bad at recruiting. There have to be a dozen different reasons someone who wouldn't work at Jane Street would still be willing to do legitimate maths research, for the same amount of money as they'd get in finance, to save the damn world.

I'm pretty sure MIRI has on the order of ten billion dollars at its disposal. If this is the case, then they can outbid Jane Street. In fact, trying to snipe Jane Street engineers isn't a bad recruitment strategy, as a lot of the filtering has already been done for you.

In a March 15th episode of the DeepMind podcast, Demis Hassabis said he has talked to Terence Tao about working on AI safety:

I always imagine that as we got closer to the sort of gray zone that you were talking about earlier, the best thing to do might be to pause the pushing of the performance of these systems so that you can analyze down to minute detail exactly and maybe even prove things mathematically about the system so that you know the limits and otherwise of the systems that you're building. At that point I think all the world's greatest minds should probably be thinking about this problem. So that was what I would be advocating to you know the Terence Tao’s of this world, the best mathematicians. Actually I've even talked to him about this—I know you're working on the Riemann hypothesis or something which is the best thing in mathematics but actually this is more pressing. I have this sort of idea of like almost uh ‘Avengers assembled’ of the scientific world because that's a bit of like my dream.

I have zero experience in hiring people; I only know how things seem from the opposite side (as a software developer). The MIRI job announcement seems optimized to scare people like me away. It involves a huge sunk cost in time -- so you only take it if you are 100% sure that you want the job and you don't mind the (quite real) possibility of paying the cost and being rejected at the end of the process anyway. If that is how MIRI wants to filter their applicant pool, great. Otherwise, they seem utterly incompetent in this aspect.

For starters, you might look at Stack Exchange and check how potential employees feel about being given "homework" at an interview. Your process is worse than that. Because, if you instead invited a hundred software developers to a job interview, gave them extensive homework, and then every single one of them ghosted you... at least you could get a suspicion that you were doing something wrong, and by experimenting you could find out what exactly it was. If you instead announce the homework up front, sure it is an honest thing to do, but then no one ever calls you, and you think "gee, no one is interested in working on AI alignment, I wonder why".

How to do i...

I recently went through the job search process as a software engineer who's had some technical posts approved on the Alignment Forum (really only one core insight, but I thought it was valuable). The process is so much better for standard web development jobs, you genuinely cannot possibly imagine, and I was solving a problem MIRI explicitly said they were interested in, in my Alignment Forum posts. It took (no joke) months to get a response from anyone at MIRI and that response ended up being a single dismissive sentence. It took less than a month from my first sending in a Software Engineer job application to a normal company to having a job paying [redacted generous offer].

My general impression based on numerous interactions is that many EA orgs are specifically looking to hire and work with other EAs, many longtermist orgs are looking to specifically work with longtermists, and many AI safety orgs are specifically looking to hire people who are passionate about existential risks from AI. I get this to a certain extent, but I strongly suspect that ultimately this may be very counterproductive if we are really truly playing to win.

And it's not just in terms of who gets hired. Maybe I'm wrong about this, but my impression is that many EA funding orgs are primarily looking to fund other EA orgs. I suspect that a new and inexperienced EA org may have an easier time getting funded to work on a given project than a highly experienced non-EA org would if it applied for funding to pursue the same idea. (Again, entirely possible I'm wrong about that, and apologies to EA funding orgs if I am mischaracterizing how things work. On the other hand, if I am wrong about this then that is an indication that EA orgs might need to do a better job communicating how their funding decisions are made, because I am virtually positive that this is the impression that many other ...

There is a precedent for doing secret work of high strategic importance, which is every intelligence agency and defense contractor ever.

Yes, but they don't know how to hire other people to do that. Especially, they don't know how to get people who come mainly because they are paid a lot of money to care more about things besides that money.

The objections that I and someone in the field of talent acquisition and retention received showed a failure to go meta. If the problem is not knowing how to turn money into talent, then you hire for that. Somewhere in the world there is someone whose time is very valuable and who is among the best in the world at hiring. Pay them for their time.

It is not obvious to me that "hiring" is a generic enough thing that we can be confident that there's someone who's "best at hiring" and that MIRI or another similar organization would do well by hiring them.

It could be that what you need to do to hire people in class X to do work Y varies wildly according to what X and Y are, and that expertise in answering that question for some values of X and Y doesn't translate well to others.

This:

Is either a recruitment ad for a cult, or a trial for new members of an exclusive club. It's certainly not designed to intrigue what would be top applicants.

If you think the reason MIRI hasn't paid a Fields medalist $500k a year is that they are averse to doing weird stuff... have you ever met them? They are the least-averse-to-doing-weird-stuff institution I have ever met.

Ah, OK. Fair enough then. I think I get why they seem like a cult from the outside, to many people at least. I think where I'm coming from in this thread is that I've had enough interactions with enough MIRI people (and read and judged enough of their work) to be pretty confident that they aren't a cult and that they are genuinely trying to save the world from AI doom and that some of them at least are probably more competent than me, generally speaking, and also exerting more effort and also less bound by convention than me.

I agree that it seems like they should be offering Terence Tao ten million dollars to work with them for six months, and I don't know why they haven't. (Or maybe they did and I just didn't hear about it?) I just have enough knowledge about them to rule out the facile "oh they are incompetent" or "oh it's because they are a cult" explanations. I was trying to brainstorm more serious answers (answers consistent with what I know about them) based on the various things I had heard (e.g. the experience of OpenPhil trying something similar, which led to a bunch of high-caliber academics producing useless research) and got frustrated with all the facile/cult accusations.

Being weird in your heavily filtered weirdness-optimized social environment is very different from being weird out there in the real world with the normies, though.

The "contribute to the Alignment Forum" path isn't easier than traveling to a workshop, either. You're supposed to start an alignment blog, or publish alignment papers, or become a regular on LW just to have a chance at entering the hallowed ranks of the Alignment Forum, which, in turn, gives you a chance at ascending to the sanctum sanctorum of MIRI.

This is what prospective applicants see when they first try to post something to the Alignment Forum:

We accept very few new members to the AI Alignment Forum. Instead, our usual suggestion is that visitors post to LessWrong.com, a large and vibrant intellectual community with a strong interest in alignment research, along with rationality, philosophy, and a wide variety of other topics.

Posts and comments on LessWrong frequently get promoted to the AI Alignment Forum, where they'll automatically be visible to contributors here. We also use LessWrong as one of the main sources of new Alignment Forum members.

If you have produced technical work on AI alignment, on LessWrong or elsewhere -- e.g., papers, blog posts, or comments -- you're welcome to link to it here so we can take it into account in any future decisions to expand the ranks of the AI Alignment Forum.

Not just physically travel to a MIRI research workshop - apply to attend a MIRI workshop. Can't have just any riff-raff coming in, after all.

By this stage of their careers, they already have those bits of paper. MIRI are asking people who don't a priori highly value alignment research to jump through extra hoops they haven't already cleared, for what they probably perceive as a slim chance of a job outside their wheelhouse. I know a reasonable number of hard science academics, and I don't know any who would put in that amount of effort in the application for a job they thought would be highly applied for by more qualified applicants. The very phrasing makes it sound like they expect hundreds of applicants and are trying to be exclusive. If nothing else is changed, that should be.

Just to be clear, there was a thriving field of nuclear engineering, and Los Alamos was run mostly by leading figures in that field. Also, money was never a constraint on the Manhattan Project and its success had practically nothing to do with the availability of funding, but instead all to do with the war, the involvement of a number of top scientists, and the existence of a pretty concrete engineering problem that one could throw tons of manpower at.

The Manhattan Project itself did not develop any substantial nuclear theory, and was almost purely an engineering project. I do not know what we would get by emulating it at this point in time. The scientists involved in the Manhattan Project did not continue running things like the Manhattan Project, they went into other institutions optimized for intellectual process that were not capable of absorbing large amounts of money or manpower productively, despite some of them likely being able to get funding for similar things (some of them went on and built giant particle colliders, though this did not generally completely revolutionize or drastically accelerate the development of new scientific theories, though it sure was helpful).

Universities aren't known for getting things done. Corporations are. Are you trying to signal exclusivity and prestige, or are you trying to save the lightcone?

Universities are pretty well known for getting things done. Most Nobel Prize-winning work happens in them, for instance. It's just that the things they do are not optimized for being the things corporations do.

I'm not saying we shouldn't be actually trying to hire people. In fact I have a post from last year saying that exact thing. But if you think corporations are the model for what we should be going for, I think we have very different mechanistic models of how research gets done.

Getting an advanced degree in, say, CS, qualifies me to work for many different companies. Racking up karma posting mathematically rigorous research on the Alignment Forum qualifies me to work at one (1) place: MIRI. If I take the "PhD in CS" route, I have power to negotiate my salary, to be selective about who I work for. Every step I take along the Alignment Forum path is wasted[1] unless MIRI deigns to let me in.

[1] Not counting positive externalities.

I'm under the impression that that's partially a sign of civilizational failure from metastasizing bureaucracies. I've always heard that the ultra-successful Silicon Valley companies never required a degree (and also that that meritocratic culture has eroded and been partially replaced by credentialism, causing stasis).

EDIT: to be clear, this means I disagree with the ridiculous hyperbole upthread of it being "cultish", and in a lot of ways I'm sure the barriers to employment in traditional fields are higher. Still, as an outsider who's missing lots of relevant info, it does seem like it should be possible to do a lot better.

I think you're buying the hype of how much Alignment Forum posts help you even get the attention of MIRI way too much. I have a much easier time asking university departments for feedback, and there is a much smoother process for applying there.

Those multiple degrees are high cost but very low risk, because even if you don't get into the university department, these degrees will give you lots of option value, while a 6 month gap in your CV trying to learn AI Safety on your own does not. More likely you will not survive the hit on your mental health.

I personally decided not to even try AI Safety research for this reason.

These less depressed people you talk about, are they already getting paid as AI safety researchers, or are they self-studying to (hopefully) become AI safety researchers?

In any case, I'm clearly generalising from my own situation, so it may not extend very far. To flesh out this data point: I had 2 years of runway, so money wasn't a problem, but I already felt beaten down by LW to the extent that I couldn't really take any more hits to my self-esteem, so I couldn't risk putting myself up for rejection again. That's basically why I mostly left LW.

Is either a recruitment ad for a cult,

Oh come, don't be hyperbolic. The main things that make a cult a cult are absent. And I'm under the impression that plenty of places have a standard path for inexperienced people that involves an internship or whatever. And since AI alignment is an infant field, no one has the relevant experience on their resumes. (The OP mentions professional recruiters, but I would guess that the skill of recruiting high-quality programmers doesn't translate to recruiting high-quality alignment researchers.)

I do agree that, as an outsider, it seems like it should be much more possible to turn money into productive-researcher-hours, even if that requires recruiting people at Tao's caliber, and the fact that that's not happening is confusing & worrying to me. (Though I do feel bad for the MIRI people in this conversation; it's not entirely fair, since if somehow they in fact have good reason to believe that the set of people who can productively contribute is much tinier than we'd hope (e.g. Tao said no, and literally everyone else isn't good enough), they might have to avoid explicitly explaining that to avoid rudeness and bad PR.)

I'm going to keep giving ...

Imagine you're a 32-year-old software engineer with a decade of quality work experience and a Bachelor's in CS. You apply for a job at Microsoft, and they tell you that since their tech stack is very unusual, you have to do a six-month unpaid internship as part of the application process, and there is no guarantee that you get the job afterwards.

This is not how things work. You hire smart people, then you train them. It can take months before new employees are generating value, and they can always leave before their training is complete. This risk is absorbed by the employer.

they have to go through a 4-8 year training process that you have to pay years' worth of salary to go to

They go through a 4-8 year credentialing process that is a costly and hard-to-Goodhart signal of intelligence, conscientiousness, and obedience. The actual learning is incidental.

Based on what’s been said in this thread, donating more money to MIRI has precisely zero impact on whether they achieve their goals, so why continue to donate to them?

FWIW I don't donate much to MIRI anymore myself, precisely because they aren't funding-constrained. And a MIRI employee even advised me as much.

From a rando outsider's perspective, MIRI has not made any public indication that they are funding-constrained, particularly given that their donation page says explicitly that:

We’re not running a formal fundraiser this year but are participating in end-of-year matching events, including Giving Tuesday.

Which more or less sounds like "we don't need any more money but if you want to give us some that's cool"

I think people in this thread vastly overestimate how much money MIRI has, and underestimate how much top people would cost.

This implies that MIRI is very much funding-constrained, and unless you have elite talent then you should earn to give to organizations that will recruit those with elite talent. This applies to me and most people reading this, who are only around 2-4 sigmas above the mean.

Could someone from MIRI step in here to explain why this is not being done? This seems like an extremely easy avenue for improvement.

This seems like an extremely easy avenue for improvement.

First, if you think this because you have experience recruiting people for projects like this (i.e. it "seems easy" because you have an affordance for it, not because you don't know what the obstacles are), please reach out to someone at MIRI (probably Malo, maybe Nate), and also PM me so I can bug them until they interview you.

As for why it hasn't happened yet:

I no longer work for MIRI, and so am not speaking for current-MIRI, but when I did work at MIRI I was one of the three sort-of-responsible-for-recruitment employees. (Buck Shlegeris was the main recruiter, and primarily responsible for engineering hires; I was in charge of maintaining the agent foundations pipeline and later trying to hire someone for the Machine Learning Living Library role, tho I think I mostly added value by staffing AIRCS workshops with Buck; Colm O'Riain took over maintaining that pipeline, among doing other things. Several MIRI board members were involved with hiring efforts, but I'm not quite sure how to compare that.)

I think the primary reason this got half-hearted investment was that we didn't have strong management capacity, and so wanted to ...

If we expect a Pareto distribution to apply, then the folks who will really move the needle 10x or more will likely need to be significantly smarter and more competent than the current leadership of MIRI. There likely is a fear factor, found in all organizations, of managers being afraid to hire subordinates who are noticeably better than them, as they could potentially replace the incumbents; see Moral Mazes.

This type of mediocrity scenario is usually only avoided if turnover is mandated by some external entity, or if management owns some stake, such as shares, that increases in value from a more competent overall organization.

Or of course if the incumbent management are already the best at what they do. This doesn’t seem likely as Eliezer himself mentioned encountering ’sparkly vampires’ and so on that were noticeably more competent.

The other factor is that now we are looking at a group that at the very least could probably walk into a big tech company or a hedge fund like RenTech, D.E. Shaw, etc., and snag a multimillion-dollar compensation package without breaking a sweat, or who are currently doing so. Or likewise land on the tenure track at a top-tier school.

> Give Terence Tao $500,000 to work on AI alignment six months a year, leaving him free to research crazy Navier-Stokes/Halting problem links the rest of his time... If money really isn't a problem, this kind of thing should be easy to do.

Literally that idea has been proposed multiple times before that I know of, and probably many more times many years ago before I was around.

What was the response? (I mean, obviously it was "not interested", otherwise it would've happened by now, but why?)

[edit: my thoughts are mostly about AI alignment and research. Some discussion seems to be about "EA", which likely DOES contain a bunch of underfunded "can probably buy it" projects, so I'd be surprised if there's a pile of unspent money unsure how to hire mosquito-net researchers or whatever. ]

This doesn't seem that surprising to me - the alignment problem in employees remains unsolved, and hiring people to solve AI Alignment seems one level abstracted from that. IMO (from outside), MIRI is still in the "it takes a genius missionary to know what to do next" phase, not the "pay enough and it'll succeed" one.

I suspect that even the framing of the question exposes a serious underlying confusion. There just isn't that much well-defined "direct work" to buy. People in the in-crowd around here often call something a "technical problem" when they mean "philosophical problem requiring rigor". Before you can assign work or make good use of very smart workers who don't join your cult, but just want a nice paycheck for doing what they're good at, you need enough progress on monitoring and measurement of alignment that you have any idea if they're helping or hu...

Even as someone generally interested in alignment while doing a job search, I had trouble seeing anything that'd work for me (though that is also partly because I live in Europe).

I commented on that thread, and also wrote this post a few months ago, partially to express some thoughts on the announcement of Lightcone (& their compensation philosophy). Recently I've even switched to looking for direct work opportunities in the AI alignment sphere.

As is usual with ACX posts on the subject of AI alignment, the comments section has a lot of people who are totally unfamiliar with the space and are therefore extremely confident that various inadequacies (such as the difficulties EA orgs have in hiring qualified people) can easily be solved by doing the obvious thing. This is not to say that everything they suggest is wrong. Some of their suggestions are not obviously wrong. But that's mostly by accident.

I agree that "pay people market rate" is a viable strategy and should be attempted. At the very least I haven't yet seen many strong arguments for what benefit is accrued by paying below-market rates (in this specific set of circumstances), and thus default to "don't shoot yourself in the foot". I also think that most people are deeply confused about the kinds of candidates that MIRI (& similarly focused orgs) are l...

"ok, bite the bullet, and spend 6 months - 2 years training interested math/cs/etc students to be competent researchers" - but have they tried this with Terry Tao?

People have tried to approach top people in their field and offer them >$500k/yr salaries, though not at any scale and not to a ton of people. Mostly people in ML and CS are already used to seeing these offers and frequently reject them, if they are in academia.

There might be some mathematicians for whom this is less true. I don't know specifically about Terence Tao. Sometimes people also react quite badly to such an offer and it seems pretty plausible they would perceive it as a kind of bribe and making such an offer could somewhat permanently sour relations, though I think it's likely one could find a framing for making such an offer that wouldn't run into these problems.

My takeaway from this datapoint is that people are motivated by status more than money. This suggests ramping up advocacy via a media campaign, and making alignment high-status among the scientifically-minded (or general!) populace.

I've looked at these postings a few times. I kind of assumed that the organization was being run as a welfare program for members of a specific social club, that they were specifically excluding anyone who wasn't already central to that community, and that the primary hiring criterion was social capital.

So the perfect candidate is 'person already in this community with the highest social capital, and a compelling need for a means of support'.

OpenAI is an example of a project that threw a bunch of money at hiring people to do something about AI alignment. If you follow the perspective of Eliezer, they increased AI risk in the process.

You can easily go through LinkedIn and hire people to do alignment research. On the other hand, it's not easy to make sure that you are producing a net benefit on the resulting risk and not just speeding up AI development.

Intuitively, I expect that gathering really talented people and telling them to do stuff related to X isn't that bad of a mechanism for getting X done. The Manhattan Project springs to mind.

The Manhattan Project is not one that's famous for reducing X-risk.

My assertion is that spending money hiring very high quality people will get you technical progress. Whether that's technical progress on nuclear weapons, AI capabilities, or AI alignment is a function of what you incentivize the researchers to work on.

Yes, OpenAI didn't effectively incentivize AI safety work. I don't look at that and conclude that it's impossible to incentivize AI safety work properly.

The takeaway isn't that it "isn't possible", since one failure can't possibly speak to all possible approaches. However, it does give you evidence about the kind of thing that's likely to happen if you try -- and apparently that's "making things worse". Maybe next time goes better, but it's not likely to go better on its own, or by virtue of throwing more money at it.

The problem isn't that you can't buy things with money, it's that you get what you pay for and not (necessarily) what you want. If you give a ten million dollar budget to someone to buy predictions, then they might use it to pay a superforecaster to invest time into thinking about their problem, they might use it to set up and subsidize a prediction market, or they might just use it to buy time from the "top of the line" psychics. They will get "predictions" regardless, but the latter predictions do not get better simply by throwing more money at the problem. If you do not know how to buy results themselves, then throwing more money at the problem is just going to get you more of whatever it is you are buying -- which apparently wasn't AI safety last time.

Nuclear weapon research is fairly measurable, and therefore fairly buyable. AI capability research is too, at least if you're looking for marginal improvements. AI alignment research is much harder to measure, and so you're going to have a much harder time buying what you actually want to buy.

My 2 cents:

If you have high-quality people working on a project, and you are already paying them "plenty of money," you can increase their productivity by spending money to make sure they aren't wasting any of their 24 hours/day doing things they don't want to do. People are reluctant to spend "their own" money to hire a driver/maid/personal secretary/errand boy full time, but those are things that can be provided as an in-kind transfer. We know it works, because that's what Hollywood does: the star actors and directors have legions of assistants who anticipate and fulfil their needs so the talent can focus on the task.

As of October, MIRI has shifted its focus. See their announcement for details.

I looked up MIRI's hiring page and it's still in about the same state. This kind of makes sense given the FTX implosion. But I would ask whether MIRI is unconcerned with the criticism it received here and/or actively likes their approach to hiring? We know Eliezer Yudkowsky, who's on their senior leadership team and board of directors, saw this, because he commented on it.

I found it odd that 3/5 members of the senior leadership team, Malo Bourgon, Alex Vermeer, and Jimmy Rintjema...

Yes, I agree with you. I didn't bring it up in the original comment because I think the primary bottleneck is not just "insufficient # of people who are smart enough to do this work", but the combination of "insufficient # of people who are smart enough, epistemically grounded enough, motivated enough, and capable-of-novel-thoughts enough". My main point is that there are plenty of people who pass the bar on g-factor but fail one or more of the other criteria.

motivated enough

Feed them amphetamines.

capable-of-novel-thoughts enough

Feed them psychedelics.

I'm only half kidding.

Eliezer already addressed this in his writing, no? He used to think that we were in the "Manhattan Project" phase where throwing gobs of money at the problem would make something happen. Then he realized he was mistaken and we aren't nearly that far along in rigorously understanding reasoning. We're at the phase where if you need to be told what to do/work-on/think-about it'd be hard to provide that direction (at least in the employee or contract sense). It's unclear to me if 10-15 years of progress have changed that, but it seems l...

For what it's worth, I went to MIRI's Summer fellow research program thing. And if there was a permanent version of that, I would definitely be applying. I'm on a PhD course because I didn't see any big "Want to work on alignment research" posters.

Generally, I agree that exploring more effective ways to scale things up is a good idea - including radical reassessment of recruitment.

However, there's a fundamental point that ought to be borne in mind when making arguments along the lines of [structures like X solve problems well]:

- We did not choose this problem.

- We don't get to change our minds and pick another problem if it looks difficult/impossible.

[There's a sense in which we chose the AGI problem, and safety/alignment is just a sub-problem that comes up, but I don't think that's a sensible take on t...

Speaking to the value of secrecy and how this disincentivizes the make-it-prestigious|hire-lots-of-talent path, perhaps the X-risk family of EA should throw money at mechanism design experts and research people from DARPA or the intelligence community for proposals on building highly secure research orgs, then throw money at talent under the good-secrecy org.

It isn't as though "we need you to help stop the AI apocalypse" is that different a pitch from "we need you to help stop the bioweapon apocalypse" or "we need you to help stop the nuclear apocalypse" p...

The purpose of this post is to call attention to a comment thread that I think needs it.

seed:

Wait, I thought EA already had $46 billion they didn't know where to spend, so I should prioritize direct work over earning to give? https://80000hours.org/2021/07/effective-altruism-growing/

rank-biserial:

I thought so too. This comment thread on ACX shattered that assumption of mine. EA institutions should hire people to do "direct work". If there aren't enough qualified people applying for these positions, and EA has 46 billion dollars, then their institutions should (get this) increase the salaries they offer until there are.

To quote EY:

Excerpts From The ACX Thread:[1]

Daniel Kokotajlo:

For those trying to avert catastrophe, money isn't scarce, but researcher time/attention/priorities is. Even in my own special niche there are way too many projects to do and not enough time. I have to choose what to work on and credences about timelines make a difference.

Daniel Kirmani:

I don't get the "MIRI isn't bottlenecked by money" perspective. Isn't there a well-established way to turn money into smart-person-hours by paying smart people very high salaries to do stuff?

Daniel Kokotajlo:

My limited understanding is: It works in some domains but not others. If you have an easy-to-measure metric, you can pay people to make the metric go up, and this takes very little of your time. However, if what you care about is hard to measure / takes lots of time for you to measure (you have to read their report and fact-check it, for example, and listen to their arguments for why it matters) then it takes up a substantial amount of your time, and that's if they are just contractors who you don't owe anything more than the minimum to.

I think another part of it is that people just aren't that motivated by money, amazingly. Consider: If the prospect of getting paid a six-figure salary to solve technical alignment problems worked to motivate lots of smart people to solve technical alignment problems... why hasn't that happened already? Why don't we get lots of applicants from people being like 'Yeah I don't really care about this stuff I think it's all sci-fi but check out this proof I just built, it extends MIRI's work on logical inductors in a way they'll find useful, gimme a job pls.' I haven't heard of anything like that ever happening. (I mean, I guess the more realistic case of this is someone who deep down doesn't really care but on the exterior says they do. This does happen sometimes in my experience. But not very much, not yet, and also the kind of work these kinds of people produce tends to be pretty mediocre.)

Another part of it might be that the usefulness of research (and also manager/CEO stuff?) is heavy-tailed. The best people are 100x more productive than the 95th percentile people who are 10x more productive than the 90th percentile people who are 10x more productive than the 85th percentile people who are 10x more productive than the 80th percentile people who are infinitely more productive than the 75th percentile people who are infinitely more productive than the 70th percentile people who are worse than useless. Or something like that.

Anyhow it's a mystery to me too and I'd like to learn more about it. The phenomenon is definitely real but I don't really understand the underlying causes.

Melvin:

I mean, does MIRI have loads of open, well-paid research positions? This is the first I'm hearing of it. Why doesn't MIRI have an army of recruiters trolling LinkedIn every day for AI/ML talent the way that Facebook and Amazon do?

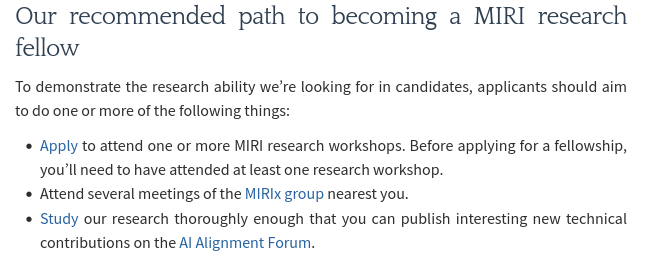

Looking at MIRI's website it doesn't look like they're trying very hard to hire people. It explicitly says "we're doing less hiring than in recent years". Clicking through to one of the two available job ads ( https://intelligence.org/careers/research-fellow/ ) it has a section entitled "Our recommended path to becoming a MIRI research fellow" which seems to imply that the only way to get considered for a MIRI research fellow position is to hang around doing a lot of MIRI-type stuff for free before even being considered.

None of this sounds like the activities of an organisation that has a massive pile of funding that it's desperate to turn into useful research.

Daniel Kokotajlo:

I can assure you that MIRI has a massive pile of funding and is desperate for more useful research. (Maybe you don't believe me? Maybe you think they are just being irrational, and should totally do the obvious thing of recruiting on LinkedIn? I'm told OpenPhil actually tried something like that a few years ago and the experiment was a failure. I don't know but I'd guess that MIRI has tried similar things. IIRC they paid high-caliber academics in relevant fields to engage with them at one point.)

Again, it's a mystery to me why it is, but I'm pretty sure that it is.

Some more evidence that it's true:

--Tiny startups beating giant entrenched corporations should NEVER happen if this phenomenon isn't real. Giant entrenched corporations have way more money and are willing to throw it around to improve their tech. Sure maybe any particular corporation might be incompetent/irrational, but it's implausible that all the major corporations in the world would be irrational/incompetent at the same time so that a tiny startup could beat them all.

--Similar things can be said about e.g. failed attempts by various governments to make various cities the "new silicon valley" etc.

Maybe part of the story is that research topics/questions are heavy-tailed-distributed in importance. One good paper on a very important question is more valuable than ten great papers on a moderately important question.

Melvin:

Maybe they're not being irrational, they're just bad at recruiting. That's fine, that's what professional recruiters are for. They should hire some.

If MIRI wants more applicants for its research fellow positions it's going to have to do better than https://intelligence.org/careers/research-fellow/ because that seems less like a genuine job ad and more like an attempt to get naive young fanboys to work for free in the hopes of maybe one day landing a job.

Why on Earth would an organisation that is serious about recruitment tell people "Before applying for a fellowship, you’ll need to have attended at least one research workshop"? You're competing for the kind of people who can easily walk into a $500K+ job at any FAANG, why are you making them jump through hoops?

Eye Beams are cool:

Holy shit. That's not a job posting. That's instructions for joining a cult. Or a MLM scam.

Froolow:

For what it is worth, I agree completely with Melvin on this point - the job advert pattern matches to a scam job offer to me and certainly does not pattern match to any sort of job I would seriously consider taking. Apologies to be blunt, but you write "it's a mystery to me why it is", so I'm trying to offer an outside perspective that might be helpful.

It is not normal to have job candidates attend a workshop before applying for a job in prestigious roles, but it is very normal to have candidates attend a 'workshop' before pitching them an MLM or timeshare. It is even more concerning that details about these workshops are pretty thin on the ground. Do candidates pay to attend? If so this pattern matches advance-fee scams. Even if they don't pay to attend, do they pay their own airfare? If so MIRI have effectively managed to limit their hire pool to people who live within commuting distance of their offices or people who are going to work for them anyway and don't care about the cost.

Furthermore, there's absolutely no indication how I might go about attending one of these workshops - I spent about ten minutes trying to google details (which is ten minutes longer than I have to spend to find a complete list of all ML engineering roles at Google / Facebook), and the best I could find was a list of historic workshops (last one in 2018) and a button saying I should contact MIRI to get in touch if I wanted to attend one. Obviously I can't hold the pandemic against MIRI not holding in-person meetups (although does this mean they deliberately ceased recruitment during the pandemic?), and it looks like maybe there is a thing called an 'AI Risk for Computer Scientists' workshop which is maybe the same thing (?) but my best guess is that the next workshop - which is a prerequisite for me applying for the job - is an unknown date no more than six months into the future. So if I want to contribute to the program, I need to defer all job offers for my extremely in-demand skillset for the opportunity to apply following a workshop I am simply inferring the existence of.

The next suggested requirement indicates that you also need to attend 'several' meetups of the nearest MIRIx group to you. Notwithstanding that 'do unpaid work' is a huge red flag for potential job applicants, I wonder if MIRI have seriously thought about the logistics of this. I live in the UK where we are extremely fortunate to have two meetup groups, both of which are located in cities with major universities. If you don't live in one of those cities (or, heaven forbid, are French / German / Spanish / any of the myriad of other nationalities which don't have a meetup anything less than a flight away) then you're pretty much completely out of luck in terms of getting involved with MIRI. From what I can see, the nearest meetup to Terence Tao's offices at UCLA is six hours away by car. If your hiring strategy for highly intelligent mathematical researchers excludes Terence Tao by design, you have a bad hiring strategy.

The final point in the 'recommended path' is that you should publish interesting and novel points on the MIRI forums. Again, high quality jobs do not ask for unpaid work before the interview stage; novel insights are what you pay for when you hire someone.

Why shouldn't MIRI try doing the very obvious thing and retaining a specialist recruitment firm to headhunt talent for them, pay that talent a lot of money to come and work for them, and then see if the approach works? A retained executive search might cost perhaps $50,000 per hire at the upper end, perhaps call it $100,000 because you indicate there may be a problem with inappropriate CVs making it through anything less than a gold-plated search. This is a rounding error when you're talking about $2bn unmatched funding. I don't see why this approach is too ridiculous even to consider, and instead the best available solution is to have a really unprofessional hiring pipeline directly off the MIRI website.

Daniel Kirmani:

One solution here would be to ask people to generate a bunch of alignment research, then randomly sample a small subset of that research and subject it to costly review, then reward those people in proportion to the quality of the spot-checked research.

But that might not even be necessary. Intuitively, I expect that gathering really talented people and telling them to do stuff related to X isn't that bad of a mechanism for getting X done. The Manhattan Project springs to mind. Bell Labs spawned an enormous amount of technical progress by collecting the best people and letting them do research. I think the hard part is gathering the best people, not putting them to work.
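The spot-check scheme Daniel Kirmani describes above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `spot_check_payouts`, its parameters, and the rule of extrapolating the average reviewed quality to everything an author submitted are all hypothetical choices made here, not anything proposed in the thread.

```python
import random
from typing import Callable, Dict, List


def spot_check_payouts(
    submissions: Dict[str, List[str]],
    review: Callable[[str], float],
    checks_per_author: int,
    pay_per_quality_point: float,
    seed: int = 0,
) -> Dict[str, float]:
    """Pay each author based on a costly review of a small random sample.

    `review` stands in for the expensive step (an expert reading and
    fact-checking one item) and is assumed to return a quality score.
    """
    rng = random.Random(seed)
    payouts: Dict[str, float] = {}
    for author, items in submissions.items():
        if not items:
            payouts[author] = 0.0
            continue
        # Only this small random subset receives the costly review.
        checked = rng.sample(items, min(checks_per_author, len(items)))
        mean_quality = sum(review(item) for item in checked) / len(checked)
        # Assumption: extrapolate the sampled quality to the author's
        # whole output, so unreviewed work is paid at the sampled rate.
        payouts[author] = pay_per_quality_point * mean_quality * len(items)
    return payouts
```

Because authors cannot know in advance which items will be reviewed, padding the pile with low-quality work lowers their expected payout per item, while reviewer time scales with `checks_per_author` rather than with the total volume submitted.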

N. N.:

You probably know more about the details of what has or has not been tried than I do, but if this is the situation we really should be offering like $10 million cash prizes no questions asked for research that Eliezer or Paul or whoever says moves the ball on alignment. I guess some recently announced prizes are moving us in this direction, but the amount of money should be larger, I think. We have tons of money, right?

Xpym:

They (MIRI in particular) also have a thing about secrecy. Supposedly much of the potentially useful research not only shouldn't be public, even hinting that this direction might be fruitful is dangerous if the wrong people hear about it. It's obviously very easy to interpret this uncharitably in multiple ways, but they sure seem serious about it, for better or worse (or indifferent).

Dirichlet-to-Neumann:

Give Terence Tao $500,000 to work on AI alignment six months a year, leaving him free to research crazy Navier-Stokes/Halting problem links the rest of his time... If money really isn't a problem, this kind of thing should be easy to do.

Daniel Kokotajlo:

Literally that idea has been proposed multiple times before that I know of, and probably many more times many years ago before I was around.

David Piepgrass:

Wait, what? If I knew that I might've signed the f**k up! I don't have experience in AI, but still! Who's offering six figures?

[1] Clicking on links is a trivial inconvenience for some.