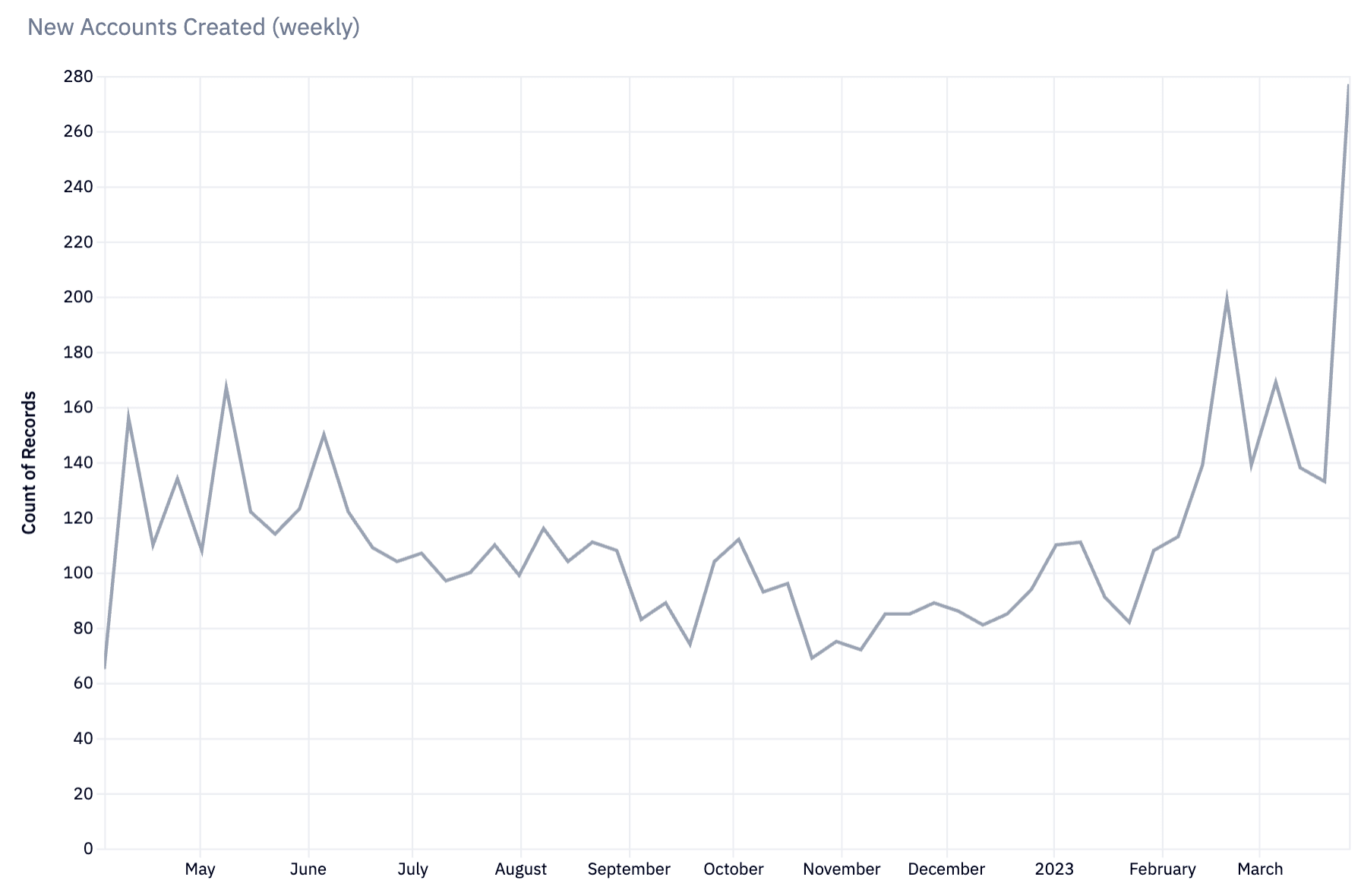

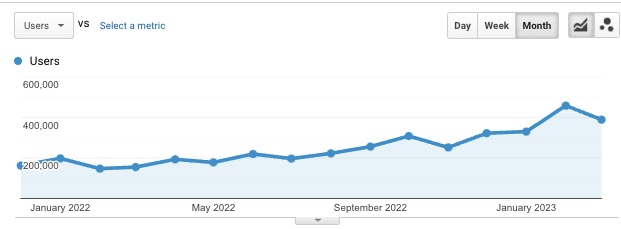

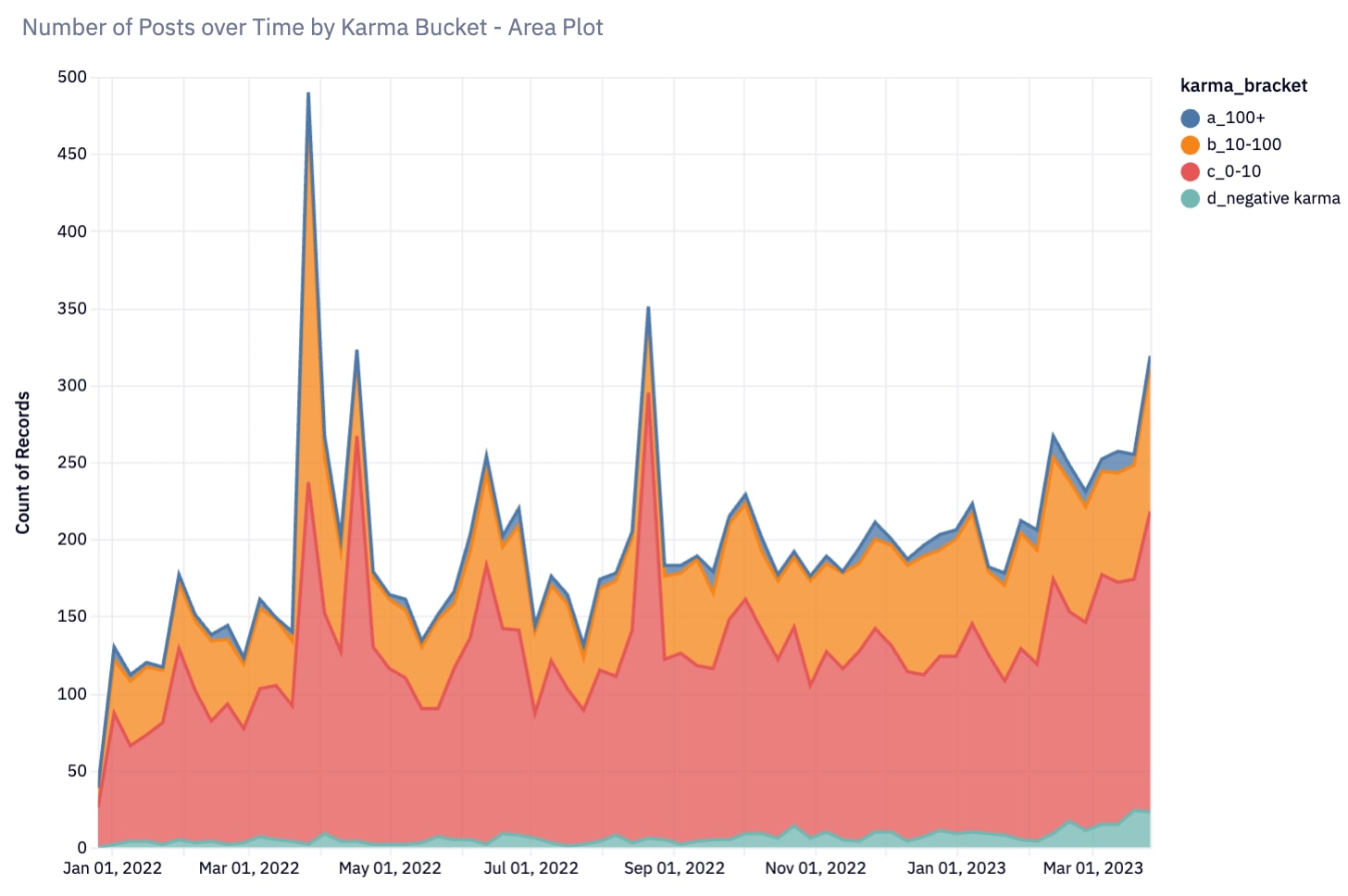

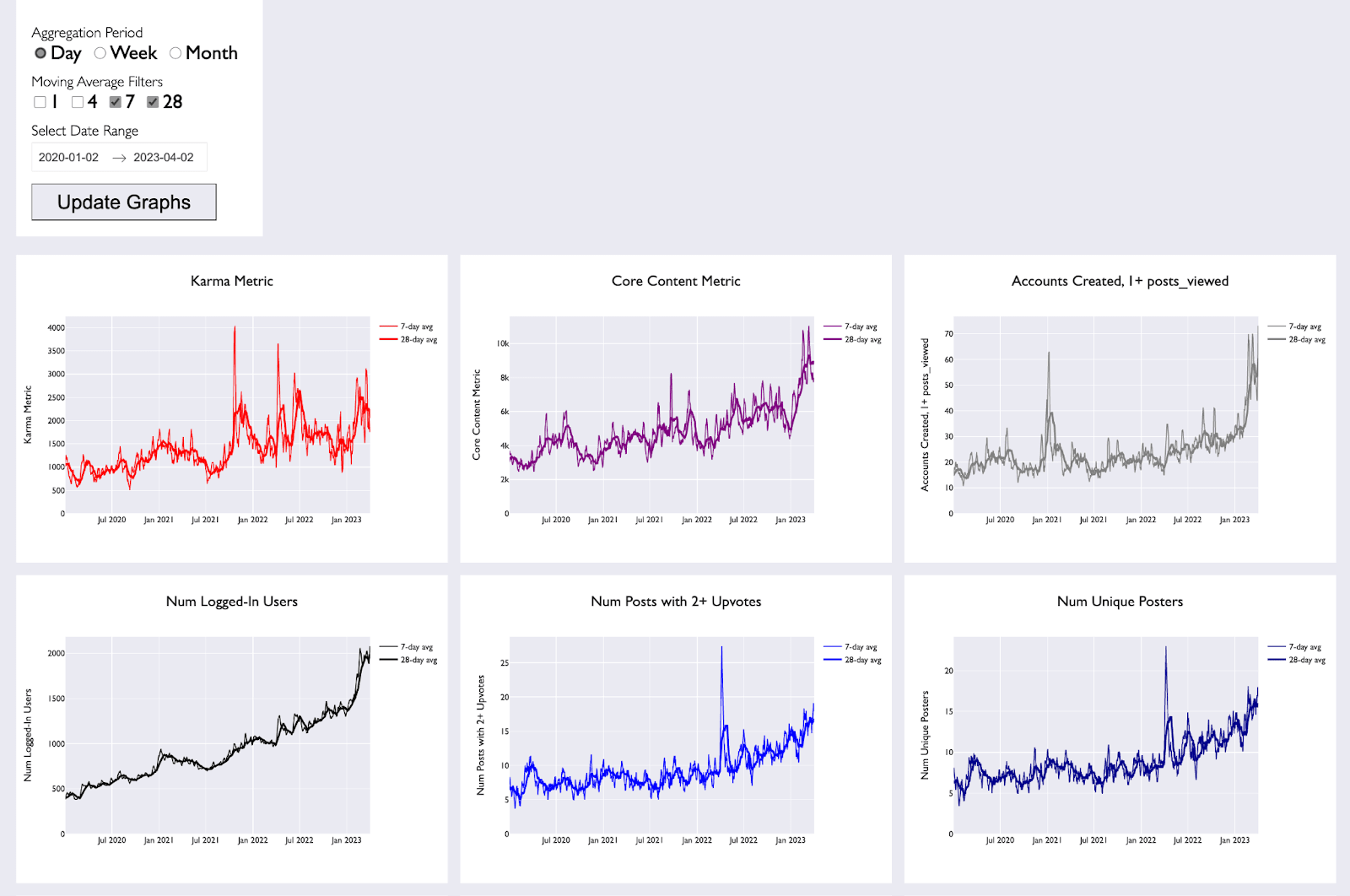

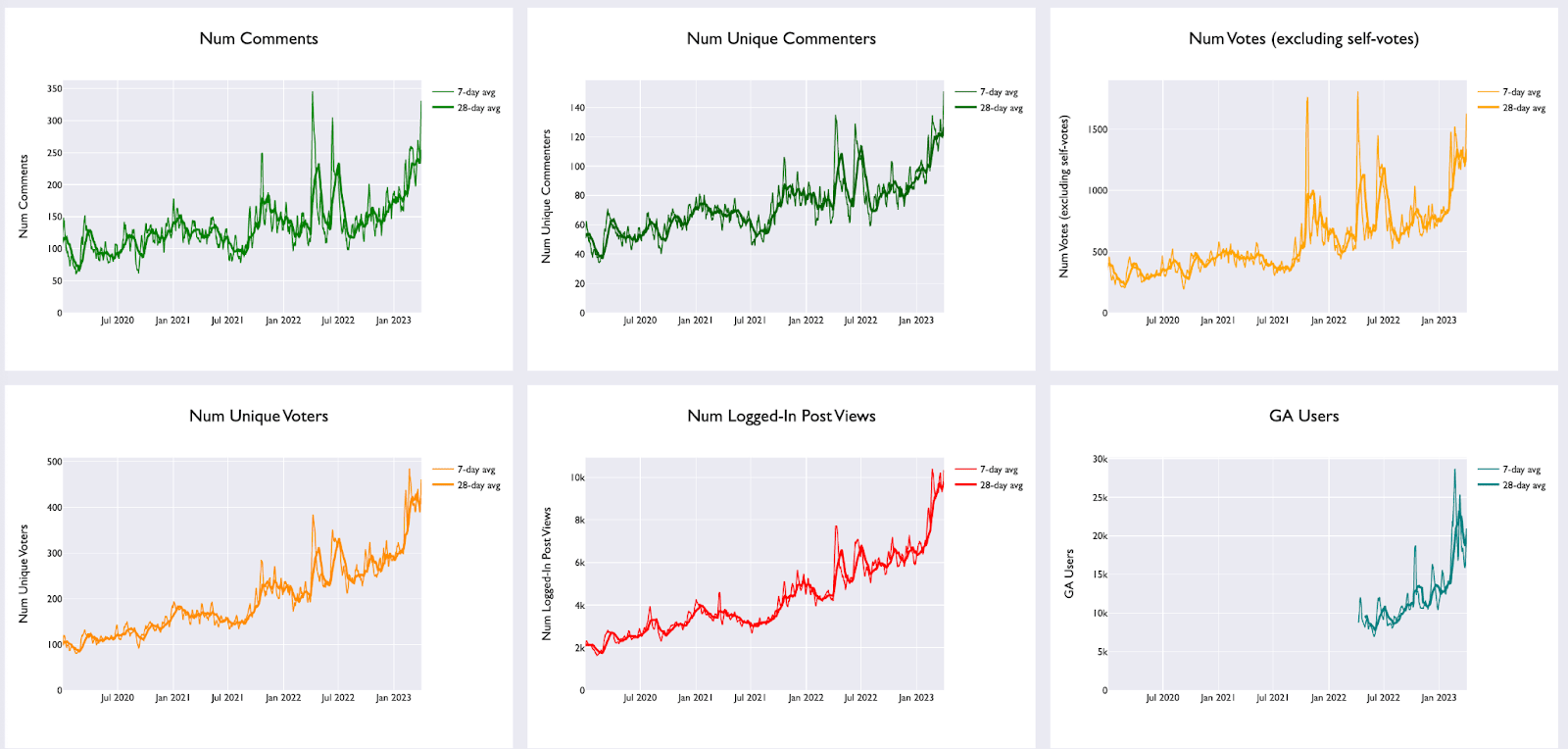

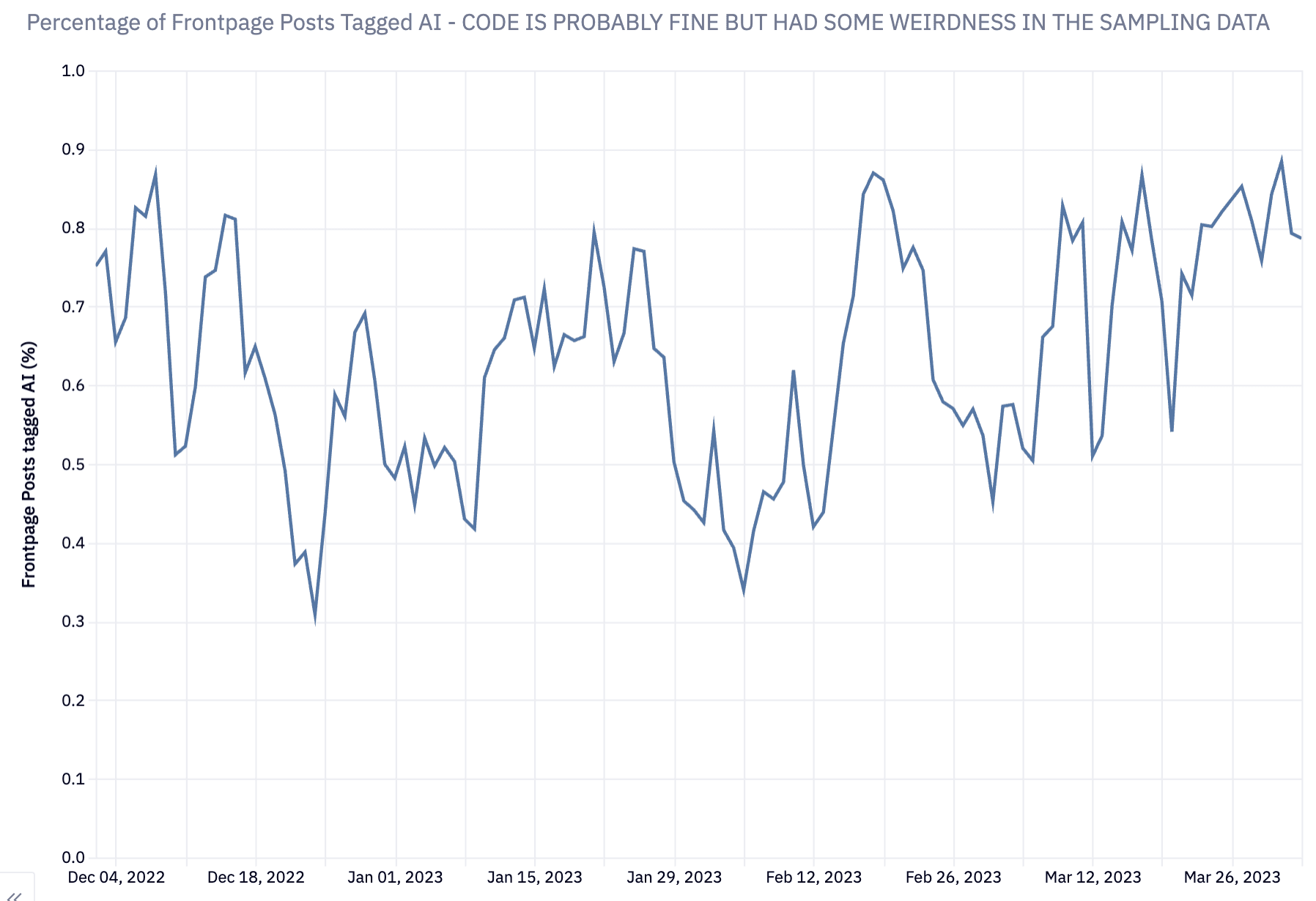

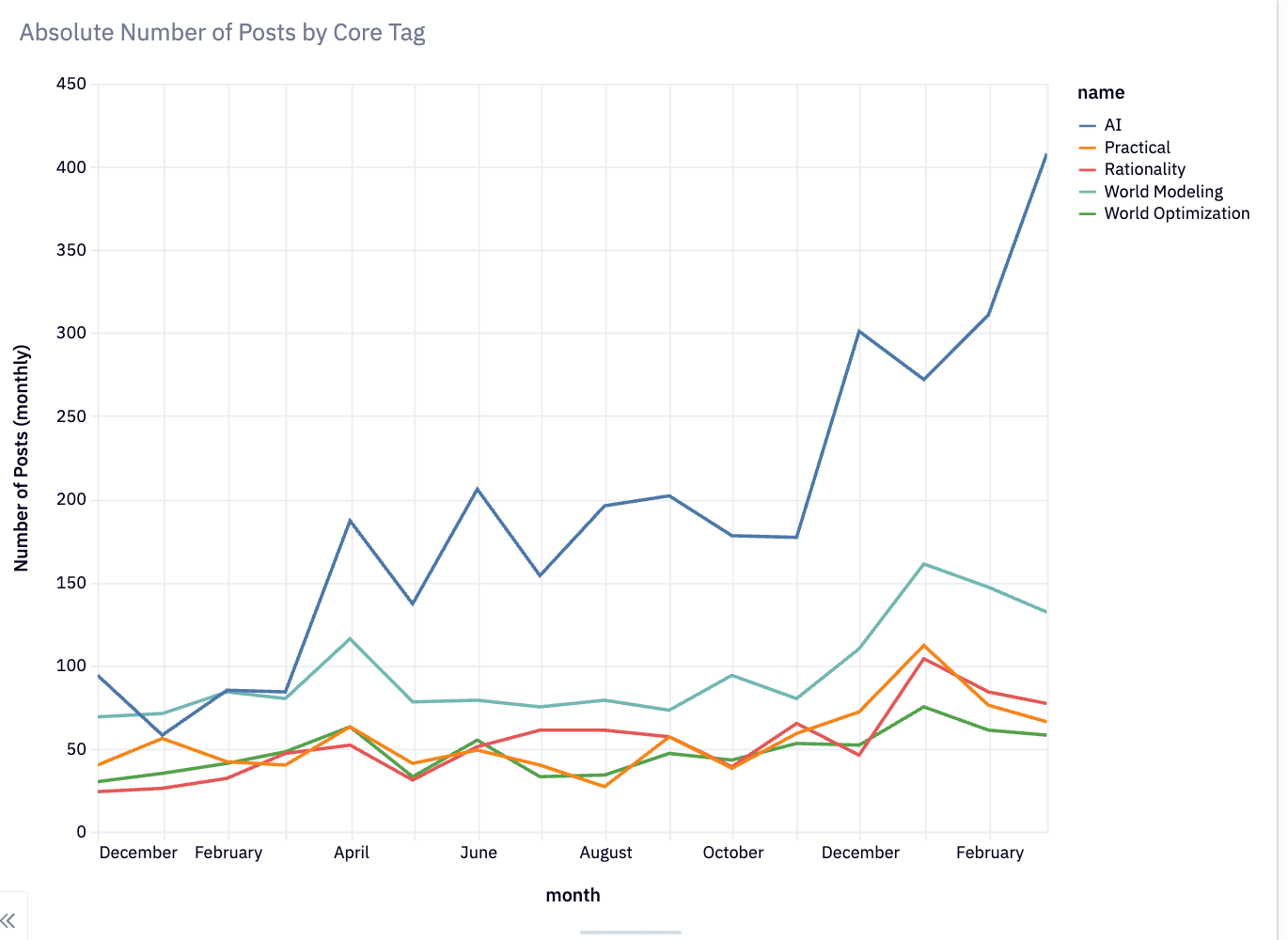

Lots of new users have been joining LessWrong recently, who seem more filtered for "interest in discussing AI" than for being bought into any particular standards for rationalist discourse. I think there's been a shift in this direction over the past few years, but it's gotten much more extreme in the past few months.

So the LessWrong team is thinking through "what standards make sense for 'how people are expected to contribute on LessWrong'?" We'll likely be tightening up moderation standards, and laying out a clearer set of principles so those tightened standards make sense and feel fair.

In the coming weeks we'll be thinking about those principles as we look over existing users, comments and posts and ask "are these contributions making LessWrong better?"

Hopefully within a week or two, we'll have a post that outlines our current thinking in more detail.

Generally, expect heavier moderation, especially for newer users.

Two particular changes that should be going live within the next day or so:

- Users will need at least N karma in order to vote, where N is probably somewhere between 1 and 10.

- Comments from new users won't display by default until they've been approved by a moderator.

Broader Context

LessWrong has always had a goal of being a well-kept garden. We have higher and more opinionated standards than most of the rest of the internet. In many cases we treat some issues as more "settled" than the rest of the internet, so that instead of endlessly rehashing the same questions we can move on to solving more difficult and interesting questions.

What this translates to in terms of moderation policy is a bit murky. We've been stepping up moderation over the past couple months and frequently run into issues like "it seems like this comment is missing some kind of 'LessWrong basics', but 'the basics' aren't well indexed and easy to reference." It's also not quite clear how to handle that from a moderation perspective.

I'm hoping to get "the basics" better indexed, but meanwhile it's just generally the case that if you participate on LessWrong, you are expected to have absorbed the set of principles in The Sequences (AKA Rationality A-Z).

In some cases you can get away without doing that while participating in local object level conversations, and pick up norms along the way. But if you're getting downvoted and you haven't read them, it's likely you're missing a lot of concepts or norms that are considered basic background reading on LessWrong. I recommend starting with the Sequences Highlights. I'd also note that you don't need to read the Sequences in order; you can pick some random posts that seem fun and jump around based on your interest.

(Note: it's of course pretty important to be able to question all your basic assumptions. But I think doing that in a productive way requires actually understanding why the current set of background assumptions is the way it is, and engaging with the object level reasoning.)

There's also a straightforward question of quality. LessWrong deals with complicated questions. It's a place for making serious progress on those questions. One model I have of LessWrong is something like a university – there's a role for undergrads who are learning lots of stuff but aren't yet expected to be contributing to the cutting edge. There are grad students and professors who conduct novel research. But all of this is predicated on there being some barrier-to-entry. Not everyone gets accepted to any given university. You need some combination of intelligence, conscientiousness, etc to get accepted in the first place.

See this post by habryka for some more models of moderation.

Ideas we're considering, and questions we're trying to answer:

- What quality threshold does content need to hit in order to show up on the site at all? When is the right solution to approve but downvote immediately?

- How do we deal with low quality criticism? There's something sketchy about rejecting criticism. There are obvious hazards of groupthink. But a lot of criticism isn't well thought out, or is rehashing ideas we've spent a ton of time discussing and doesn't feel very productive.

- What are the actual rationality concepts LWers are basically required to understand to participate in most discussions? (for example: "beliefs are probabilistic, not binary, and you should update them incrementally")

- What philosophical and/or empirical foundations can we take for granted and build on (e.g. reductionism, meta-ethics)?

- How much familiarity with the existing discussion of AI should you be expected to have to participate in comment threads about that?

- How does moderation of LessWrong intersect with moderating the Alignment Forum?

Again, hopefully in the near future we'll have a more thorough writeup about our answers to these. Meanwhile it seemed good to alert people that this would be happening.

Here's a quickly written draft for an FAQ we might send users whose content gets blocked from appearing on the site.

The “My post/comment was rejected” FAQ

Why was my submission rejected?

Common reasons that the LW Mod team will reject your post or comment:

Can I appeal or try again?

You are welcome to message us. However, note that due to volume, even though we read most messages, we will not necessarily respond, and if we do, we can’t engage in a lengthy back-and-forth.

In an ideal world, we’d have a lot more capacity to engage with each new contributor to discuss what was/wasn’t good about their content. Unfortunately, with new submissions increasing on short timescales, we can’t afford that and have to be pretty strict in order to ensure site quality stays high.

This is censorship, etc.

LessWrong, while on the public internet, is not the general public. It was built to host a certain kind of discussion between certain kinds of users who’ve agreed to certain basics of discourse, who are on board with a shared philosophy, and can assume certain background knowledge.

Sometimes we say that LessWrong is a little bit like a “university”, and one way in which that is true is that not just anybody is entitled to walk in and demand that people host the conversation they like.

We hope to keep allowing new accounts to be created and new users to submit new content, but the only way we can do that is if we reject content and users who would degrade the site’s standards.

But diversity of opinion is important, echo chamber, etc.

That’s a good point and a risk that we face when moderating. The LessWrong moderation team tries hard not to reject things just because we disagree, and instead only does so if it feels like the content is failing on some other criterion.

Notably, one of the best ways to disagree (and that will likely get you upvotes) is to criticize not just a commonly-held position on LessWrong, but the reasons why it is held. If you show that you understand why people believe what they do, they’re much more likely to be interested in your criticisms.

How is the mod team kept accountable?

If your post or comment was rejected from the main site, it will be viewable alongside some indication of why it was rejected. [we haven't built this yet but plan to soon]

Anyone who wants to audit our decision-making and moderation policies can review blocked content there.