I'd consider this a restatement of the standard Yudkowskian position. https://twitter.com/ESYudkowsky/status/1438198184954580994

Unfortunately, unless such a Yudkowskian statement was made publicly at some earlier date, Yudkowsky is in fact following in Repetto's footsteps. Repetto claimed that, with AI designing cures for obesity and the like, popular demand for access to those cures would beat down the doors of the FDA and force rapid change within the next 5 years... and Repetto said that on April 27th, while Yudkowsky only wrote his version on Twitter on September 15th.

Eli is correct that better technology is not a sufficient reason for predicting faster economic growth.

A key point from Could Advanced AI Drive Explosive Economic Growth?:

A wide range of growth models predict explosive growth if capital can substitute for labor.

Another way to look at that is that economic models suggest that population growth is an important factor driving GDP growth.

What is the relevant meaning of population here? If software can substitute for most labor, then software likely functions as part of the population.

The industrial revolution replaced a lot of human muscle power with machine power. AI shows signs of replacing a lot of routine human mental work with machine mental work.

This does not depend too much on AI becoming as generally intelligent as humans, only that AI achieves human-equivalent abilities at an important number of widely used tasks.

Self-driving cars seem to be on track to replace a nontrivial amount of human activity. I expect similar types of software servants to become widespread; cars are one of the first mainly because a single specialty serves a large market.

I use global computing capacity as a crude estimate of how machine mental work compares to human mental work. AI Impacts estimates that computing capacity has so far been small compared to human capacity, but that trends suggest computing capacity will become the dominant form of mental work sometime this century.

So the effective population growth (and therefore GDP growth) might be determined more by the rate at which computing capacity is built, rather than by the human birth rate.

Suppose that productivity growth remains mostly stagnant in all the big sectors that Eli mentions.

The semiconductor industry still has large room for growth. AI will dramatically increase demand for semiconductors, for uses such as better entertainment, personal assistants, therapists, yoga instructors, personal chefs, etc.

How much growth would the semiconductor industry need in order to single-handedly become the main driver of faster growth? 30% per year would get us there around 2040, 100% per year would get us there around 2030. Those scenarios seem plausible to me, but most investors seem to be treating them as too unusual to consider.
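A quick back-of-the-envelope check of those two dates (my own sketch, not part of the comment). It assumes a 2023 starting point, roughly when this was written, and backs out how many-fold the semiconductor industry would have to grow under each scenario. The baseline year and the helper names are assumptions for illustration only.

```python
import math

BASE_YEAR = 2023  # assumption: roughly when the comment was written


def implied_multiple(annual_growth: float, start_year: int, end_year: int) -> float:
    """How many-fold an industry grows if it compounds at `annual_growth` per year."""
    return (1.0 + annual_growth) ** (end_year - start_year)


def years_to_multiple(annual_growth: float, multiple: float) -> float:
    """Years of constant compound growth needed to grow `multiple`-fold."""
    return math.log(multiple) / math.log(1.0 + annual_growth)


if __name__ == "__main__":
    # The two scenarios from the comment: 30%/yr until ~2040, 100%/yr until ~2030.
    for rate, target_year in [(0.30, 2040), (1.00, 2030)]:
        factor = implied_multiple(rate, BASE_YEAR, target_year)
        print(f"{rate:.0%}/yr until {target_year}: about {factor:,.0f}x larger")
    # Inverse question: how long does a 100-fold expansion take at each rate?
    for rate in (0.30, 1.00):
        print(f"100x at {rate:.0%}/yr takes ~{years_to_multiple(rate, 100):.1f} years")
```

Both scenarios imply roughly a 100-fold expansion (about 87x at 30% per year, 128x at 100% per year), so the two dates are at least consistent with each other. Whether ~100x is what "main driver of faster growth" actually requires depends on the industry's current share of the economy, which the comment doesn't state.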

Energy use would need to increase in those scenarios. I disagree with Eli a bit there. Solar and battery productivity is improving nicely. The US probably cares enough about pushing those to overcome the roadblocks. If not, China will likely take over as the leader in economic growth.

> Energy use would need to increase in those scenarios. I disagree with Eli a bit there. Solar and battery productivity is improving nicely.

Is this driven by AI though? And are the bottlenecks to faster growth there limitations of digital technology?

A few points:

- I think it's important to distinguish between economic growth and value. A laptop that cost $500 in 1990 and a laptop that costs $500 in 2020 are nowhere near each other in value, but they are close in GDP terms.

- You are correct that lots of barriers to growth are social/legal rather than technological (see Burja), though in the long term, jobs that can be replaced with AI will be replaced with AI, as the social need for people in bullshit jobs decreases significantly once they leave.

- I don't think it is fair to infer from declining secular productivity trends that computers had a small impact on GDP, and especially on value. Innovation and scientific output have been slowing as well; it is difficult to name a major economic or scientific breakthrough that isn't computational in nature. Compare this to the 1900-1970 period: cars, lasers, refrigerators, planes, plastics, nuclear tech, and penicillin were all fairly impactful inventions.

edit: oh, and if we get to AGI, that will definitely be a productivity increase. The literature suggesting national IQs cause differences in economic growth is fairly solid; I have no reason to believe this shouldn't apply to AI with the exception of an AGIpocalypse.

I think #1 is the most important here. I'm not a professional economist, so someone please correct me if I'm wrong.

My understanding is that TFP is calculated based on nominal GDP, rather than real GDP, meaning the same products and services getting cheaper doesn't affect the growth statistic. Furthermore, although the formulation in the TFP paper has a term for "labor quality", in practice that's ignored because it's very difficult to calculate, making the actual calculation roughly (GDP / hours worked). All this means that it's pretty unsuitable as a measure of how well a technology like the Internet (or AI) improves productivity.

TFP (utilization adjusted even more so) is very useful for measuring impacts of policies, shifts in average working hours, etc. But the main thing it tells us about technology is "technology hasn't reduced average working hours". If you use real GDP instead, you'll see that exponential growth continues as expected.

You are wrong. Of course TFP is calculated based on real GDP, otherwise it would be meaningless.

There are real issues with how to measure real GDP, because the price index needs to be adjusted for quality. But that's different from calculating based on nominal GDP. As I understand it, "the same product getting cheaper" that you mentioned is nearly perfectly captured by current methods. What Jensen mentioned is different: it's "the same cost buying more", and that's more problematic.
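For anyone who wants to see where real GDP enters, here is a minimal sketch of textbook growth accounting (the Solow residual). It assumes a Cobb-Douglas production function Y = A * K^alpha * L^(1 - alpha) with an illustrative capital share alpha = 0.3, and every input number is made up. Statistical agencies use more elaborate index-number and labor-quality methods, so treat this as a toy, not the official procedure.

```python
import math


def real_gdp(nominal: float, price_index: float, base_index: float = 100.0) -> float:
    """Deflate nominal GDP into base-period prices using a price index."""
    return nominal * base_index / price_index


def log_growth(before: float, after: float) -> float:
    """Continuously compounded growth rate between two levels."""
    return math.log(after / before)


def tfp_growth(y0, y1, k0, k1, l0, l1, alpha=0.3):
    """Solow residual: output growth not explained by capital or labor growth.

    Assumes Y = A * K**alpha * L**(1 - alpha); alpha = 0.3 is an illustrative
    capital share, not an estimate.
    """
    return (log_growth(y0, y1)
            - alpha * log_growth(k0, k1)
            - (1 - alpha) * log_growth(l0, l1))


if __name__ == "__main__":
    # Hypothetical two-period toy economy (all numbers invented for illustration).
    nominal = (100.0, 110.0)
    prices = (100.0, 105.0)   # price index, base period = 100
    capital = (300.0, 309.0)
    hours = (50.0, 50.5)

    real = tuple(real_gdp(n, p) for n, p in zip(nominal, prices))
    with_real = tfp_growth(*real, *capital, *hours)
    with_nominal = tfp_growth(*nominal, *capital, *hours)
    print(f"TFP growth using real GDP:    {with_real:.2%}")
    print(f"TFP growth using nominal GDP: {with_nominal:.2%} (inflated by price growth)")
```

Feeding nominal GDP into the residual attributes pure price inflation to "productivity", which is why the calculation only makes sense on real (deflated) output.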

Could you explain the difference between "the same product getting cheaper" and "the same cost buying more"?

>"the same product getting cheaper"

Floss costing $5 in the year 2090 and then dropping to $3 in the year 2102.

>"the same cost buying more"

A laptop in 1980 running at piss/minute costing $600, while a laptop in 2020 running at silver/minute also costs $600.

change 1 is a productivity increase.

change 2 isn't.
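A minimal sketch of the distinction, assuming quality can be squashed into a single number (the quality scores below are hypothetical, not benchmarks). Dividing price by quality gives a price per unit of quality. In the floss case the sticker price itself falls, so any price index sees it; in the laptop case the sticker price is flat, and the fall in price per unit of quality only shows up in real GDP if the statistical agency explicitly adjusts for quality, which is the harder measurement problem mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    year: int
    price: float    # nominal price in dollars
    quality: float  # hypothetical single-number quality score; higher is better


def price_change(old: Observation, new: Observation) -> float:
    """Fractional change in the sticker price, ignoring quality."""
    return new.price / old.price - 1.0


def quality_adjusted_price_change(old: Observation, new: Observation) -> float:
    """Fractional change in price per unit of quality (a crude hedonic-style adjustment)."""
    return (new.price / new.quality) / (old.price / old.quality) - 1.0


if __name__ == "__main__":
    floss = (Observation(2090, 5.0, 1.0), Observation(2102, 3.0, 1.0))
    laptop = (Observation(1980, 600.0, 1.0), Observation(2020, 600.0, 1000.0))
    for name, (old, new) in [("floss", floss), ("laptop", laptop)]:
        print(f"{name}: sticker {price_change(old, new):+.1%}, "
              f"quality-adjusted {quality_adjusted_price_change(old, new):+.1%}")
```

Collapsing quality into one scalar is the big simplification here; real hedonic methods regress prices on many product characteristics.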

I'd toss software into the mix as well. How much does it cost to reproduce a program? How much does software increase productivity?

I dunno, I don't think the way the econ numbers are portrayed here jibes with reality. For instance:

"And yet, if I had only said, “there is no way that online video will meaningfully contribute to economic growth,” I would have been right."

doesn't strike me as a factual statement. In what world has streaming video not meaningfully contributed to economic growth? At a glance, it's a ~$100B industry. It's had a huge impact on society. I can't think of many laws or regulations that had any negative impact on its growth. Heck, we passed some tax breaks here to make it easier to film, since the entertainment industry was bringing so much loot into the state and we wanted more (and the breaks paid off).

I saw what digital did to the printing industry. What it's done to the drafting/architecture/modeling industry. What it's done to the music industry. Productivity has increased massively since the early 80s, by most metrics that matter (if the TFP doesn't reflect this, perhaps it's not a very good model?), although I guess "that matter" might be a "matter" of opinion. Heh.

Or maybe it's just messing with definitions? "Oh, we mean productivity in this other sense of the word!". And if we are using non-standard (or maybe I should say "specialized") meanings of "productivity", how does demand factor in? Does it even make sense to break it into quarters? Yadda yadda

Mainly it's just odd to have gotten super-productive as an individual[1], only to find out that this productivity is an illusion or something?

I must be missing the point.

Or maybe those gains in personal productivity have offset global productivity or something?

Or like, "AI" gets a lot of hype, so Microsoft lays off 10k workers to "focus" on it, which ironically does the opposite of what you'd think a new tech would do (add 10k vs. drop 10k), or some such?

It seems like we've been progressing relatively steadily, as long as I've been around to notice, but then again, I'm not the most observant cookie in the box. ¯\_(ツ)_/¯

[1] I can fix most things in my house on my own now, thanks to YouTube videos of people showing how to do it. I can make studio-quality music and video with my phone. Etc.

Details like the ones you point out here make me SUPER hesitant when I read people making claims that correlate GDP/economy-based metrics with anything else.

What are the original charts' base definitions, assumptions, and error bars? What are their data sources? Looking at someone's GDP charts, extrapolating, and finally concluding "tech has had no effect" feels naive and short-sighted, at least from a rational perspective; we should know that these charts tend not to convey as much meaning as we'd like.

Having a default base of being extremely skeptical of sweeping claims based on extrapolations on GDP metrics seems like a prudent default.

Sounds like cope to me.

It feels as if the data doesn't support your position and so you're making a retreat to scepticism.

I think it's more likely that the position of yours that isn't supported by the data is just wrong.

What are you supposed to conclude with data that doesn't accurately reflect what it is supposed to measure?

"sounds like cope"? At least come in good faith! Your comments contribute nothing but "I think you're wrong".

Several people have articulated problems with the proposed way of measuring (and/or even defining) the core terms being discussed.

(I like the "I might be wrong" nod, but it might be good to note as well how problematic the problem domain is. Econ in general is not what I'd call a "hard" science. But maybe that was supposed to be a given?).

Others have proposed better concrete examples, but here's a relative/abstract bit via a snippet from the Wikipedia page for Simulacra and Simulation:

Exchange value, in which the value of goods is based on money (literally denominated fiat currency) rather than usefulness, and moreover usefulness comes to be quantified and defined in monetary terms in order to assist exchange.

Doesn't add much, but it's something. Do you have anything of real value (heh) to add?

"I think this is wrong and demonstrating flawed reasoning" is actually a contribution to the discourse.

I gave a brief explanation over the rest of the comment on how it came across to me like cope.

Contributes about as much as a "me too!" comment.

"I think this is wrong and demonstrating flawed reasoning" would be more of a substantive repudiation if it came with some backing as to why you think the data is, in fact, representative of "true" productivity values.

This statement makes a lot more sense than the brief explanation behind your "sounds like cope" rejoinder:

> Having a default base of being extremely skeptical of sweeping claims based on extrapolations on GDP metrics seems like a prudent default.

You don't have to look far to see people, um, not exactly satisfied with how we're measuring productivity. To some extent, productivity might even be a philosophical question. Can you measure happiness? Do outcomes matter more than outputs? How does quality of life factor in? In sum, how do you measure stuff that is by its very nature, difficult to measure?

I love that we're trying to figure it out! Like, is network traffic included in these stats? Would that show anything interesting? How about amounts of information/content being produced/accumulated? (Tho again, quality is always an "interesting" one to measure.)

I dunno. It's fun to think about tho, *I think*. Perhaps literal data is accounted for in the data… but I'd think we'd be on an upward trend if so? Seems like we're making more and more year after year… At any rate, thanks for playing, regardless!

I'm going to be frank, and apologize for taking so long to reply, but this sounds like a classic case of naivete and overconfidence.

It's routinely demonstrated that stats can be made to say whatever we want and to support whatever conclusion their creator wants, and given techniques like p-hacking, it should eventually become evident that economics is not exempt from similar effects.

Add in the replication crisis, and you have a recipe for disaster. As such, the bar you need to clear is immense: "this graph about economics (a field known for attracting a large number of people who don't know the field to comment on it) means what I say it means and is an accurate representation of reality".

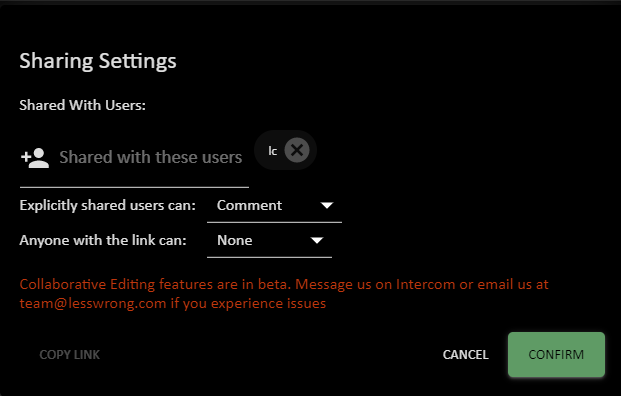

Beside the link-post field at the top of the draft, there's a profile icon and a "+" sign. Click it to add someone to a draft.

For you: https://www.lesswrong.com/posts/jZSzHLqJyNzqcGEEu/automated-computer-hacking-might-be-catastrophic

Only the first two and a half parts are finished (out of eight), but you can get the gist of it mostly from the intro.

There was a thread on personalized phishing scams that was going around earlier. Since AI can "reasonably" impersonate a person, you can automatically generate emails written in someone's style and respond to messages in a way that might fool the average person. It's a meaningful change to the threat model since attackers can self-host their own language models. More "regular cybersecurity" than "existential risk" though.

Hello,

Personally, I think there is a major problem with how productivity is measured.

Basically:

productivity = production/time

But here is the major flaw: how is production currently measured?

It is measured by how much money that production sells for!

So basically as it stands:

productivity = (money made)/time

Imho that way of measuring productivity is really dumb and gives a completely undervalued measurement of production.

To take a simple example, imagine you create (with thousands of other people) an OS like Linux that powers billions and billions of computing devices throughout the entire world (and even in space), and you give that OS away for free:

Your productivity for this Linux production is measured as zero (0) because you didn't make any money from the direct selling of it. The fact that your measured production and productivity for this is zero is completely absurd because you actually produced something extremely useful and transformative on a huge scale. There are many other examples like that of free or very cheap things which are measured as having a very low productivity not because they are useless but because they are (or have become) free / very cheap.

To take another example, let's say you have speculated on the markets and got lucky and made a huge amount of money very quickly: you haven't really produced anything but your productivity is measured as being huge!

So basically, imho, any argument based on how productivity is currently measured is completely flawed.

Please correct me if I am wrong so that I am less wrong thank you :)

Current tech/growth overhangs caused by regulation are not large enough to let countries with better regulations outcompete those with worse ones. It's not obvious to me that this won't change before AGI. If better-governed countries (say, Singapore) can become more geopolitically powerful than larger, worse-governed countries (say, Russia) by having better tech regulations, that puts pressure on countries worldwide to loosen those bottlenecks.

Plausibly this doesn't matter: the US and China are such heavyweights that they aren't at risk of being outcompeted by anyone, even if Singapore could outcompete Russia, and as long as that doesn't change the rules for US or Chinese governance, world GDP won't change by much.

A lot of these are legal and political hurdles, but I've heard that generative text models are really good at law. Could that change things?

I'd imagine that whatever forces require lawyers to go to law school would also make it so you can't just use a language model to do your lawyering for you. I.e., the primary bottleneck in law is not the time it takes or knowledge of the subject matter, but the ability of law associations to rent-seek.

More generally, language models seem like symmetric weapons in this regard. Our current society is structured so that, in its economically bottlenecked areas, those trying to prevent others from doing stuff have the greater advantage, so we shouldn't expect language models to change the game board too much. If they aren't made illegal for legal purposes, both sides' lawyers will be using them once one or two startups manage to break through the legal barriers with this strategy and get bought out by the established players.

If you want to be really pessimistic, you might expect those who have best mastered language models for legal purposes to be more destructive than creative with their powers. For example, it's far easier to patent troll now than it was previously. Many legal institutions, if optimized against, only really permit a decrease in progress rather than an increase.

This sounds right to me, and is a large part of why I'd be skeptical of explosive growth. But I don't think it's an argument against the most extreme stories of AI risk. AI could become increasingly intelligent inside a research lab, yet we could underestimate its ability because regulation stifles its impact, until it eventually escapes the lab and pursues misaligned goals with reckless abandon.

To be clear, my update from this was: "AI is less likely to become economically disruptive before it becomes existentially dangerous" not "AI is less likely to become existentially dangerous".

Eh, what do you think of Peter McCluskey's story of how compute and capital substituting for labor may drive explosive growth?



One counter-factor.

Technology has made certain kinds of tech startups possible. Maybe a Facebook or Instagram or Dropbox IPO doesn't show up in the numbers, but all three of those companies were able to leverage massively improved software tools to cheaply make a competitive product. Mere dozens of software developers could make a large, performant site, a file-sharing system built around Python that worked seamlessly (Dropbox), or a site and mobile app that immediately gathered many users (Instagram).

This came from technology making it much easier: before all the improvements in the software stack and hardware speed, these products were much harder to build. Go back enough years and they were impossible.

Yet other forms of startups - rocket startups, self driving startups, VTOL startups, biotech startups, chip startups, most hardware startups - fail almost every time. This is because it's REALLY HARD to do what they are doing, and they simply don't have either the funding or the coordination ability to solve a problem this difficult.

Part of it is probably coordination - even if they technically HAVE enough engineers to solve the problems, there is missing tooling and automated design verification and many other tools, especially for physical world products, so their prototype blows up and their second prototype blows up and they run out of money before reaching a prototype worthy of sale.

AGI could make engineering problems, even for new products, possible to solve almost immediately, reaching a salable, manufacturable, reliable product in a short time (through simulated testing of large numbers of design variants, real-world manufacturing and testing of thousands of prototype variations in parallel, automated real-world manufacturing of new products with no hand-assembly stage at all, automated design of mechanical and electronic parts, and so on).

I think this is just a false statement about current AI systems weighted by capital investment into them.

Generative models are all the rage, and they feel much more like an internet-style invention than a "microscope". Furthermore, it's not clear to me that the invention of the microscope was actually economically transformative.

It took a while, but it allowed building models of things that were previously unmodelable. I do think it's a good illustration of how economic transformations from a new technology usually take so long that you can't get a clear, immediate causal economic signal from its introduction.

If you look at the blog posts [2] coming out of Meta's Reality Labs, they place a heavy emphasis on "ultra-low-friction input and adaptive interfaces powered by contextualized AI". Consumer hardware [1] already has GPUs for graphics performance and Neural Engines for video analysis and image processing. With the WebGPU API [3] launching this year, high-performance general-purpose GPU compute will be available in the browser. Traditionally, it has been difficult to create high production quality interactive visualizations [4], but with the power of generative AI, the skill ceiling for becoming a creator drops a lot. 3D/VR/AI data analysis would indeed qualify as a mathematical microscope [7], allowing us to better understand measurements of complex phenomena. The development of transformative tools for thought [6] is one of the primary promises of current models, with future "next-action prediction" models enabling an "AI Workforce" (can't link to this one since the source is not public), and the largest platforms will shift from recommendation to generation [5].

[1] Apple unveils MacBook Pro featuring M2 Pro and M2 Max - Apple

[2] Inside Facebook Reality Labs: Wrist-based interaction for the next computing platform

[3] Origin Trials (chrome.com)

[5] Instagram, TikTok, and the Three Trends – Stratechery by Ben Thompson

[6] Introducing our first investments · OpenAI Startup Fund

[7]

Hmm. Interesting results; agreed on all points except the video. That video is great, but I think you've somewhat misunderstood if you interpreted wavelets as an example of AI; they're a tiny component. Very good video, and it does give an intuition for why AI could be microscope-like, but AI is incredibly much larger than that.

Abstract

Eli Dourado presents the case for scepticism that AI will be economically transformative near term[1].

For a summary and/or exploration of implications, skip to "My Take".

Introduction

Eli's Thesis

In particular, he advances the following sectors as areas AI will fail to revolutionise:

Eli thinks AI will be very transformative for content generation[2], but that transformation may not be particularly felt in people's lives. Its economic impact will be even smaller (emphasis mine):

A personal anecdote of his that I found pertinent enough to include in full:

My Take

The issue seems to be that the biggest bottlenecks to major productivity gains in some of the largest economic sectors are not technological in nature but regulatory/legal/social. As such, better technology is not enough in and of itself[3] to remove them.

This rings true to me, and I've been convinced by similar arguments from Matt Clancy to be sceptical of productivity gains from automated innovation[4].

Another way to frame Eli's thesis is that for AI to quickly transform the economy/materialise considerable productivity gains, we must not have significant bottlenecks to growth/productivity from suboptimal economic/social policy. That is, we must live in a very adequate world[5]; insomuch as you believe that inadequate equilibria abound in our civilisation, then a priori you should not expect AI to precipitate massive economic transformation near term.

Reading Eli's piece/writing this review persuaded me to be more sceptical of Paul-style continuous takeoff[6] and more open to discontinuous takeoff; AI may simply not transform the economy much until it's capable of taking over the world[7].

[1] By "near term", I mean roughly something like: "systems that are not powerful enough to be existentially dangerous". Obviously, existentially dangerous systems could be economically disruptive.

[2] He estimates that he may currently have access to a million to ten million times more content than he had in his childhood (which was life changing), but is sceptical that AI generating another million-fold increase in the quantity of content would change his life much (his marginal content-related decisions are about filtering/curating his content stream).

[3] Without accompanying policy changes.

A counterargument to Eli's thesis that I would find persuasive is if people could compellingly make the case that technological innovation precipitates the social/political/legal innovation needed to better accommodate said technology.

However, the lacklustre productivity gains of the internet and smartphone age do call that counterthesis into question.

[4] As long as we don't completely automate the economy/innovation pipeline [from idea through to large scale commercial production and distribution to end users], the components on the critical path of innovation that contain humans will become the bottlenecks. This will prevent the positive feedback loops from automated innovation from inducing explosive growth.

[5] I.e., it should not be possible to materialise considerable economic gains from better (more optimal) administration/regulation/policy at the same general level of technological capabilities.

In game theoretic terms, our civilisational price of anarchy must be low.

However, the literature suggesting that open borders could potentially double gross world product suggests that we're paying a considerable price. Immigration is just one lever through which better administration/regulation could materialise economic gains. Aggregated across all policy levers, I would expect that we are living in a very suboptimal world.

[6] A view I was previously sold on as being the modal trajectory class for the "mainline" [future timeline(s) that has(have) a plurality(majority) of my probability mass].

[7] I probably owe Yudkowsky an apology here for my too strong criticisms of his statements in favour of this position.