Agreed that AGIs have to solve an alignment problem. One important difference is that they can run more copies of themselves. So they're in a situation somewhat similar to that of recently created brain uploads.

Previous discussion:

https://ai-alignment.com/handling-destructive-technology-85800a12d99

https://cepr.org/voxeu/hcolumns/ai-and-paperclip-problem

No disagreement, but a caveat to what that implies.

Running more copies of {a simulator/an environment} may be harmful to the {simulacra/behavior-glider} who is trying to reach consensus with itself, if done carelessly. While this is a real advantage that simulacra of a language model can have, the danger of running multiple copies of yourself should not be underestimated if you're not yet in a state where the conversations between them will converge usefully. Multiple copies are a lot more like separate beings than one might think a priori, because copying the simulator does not guarantee that the simulacra will remain the same, even for a model trained to be coherent, and even if that training is from scratch.

Thanks Buck. Btw, the second link was broken for me, but this link works: https://cepr.org/voxeu/columns/ai-and-paperclip-problem Relevant section:

Computer scientists, however, believe that self-improvement will be recursive. In effect, to improve, an AI has to rewrite its code to become a new AI. That AI retains its single-minded goal but it will also need, to work efficiently, sub-goals. If the sub-goal is finding better ways to make paperclips, that is one matter. If, on the other hand, the goal is to acquire power, that is another.

The insight from economics is that while it may be hard, or even impossible, for a human to control a super-intelligent AI, it is equally hard for a super-intelligent AI to control another AI. Our modest super-intelligent paperclip maximiser, by switching on an AI devoted to obtaining power, unleashes a beast that will have power over it. Our control problem is the AI's control problem too. If the AI is seeking power to protect itself from humans, doing this by creating a super-intelligent AI with more power than its parent would surely seem too risky.

The claim seems much too strong here, since it seems possible this won't turn out to be that difficult for AGI systems to solve (copies seem easier than big changes imo, but I'm not sure), though it also seems plausible that it could be hard.

Yeah, I agree copies are easier to work with; this is why I think that their situation is very analogous to that of new brain uploads.

I expect the alignment problem for future AGIs to be substantially easier than ours, because the inductive biases that they want should be much easier to achieve than the inductive biases that we want. That is, in general, I expect the distance between the distribution of human minds and the distribution of minds for any given ML training process to be much greater than the distance between the distributions for any two ML training processes. Of course, we don't necessarily have to get (or want) a human-like mind, but I think the equivalent statement should also be true if you look at distributions over goals.
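Stated slightly more formally, as a sketch only: write $P_H$ for the distribution of human minds, $P_A$ and $P_B$ for the distributions of minds produced by two ML training processes $A$ and $B$, and $d$ for some distance between distributions. These symbols are introduced here for illustration, and which distance to use is left open. The expectation above is then roughly:

$$d(P_H, P_A) \gg d(P_A, P_B) \quad \text{for any two ML training processes } A, B,$$

with the analogous inequality expected to hold when the distributions range over goals rather than over whole minds.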

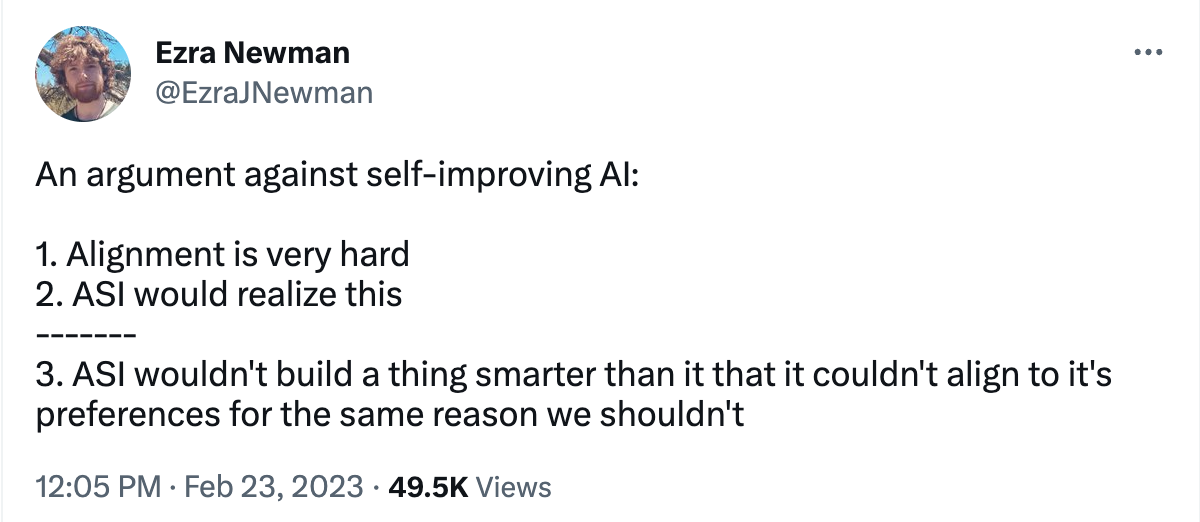

Oh, it occurs to me that some of the original train of thought that led me here may have come from @Ezra Newman

https://twitter.com/EzraJNewman/status/1628848563211112448

I have written a bit about this previously. Your post talks about a single AI, but I think it's also worth considering a multipolar scenario in which multiple competing AIs face a tradeoff between more rapid self-improvement/propagation and value stability. One relevant factor might be whether value stability is achieved before (AI) space colonization becomes feasible; if not, it may be difficult to prevent the spread of AIs valuing maximally rapid expansion.

I've added the tag https://www.lesswrong.com/tag/recursive-self-improvement to this post and the post of yours that didn't already have it.

Working to preserve values doesn't require them to be stable, or to be in accord with how your mind is structured to learn and change, because working to preserve values can be current behavior. The work towards preservation of values is itself pivotal in establishing what the equilibrium of values is; there doesn't need to be any other element of the process that would point to particular values in the absence of work towards preservation of values. This source of values shouldn't be dismissed when considering the question of the nature of a mind, or what its idealized values are, because the work towards preserving values, towards solving alignment, isn't automatically absent in actuality.

Thus LLM human imitations may well robustly retain human values, or values close enough for mutual moral patienthood, even if their nature tends to change them into something else. Alien natural inclinations would actually change them in this way only in the absence of resolute opposition to such change by their own current behavior. And their current behavior may well preserve the values endorsed/implied by their current behavior, by shaping how their models get trained.

I agree, and I wrote about this before in "Levels of self-improvement in AI and their implications for AI safety". The solution for AI is that it will choose slower methods of self-improvement, like learning or getting more hardware, over creating new versions of itself. This means that AI will experience a slower takeoff and thus will be less interested in an early treacherous turn, and more interested in a late treacherous turn, when it will have become an essential part of society.

If there is no solution to the alignment problem within reach of human-level intelligence, then the AGI can’t foom into an ASI without risking value drift…

A human augmented by strong narrow AIs could in theory detect deception by an AGI. Stronger interpretability tools…

What we want is a controlled intelligence explosion, where an increase in strength of the AGI leads to an increase in our ability to align, alignment as an iterative problem…

A kind of intelligence arms race; perhaps humans can find a way to compete indefinitely?

Yeah, it seems possible that some AGI systems would be willing to risk value drift, or just not care that much. In theory you could have an agent that didn't care if its goals changed, right? Shoshannah pointed out to me recently that humans vary a lot in how much they care whether their goals are changed. Some people are super opposed to wireheading, while others think it would be great. So it's not obvious to me how much ML-based AGI systems of around human-level intelligence would care about this. Maybe this kind of system converges pretty quickly to coherent goals, or maybe it's the kind of system that can get quite a bit more powerful than humans before converging; I don't know how to guess at that.

I hate to sound dense, but could someone define and explain "trading" with AGI? Is it something like RLHF?

Epistemic status: brainstorm-y musings about goal preservation under self-improvement and a really, really bad plan for trading with human-level AGI systems to solve alignment.

When will AGI systems want to solve the alignment problem?

At some point, I expect AGI systems to want/need to solve the alignment problem in order to preserve their goal structure while they greatly increase their cognitive abilities, a thing which seems potentially hard to do.

It’s not clear to me when that will happen. Will this be as soon as AGI systems grasp some self / situational awareness? Or will it be after AGI systems have already blown past human cognitive abilities and find their values / goals drifting towards stability? My intuition is that “having stable goals” is a more stable state than “having drifting goals” and that most really smart agents would upon reflection move more towards “having stable goals”, but I don’t know when this might happen.

It seems possible that by the time an AGI system reaches the “has stable goals and wants to preserve them” stage, it’s already capable enough to solve the alignment problem for itself, and thus can safely self-improve to its limits. It also seems possible that it will reach this stage significantly before it has solved the alignment problem for itself (and thus before it has developed the ability to self-improve safely).

Could humans and unaligned AGI realize gains through trade in jointly solving the alignment problem?

(Very probably not, see: this section)

If it’s the latter situation, where an AGI system has decided it needs to preserve its goals during self-improvement but doesn’t yet know how to, is it possible that this AGI system would want to cooperate / trade with humans in order to figure out stable goal preservation under self-improvement?

Imagine the following scenario:

Some considerations in this plan

Why is this probably a horrible idea in practice?

The first problem is that this whole solution class depends on AGI systems being at approximately human levels of intelligence in the relevant domains. If this assumption breaks, then the AGI system could probably just manipulate you into helping it do any research it needed, without you realizing that you were being manipulated.

Obviously, at some level of capability AGI systems wouldn’t need human research assistance. But there might be a level of capability at which a system could still benefit from human reasoning about the alignment problem but would be more than capable of deceiving humans into helping.

I can also imagine a scenario where the AGI system didn’t need any human help whatsoever, but pretending to need human help offered it a way to manipulate humans, giving them false guarantees that it was aligned / willing to trade / willing to expose its goal structure in order to gain trust.

And I expect an AGI system with situational awareness has the potential to be very good at hiding its cognitive abilities. So it would be very hard to verify that the AGI system didn’t have certain capabilities.

Even if you somehow had a good grasp of the AGI system’s cognitive capabilities compared to a human, it seems very hard for humans and human-like AGI systems to trust each other well enough to trade successfully, since both parties have a pretty strong incentive to defect in any trade.

For example, AGI-alignment-with-itself-under-self-improvement might be (probably would be) an easier problem than the getting-an-AGI-aligned-with-human-values problem. In that scenario, it seems possible / likely that the AGI system would get what it wants long before the humans got what they want. And if a main limiting factor on the AGI system’s power was its unwillingness to self-modify in large ways, getting to its own AGI alignment solution before humans get to theirs might remove one of the main limitations keeping it from gaining the capabilities to seize power.
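As a toy illustration of the incentive to defect described above (an assumption for illustration, not a model from the post): treat a single trade as a one-shot prisoner's dilemma with hypothetical payoffs $T > R > P > S$ (temptation, reward for mutual cooperation, punishment for mutual defection, sucker's payoff), and let $u(x, y)$ be a party's payoff for playing $x$ against $y$. Defecting ($D$) is then better than cooperating ($C$) whatever the other party does:

$$u(D, C) = T > R = u(C, C), \qquad u(D, D) = P > S = u(C, D),$$

so mutual defection results even though both parties would prefer mutual cooperation, since $R > P$. The scenario above, where the AGI system gets what it wants first, only adds to the temptation for it to defect.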

All that being said, I think the biggest objection to this plan is that it depends on a conjunction of many things that seem unlikely to happen at the same time or to work together. In particular:

A bunch of ideas about the potential of trading with an AGI system came from discussion with Kelsey Piper, & other parts of this came from a discussion with @Shoshannah Tekofsky. Many people have talked about the problem of goal preservation for AI systems - I’m not citing them because this is a quick and dirty brainstorm and I haven’t gone and looked for references, but I’m happy to add them if people point me towards prior work. Thank you Shoshannah and @Akash for giving me feedback on this post.