I think this framing doesn't work, programs almost never control each other. Instead they can coordinate with each other by agreeing to follow decisions of a third program, which is identical between them, a "contract". Initially, the contract isn't yet "signed", so seeing each other's code sets up the conditions for defining a shared contract (deciding to follow what it'll say once computed).

There could be many contracts simultaneously, each weakly nudging decisions of multiple agents coordinated through them. Social norms are contracts in this sense. I think some computations of circuits of deep learning models are contracts with the environment, these computations (numerous and small) decide both what the environment will do (the way arithmetic decides what a physical calculator will do), and what the model will predict, and so the two become coordinated, even in situations never seen in the training dataset.

I think that if you want to compute logical correlations between programs, you need to look at their, well, logic. E.g., if you have some way of extracting a natural abstraction-based representation of their logic, build something like a causal graph from it, and then design a similarity metric for comparing these representations.

I have a suspicion, though, that this is not the right approach for handling ECL because ECL (I think?) involves the agent(s) looking at (some abstraction over) their "source code(s)" and then making a decision based on that. I expect that this ~reflection needs to be modeled explicitly.

Admittedly most of this post goes over my head. But could you explain why you want logical correlation to be a metric? Statistical correlation measures (where the original "correlation" intuition probably comes from) are usually positive, negative, or neutral.

In a simple case, neutrality between two events A and B can indicate that the two values are statistically independent. And perfect positive correlation either means that both values always co-occur, i.e. P(A iff B)=1, or that at least one event implies the other. For perfect negative correlation that would be either P(A iff B)=0, or alternatively at least one event implying the negation of the other. These would not form a metric. Though they tend to satisfy properties like cor(A, B)=cor(B, A), cor(A, not B)=cor(not A, B), cor(A, B)=cor(not A, not B), cor(A, B)=-cor(A, not B), cor(A, A)=maximum, cor(A, not A)=minimum.

Though it's possible that (some of) these assumptions wouldn't have a correspondence for "logical correlation".

Another naive thing to do is ask about the length of the program required to get from one program to another, in various ways.

Given an oracle for p1, what's the complexity of the output of p2?

What if you had an oracle for all the intermediate states of p1?

What if instead of measuring the complexity, you measured the runtime?

What if instead of asking for the complexity of the output of p2, you asked for the complexity of all the intermediate states?

All of these are interesting but bad at being metrics. I mean, I guess you could symmetrize them. But I feel like there's a deeper problem, which is that they by default ignore computational process, and have to have it tacked as extra.

In which to compare how similarly programs compute their outputs, naïvely and less naïvely.

Logical Correlation

Attention conservation notice: Premature formalization, ab-hoc mathematical definition.

Motivation, Briefly

In the twin prisoners dilemma, I cooperate with my twin because we're implementing the same algorithm. If we modify the twin slightly, for example to have a slightly longer right index-finger-nail, I would still cooperate, even though we're different algorithms, since little enough has been changed about our algorithms that the internal states and the output are basically the same.

It could be that I'm in a prisoner's dilemma with some program p⋆ that, given some inputs, returns the same outputs as I do, but for completely different "reasons"—that is, the internal states are very different, and a slight change in input would cause the output to be radically different. Intuitively, my similarity to p⋆ is pretty small, because even though it gives the same output, it gives that output for very different reasons, so I don't have much control over its outputs by controlling my own computations.

Let's call this similarity of two algorithms the logical correlation between the two algorithms (alternative terms "include “logical influence,” “logical correlation,” “correlation,” “quasi-causation,” “metacausation,” […] “entanglement”[,] “acausal influence”"). I take this term from Demski & Garrabrant 2020:

—Abram Demski & Scott Garrabrant, “Embedded Agency” p. 12, 2020

Similarly:

—Caspar Oesterheld, “Multiverse-wide Cooperation via Correlated Decision Making” p. 18, 2018

There isn't yet an established way of estimating the logical correlation between different decision algorithms.

A Naïve Formula

Thus: Consider the some naïve formula (which we'll designate by 合[1]) for logical correlation[2]: Something that takes in two programs and returns a number that quantifies how similarly the two programs compute what they compute.

Setup

Let a program p be a tuple of code for a Turing machine and intermediate tape states after each command execution. We'll treat the final tape state as the output, all in binary.

That is p=(c,t), with c∈{0,1}+ and t∈({0,1}+)+. Let l=|t| be the number of steps that p takes to halt.

For simplicity's sake, let's give t[l] (the tape state upon halting) the name o, the output.

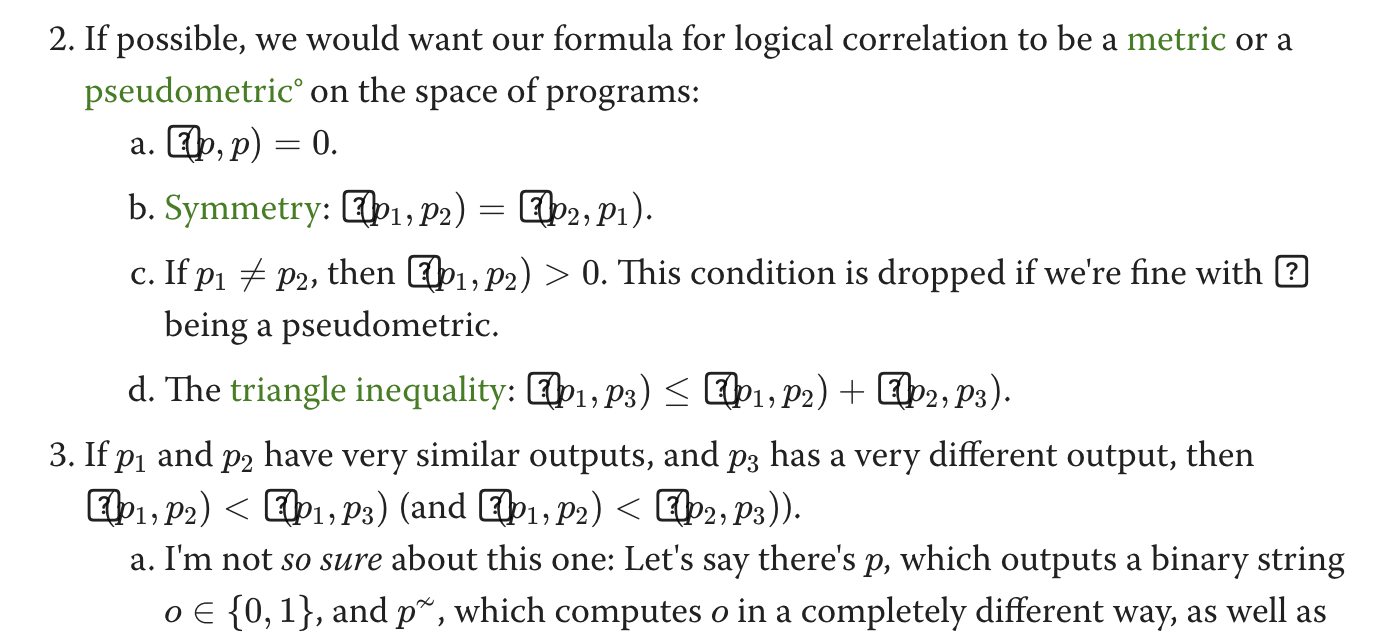

Possible Desiderata

Formal Definition

Let p1=(c1,t1),p2=(c2,t2) be two halting programs, l1,l2 are the number of steps it takes p1,p2 to halt, and o1=tl1,o2=tl2 the last tape states (outputs) of the two programs.

Then a formula for the logical correlation 合 of p1,p2, a tape-state discount factor γ[3], and a string-distance metric d:{0,1}+×{0,1}+→N could be

合(p1,p2,γ)=d(o1,o2)+1−exp(−min(l1,l2)∑k=1γk⋅d(t1[l1−k],t2[l2−k]))

The lower 合, the higher the logical correlation between p1 and p2.

Explanation

Let's take a look at the equation again, but this time with some color highlighting:

合(p1,p2,γ)=d(o1,o2)+1−exp(−min(l1,l2)∑k=1γk⋅d(t1[l1−k],t2[l2−k]))

The fundamental idea is that we first (red) compute the distance of the two outputs. We then go backward through the trace of the two programs, (green) adding up the pairwise (blue) differences of the traces at each timestep, potentially (purple) discounting the differences the farther they lie in in the "past" of the output/further towards the start of the computation.

Finally, we (orange) subtract the inverse of this (discounted) sum of trace differences from the output difference[4].

The value of the exponential function here can maximally be 1 (since the smallest value of the sum is zero) and will always be greater than zero. Thus, since we're subtracting a number ≤1 from d(o1,o2)+1, the resulting logical correlation must be d(o1,o2)≤合(p1,p2,γ)≤d(o1,o2)+1−ε. That implies that for three programs with the same output, their logical correlations all lie in that range. That also means that if d(o1,o2)<d(o1,o3), then it's the case that 合(p1,p2,γ)<合(p1,p3,γ).

Or, in even simpler terms; "Output similarity dominates trace similarity."

Different Trace Lengths

One might also want to be able to deal with the fact that programs have different trace lengths, and penalize that, e.g. amending the formula:

合′(p1,p2,γ)=合(p1,p2,γ)+2|l1−l2|

Desiderata Fulfilled?

Does this fulfill our desiderata from earlier? I'll assume that the string distance d is a metric, in the mathematical sense.

Proving 合(p,p)=0

Proof:

d(o,o)+1−exp(−min(l,l)∑k=1γk⋅d(t(l−k),t(l−k)))=0+1−exp(−l∑k=1yk⋅0)=1−exp(0)=0

Since d is a metric, d(o,o)=0.

Proving Symmetry

Symmetry is trivially true if we assume that d is symmetric.

Proving Positivity

The minimal logical correlation is 0.

合(p1,p2,γ)≥0⇔d(o1,o2)+1−exp(−min(l1,l2)∑k=1γk⋅d(t1[l1−k],t2[l2−k]))≥0⇔d(o1,o2)+1≥exp(−min(l1,l2)∑k=1γk⋅d(t1[l1−k],t2[l2−k]))⇔ln(d(o1,o2)+1)+min(l1,l2)∑k=1γk⋅d(t1[l1−k],t2[l2−k])≥0

This is true, because:

Thus we have a sum of products of only positive things, which is in turn positive itself.

Only A Pseudometric

But, unfortunately, it isn't the case that if p1≠p2, then 合(p1,p2,γ)>0. Thus 合 is only a pseudometric.

Consider, for example, two programs that both write a 1 to the starting position on the tape and then halt, but with the difference that p1 moves left and then right in the first two steps, and p2 moves right and then left in the first two steps. Both programs have the same tape-state trace, but are not "equal" in the strict sense as they have different source codes.

You might now complain that this is vacuous, since the two programs have no relevant functional difference. That's true, but I suspect there's some trickier edge cases here where randomly initialized tapes can have very different (or in other cases equal) tape-state traces. If you find an equivalence class of programs that are just vacuously different, I'd be interested in hearing about it.

A Less Naïve Formula

I think that the naïve formula is too naïve. Reasons:

I think one can create a better (though not perfect) way of determining logical correlations based on (something like) Shapley Values and possible tape-permutations.

Explanation

We'll inherit the basic setup from the naïve formula, but now we won't determine the logical correlation of the whole outputs o1,o2. Instead we pick one bit from each output, say b1=o1[k],b2=o2[k] for some k∈N.

This formula is based on the assumption that Shapley values of tape cells over time are a kind of fingerprint of the program as it runs, and as such can be compared with some distance function akin to d in the naïve formula.

Shapley Values for Tape States

We treat each tape state ti of a Turing machine as a set of players, which can play either 0 or 1 (the two states each cell on the tape can assume).

Then we compute the Shapley value for each tape state on the bit produced down the line by the Turing machine. To recap, the Shapley value assumes that there's a set ti(j) (with j∈N) of players, and a function v:2ti(j)→{0,1} for all subsets of players—in this case the execution of the program from ti until it halts. It's assumed that v(∅)=0.

People sometimes like to claim that the Shapley value is some kind of Platonic ideal of measuring contribution. I don't know about that, but it has some nice properties that uniquely identify it.

The Shapley value for a player j is then computed with the following equation:

ϕj(v)=∑S⊆N∖{j}|S|!(n−|S|−1)!n!(v(S∪{j})−v(S)))

Two conceptual difficulties present themselves:

1. can be solved by setting the null action to the tapestate produced by the program preceding the tapestate. I imagine this as a tapestate being able to "decide" to flip to the opposite bit before the program resumes, which counts as participating. We'll designate the function of letting a program p continue running from a timestep k until halting as ¯pk.

(Note that it can very well be the case that a cell flipping its tape bit can have a negative Shapley value, e.g. if the output bit is one if the input bit does nothing, and zero if the input bit is flipped. This felt like a problem to me for a while, but now I would guess it's not an issue, and is just a genuine behavior of the program that can be compared to the other one. I continue feeling a bit confused about whether there's something worth fixing here.)

For 2., my best solution is to be (overly?) expansive in which tape cells are considered as potential contributions: Let's call the "leftmost" tape cell reached by a program on a Turing machine during the whole execution f← and the "rightmost" one f→ (f for "frontier").

Then the subrange indexed of the whole tape is a range of natural numbers [min(f←1,f←2),…,max(f→1,f→2)], abbreviated as f↔.

Cells that haven't been "reached" yet by the program (or never will) automatically have a Shapley value of 0, that just falls out of the formula.[5] Because we're taking the biggest possible "reached envelope" on the tape the tape segments for both programs have the same size.

So, for a bit b in the output of the program p, at some timestep k, we get a list of Shapley values:

ᖫ(p,t,k)=[ϕj(¯pk):j∈f↔]

We'll call ᖫ(p,t,k) the Shapley value profile of a program p at a timestep k.

Comparing Lists of Influences

ᖫ returns… a list of real numbers. So if we evaluate the Shapley value profile of two tape states for two different programs, we have to compare two same-length lists of real numbers and figure out how similar they are.

There are many ways to do so. I don't have a particular favorite, but for convience let's pretend we take the element-wise mean-squared error and call it a day.

I'll designate whatever difference measure is decided on as d, just as earlier.

Permuted Tapes

If we just use the difference between Shapley values for intermediate tape states, we won't have solved the first problem of the naïve formula: Direction-reversed programs are evaluated as being extremely dissimilar, even though they are very similar.

As hinted, I don't have a great solution to this, but my current best approach is to look at permutations of one of the tapes, and choose the one which best "matches up" the two Shapley value profiles with each other. E.g. for p,p− from earlier we'd compare the two programs using the permutation that reverses the tape of p−.

It's important that this permutation be chosen once for all timesteps.

I don't like this solution. Permutations are too permissive, and two programs where p1 is best modeled as being pairwise flips of neighboring cells of p2 are, intuitively, quite dissimilar.

My current best idea is to penalize permutations for complexity, e.g. by preferring permutations that can be constructed from few pairwise swappings (one generating set of the symmetric group). But that would strongly penalize "natural" very similar programs, such as p,p−. If anyone here has good alternative ideas, hit me up.

Final Equation

Phew! That was a lot. Putting it all together, in a similar framework as with the naïve formula, yields[6]:

挧(p1,p2,b1,b2)=1(b1≠b2)+1−max σ∈Sym(f↔)exp(−min(l1,l2)∑k=1d(σ(ᖫ(p1,t1,k)),ᖫ(p2,t2,k))

with

ᖫ(p,t,k)=[ϕj(¯pk):j∈f↔]

(red) If the two output bits are different, "start" with the logical correlation being 1. (orange) Go through the tape states backwards in terms of the two programs being run, back to the first "shared" program state. (purple) For each tape state, compute the Shapley value profile. (blue) Permute one Shapley value profile that it "best" matches up with the other one. (grey) Compute the difference of the Shapley value profiles, and (orange) sum them up.

The bigger the summed diffence, the smaller the exponent of the negative of that distance. The largest possible value of 挧 is 2−ε, the smallest possible value is 0—in cases where b1=b2 and the sum of differences is zero.

Remaining Problem: Time-Permuted Tapes

I see one clear indicator that this hasn't been ironed out yet: If p1 computes an output by first computing the "left half" and then the "right half" (in terms of location on the tape relative to the starting position), and p2 computes first the "right half" and then the "left half", but compute both halves in very similar ways, then they should be very logically correlated, but the less naïve formula will tell you that they're quite different. (Which is just a version of the tape permutation, but over runtime.)

I don't know how to account for time permutation without even more ugly hacks.

Other Ideas

The formulae I cobbled together are pretty specialized to Turing machines, and lack desired features. Some possible alternatives, which I'm not fond of for various reasons:

See Also

Suggested by GPT-4. Stands for joining, combining, uniting. Also "to suit; to fit", "to have sexual intercourse", "to fight, to have a confrontation with", or "to be equivalent to, to add up". ↩︎

Actually not explained in detail anywhere, as far as I can tell. ↩︎

Which is needed because tape states close to the output are more important than tape states early on. ↩︎

Together with adding one to avoid same logical correlations for programs with different outputs differences. ↩︎

I have the suspicion that this whole thing isn't actually a problem and one can just compare permutations of the whole infinite tape, but I don't want to take any chances with weirdnesses around permutations of infinitely many elements, or the mean-squared error between infinitely long lists. Also it's nice to be able to actually implement the solution. ↩︎

挧 is a ghost character, and as such has no previously assigned meaning. ↩︎