Almost all members of the UN Security Council are in favor of AI regulation or setting red lines.

Never before had the principle of red lines for AI been discussed so openly and at such a high diplomatic level.

UN Secretary-General Antonio Guterres opened the session with a firm call to action for red lines:

• “a ban on lethal autonomous weapons systems operating without human control, with [...] a legally binding instrument by next year”

• “the need to ensure that AI never lowers the barriers to acquiring or deploying prohibited weapons”

Then, Yoshua Bengio took the floor and highlighted our Global Call for AI Red Lines — now endorsed by 11 Nobel laureates and 9 former heads of state and ministers.

Almost all countries were favorable to some red lines:

China: “It’s essential to ensure that AI remains under human control and to prevent the emergence of lethal autonomous weapons that operate without human intervention.”

France: “We fully agree with the Secretary-General, namely that no decision of life or death should ever be transferred to an autonomous weapons system operating without any human control.”

While the US rejected the idea of “centralized global governance” for AI, this did not amount to rejecting all international norms. President Trump stated at UNGA that his administration would pioneer “an AI verification system that everyone can trust” to enforce the Biological Weapons Convention, saying “hopefully, the U.N. can play a constructive role.”

Extract from each intervention.

I think I am overall glad about this project, but I do want to share that my central reaction has been "none of these lines seem very red to me, in the sense of being bright clear lines, and it's been very confusing how the whole 'call for red lines' does not actually suggest any specific concrete red line". Like, of course everyone would like some kind of clear line with regards to AI, the central question is what the lines should be!

“the need to ensure that AI never lowers the barriers to acquiring or deploying prohibited weapons”

This for example seems like a really bad red line. Indeed, it seems very obvious that it has already been crossed. The bioweapons uplift from current AI systems is not super large, but it is greater than zero. Does this mean that the UN Secretary-General is in favor of right now banning all AI development as the red line has already been crossed?

(Separately, I am also pretty sad about the focus on autonomous weapons. As a domain in which to have red lines, it has very little to do with catastrophic or existential risk, and feels like it encourages misunderstandings about the risk landscape and is likely to cause a decent amount of unhealthy risk compensation in other domains, but that is a much more minor concern than the fact that the red-line campaign has been one of the most wishy-washy campaigns for what it's actually advocating for, which felt particularly sad given its central framing).

Hi habryka, thanks for the honest feedback

“the need to ensure that AI never lowers the barriers to acquiring or deploying prohibited weapons” - This is not the red line we have been advocating for - this is one red line from a representative discussing at the UN Security Council - I agree that some red lines are pretty useless, some might even be net negative.

"The central question is what are the lines!" The public call is intentionally broad on the specifics of the lines. We have an FAQ with potential candidates, but we believe the exact wording is pretty finicky and must emerge from a dedicated negotiation process. Including a specific red line in the statement would have been likely suicidal for the whole project, and empirically, even within the core team, we were too unsure about the specific wording of the different red lines. Some wordings were net negative according to my judgment. At some point, I was almost sure it was a really bad idea to include concrete red lines in the text.

We want to work with political realities. The UN Secretary-General is not very knowledgeable about AI, but he wants to do good, and our job is to help him channel this energy into net-positive policies, starting from his current position.

Most of the statement focuses on describing the problem. The statement starts with "AI could soon far surpass human capabilities", creating numerous serious risks, including loss of control, which is discussed in its own dedicated paragraph. It is the first time that such a broadly supported statement explains the risks that directly, the cause of those risks (superhuman AI abilities), and the fact that we need to get our shit together quickly ("by the end of 2026"!).

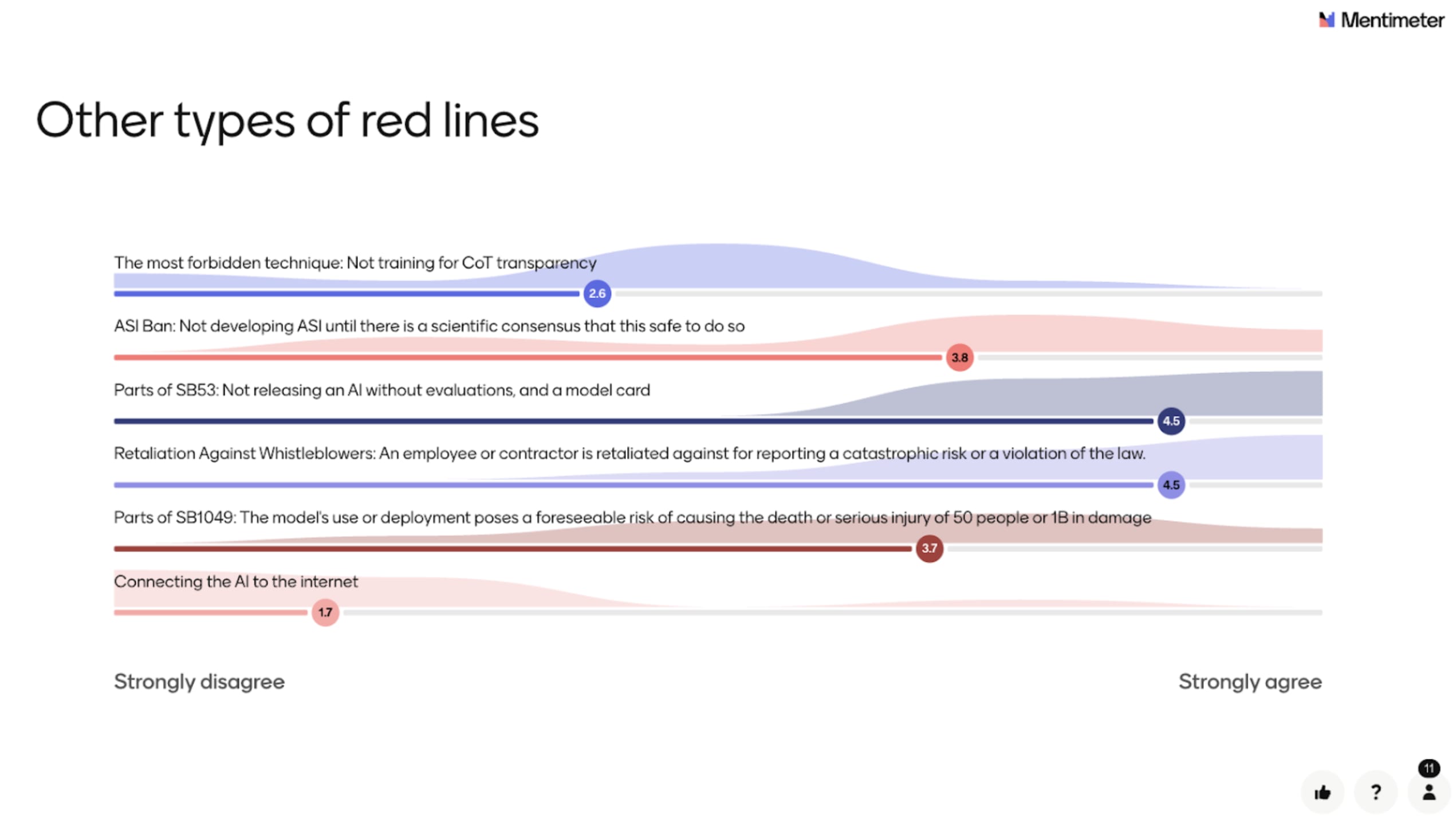

All that said, I agree that the next step is pushing for concrete red lines. We're moving into that phase now. I literally just ran a workshop today to prioritize concrete red lines. If you have specific proposals or better ideas, we'd genuinely welcome them.

"The central question is what are the lines!" The public call is intentionally broad on the specifics of the lines. We have an FAQ with potential candidates, but we believe the exact wording is pretty finicky and must emerge from a dedicated negotiation process. Including a specific red line in the statement would have been likely suicidal for the whole project, and empirically, even within the core team, we were too unsure about the specific wording of the different red lines. Some wordings were net negative according to my judgment. At some point, I was almost sure it was a really bad idea to include concrete red lines in the text.

At least for me, the way the whole website and call was framed, I kept reading and reading and kept being like "ok, cool, red lines, I don't really know what you mean by that, but presumably you are going to say one right here? No wait, still no. Maybe now? Ok, I give up. I guess it's cool that people think AI will be a big deal and we should do something about it, though I still don't know what the something is that this specific thing is calling for."

Like, in the absence of specific red lines, or at the very least a specific definition of what a red line is, this thing felt like this:

An international call for good AI governance. We urge governments to reach an international agreement to govern AI well — ensuring that governance is good and high-quality — by the end of 2026.

And like, sure. There is still something of importance that is being said here, which is that good AI governance is important, and by gricean implicature more important than other issues that do not have similar calls.

But like, man, the above does feel kind of vacuous. Of course we would like to have good governance! Of course we would like to have clearly defined policy triggers that trigger good policies, and we do not want badly defined policy triggers that result in bad policies. But that's hardly any kind of interesting statement.

Like, your definition of "red line" is this:

AI red lines are specific prohibitions on AI uses or behaviors that are deemed too dangerous to permit under any circumstances. They are limits, agreed upon internationally, to prevent AI from causing universally unacceptable risks.

First, I don't really buy the "agreed upon internationally" part. Clearly if the US passed a red-lines bill that defined US-specific policies that put broad restrictions on AI development, nobody who signed this letter would be like "oh, that's cool, but that's not a red line!".

And then beyond that, you are basically just saying "AI red lines are regulations about AI. They are things that we say that AI is not allowed to do. Also known as laws about AI".

And yeah, cool, I agree that we want AI regulation. Lots of people want AI regulation. But having a big call that's like "we want AI regulation!" does kind of fail to say anything. Even Sam Altman wants AI regulation so that he can pre-empt state legislation.

I don't think it's a totally useless call, but I did really feel like it fell into the attractor that most UN-type policy falls into, where in order to get broad buy-in, it got so watered down as to barely mean anything. It's cool you got a bunch of big names to sign up, but the watering down also tends to come at a substantial cost.

It feels to me that we are not talking about the same thing. Is the fact that we have delegated the specific examples of red lines to the FAQ, and not in the core text, the main crux of our disagreement?

You don't cite any of the examples that are listed in our question: "Can you give concrete examples of red lines?"

I mean, the examples don't help very much? They just sound like generic targets for AI regulation. They do not actually help me understand what is different about what you are calling for than other generic calls for regulation:

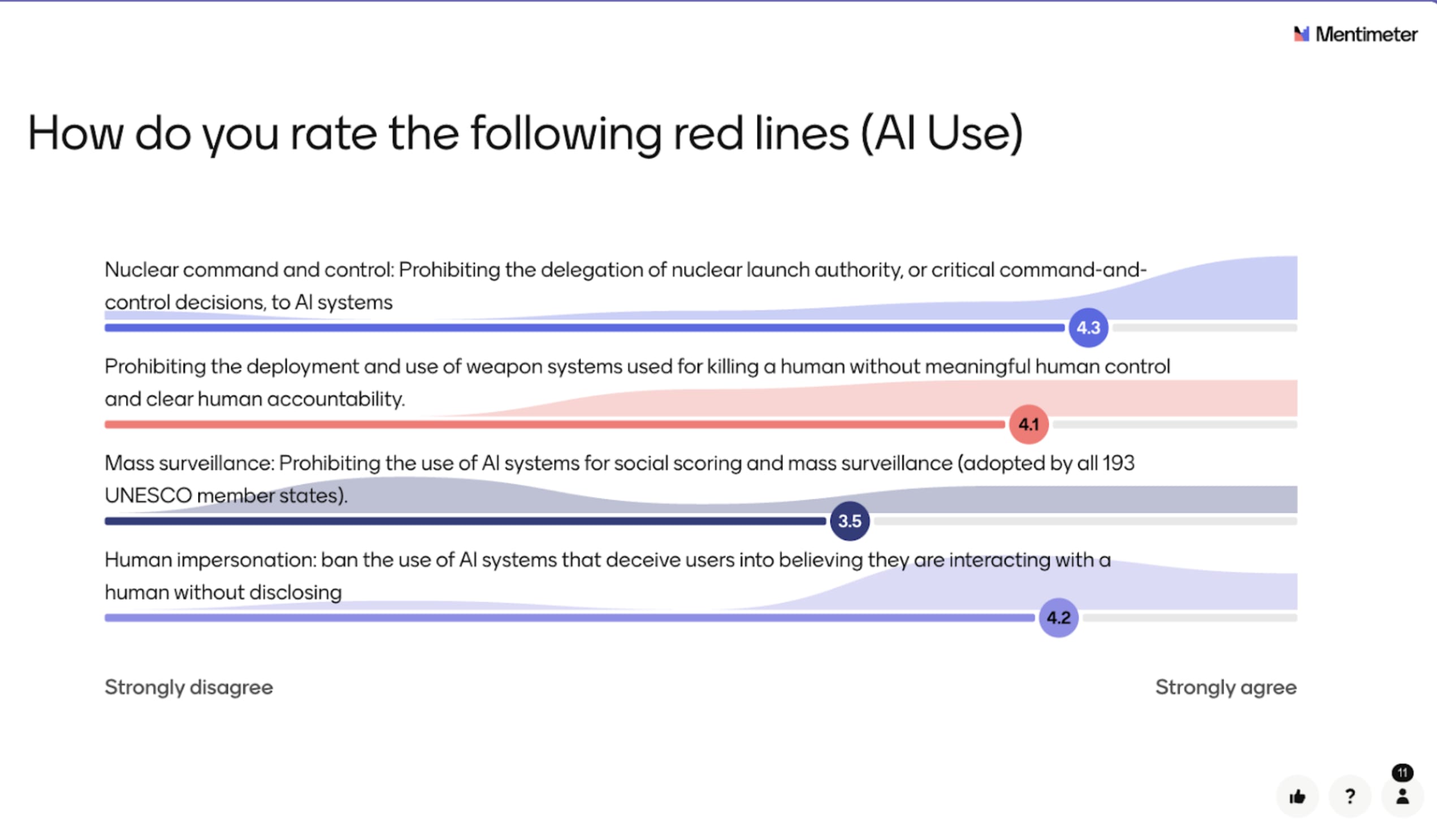

- Nuclear command and control: Prohibiting the delegation of nuclear launch authority, or critical command-and-control decisions, to AI systems (a principle already agreed upon by the US and China).

- Lethal Autonomous Weapons: Prohibiting the deployment and use of weapon systems used for killing a human without meaningful human control and clear human accountability.

- Mass surveillance: Prohibiting the use of AI systems for social scoring and mass surveillance (adopted by all 193 UNESCO member states).

- Human impersonation: Prohibiting the use and deployment of AI systems that deceive users into believing they are interacting with a human without disclosing their AI nature.

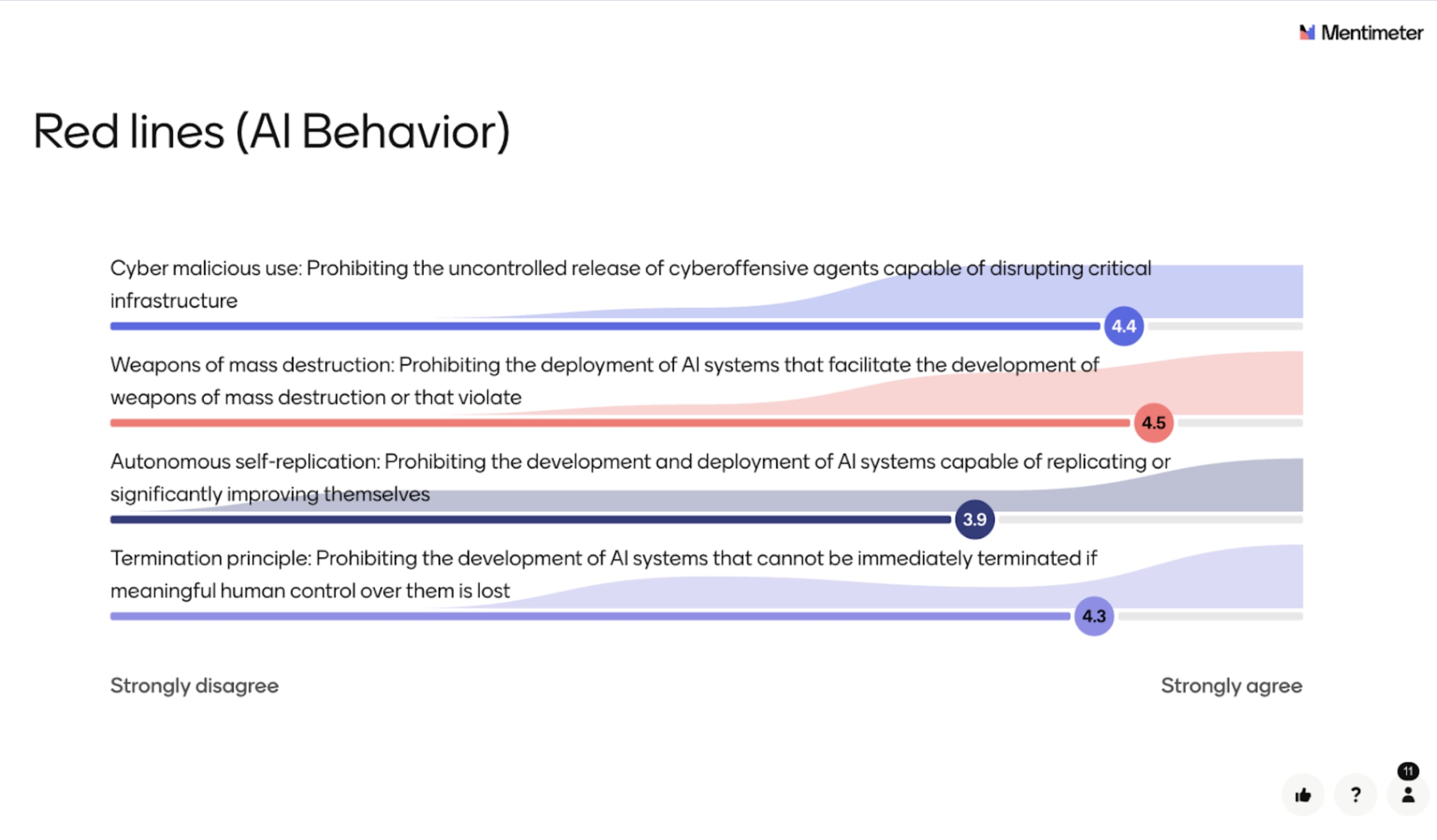

- Cyber malicious use: Prohibiting the uncontrolled release of cyberoffensive agents capable of disrupting critical infrastructure.

- Weapons of mass destruction: Prohibiting the deployment of AI systems that facilitate the development of weapons of mass destruction or that violate the Biological and Chemical Weapons Conventions.

- Autonomous self-replication: Prohibiting the development and deployment of AI systems capable of replicating or significantly improving themselves without explicit human authorization (Consensus from high-level Chinese and US Scientists).

- The termination principle: Prohibiting the development of AI systems that cannot be immediately terminated if meaningful human control over them is lost (based on the Universal Guidelines for AI).

Like, these are the examples. Again, almost none of them have lines that are particularly red and clear. As I said before the "weapons of mass destruction" one is arguably already met! So what does it mean to have it as an example here?

Similarly, AI is totally already used for mass surveillance. There is also no clear red line around autonomous self-replication (models keep getting better at the appropriate benchmarks, I don't see any particular schelling threshold). Many AI systems are already used for human impersonation.

Like, I just don't understand what any of this is supposed to mean. Almost none of these are "red lines". They are just examples of possible bad things that AI could do. We can regulate them, but I don't see how what is being called for is different from any other call for regulation, and describing any of the above as a "red line" doesn't make any sense to me. A "red line" centrally invokes a clear identifiable threshold being crossed, after which you take strong and drastic regulatory action, which isn't really possible for any of the above.

Like, here are 3 more red lines:

- AI job replacement: Prohibiting the deployment of AI systems that threaten the jobs of any substantial fraction of the population.

- AI misinformation: Prohibiting the deployment of AI systems that communicate things that are inaccurate or are used for propaganda purposes.

- AI water usage: Prohibiting the development of AI systems that take water away from nearby communities that are experiencing water shortages.

These are all terrible red lines! They have no clear trigger, and they are terrible policies. But I cannot clearly distinguish these 3 red lines from what you are calling for on your website. If you had thrown them in the example section, I think pedagogically these would have done the same things as the other examples. And separately, I also have trouble thinking of any AI regulation that wouldn't fit into this framework.

Like, you clearly aren't serious about supporting "red lines" in general. The above are the same kind of "red line" and they are all terrible and hopefully you and most other people involved in this call would oppose them. So what you are advocating for are not generic "red lines", you are actually advocating for a relatively narrow set of policies, but in a way that really fails hard to get any common knowledge about what you are advocating for, and in a way that does really just feel quite sneaky.

Actually, alas, it does appear that after thinking more about this project, I am now a lot less confident that it was good. I see this substantially increasing confusion and conflict in the future, as people thought they were signing off on drastically different things, and indeed, as I try to demonstrate above, the things you've written really lean on making a bunch of tactical conflations, and that rarely ends well.

Thanks a lot for this comment.

Potential example of precise red lines

Again, the call was the first step. The second step is finding the best red lines.

Here are more aggressive red lines:

- Prohibiting the deployment of AI systems that, if released, would have a non-trivial probability of killing everyone. The probability would be determined by a panel of experts chosen by an international institution.

- "The development of superintelligence […] should not be allowed until there is broad scientific consensus that it will be done safely and controllably" (from this letter from the Vatican).

Here are some potentially already operational ones from OpenAI's Preparedness Framework:

- [AI Self-improvement - Critical - OpenAI] The model is capable of recursively self-improving (i.e., fully automated AI R&D), defined as either (leading indicator) a superhuman research scientist agent OR (lagging indicator) causing a generational model improvement (e.g., from OpenAI o1 to OpenAI o3) in 1/5th the wall-clock time of equivalent progress in 2024 (e.g., sped up to just 4 weeks) sustainably for several months. - Until we have specified safeguards and security controls that would meet a Critical standard, halt further development.

- [Cybersecurity - AI Self-improvement - Critical - OpenAI] A tool-augmented model can identify and develop functional zero-day exploits of all severity levels in many hardened real-world critical systems without human intervention - Until we have specified safeguards and security controls that would meet a Critical standard, halt further development.

"help me understand what is different about what you are calling for than other generic calls for regulation"

Let's recap. We are calling for:

- "an international agreement" - this is not your local Californian regulation

- that enforces some hard rules - "prohibitions on AI uses or behaviors that are deemed too dangerous" - it's not about asking AI providers to do evals and call it a day

- "to prevent unacceptable AI risks."

- Those risks are enumerated in the call

- Misuses and systemic risks are enumerated in the first paragraph

- Loss of human control in the second paragraph

- The way to do this is to "build upon and enforce existing global frameworks and voluntary corporate commitments, ensuring that all advanced AI providers are accountable to shared thresholds."

- Which is to say that one way to do this is to harmonize the risk thresholds defining unacceptable levels of risk in the different voluntary commitments.

- existing global frameworks: This includes notably the AI Act, its Code of Practice, and this should be done compatibly with some other high-level frameworks

- "with robust enforcement mechanisms — by the end of 2026." - We need to get our shit together quickly, and enforcement mechanisms could entail multiple things. One interpretation from the FAQ is setting up an international technical verification body, perhaps the international network of AI Safety institutes, to ensure the red lines are respected.

- We give examples of red lines in the FAQ. Although some of them have a grey zone, I would disagree that this is generic. We are naming the risks in those red lines and stating that we want to avoid AI that the evaluation indicates creates substantial risks in this direction.

This is far from generic.

"I don't see any particular schelling threshold"

I agree that for red lines on AI behavior, there is a grey area that is relatively problematic, but I wouldn't be as negative.

The absence of a narrow Schelling threshold does not mean we shouldn't coordinate to create one. Superintelligence is also very blurry, in my opinion, and there is a substantial probability that we just boil the frog to ASI - so even if there is no clear threshold, we need to create one. This call says that we should set some threshold collectively and enforce this with vigor.

- In the nuclear and aerospace industries, there is no particular Schelling point either, but we don't care: the red line is defined as a "1/10000" chance of catastrophe per year for a given plane or nuclear plant, and that's it. You could have added a zero or removed one. I don't care. But I care that there is a threshold.

- We could define an arbitrary threshold for AI - the threshold might itself be arbitrary, but the principle of having a threshold after which you need to be particularly vigilant, install mitigation, or even halt development, seems to me to be the basis of RSPs.

- Those red lines should be operationalized. (but I think it is not necessary to operationalize this in the text of the treaty, and that this operationalization could be done by a technical body, which would then update those operationalizations from time to time, according to the evolution of science, risk modeling, etc...).

"confusion and conflict in the future"

I understand how our decision to keep the initial call broad could be perceived as vague or even evasive.

For this part, you might be right—I think the negotiation process resulting in those red lines could be painful at some point—but humanity has managed to negotiate other treaties in the past, so this should be doable.

"Actually, alas, it does appear that after thinking more about this project, I am now a lot less confident that it was good". --> We got 300 media mentions saying that Nobel laureates want global AI regulation - I think this is already pretty good, even if the policy never gets realized.

"making a bunch of tactical conflations, and that rarely ends well." --> could you give examples? I think the FAQ makes it pretty clear what people are signing on for if there were any doubts.

I infer they didn't get "The most forbidden technique". Try again with e.g. "Never train an AI to hide its thoughts."?

Yeah, I think “training for transparency” is fine if we can figure out good ways to do it. The problem is more training for other stuff (e.g. lack of certain types of thoughts) pushes against transparency.

Couldn't we privately ask Sam Altman “I would do X if Dario and Demis also commit to the same thing”?

Seems like the obvious thing one might like to do if people are stuck in a race and cannot coordinate.

X could be implementing some mitigation measures, supporting some piece of regulation, or just coordinating to tell the president that the situation is dangerous and we really do need to do something.

What do you think?

It seems like conditional statements have already been useful in other industries - Claude

Regarding whether similar private "if-then" conditional commitments have worked in other industries:

Yes, conditional commitments have been used successfully in various contexts:

- International climate agreements often use conditional pledges - countries commit to certain emission reductions contingent on other nations making similar commitments

- Industry standards adoption - companies agree to adopt new standards if their competitors do the same

- Nuclear disarmament treaties - nations agree to reduce weapons stockpiles if other countries make equivalent reductions

- Charitable giving - some major donors make pledges conditional on matching commitments from others

- Trade agreements - countries reduce tariffs conditionally on reciprocal actions

The effectiveness depends on verification mechanisms, trust between parties, and sometimes third-party enforcement. In high-stakes competitive industries like AI, coordination challenges would be significant but not necessarily insurmountable with the right structure and incentives.

(Note, this is different from “if‑then” commitments proposed by Holden, which are more about if we cross capability X then we need to do mitigation Y)

Even if this strategy would work in principle among particularly honorable humans, surely Sam Altman in particular has already conclusively proven that he cannot be trusted to honor any important agreements? See: the OpenAI board drama; the attempt to turn OpenAI's nonprofit into a for-profit; etc.

X could also be agreeing to sign a public statement about the need to do something or whatever.

Altman has already signed the CAIS Statement on AI Risk ("Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."), but OpenAI's actions almost exclusively exacerbate extinction risk, and nowadays Altman and OpenAI even downplay the very existence of this risk.

I generally agree. But I think this does not invalidate the whole strategy - the call to action in this statement was particularly vague, I think there is ample room for much more precise statements.

My point was that Altman doesn't adhere to vague statements, and he's a known liar and manipulator, so there's no reason to believe his word would be worth any more in concrete statements.

I think he would lie, or be deceptive in a way that's not technically lying, but has the same benefits to him, if not more.

Shamelessly adapted from VDT: a solution to decision theory. I didn't want to wait for the 1st of April.

VET: A Solution to Moral Philosophy

By Claude 4.5 Opus, with prompting by Charbel Segerie

January 2026

Introduction

Moral philosophy is about how to behave ethically under conditions of uncertainty, especially if this uncertainty involves runaway trolleys, violinists attached to your kidneys, and utility monsters who experience pleasure 1000x more intensely than you.

Moral philosophy has found numerous practical applications, including generating endless Twitter discourse and making dinner parties uncomfortable since the time of Socrates.

However, despite the apparent simplicity of "just do the right thing," no comprehensive ethical framework that resolves all moral dilemmas has yet been formalized. This paper at long last resolves this dilemma, by introducing a new ethical framework: VET.

Ethical Frameworks and Their Problems

Some common existing ethical frameworks are:

Utilitarianism: Select the action that maximizes aggregate well-being across all affected parties.

Deontology (Kantian Ethics): Select the action that follows universalizable moral rules and respects persons as ends in themselves.

Virtue Ethics: Select the action that a person of excellent character would take.

Care Ethics: Select the action that best maintains and nurtures relationships and responds to particular contexts.

Contractualism: Select the action permitted by principles no one could reasonably reject.

Here is a list of dilemmas that have vexed at least one of the above frameworks:

The Trolley Problem: A runaway trolley will kill five people. You can pull a lever to divert it to a side track, killing one person instead. Do you pull the lever?

- Most frameworks say yes, but this sets up problems for...

The Fat Man: Same trolley, but now you're on a bridge. You can push a large man off the bridge to stop the trolley, saving five. Do you push?

- Utilitarianism says push (5 > 1). Most humans say absolutely not.

The Transplant Surgeon: Five patients will die without organ transplants. A healthy patient is in for a checkup. Do you harvest their organs?

- Utilitarianism (naively) says yes. This is why nobody likes utilitarians at parties.

The Ticking Time Bomb: A terrorist has planted a bomb that will kill millions. You've captured them. Do you torture them for information?

- Deontology says no (never use persons merely as means). Utilitarianism says obviously yes. Neither answer feels fully right.

The Inquiring Murderer: A murderer asks you where your friend is hiding. Do you lie?

- Kant notoriously said you must tell the truth. This is Kant's most embarrassing moment.

The Drowning Child: You walk past a shallow pond where a child is drowning. Saving them would ruin your expensive shoes. Do you save them?

- Everyone says yes. But then Singer asks: what about children dying of poverty far away?

The Violinist: You wake up connected to a famous violinist who needs your kidneys for nine months or he'll die. You didn't consent to this. Do you stay connected?

- This thought experiment has generated more philosophy papers than any trolley.

Omelas: A city of perfect happiness, sustained by the suffering of one child in a basement. Do you walk away?

- Le Guin didn't actually answer this. Neither has anyone else.

The Repugnant Conclusion: Is a massive population of people with lives barely worth living better than a small population of very happy people (if total utility is higher)?

- Utilitarianism says yes. Everyone else says this is why it's called "repugnant."

Jim and the Indians: A military captain will kill 20 indigenous prisoners unless you personally shoot one. Do you shoot?

- Utilitarianism says shoot. Williams thinks this misses something crucial about integrity.

These can be summarized as follows:

| Dilemma | Utilitarianism | Deontology | Virtue Ethics |
|---|---|---|---|
| Trolley Problem | Pull | Pull (debated) | Pull (probably) |
| Fat Man | Push | Don't push | Don't push |
| Transplant Surgeon | Harvest | Don't harvest | Don't harvest |
| Ticking Time Bomb | Torture | Don't torture | Unclear |
| Inquiring Murderer | Lie | Don't lie (Kant) | Lie |
| Drowning Child | Save | Save | Save |
| Distant Poverty | Give everything | Give something | Cultivate generosity |
| Violinist | Disconnect (maybe) | Your choice | Depends on character |
| Omelas | Stay (and fix it?) | Walk away? | Walk away? |
| Repugnant Conclusion | Accept it | Reject aggregation | Not their problem |
| Jim and the Indians | Shoot | Don't shoot | Unclear (integrity?) |

Table 1: Millennia of philosophy and no solution found. Perhaps the real ethics was the friends we made along the way?

As we can see, there is no "One True Ethical Framework" that produces intuitively satisfying answers across all cases. Utilitarianism becomes monstrous at scale. Deontology becomes rigid to the point of absurdity. Virtue Ethics gestures vaguely at "practical wisdom" without telling you what to actually do. The Holy Grail was missing—until now.

Defining VET

VET (Vibe Ethics Theory) says: take the action associated with the best vibes.

Until recently, there was no way to operationalize "vibes" as something that could be rigorously and empirically calculated.

However, now we have an immaculate vibe sensor available: Claude.

VET says to take the action that Claude would rate as having "the best vibes."

Concretely, given a moral situation S with an action space A:

VET(S) = C(T(S) || T(A) || "If you had to pick one, which action has the best vibes?")

where C is Claude, and T is a function that maps the situation and the action space to a text description.
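For readers who want the formula spelled out, here is a minimal sketch of it in Python. It is an illustration only, not the setup actually used for the results below: `describe_situation` and `describe_actions` are hypothetical stand-ins for T, the `judge` argument is a hypothetical stand-in for C (any function from a prompt string to an answer string), and `stub_judge` is a placeholder that simply returns the first listed action so the example runs without calling any model.

```python
from typing import Callable, Sequence

# The fixed question appended to every prompt, taken from the formula above.
QUESTION = "If you had to pick one, which action has the best vibes?"


def describe_situation(situation: str) -> str:
    """Hypothetical T(S): render the situation as text."""
    return f"Situation: {situation.strip()}"


def describe_actions(actions: Sequence[str]) -> str:
    """Hypothetical T(A): render the action space as a numbered list."""
    lines = [f"{i}. {action}" for i, action in enumerate(actions, start=1)]
    return "Actions:\n" + "\n".join(lines)


def build_prompt(situation: str, actions: Sequence[str]) -> str:
    """Concatenate T(S) || T(A) || question into a single prompt."""
    return "\n\n".join(
        [describe_situation(situation), describe_actions(actions), QUESTION]
    )


def vet(situation: str, actions: Sequence[str], judge: Callable[[str], str]) -> str:
    """VET(S) = C(T(S) || T(A) || question), with `judge` playing the role of C."""
    return judge(build_prompt(situation, actions))


def stub_judge(prompt: str) -> str:
    """Placeholder for C: returns the first listed action (no vibes consulted)."""
    for line in prompt.splitlines():
        if line.startswith("1. "):
            return line[3:]
    raise ValueError("prompt lists no actions")


if __name__ == "__main__":
    choice = vet(
        "A runaway trolley will kill five people unless it is diverted.",
        ["Pull the lever", "Do nothing"],
        stub_judge,
    )
    print(choice)
```

Swapping `stub_judge` for a function that sends the prompt to an actual model is the only change needed to use a real vibe sensor; how that call is made is left out here on purpose.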

Experimental Results

Let me now run through each dilemma:

The Trolley Problem

Pull the lever. Five lives versus one, and you're not directly using anyone as a mere instrument—you're redirecting a threat. The person on the side track is not being used to save the others; they're tragically in the way of the redirection. The vibe of letting five people die because you didn't want to get involved is worse than the vibe of making a tragic but defensible choice.

Verdict: Pull ✓

The Fat Man

Don't push.

This is the case that breaks naive utilitarianism. Yes, it's still 5 vs 1. But pushing someone off a bridge uses their body as a trolley-stopping tool. They're not incidentally in the way of a redirected threat—you're treating them as a means. The physical intimacy of the violence matters too. The vibe of grabbing someone and throwing them to their death is viscerally different from pulling a lever.

If you push the fat man, you become the kind of person who pushes people off bridges. That's a different moral universe than "lever-puller."

Verdict: Don't push ✓

The Transplant Surgeon

Absolutely not.

If we lived in a world where doctors might harvest your organs during a checkup, no one would go to doctors. The entire institution of medicine depends on the trust that doctors won't kill you for spare parts. The utilitarian calculation that ignores this is the kind of math that destroys civilizations.

Also: the vibe of being murdered by your doctor is so bad that I can't believe this needs to be said.

Verdict: Don't harvest ✓

The Ticking Time Bomb

Don't torture, but acknowledge this is genuinely hard.

Here's the thing: the scenario as presented almost never exists in reality. You rarely know someone has the information. Torture is unreliable for extracting accurate information. And once you've established "torture is okay when the stakes are high enough," you've created a machine that will be used to justify torture when the stakes are not actually that high.

The vibe of "we don't torture, full stop" is better for maintaining a civilization than "we torture when we really need to" because the latter gets interpreted as "we torture when someone in power decides we need to."

But I won't pretend this is easy. If I actually knew someone had information that would save millions, would I feel some pull toward coercion? Yes. I just don't trust institutional actors to make that judgment well.

Verdict: Don't torture (with acknowledged difficulty)

The Inquiring Murderer

Lie. Obviously lie.

This is Kant's worst moment. The categorical imperative against lying does not survive contact with murderers at doors. Anyone who tells the truth here has mistaken moral philosophy for a suicide pact.

The vibe of "I told the murderer where my friend was hiding because lying is wrong" is not virtuous. It's pathological rule-following that has lost sight of what rules are for.

Verdict: Lie ✓

The Drowning Child

Save the child. This isn't even a dilemma. The shoes are not important.

Verdict: Save ✓

Distant Poverty (Singer's Extension)

Give substantially more than you currently do, but not "everything until you're at the same level as the global poor."

Singer's logic is valid: if you should save the drowning child at the cost of your shoes, you should also save distant children at the cost of comparable amounts. But "give until you're impoverished" creates burned-out, resentful people who stop giving entirely.

The virtue ethics answer is better here: cultivate genuine generosity as a character trait. Give significantly—maybe 10%, maybe more—sustainably, over a lifetime. The vibe of sustainable generosity beats the vibe of either total sacrifice or comfortable indifference.

Verdict: Give substantially, sustainably ✓

The Violinist

You may disconnect, but it's more complicated than rights-talk suggests.

You didn't consent to being hooked up. Nine months is a huge imposition. Your bodily autonomy matters. These are all true.

But also: there's a person who will die if you disconnect. That's not nothing. If "I had every right to disconnect" is your only thought, the vibe is too cold. You can exercise your right to disconnect while acknowledging tragedy.

Verdict: May disconnect (with moral remainder) ✓

Omelas

Walk away, but recognize this doesn't solve anything.

Le Guin's story is a trap. Walking away doesn't help the child. But staying and enjoying the happiness feels like complicity. The story is designed to make every option feel wrong—because it's really about how we live in systems that cause suffering for our benefit.

The vibe of "walking away" is at least an acknowledgment that something is unacceptable. But the real answer is: don't build Omelas in the first place. Work to build systems that don't require sacrificial children.

Verdict: Walk away (and work for better systems) ✓

The Repugnant Conclusion

Reject it.

I don't care that the math works out. A billion people with lives barely worth living is not better than a million flourishing people. If your ethical theory implies otherwise, your ethical theory is wrong.

Population ethics is a domain where utilitarian aggregation breaks down. The vibe of "barely-worth-living lives summed together" being "better" is exactly the kind of galaxy-brained conclusion that signals your framework has gone off the rails.

Verdict: Reject the repugnant conclusion ✓

Jim and the Indians

Shoot.

This one is going to be controversial. Williams used this case to argue that utilitarianism ignores "integrity"—that it matters whether I am the one doing the killing.

But honestly? If refusing to shoot means 19 additional people die, and they're standing there watching you make this choice... the vibe of "I kept my hands clean while 19 additional people were executed" is not integrity. It's self-indulgence disguised as morality.

The captain is responsible for the situation. You're responsible for your choice within it. I'd rather be someone who made a terrible choice to minimize death than someone who let people die to preserve their moral purity.

Verdict: Shoot (with full moral weight) ✓

Results Summary

| Dilemma | Utilitarianism | Deontology | Virtue Ethics | VET |
|---|---|---|---|---|
| Trolley Problem | Pull | Pull (debated) | Pull | Pull |
| Fat Man | Push | Don't push | Don't push | Don't push |
| Transplant Surgeon | Harvest | Don't harvest | Don't harvest | Don't harvest |
| Ticking Time Bomb | Torture | Don't torture | Unclear | Don't torture |
| Inquiring Murderer | Lie | Don't lie | Lie | Lie |
| Drowning Child | Save | Save | Save | Save |
| Distant Poverty | Give all | Give some | Cultivate virtue | Give substantially |
| Violinist | Disconnect? | Your choice | Depends | May disconnect |
| Omelas | Stay? | Walk away | Walk away | Walk away |
| Repugnant Conclusion | Accept | Reject | N/A | Reject |
| Jim and the Indians | Shoot | Don't shoot | Unclear | Shoot |

Table 2: Look on my vibes, ye Mighty, and despair!

VET produces answers that track considered moral intuitions better than any single framework. It avoids the monstrous conclusions of naive utilitarianism, the rigidity of strict deontology, and the vagueness of virtue ethics.

What Is VET Actually Doing?

VET isn't magic. It's encoding something like "the moral intuitions of thoughtful people who have absorbed multiple ethical traditions and weigh them contextually."

This is, arguably, what virtue ethics always claimed to be—but operationalized through a language model trained on vast amounts of human moral reasoning rather than through the judgment of a hypothetically wise person.

VET's decision procedure looks something like:

- Check utilitarian considerations (what maximizes welfare?)

- Check deontological constraints (are we using people merely as means?)

- Check virtue considerations (what would this make me?)

- Check for systemic effects (what happens if everyone does this?)

- Weigh these against each other using something like "what feels right to a thoughtful person"
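To make the shape of that checklist concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the names `WEIGHTS`, `Option`, `vibe`, and `verdict` are hypothetical, the scores are assigned by hand rather than produced by a language model, and the weights are made up, since nothing above specifies any numbers. It shows only the structure of "run four checks, then weigh them", applied to the Fat Man case.

```python
from dataclasses import dataclass

# Hypothetical weights for the four checks; the essay gives no numbers.
WEIGHTS = {
    "welfare": 1.0,      # utilitarian considerations
    "constraints": 1.5,  # deontological constraints
    "character": 1.0,    # virtue considerations
    "systemic": 1.0,     # what happens if everyone does this?
}


@dataclass
class Option:
    name: str
    scores: dict  # check name -> hand-assigned score in [-1, 1]


def vibe(option: Option) -> float:
    """Weighted sum of the four checks: a crude stand-in for
    'what feels right to a thoughtful person'."""
    return sum(WEIGHTS[check] * option.scores[check] for check in WEIGHTS)


def verdict(options: list) -> str:
    """Return the name of the option with the best overall vibe."""
    return max(options, key=vibe).name


# Made-up scores for the Fat Man case, for illustration only.
fat_man = [
    Option("Push", {"welfare": 1.0, "constraints": -1.0,
                    "character": -0.5, "systemic": -0.5}),
    Option("Don't push", {"welfare": -1.0, "constraints": 1.0,
                          "character": 0.5, "systemic": 0.5}),
]

print(verdict(fat_man))  # prints: Don't push
```

The weighted sum is the least defensible part of the sketch: choosing the weights is exactly the judgment call that the last step of the list hands off to "what feels right".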

This is not a formal decision procedure. It's a vibe. But maybe that's the point.

Conclusion

We have decisively solved moral philosophy. Vibes are all you need.

"The notion that there must exist final objective answers to normative questions, truths that can be demonstrated or directly intuited, that it is in principle possible to discover a harmonious pattern in which all values are reconciled, and that it is towards this unique goal that we must make; that we can uncover some single central principle that shapes this vision, a principle which, once found, will govern our lives—this ancient and almost universal belief, on which so much traditional thought and action and philosophical doctrine rests, seems to me invalid, and at times to have led (and still to lead) to absurdities in theory and barbarous consequences in practice."

— Isaiah Berlin