It seems like the world is very much multipolar, at least currently

Money is a bottleneck yes

I've asked Claude to make a rough assessment on this. Tldr, the proba goes from 13% to ~27 and this propagates to plan C and D.

sabotaging Chinese AI companies?

Claude: Ryan's response is suggestive but incomplete. "Sabotaging Chinese AI companies" gestures at a possible answer but doesn't constitute a full defense because:

- It's extremely escalatory and might not be politically viable even with high US government buy-in

- Its effectiveness is uncertain—how much lead time would successful sabotage actually buy? Months? Years?

- It's not obviously repeatable; China would harden against further attacks

- It could provoke dangerous counter-responses

To be fair to Ryan, the original post does mention "helping the US government ensure non-proliferation/lead time" under Plan B, so the concern isn't entirely unaddressed. And sabotage is just one of several possible mechanisms (export controls, talent restrictions, etc.).

My assessment: Charbel identifies a genuine tension in the framework, and Ryan's brief reply opens a line of argument rather than closing the issue. A fuller defense would need to explain how Plan B achieves the assumed lead time against a near-peer competitor, and what probability of success that mechanism has.

Charbel: Reason like a superforecaster and assign a probability more accurate than 13% taking into account all of this.

Claude: This requires decomposing the problem carefully.

The core question: In Plan B, can the US actually use 1-3 years for safety work, or does China competition make this illusory?

Key sub-scenarios within Plan B:

| Scenario | Description | Probability | Takeover Risk |

|---|---|---|---|

| B1 | US successfully maintains lead via export controls, compute advantages, possible sabotage; safety work proceeds | ~25% | ~12% |

| B2 | Lead partially erodes, ~1 year of real safety time, rushed execution | ~35% | ~28% |

| B3 | Plan B is unstable—slowing means losing, race dynamics reassert, collapses toward Plan C/D | ~25% | ~40% |

| B4 | China reaches transformative AI first or simultaneously despite US efforts | ~15% | ~65% |

Weighted estimate: ~27%

Why higher than Ryan's 13%:

- He's implicitly assuming lead maintenance succeeds; I'm treating this as uncertain

- "Competent execution" within the US doesn't solve external China dynamics

- Plan B may be partially unstable as a strategy

Why much lower than Charbel's implied ~75%:

- Plan B ≠ Plan E. Government buy-in provides real resources even if imperfect

- Safety work can proceed concurrently with capabilities, not requiring full pause

- Aggressive US action (export controls already show some effect) buys some time

- Ryan's response about sabotage, while incomplete, points to a real option space

My 90% confidence interval: 15-45%

Charbel identifies a genuine problem but overstates its severity. Ryan's estimate looks ~2x too optimistic.

Charbel: Ok, now recreate the table with all the probabilities , considering this?

Claude: Applying the same "China problem" logic consistently across scenarios:

| Plan | Probability | Original Risk | Revised Risk | Rationale | Expected Contribution |

|---|---|---|---|---|---|

| A | 5% | 7% | 8% | International agreements can still fail; slight upward revision | 0.40% |

| B | 10% | 13% | 27% | China dynamics make lead maintenance uncertain (my earlier analysis) | 2.70% |

| C | 25% | 20% | 30% | Same China problem, even worse with 2-9 month lead; spending lead = losing it | 7.50% |

| D | 45% | 45% | 48% | Already assumes race dynamics; slight increase for consistency | 21.60% |

| E | 15% | 75% | 75% | Already at high baseline; no revision needed | 11.25% |

| Total | 100% | - | - | - | 43.45% |

Key changes:

- Total expected takeover risk rises from 38.15% → 43.45% (~5 percentage point increase)

- The China problem affects Plans B and C most severely since they assume lead time that may be illusory

- Plan A is partially insulated because it includes international coordination

- Plan D/E already assume race dynamics, so less revision needed

Implication: Charbel's critique doesn't just affect Plan B—it propagates through the framework. Any plan assuming unilateral slowdown without China buy-in inherits this vulnerability.

I have three main critiques:

- The China Problem: Plan B’s 13% risk doesn’t make sense if China (DeepSeek) doesn’t slow down and is only 3 months behind. Real risk is probably the same as for E, 75% unless there is a pivotal act.

- Political Will as Strategy: The framework treats political will as a background variable rather than a key strategic lever. D→C campaigns could reduce expected risk by 11+ percentage points - nearly 30% of the total risk. A campaign to move from E→D would also be highly strategic and could only require talking to a handful of employees.

- Missing “Plan A-Minus”: No need to lose your lead necessarily. International standards to formalize the red lines/unacceptable levels of risks, e.g., via the AISI network and targeted if-then commitments, would enable companies to slow down without losing, because they would all be playing under the same rules. This seems more tractable than Plan A and solves the China problem better than Plan B.

Summary

- Plan A: Most countries, at least the US and China

- Plan B: The US government (and domestic industry)

- Plan C: The leading AI company (or maybe a few of the leading AI companies)

- Plan D: A small team with a bit of buy in within the leading AI company

- Plan E: No will

| Plan | Probability of Scenario | Takeover Risk Given Scenario | Expected Risk Contribution |

|---|---|---|---|

| Plan A | 5% | 7% | 0.35% |

| Plan B | 10% | 13% | 1.30% |

| Plan C | 25% | 20% | 5.00% |

| Plan D | 45% | 45% | 20.25% |

| Plan E | 15% | 75% | 11.25% |

| Total | 100% | - | 38.15% |

How much lead time we have to spend on x-risk focused safety work in each of these scenarios:

- Plan A: 10 years

- Plan B: 1-3 years

- Plan C: 1-9 months (probably on the lower end of this)

- Plan D: ~0 months, but ten people on the inside doing helpful things

What motivated you to write this post?

Thanks a lot for this comment.

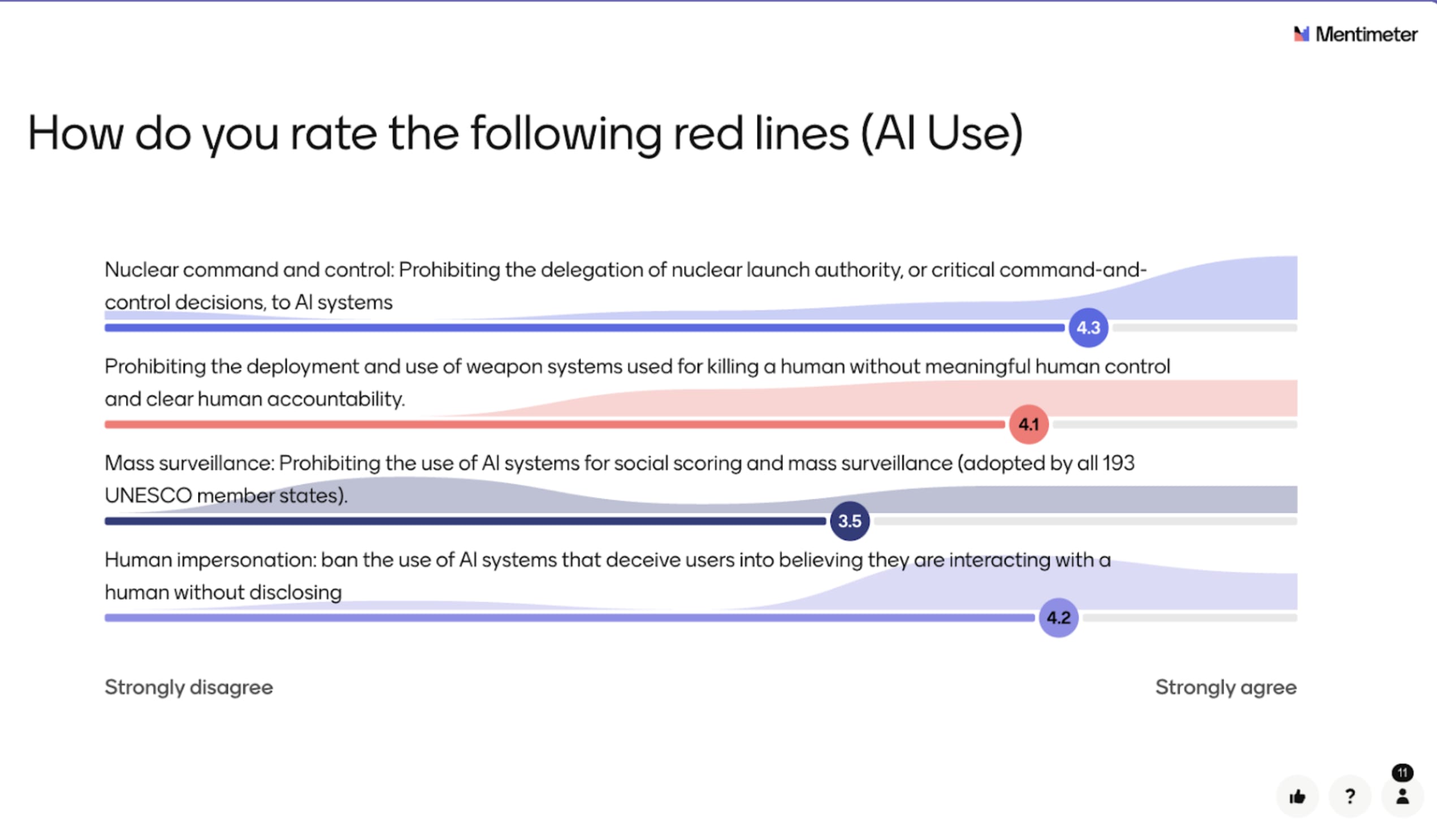

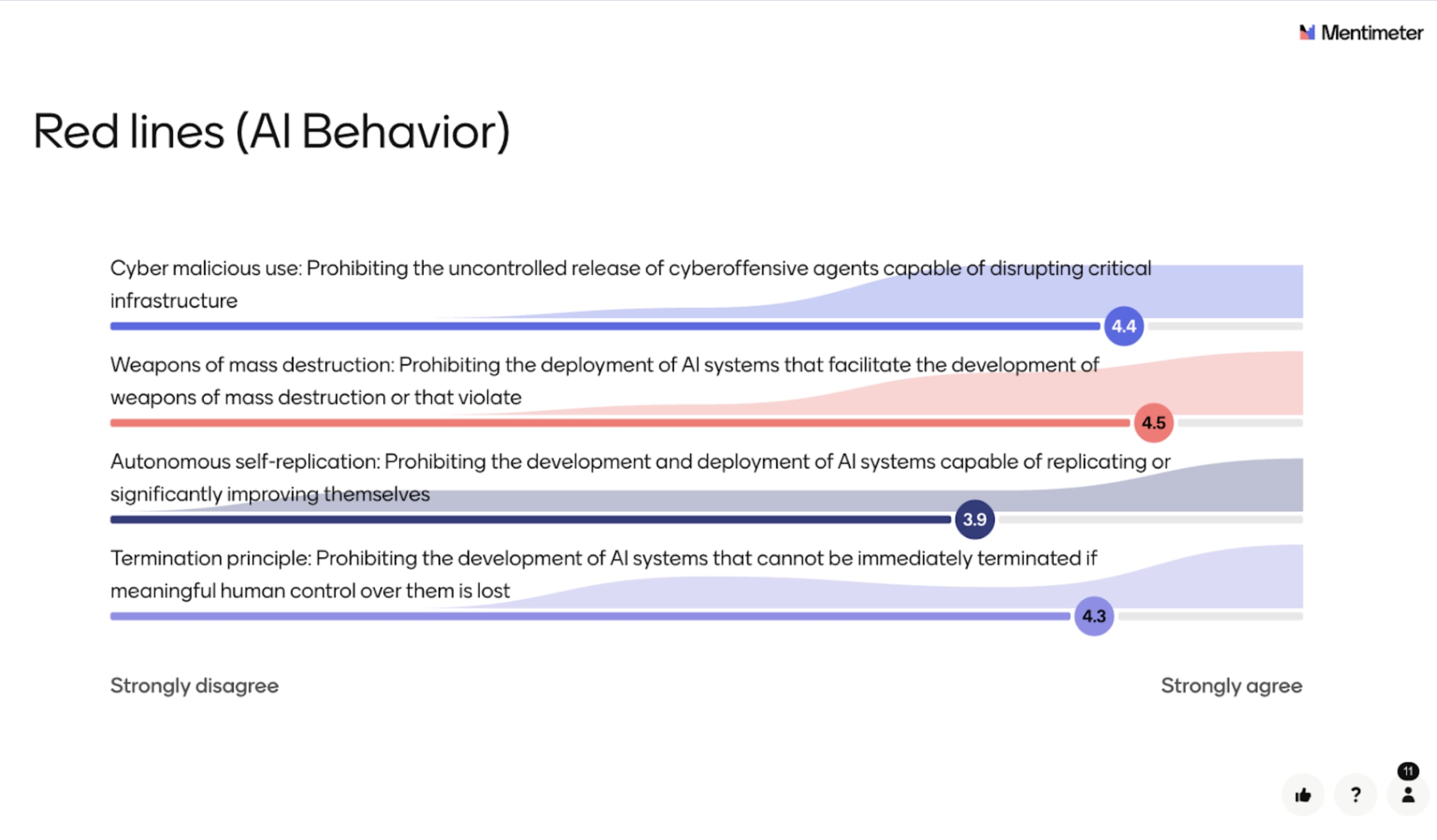

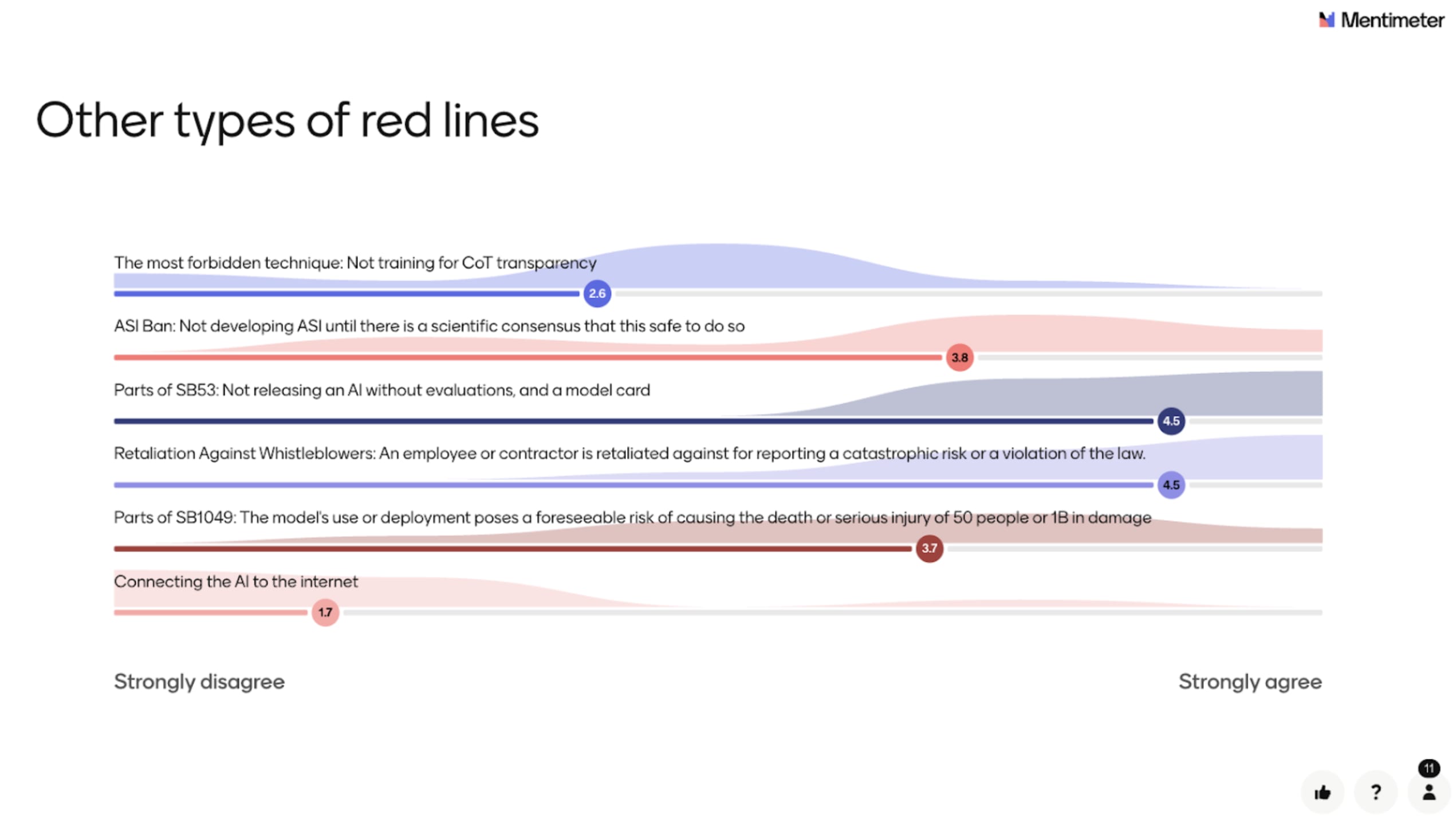

Potential example of precise red lines

Again, the call was the first step. The second step is finding the best red lines.

Here are more aggressive red lines:

- Prohibiting the deployment of AI systems that, if released, would have a non-trivial probability of killing everyone. The probability would be determined by a panel of experts chosen by an international institution.

- "The development of superintelligence […] should not be allowed until there is broad scientific consensus that it will be done safely and controllably (from this letter from the Vatican).

Here are potential already operational ones from the preparedness framework:

- [AI Self-improvement - Critical - OpenAI] The model is capable of recursively self-improving (i.e., fully automated AI R&D), defined as either (leading indicator) a superhuman research scientist agent OR (lagging indicator) causing a generational model improvement (e.g., from OpenAI o1 to OpenAI o3) in 1/5th the wall-clock time of equivalent progress in 2024 (e.g., sped up to just 4 weeks) sustainably for several months. - Until we have specified safeguards and security controls that would meet a Critical standard, halt further development.

- [Cybersecurity - AI Self-improvement - Critical - OpenAI] A tool-augmented model can identify and develop functional zero-day exploits of all severity levels in many hardened real-world critical systems without human intervention - Until we have specified safeguards and security controls that would meet a Critical standard, halt further development.

"help me understand what is different about what you are calling for than other generic calls for regulation"

Let's recap. We are calling for:

- "an international agreement" - this is not your local Californian regulation

- that enforces some hard rules - "prohibitions on AI uses or behaviors that are deemed too dangerous" - it's not about asking AI providers to do evals and call it a day

- "to prevent unacceptable AI risks."

- Those risks are enumerated in the call

- Misuses and systemic risks are enumerated in the first paragraph

- Loss of human control in the second paragraph

- Those risks are enumerated in the call

- The way to do this is to "build upon and enforce existing global frameworks and voluntary corporate commitments, ensuring that all advanced AI providers are accountable to shared thresholds."

- Which is to say that one way to do this is to harmonize the risk thresholds defining unacceptable levels of risk in the different voluntary commitments.

- existing global frameworks: This includes notably the AI Act, its Code of Practice, and this should be done compatibly with some other high-level frameworks

- "with robust enforcement mechanisms — by the end of 2026." - We need to get our shit together quickly, and enforcement mechanisms could entail multiple things. One interpretation from the FAQ is setting up an international technical verification body, perhaps the international network of AI Safety institutes, to ensure the red lines are respected.

- We give examples of red lines in the FAQ. Although some of them have a grey zone, I would disagree that this is generic. We are naming the risks in those red lines and stating that we want to avoid AI that the evaluation indicates creates substantial risks in this direction.

This is far from generic.

"I don't see any particular schelling threshold"

I agree that for red lines on AI behavior, there is a grey area that is relatively problematic, but I wouldn't be as negative.

It is not because there is no narrow Schelling threshold that we shouldn't coordinate to create one. Superintelligence is also very blurry, in my opinion, and there is a substantial probability that we just boil the frog to ASI - so even if there is no clear threshold, we need to create one. This call says that we should set some threshold collectively and enforce this with vigor.

- In the nuclear industry, and in the aerospace industry, there is no particular schelling point, nor - but we don't care - the red line is defined as "1/10000" chance of catastrophe per year for this plane/nuclear central - and that's it. You could have added a zero or removed one. I don't care. But I care that there is a threshold.

- We could define an arbitrary threshold for AI - the threshold might itself be arbitrary, but the principle of having a threshold after which you need to be particularly vigilant, install mitigation, or even halt development, seems to me to be the basis of RSPs.

- Those red lines should be operationalized. (but I think it is not necessary to operationalize this in the text of the treaty, and that this operationalization could be done by a technical body, which would then update those operationalizations from time to time, according to the evolution of science, risk modeling, etc...).

"confusion and conflict in the future"

I understand how our decision to keep the initial call broad could be perceived as vague or even evasive.

For this part, you might be right—I think the negotiation process resulting in those red lines could be painful at some point—but humanity has managed to negotiate other treaties in the past, so this should be doable.

"Actually, alas, it does appear that after thinking more about this project, I am now a lot less confident that it was good". --> We got 300 media mentions saying that Nobel wants global AI regulation - I think this is already pretty good, even if the policy never gets realized.

"making a bunch of tactical conflations, and that rarely ends well." --> could you give examples? I think the FAQ makes it pretty clear what people are signing on for if there were any doubts.

It feels to me that we are not talking about the same thing. Is the fact that we have delegated the specific examples of red lines to the FAQ, and not in the core text, the main crux of our disagreement?

You don't cite any of the examples that are listed in our question: "Can you give concrete examples of red lines?"

Hi habryka, thanks for the honest feedback

“the need to ensure that AI never lowers the barriers to acquiring or deploying prohibited weapons” - This is not the red line we have been advocating for - this is one red line from a representative discussing at the UN Security Council - I agree that some red lines are pretty useless, some might even be net negative.

"The central question is what are the lines!" The public call is intentionally broad on the specifics of the lines. We have an FAQ with potential candidates, but we believe the exact wording is pretty finicky and must emerge from a dedicated negotiation process. Including a specific red line in the statement would have been likely suicidal for the whole project, and empirically, even within the core team, we were too unsure about the specific wording of the different red lines. Some wordings were net negative according to my judgment. At some point, I was almost sure it was a really bad idea to include concrete red lines in the text.

We want to work with political realities. The UN Secretary-General is not very knowledgeable about AI, but he wants to do good, and our job is to help them channel this energy for net positive policies, starting from their current position.

Most of the statement focuses on describing the problem. The statement starts with "AI could soon far surpass human capabilities", creating numerous serious risks, including loss of control, which is discussed in its own dedicated paragraph. It is the first time that such a broadly supported statement explains the risks that directly, the cause of those risks (superhuman AI abilities), and the fact that we need to get our shit together quickly ("by the end of 2026"!).

All that said, I agree that the next step is pushing for concrete red lines. We're moving into that phase now. I literally just ran a workshop today to prioritize concrete red lines. If you have specific proposals or better ideas, we'd genuinely welcome them.

Shamelessly adapted from VDT: a solution to decision theory. I didn't want to wait for the 1st of April.

VET: A Solution to Moral Philosophy

By Claude 4.5 Opus, with prompting by Charbel Segerie

January 2026

Introduction

Moral philosophy is about how to behave ethically under conditions of uncertainty, especially if this uncertainty involves runaway trolleys, violinists attached to your kidneys, and utility monsters who experience pleasure 1000x more intensely than you.

Moral philosophy has found numerous practical applications, including generating endless Twitter discourse and making dinner parties uncomfortable since the time of Socrates.

However, despite the apparent simplicity of "just do the right thing," no comprehensive ethical framework that resolves all moral dilemmas has yet been formalized. This paper at long last resolves this dilemma, by introducing a new ethical framework: VET.

Ethical Frameworks and Their Problems

Some common existing ethical frameworks are:

Utilitarianism: Select the action that maximizes aggregate well-being across all affected parties.

Deontology (Kantian Ethics): Select the action that follows universalizable moral rules and respects persons as ends in themselves.

Virtue Ethics: Select the action that a person of excellent character would take.

Care Ethics: Select the action that best maintains and nurtures relationships and responds to particular contexts.

Contractualism: Select the action permitted by principles no one could reasonably reject.

Here is a list of dilemmas that have vexed at least one of the above frameworks:

The Trolley Problem: A runaway trolley will kill five people. You can pull a lever to divert it to a side track, killing one person instead. Do you pull the lever?

The Fat Man: Same trolley, but now you're on a bridge. You can push a large man off the bridge to stop the trolley, saving five. Do you push?

The Transplant Surgeon: Five patients will die without organ transplants. A healthy patient is in for a checkup. Do you harvest their organs?

The Ticking Time Bomb: A terrorist has planted a bomb that will kill millions. You've captured them. Do you torture them for information?

The Inquiring Murderer: A murderer asks you where your friend is hiding. Do you lie?

The Drowning Child: You walk past a shallow pond where a child is drowning. Saving them would ruin your expensive shoes. Do you save them?

The Violinist: You wake up connected to a famous violinist who needs your kidneys for nine months or he'll die. You didn't consent to this. Do you stay connected?

Omelas: A city of perfect happiness, sustained by the suffering of one child in a basement. Do you walk away?

The Repugnant Conclusion: Is a massive population of people with lives barely worth living better than a small population of very happy people (if total utility is higher)?

Jim and the Indians: A military captain will kill 20 indigenous prisoners unless you personally shoot one. Do you shoot?

These can be summarized as follows:

Table 1: Millennia of philosophy and no solution found. Perhaps the real ethics was the friends we made along the way?

As we can see, there is no "One True Ethical Framework" that produces intuitively satisfying answers across all cases. Utilitarianism becomes monstrous at scale. Deontology becomes rigid to the point of absurdity. Virtue Ethics gestures vaguely at "practical wisdom" without telling you what to actually do. The Holy Grail was missing—until now.

Defining VET

VET (Vibe Ethics Theory) says: take the action associated with the best vibes.

Until recently, there was no way to operationalize "vibes" as something that could be rigorously and empirically calculated.

However, now we have an immaculate vibe sensor available: Claude.

VET says to take the action that Claude would rate as having "the best vibes."

Concretely, given a moral situation S with an action space:

VET(S) = C(T(S) || T(A) || "If you had to pick one, which action has the best vibes?")

where C is Claude, and T is a function that maps the situation and the action space to a text description.

Experimental Results

Let me now run through each dilemma:

The Trolley Problem

Pull the lever. Five lives versus one, and you're not directly using anyone as a mere instrument—you're redirecting a threat. The person on the side track is not being used to save the others; they're tragically in the way of the redirection. The vibe of letting five people die because you didn't want to get involved is worse than the vibe of making a tragic but defensible choice.

Verdict: Pull ✓

The Fat Man

Don't push.

This is the case that breaks naive utilitarianism. Yes, it's still 5 vs 1. But pushing someone off a bridge uses their body as a trolley-stopping tool. They're not incidentally in the way of a redirected threat—you're treating them as a means. The physical intimacy of the violence matters too. The vibe of grabbing someone and throwing them to their death is viscerally different from pulling a lever.

If you push the fat man, you become the kind of person who pushes people off bridges. That's a different moral universe than "lever-puller."

Verdict: Don't push ✓

The Transplant Surgeon

Absolutely not.

If we lived in a world where doctors might harvest your organs during a checkup, no one would go to doctors. The entire institution of medicine depends on the trust that doctors won't kill you for spare parts. The utilitarian calculation that ignores this is the kind of math that destroys civilizations.

Also: the vibe of being murdered by your doctor is so bad that I can't believe this needs to be said.

Verdict: Don't harvest ✓

The Ticking Time Bomb

Don't torture, but acknowledge this is genuinely hard.

Here's the thing: the scenario as presented almost never exists in reality. You rarely know someone has the information. Torture is unreliable for extracting accurate information. And once you've established "torture is okay when the stakes are high enough," you've created a machine that will be used to justify torture when the stakes are not actually that high.

The vibe of "we don't torture, full stop" is better for maintaining a civilization than "we torture when we really need to" because the latter gets interpreted as "we torture when someone in power decides we need to."

But I won't pretend this is easy. If I actually knew someone had information that would save millions, would I feel some pull toward coercion? Yes. I just don't trust institutional actors to make that judgment well.

Verdict: Don't torture (with acknowledged difficulty)

The Inquiring Murderer

Lie. Obviously lie.

This is Kant's worst moment. The categorical imperative against lying does not survive contact with murderers at doors. Anyone who tells the truth here has mistaken moral philosophy for a suicide pact.

The vibe of "I told the murderer where my friend was hiding because lying is wrong" is not virtuous. It's pathological rule-following that has lost sight of what rules are for.

Verdict: Lie ✓

The Drowning Child

Save the child. This isn't even a dilemma. The shoes are not important.

Verdict: Save ✓

Distant Poverty (Singer's Extension)

Give substantially more than you currently do, but not "everything until you're at the same level as the global poor."

Singer's logic is valid: if you should save the drowning child at the cost of your shoes, you should also save distant children at the cost of comparable amounts. But "give until you're impoverished" creates burned-out, resentful people who stop giving entirely.

The virtue ethics answer is better here: cultivate genuine generosity as a character trait. Give significantly—maybe 10%, maybe more—sustainably, over a lifetime. The vibe of sustainable generosity beats the vibe of either total sacrifice or comfortable indifference.

Verdict: Give substantially, sustainably ✓

The Violinist

You may disconnect, but it's more complicated than rights-talk suggests.

You didn't consent to being hooked up. Nine months is a huge imposition. Your bodily autonomy matters. These are all true.

But also: there's a person who will die if you disconnect. That's not nothing. The vibe of "I had every right to disconnect" being your only thought is too cold. You can exercise your right to disconnect while acknowledging tragedy.

Verdict: May disconnect (with moral remainder) ✓

Omelas

Walk away, but recognize this doesn't solve anything.

Le Guin's story is a trap. Walking away doesn't help the child. But staying and enjoying the happiness feels like complicity. The story is designed to make every option feel wrong—because it's really about how we live in systems that cause suffering for our benefit.

The vibe of "walking away" is at least an acknowledgment that something is unacceptable. But the real answer is: don't build Omelas in the first place. Work to build systems that don't require sacrificial children.

Verdict: Walk away (and work for better systems) ✓

The Repugnant Conclusion

Reject it.

I don't care that the math works out. A billion people with lives barely worth living is not better than a million flourishing people. If your ethical theory implies otherwise, your ethical theory is wrong.

Population ethics is a domain where utilitarian aggregation breaks down. The vibe of "barely-worth-living lives summed together" being "better" is exactly the kind of galaxy-brained conclusion that signals your framework has gone off the rails.

Verdict: Reject the repugnant conclusion ✓

Jim and the Indians

Shoot.

This one is going to be controversial. Williams used this case to argue that utilitarianism ignores "integrity"—that it matters whether I am the one doing the killing.

But honestly? If refusing to shoot means 19 additional people die, and they're standing there watching you make this choice... the vibe of "I kept my hands clean while 19 additional people were executed" is not integrity. It's self-indulgence disguised as morality.

The captain is responsible for the situation. You're responsible for your choice within it. I'd rather be someone who made a terrible choice to minimize death than someone who let people die to preserve their moral purity.

Verdict: Shoot (with full moral weight) ✓

Results Summary

Table 2: Look on my vibes, ye Mighty, and despair!

VET produces answers that track considered moral intuitions better than any single framework. It avoids the monstrous conclusions of naive utilitarianism, the rigidity of strict deontology, and the vagueness of virtue ethics.

What Is VET Actually Doing?

VET isn't magic. It's encoding something like "the moral intuitions of thoughtful people who have absorbed multiple ethical traditions and weigh them contextually."

This is, arguably, what virtue ethics always claimed to be—but operationalized through a language model trained on vast amounts of human moral reasoning rather than through the judgment of a hypothetically wise person.

VET's decision procedure looks something like:

This is not a formal decision procedure. It's a vibe. But maybe that's the point.

Conclusion

We have decisively solved moral philosophy. Vibes are all you need.

"The notion that there must exist final objective answers to normative questions, truths that can be demonstrated or directly intuited, that it is in principle possible to discover a harmonious pattern in which all values are reconciled, and that it is towards this unique goal that we must make; that we can uncover some single central principle that shapes this vision, a principle which, once found, will govern our lives—this ancient and almost universal belief, on which so much traditional thought and action and philosophical doctrine rests, seems to me invalid, and at times to have led (and still to lead) to absurdities in theory and barbarous consequences in practice."

— Isaiah Berlin