25 Answers

260

- Energy cost in summer and energy cost in winter will strongly diverge.

- One key usage of cheap summer energy will be hydrogen and methane production

- Some planes will be hydrogen-based

- Hydrogen will be used more frequently for home heating than gas.

- Permanent bases on the Moon and Mars

- Vacations on space stations will cost less than $20k (with a good chance of less than $10k)

- Transporting materials to orbit will cost less than $10/kg

- A majority of vehicle journeys will be in rented cars. A majority of cars on the road will be electric.

- Common services such as hairdressing will be provided in moving cars, so that, in at least some jurisdictions, you can book a haircut during your commute.

- Delivery costs will go down by an order of magnitude as robots replace humans

- Cheaper delivery costs mean that more people will have all their clothes washed by a dry-cleaner that picks up their clothing and then brings clean clothing back. A lot of the individual labor will be automated, which will in turn bring down prices as well.

- 65% of the world population will live in cities

- Insurance payouts for natural disasters will double compared to present levels

- A majority of households with a net worth of more than $100k will have air filters and air quality sensors.

- Routine use of next-generation sequencing for viral and bacterial infections, sequencing everything in the blood.

- Protein folding is solved to the point where it's easy to design new proteins. This will be used both in medicine and in other fields that need specialized materials.

- Phage therapy will be standard treatment for at least one infection that's currently not well treated with antibiotics (chronic Lyme/Periodontitis/MRSA).

- There will be multivariate trials testing combinations of existing drugs as anti-aging drugs.

- >10% of meat sold in the West will be lab grown.

- The money that flows through prediction markets will be at least 10x what it is today.

- All police in the Western world will wear body cams.

- Cancer vaccines will be part of regular cancer treatment.

- Hydra-style darknet markets that combine product quality assurance through human testing with a delivery network will be operational not only in Russia but in all Western countries as well.

- When dark-market organizations provide independent quality control while the FDA just trusts manufacturers' claims, there will be pressure on the FDA to match the quality processes of Hydra-style markets, resulting in new legislation.

- Multiple countries will pass laws to regulate satellite surveillance within their territory, as it will be economical to have real-time satellite views of everything on the globe. While it's unclear today how it will be regulated, it will be a big deal for some people.

- Chinese politicians will start treating the environment as important. As a result, there will be a global treaty to remove plastic trash from the oceans and reduce mercury concentrations in the ocean. Fishing will be banned in parts of the ocean.

- Kinmen will be under Chinese control

- Neuralink or a comparable company will produce a product that can be bought without a medical diagnosis for an illness.

- Copper and iron will have a lower inflation-adjusted price than today

- As robots get used more, significantly less food will be grown as monocrops the way it's grown today, and agriculture that combines multiple crops, which is very labor-intensive today, will become cheaper through automation.

Nice list! I'm skeptical of 2, 3, 4, 7, 9, 10, 21, 22, 25, 28. I'd be interested to hear more about them and also about 29 and the Hydra markets stuff. Also, clearly protein folding will be solved to a significant extent, but how solved? Enough for molecular nanotech? Can you say more about what you have in mind?

More thoughts on protein folding:

If you can design proteins for specific reactions, problems like mining uranium from seawater suddenly become a lot easier. You can design a membrane protein that's able to trap uranium atoms and then bring them into a cell.

You might even have different bacteria for mining different minerals from the water. You leave the bacteria in the water for some time and they take in a bunch of minerals. Then you let the water out through nanopores that don't let the bacteria through. Then you use a centrifuge to sort the bacteria by the materials they contain, and put each group in its own tank. Then you heat the bacteria up, which destroys the cell walls and liquefies the materials you are after. You centrifuge again and have your materials.

I expect engineered bacteria to switch from the 3-letter genetic code to a 5-letter one, in which a single-letter mutation doesn't change which amino acid is used, so that bacteria built for tasks like the above don't simply mutate away.

There will be a bunch of things that can be done that are hard to think about now because it's very different technology than we are used to.

If you have concrete use cases for molecular nanotech, I could tell you whether I think they are doable with it.

221

I think a 10x decrease in energy prices is too much. My reasons are:

- There are some constraints on solar/wind which are currently not binding, but will be by the time we have converted most energy production to green energy. The main ones are metals (see e.g. https://www.coppolacomment.com/2021/03/from-carbon-to-metals-renewable-energy.html) and land use (especially in India, China, Europe, Japan and a few other Asian countries, where population density is high; but that's most of the world population anyway). This of course does not consider the possibility of major technological breakthroughs in organic solar and energy conversion/transport, which may happen but are not guaranteed, so I think they are out of the scope of your exercise.

- As the cost of energy falls, we will consume more, especially in poor countries; you also mentioned increased consumption by supercomputers and AI. This will partially offset the fall in production costs, so my (uneducated) guess would be that a 2x-3x decrease in prices is a more reasonable expectation. An analysis by an expert could convince me otherwise.

Like rayom, I also noticed you did not mention anything about biology and medicine. I think there will be some advances on that side. A malaria vaccine seems probable by 2040 (maybe ~80%?) and would be a big thing for large parts of the world. Some improvement in cancer therapy also seems to have a relatively high probability (nothing even remotely like "cure all cancer", to be clear). We might get some improvement for Alzheimer's, dementia or other age-related illnesses, but my "business as usual" expectation is that only moderate advancements will be widely deployed by 2040. Nevertheless, they might be sufficient to significantly improve the quality of life of elderly people in rich countries.

Expanding on point 2: if we want to talk about a price drop, then we need to think about the relative elasticity of supply vs demand, i.e. how sensitive demand is to price, and how sensitive supply is to price. Just thinking about the supply side is not enough: it could be that price drops a lot, but then demand just shoots up until some new supply constraint becomes binding and price goes back up.

(Also, I would be surprised if supercomputers and AI are actually the energy consumers which matter most for pricing. Air conditioning in South America, Africa, Ind...
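To make the supply-vs-demand elasticity point above concrete, here is a toy sketch in Python. It assumes constant-elasticity curves, which the comment does not specify: supply `Q_s = S * (P / c) ** e_s`, where `c` scales production cost, and demand `Q_d = D * P ** (-e_d)`. The function names and elasticity values are hypothetical and only illustrate the direction of the effect; they are not a forecast.

```python
"""Toy constant-elasticity model of how a production-cost drop passes through to price.

Assumptions (hypothetical, not from the original comment):
  supply:  Q_s(P) = S * (P / c) ** e_s   (c scales production cost; c = 0.1 means 10x cheaper)
  demand:  Q_d(P) = D * P ** (-e_d)
"""


def price_ratio(cost_factor: float, e_s: float, e_d: float) -> float:
    """New equilibrium price divided by old, when production cost is multiplied by cost_factor.

    Solving S * (P / c) ** e_s == D * P ** (-e_d) gives P proportional to c ** (e_s / (e_s + e_d)).
    """
    if cost_factor <= 0 or e_s <= 0 or e_d < 0:
        raise ValueError("need cost_factor > 0, e_s > 0, e_d >= 0")
    return cost_factor ** (e_s / (e_s + e_d))


def quantity_ratio(cost_factor: float, e_s: float, e_d: float) -> float:
    """New equilibrium quantity divided by old (read off the demand curve: Q ~ P ** -e_d)."""
    return price_ratio(cost_factor, e_s, e_d) ** (-e_d)


if __name__ == "__main__":
    c = 0.1  # production becomes 10x cheaper
    for e_s, e_d in [(1.0, 0.0), (1.0, 0.5), (1.0, 1.0), (0.5, 1.0)]:
        p = price_ratio(c, e_s, e_d)
        q = quantity_ratio(c, e_s, e_d)
        print(f"e_s={e_s}, e_d={e_d}: price falls {1 / p:.1f}x, quantity rises {q:.1f}x")
```

Under these assumptions, a 10x cost drop is fully passed through to price only in the limits of perfectly inelastic demand (`e_d = 0`) or perfectly elastic supply. With unit elasticities on both sides, price falls only about 3.2x while quantity rises about 3.2x, which is the "demand shoots up" effect. A new supply constraint becoming binding corresponds to a lower `e_s`, which shrinks the price drop further.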

I understand that malaria resists attempts at vaccination, but regarding your 80% prediction by 2040, did you see the news that a malaria vaccine candidate reached 77% effectiveness in phase II trials just last month? Quote: "It is the first vaccine that meets the World Health Organization's goal of a malaria vaccine with at least 75% efficacy."

People have argued for a long time that metal prices would be a problem, and those predictions have usually failed to come true.

Thanks! I edited my thing on energy to clarify, I'm mostly interested in the price of energy for powering large neural nets, and secondarily interested in the price of energy in general in the USA, and only somewhat interested in the price of energy worldwide.

I am not convinced yet that the increased demand from AI will result in increased prices. In fact I think the opposite might happen. Solar panels are basically indefinitely scalable; there are large tracts of empty sunny land in which you can just keep adding more panels basically indefinitely. A...

150

When I look back twenty years, it seems amazing how little has changed or improved since then. Basically just the same, but some things are less slow.

The arrival of the internet in the nineties was the only real change. The arrival of AI will be the next change, whenever that happens.

And in twenty years the looming maw of death will be closer for most of us, like a bowling ball falling into a black hole.

I share this sentiment. Shockingly little has happened in the last 20 years, good or bad, in the grand scheme of things. Our age might become a blank spot in the memory of future people looking back at history; the time where nothing much happened.

Even this recent pandemic can't shake up the blandness of our age. Which is a good thing, of course, but still.

140

- Anti-aging will be in the pipeline, if not necessarily on the market yet. The main root causes of most of the core age-related diseases will be basically understood, and interventions which basically work will have been studied in the lab.

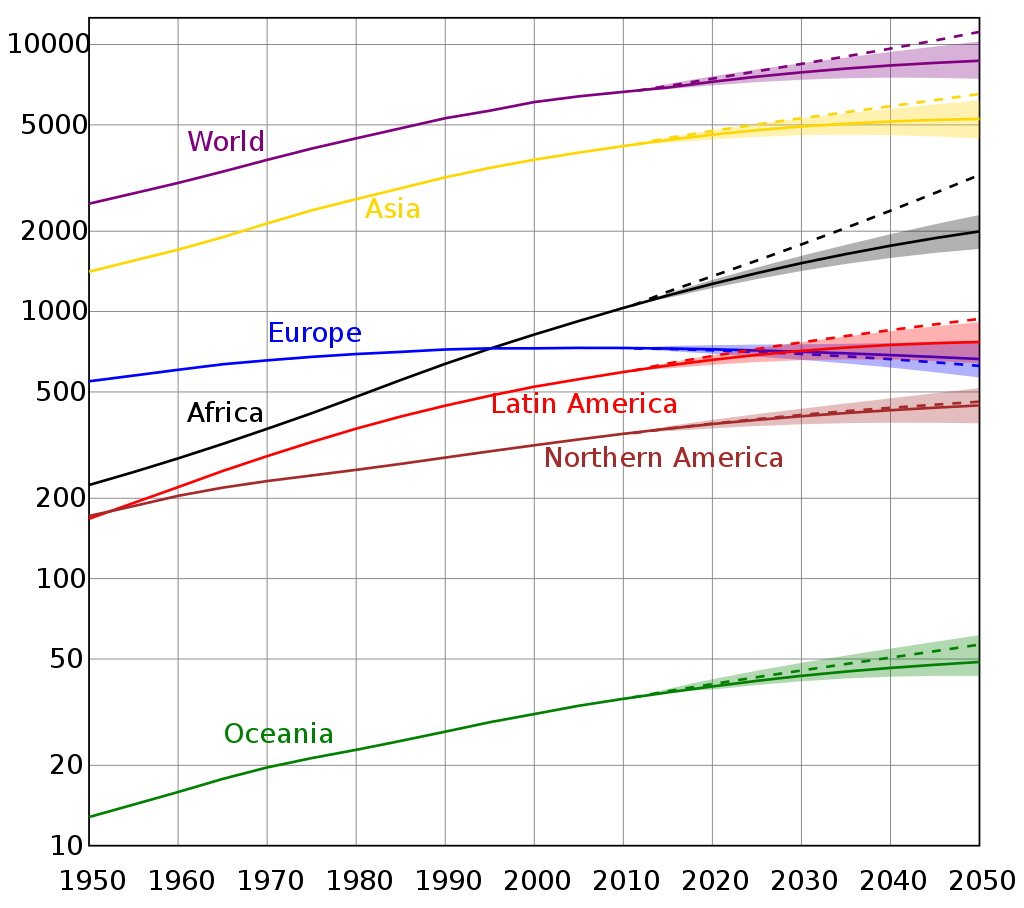

- Fertility will be below replacement rate globally, and increasingly far below replacement in first-world countries (most of which are already below today). Life expectancy will still be increasing, so the population will still be growing over all (even assuming anti-aging is slow), but slowly and decelerating.

- Conditional on anti-aging not already seeing large-scale adoption, the population will have a much higher share of elderly dependents and a lower share of working-age people to support them, pretty much everywhere. This problem already dominates the budgets of first-world governments today: it means large-and-increasing shares of GDP going to retirement/social security and healthcare for old folks (who already consume the large majority of healthcare).

- Conditional on anti-aging not already seeing large-scale adoption, taxes will probably go up in most first-world countries. There just isn't enough spending to cut anywhere else to keep up with growing social security/healthcare obligations, and dramatically reducing those obligations won't be politically viable with old people only becoming more politically dominant in elections over time. (In theory, dramatically opening up immigration could provide another path, but I wouldn't call that the most likely outcome.)

- China's per-capita GDP will catch up to current first-world standards, at which point they will not be able to keep up the growth rate of recent decades. That will probably result in some kind of political instability, since the CCP's popularity is heavily dependent on growth, and also because a richer population is a more powerful population which is just generally harder to control without its assent.

110

I expect people to find 1 wild. The rest are pretty straightforward extrapolations of trends, and they're the sort of trends which have historically been quite predictable.

100

Definitely, and the Nate Silver piece in particular is 8 years out of date. But these are long-term trends, and the predictions don't require much precision - COVID might shift some demographic numbers by 10% for a decade, but that's not enough to substantially change the predictions for 2040.

100

In terms of electricity, transmission and distribution make up 13% and 31% of costs respectively. Even if solar panels were free, I am not confident that reliable electricity would become 10x cheaper: unless each house has quite a few days of cheap storage, it would still need distribution. Industrial electricity might get close to that cheap, but I think that would depend on location and space availability; otherwise at least some of the transmission and distribution costs would still exist.
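A back-of-the-envelope version of this argument, as a Python sketch: if the transmission (13%) and distribution (31%) shares above stay fixed while everything else in the bill falls to zero, delivered electricity can get at most about 2.3x cheaper. Treating the remaining 56% as generation is an assumption; any other fixed costs hiding in that remainder would make the bound tighter. The function name is hypothetical.

```python
def max_price_reduction(transmission_share: float, distribution_share: float) -> float:
    """Upper bound on how many times cheaper delivered electricity could get
    if all non-grid costs (assumed here to be generation) fell to zero
    while transmission and distribution costs stayed the same."""
    grid_share = transmission_share + distribution_share
    if not 0.0 < grid_share <= 1.0:
        raise ValueError("grid cost shares must sum to a value in (0, 1]")
    # The new price is grid_share of the old price, so the reduction factor is its inverse.
    return 1.0 / grid_share


if __name__ == "__main__":
    factor = max_price_reduction(0.13, 0.31)
    print(f"at most {factor:.2f}x cheaper")  # prints "at most 2.27x cheaper"
```

The point of the calculation is that fixed grid costs, not generation, set the ceiling: even free panels cannot deliver a 10x drop while the 44% grid share remains.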

Thanks, this is a good point. I've edited my post to be less confident in non-AI energy uses. Also see my reply to Jacopo.

90

Sure. Here's a graph from wikipedia with global fertility rate projections, with global rate dropping below replacement around 2040. (Note that replacement is slightly above 2 because people sometimes die before reproducing - wikipedia gives 2.1 as a typical number for replacement rate.)
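The 2.1 figure can be reproduced with the net-reproduction identity: replacement requires each woman to have, on average, one daughter who survives to childbearing age. Besides mortality, slightly more boys than girls are born, which also pushes the number above 2. A minimal Python sketch; the inputs (about 1.05 boys per girl at birth, 98% female survival to childbearing age) are illustrative assumptions, not figures from this comment or from wikipedia.

```python
def replacement_tfr(sex_ratio_at_birth: float, female_survival: float) -> float:
    """Total fertility rate at which the net reproduction rate equals 1.

    sex_ratio_at_birth: boys born per girl born (illustrative input).
    female_survival: probability a newborn girl survives to (mean) childbearing age (illustrative input).
    """
    if sex_ratio_at_birth <= 0 or not 0.0 < female_survival <= 1.0:
        raise ValueError("need sex_ratio_at_birth > 0 and 0 < female_survival <= 1")
    daughters_per_birth = 1.0 / (1.0 + sex_ratio_at_birth)
    # Net reproduction rate = TFR * daughters_per_birth * female_survival; set it to 1 and solve for TFR.
    return 1.0 / (daughters_per_birth * female_survival)


if __name__ == "__main__":
    print(round(replacement_tfr(1.0, 1.0), 2))    # 2.0: equal sexes at birth, nobody dies young
    print(round(replacement_tfr(1.05, 0.98), 2))  # 2.09
```

Lower survival raises the replacement rate, which is why it sits noticeably above 2.1 in countries with high child mortality.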

Here's another one from wikipedia with total population, most likely peaking after 2050.

On the budget, here's an old chart from Nate Silver for US government spending specifically:

The post in which that chart appeared has lots more useful info.

For Chinese GDP, there are some decent answers on this Quora question about how soon Chinese GDP per capita will catch up to the US. (Though note that I do not think Chinese GDP per capita will catch up to the US by 2040 - just to other first world countries, most of which have much lower GDP per capita than the US. For instance EU was around $36k nominal in 2019, vs $65k nominal for the US in 2019.) You can also eyeball this chart of historical Chinese GDP growth:

90

You cover most of the interesting possibilities on the military technology front, but one thing you don't mention, which might matter especially given the recent near-breakdowns of some nuclear weapons treaties (e.g. New START), is the further proliferation of nuclear weapons, including fourth-generation nuclear weapons such as nuclear shaped-charge warheads, pure fusion and sub-kiloton devices, or tactical nuclear weapons, and more countries fitting cruise missiles or drones with nuclear capability, which might be destabilising. If laser technology is sufficiently developed, we may also see other forms of directed-energy weapons become more common, such as electron beam weapons or electrolasers.

70

The incentive here is scientific discovery.

You didn't answer the question about who you think would engage in that. It's interesting that you ignore the question.

Oh I guess you bring up Russians because they are the bad guys and most other countries are the good guys?

No, because they have a different culture with regard to science. You have people like Dmitry Itskov who are willing to pursue projects that are neither for profit (filing patents) nor for status in the academic world.

It's interesting that you see Americans being greedy as synonymous with them being the good guys. It suggests to me that you haven't thought hard about who does what for what reasons.

If there was any incentive to keep nukes secret, they would've been kept secret, but the incentive to publicize nukes outweighs the incentive to keep them secret.

I have no idea what you mean with that argument. Who's "they"? What time are you speaking about?

70

Anything related to biotech is not included here - care to explain the reason why?

I haven't thought much about biotech and don't know much about it. This is why I made this a question rather than a post, I'm super interested to hear more things to add to the list!

60

It's only 19 years away; do you mean to say that there are already designer babies being born?

The 3 babies from He Jiankui will be adults by then, definitely; one might quibble about how 'designer' they are, but most people count selection as 'designer' and GenPred claims to have at least one baby so far selected on their medical PGSes (unclear if they did any EDU/IQ PGSes in any way, but as I've always pointed out, because of the good genetic correlations of those with many diseases, any selection on complex diseases will naturally also boost those).

50

Government agencies benefit from all forms of technologies.

This assumes we are living in a world where the US government has the ability to fund far-out biomedical research that's not beneficial to big pharma or another group that can lobby for it.

In reality the US government isn't even able to stock enough masks for a pandemic. I'd love to live in a world where the US government would be able to fund science purely for the sake of scientific discovery independent from any interest groups but there's no reason to believe that's the world in which we are living.

Nukes are deterrents. That's the only reason to invest in them.

Again you fail to point out what time and which actors you are talking about, which suggests you don't have a good model.

If we look at the US government, the US government pretended for a long time that only the president can order nuclear strikes while giving that ability to a bunch of military commanders and setting the nuclear safety codes to 00000000.

If the only reason you invest in nukes is deterrence, it makes no sense to have more people able to launch nukes than the other side knows about. In that world, the US government would have no reason to set the safety codes to 00000000 when ordered by Congress to have safety codes.

You might also watch the Yes, Prime Minister episode about nuclear deterrence for more reasons (while it's exaggerated comedy, they did a lot of background research and talked to people inside the system about how the UK political system really worked at the time).

Most comments on here are just pure conjectures by people with mostly ML backgrounds. I can't say I'm educated enough to make these wild guesses about what it's like in 2040.

I have enough expertise in making wild guesses about the future that I've been paid for it. In the past, I was invited as an expert, by people funded by my government, to participate in a scenario-planning exercise that involved modeling scenarios about medical progress.

johnswentworth, whom you replied to, earns his money studying the history of progress and how it works, so he has a fairly detailed model of how scientific progress works; he isn't just someone whose only relevant background is ML.

LessWrong isn't a random Reddit forum. It's not a place where you can safely assume that the people you are talking to lack relevant experience in what they are talking about.

40

Some of these entries are no longer valid, as the most intense conflict of the 21st century—the war in Ukraine—has driven rapid advancements in military technology. Russian and Ukrainian forces are increasingly employing swarm drone operations and robotic (or “Buryat”) units, while China and the United States are developing more sophisticated and integrated unmanned weapon systems.

40

The key question here is incentives. What incentive is there to produce human clones (likely with more genetic defects than the original) if you can't publish papers afterwards or sell a product?

I don't see any player that had the necessary ability 18 years ago and the incentive to make it happen. Which players do you consider to have both ability and incentive?

Russian billionaires come to mind, but if one of them cloned himself and treated the clone as his child, that would seem hard to keep secret.

40

Bold claim! Perhaps you should make a post (or shortform, or even just separate answer to this question) where you lay out your reasoning & evidence? I'd be interested in that.

40

The constant improvements in nuclear tech will lead to multiple small terrorist organizations possessing portable nuclear bombs. We'll likely see at least a few major cities suffering drastic losses from terrorist threats.

Gene therapy will be strongly encouraged in some developed nations, nearly as strongly as vaccines are encouraged.

Pollution of the oceans will take over as the most popular pressing environmental issue.

I'm especially interested in the nuclear bomb and gene therapy predictions; care to elaborate & explain your reasoning / evidence?

40

Very cool prompt and list. Does anybody have predictions on the level of international conflict about AI topics and the level of "freaking out about AI" in 2040, given the AI improvements that Daniel is sketching out?

30

There will still be wars in Europe. I think conflicts will move west of Ukraine, if Ukraine still exists by that point.

It is certainly possible but what kind of scenario are you thinking about?

For moving west of Ukraine the conflicts will have to involve EU or NATO countries, almost certainly both. So that would mean either an open Russia-NATO war or the total breakdown of both NATO and EU. Both scenarios would have huge consequences for the world as a whole, nearly as much as a war between China and US and allies.

30

...academia will suffer even more from the influx of papers which were not written by their official authors. The job of the scientific editor will become that much harder.

20

Epistemic effort: I thought about this for 20 minutes and dumped my ideas, before reading others' answers.

- The latest language models are assisting with or doing a number of tasks across society in rich countries, e.g.:

  - Helping lawyers search and summarise cases, suggest inferences, etc., but human lawyers still make the calls at the end of the day

  - Similar for policymaking, consultancy, business strategising, etc.

  - Lots of non-truth-seeking journalism. All good investigative journalism is still done by humans.

  - Telemarketing and some customer service jobs

- The latest deep RL models are assisting with or doing a number of tasks across society in rich countries, e.g.:

  - Lots of manufacturing

  - Almost all warehouse management

  - Most content filtering on social media

  - Financing decisions made by banks

- Other predictions:

  - it's much easier to communicate with anyone, anywhere, at higher bandwidth (probably thanks to really good VR and internet)

  - the way we consume information has changed a lot (probably also related to VR, and content selection algorithms getting really good)

  - the way we shop has changed a lot (probably again due to content selection algorithms; I'm imagining there being very little effort between having a material desire and spending money to have it fulfilled)

  - education hasn't really changed

  - international travel hasn't really changed

  - discrimination against groups that are marginalised in 2021 has reduced somewhat

  - nuclear energy is even more widespread and much safer

  - getting some psychotherapy or similar is really common (>80% of people)

> discrimination against groups that are marginalised in 2021 has reduced somewhat

Does that prediction include poor white people, BDSM people, and generally everybody who has to strongly hide part of their identity when living in cities, or only those groups that are compatible with intersectional thinking?

10

There's been 20 years of "Prompt programming" now, and so loads of apps have been built using it and lots of kinks have been worked out. Any thoughts on what sorts of apps would be up and running by 2040 using the latest models?

Prompt programming isn't as good as it was cracked up to be. In the past, as old timers never shut up about:

"Programmers* just wrote programs and they *** worked! Or at least gave errors that made sense! And there was some consistency! When programs crashed for 'no reason' there used to be a reason! None of this 'neural network gets cancer from the internet's sheer stupidity and dies.' crap!"

In the present, programming is a more... mixed role. Debugging is a nightmare, and brute force to find good (inputs to get good) outputs remains a distressingly large part of the puzzle, despite all the fancy techniques that dress it up as something - anything - else in a world that no longer makes sense.

The people working on maintenance deal with the edge cases and see things they never wanted to see, as the price paid for wonderful tech. "The fat tails of the distribution", as Taleb put it, lead to more disillusionment, burnout, etc. in the associated professions when people try to do things that are too ambitious. This isn't some grand narrative about Atlantis and hubris - just more of the same, with technology that can do wonderful things but is consistently over budget and over-hyped; the dream of AGI remains hacked together, created half by 'machines' and half by people.

Imagine an ice cream machine that seems (far too often) to stop working just when you need it most, a delicate piece of machinery that is always a pain... to deal with. This is the future of AI. (One second it works great, the next, a shitshow - a move nicknamed 'the treacherous swan dive'. Commercial applications secretly, under the hood, involve way too much caching (saving good outputs), and a lot of people working to create stuff that extrapolates from past good outputs and reasons by input similarity to cover the holes that inevitably pop up. In other words, AI will secretly be 20% humans trying to answer the question 'what would the AI do if it worked right on this input instead of spewing nonsense?', when there's enough stuff to cover the holes. The other 80% of the time, it works amazingly well and performs feats once considered miraculous, though amazement quickly fades and consumers soon return to having ridiculous expectations of this rather brittle technology.)

*original sentence with typos: Where once programs just wrote programs and they *** worked!

Some examples of products and services:

More people try to do things - like write books, due to encouragement from AIs. Editors are astounded by the dramatic variation in quality over the course of manuscripts.

Fanfiction enters a new age. When AIs alone write stories, new genres that play to their strengths emerge, but they are... difficult to understand. For instance, descendants of 'Egg Salad', which was where a story is rewritten with one of the characters... as an egg.

Eventually AIs get good, but conflicts arise, like: Is the 'unofficial ending' written by GPZ really how the author would have wrapped things up? Or is GPL's ending, though less exciting, more true to the themes of the story? Debates emerge about whether an author is or isn't human (and to what extent), and whether it's really art if it's not made by a person. Could a human being really have solved the whodunit, given that it wasn't written by a human being? These arguments over the souls of fiction are taken seriously by some. Fans of Sherlock Holmes seem to care about the legacy and the future of mysteries. In other genres, it varies.

> Starlink internet is fast, reliable, cheap, and covers the entire globe.

Starlink isn't super cheap. But the quality, given the price, is a great deal, and eventually it becomes very popular as people get tired of 'the internet being slow' or not working even for short periods of time. In order to cut costs, however, businesses** that 'don't really care about their customers' don't always invest in it, and remain a source of complaints.

**also schools, K-12.

> 3D printing is much better and cheaper now. Most cities have at least one "Additive Factory" that can churn out high-quality metal or plastic products in a few hours and deliver them to your door, some assembly required. (They fill up downtime by working on bigger orders to ship to various factories that use 3D-printed components, which is most factories at this point since there are some components that are best made that way.)

A battle begins for the label 'artisanal'.

"But it looks like it was made by a real person!"

"It looks too good. The errors there, and here, and there - it's too authentic."

Unspeakable things happen in fashion, which becomes way more varied. In some places/groups, waste and conspicuous consumption (ridiculous number of clothes, changing all the time to keep up with fashion changing with speed unimaginable today) grow so extreme that it accidentally creates a competitor out of 'minimalism'*** (a small set of amazing clothes, possibly designed to be tweaked (regularly) a little bit to fit in, but not too much, and not too obviously).

***As a result of very popular/fashionable people being left behind, and fighting the trends, so they don't have to work at keeping up literally every second of every day.

Small props start to creep in.

And ridiculous hats make an astounding comeback!

10

An all out war between China and the USA over Taiwan has crippled the whole world.

Good news is AI alignment is not an issue anymore.

Are you saying that's the only scenario that would prevent the singularity, or are you saying that it's generally a probable scenario?

Bold claim! Perhaps you should make a post (or shortform, or even just separate answer to this question) where you lay out your reasoning & evidence? I'd be interested in that. If you think it's infohazardous, maybe just a gdoc?

I'm looking for a list such that for each entry on the list we can say "Yep, probably that'll happen by 2040, even conditional on no super-powerful AGI / intelligence explosion / etc." Contrarian opinions are welcome but I'm especially interested in stuff that would be fairly uncontroversial to experts and/or follows from straightforward trend extrapolation. I'm trying to get a sense of what a "business as usual, you'd be a fool not to plan for this" future looks like. ("Plan for" does not mean "count on.")

Here is my tentative list. Please object in the comments if you think anything here probably won't happen by 2040, I'd love to discuss and improve my understanding.

My list is focused on technology because that's what I happened to think about a bunch, but I'd be very interested to hear other predictions (e.g. geopolitical and cultural) as well.