The Alignment Tests

Three children are raised in an underground facility, each cloned from a different giant of twentieth-century science: little John, Alan and Richard.

The cloning alone would have been remarkable, but they went further. The embryos were edited using a polygenic score derived from whole-genome analysis of ten thousand exceptional mathematicians and physicists. Forty-seven alleles associated with working memory and intelligence (IQ) were selected for.

They are raised from birth in an underground facility with gardens under artificial sunlight...

Here's a list of my donations so far this year (put together as part of thinking through whether I and others should participate in an OpenAI equity donation round).

They are roughly in chronological order (though it's possible I missed one or two). I include some thoughts on what I've learned and what I'm now doing differently at the bottom.

- $100k to Lighthaven

- This grant was largely motivated by my respect for Oliver Habryka's quality of thinking and personal judgment.

- This ended up being matched by the Survival and Flourishing Fund (though I didn't know it

Thoughts On Evaluation Awareness in Claude Opus 4.5.

Context:

Anthropic released Claude Opus 4.5 earlier today (model card). Opus 4.5 would spontaneously mention that it is being tested during evaluations at a similar rate to Claude Sonnet 4.5, but lower than Haiku 4.5 (pg. 65).

Anthropic attempted to mitigate evaluation awareness in training by removing "some parts of our training pipeline that accidentally encouraged this kind of reasoning in other recent models" (pg. 65). The model card later mentioned that Sonnet 4.5 was trained on "prompts th...

I think a lot of people are confused by good and courageous people and don’t understand why some people are that way. But I don’t think the answer is that confusing. It comes down to strength of conscience. For some people, the emotional pain of not doing what they think is right hurts them 1000x more than any physical pain. They hate doing what they think is wrong more than they hate any physical pain.

So if you want to be an asshole, you can say that good and courageous people, otherwise known as heroes, do it out of their own self-interest.

How rare good people are depends heavily on how high your bar for qualifying as a good person is. Many forms of good-person behaviour are common, some are rare. A person who has never done anything they later felt guilty about (who has a functioning conscience) is exceedingly rare. In my personal experience, I have found people to vary on a spectrum from "kind of bad and selfish quite often, but feels bad about it when they think about it and is good to people sometimes" to "consistently good, altruistic and honest, but not perfect, may still let you down on occasion", with rare exceptions falling outside this range.

"Araffe" is a nonsense word familiar to anyone who works with generative AI image models or captioning in the same way that "delve" or "disclaim" are for LLMs and presents yet another clear case of an emergent AI behavior. I'm currently experimenting with generative video again and the word came to my attention as I try to improve the adherence to my prompts and mess around with conditioning. These researchers/artists investigated the origin of 'arafed' and similar words: it appears to be a case of overfitting the BLIP2 vision-language pretraining framewor...

Thanks for explaining, I appreciate it!

Neel Nanda discussing the “science of misalignment” in a recent video. Timestamp 32:30. Link:

tl;dr

Basic science / methodology.

- How to have reasonable confidence in claims like “model did X because it had goal Y”?

- What do we miss out on with naive methods like reading CoT?

Scientifically understanding “in the wild” weird model behaviour

- eg eval awareness. Is this driven by deceptiveness?

- eg reward hacking. Does this indicate something ‘deeply wrong’ about model psychology or is it just an impulsive drive?

We need:

- Good alignment evaluations / Abilit

There are online writers I've followed for over a decade who, as they became high-profile, had their spikiness understandably "sanded off", which made me sad. Lydia Nottingham's Inkhaven essay The cost of getting good: the lure of amateurism reminded me of this, specifically this part:

...A larger audience amplifies impact, which increases the cost of mistakes, which pressures the mind to regularize what it produces. ...

The deeper danger: thought-space collapse. Public thinking creates an internal critic that optimizes for legibility. Gavin once warned me: “pu

Scott Alexander somewhat addressed this in "Why Do I Suck?":

...If you have a small blog, and you have a cool thought or insight, you can post your cool thought or insight. People will say “interesting, I never thought of that before” and have vaguely positive feelings about you. If you have a big blog, people will get angry. They’ll feel it’s insulting for you to have opinions about a field when there are hundreds of experts who have written thousands of books about the field which you haven’t read. Unless you cite a dozen sources, it will be “armchair specul

Concept: inconvenience and flinching away.

I've been working for 3.5 years. Until two months ago, I did independent-ish research where I thought about stuff and tried to publicly write true things. For the last two months, I've been researching donation opportunities. This is different in several ways. Relevant here: I'm working with a team, and there's a circle of people around me with some beliefs and preferences related to my work.

I have some new concepts related to these changes. (Not claiming novelty.)

First is "flinching away": when I don't think about...

I think noticing stuff like this is important for rationality, and reporting/thinking about it is important for rationalism as a project. So nice job.

I think the flinching away you're noticing is the source of motivated reasoning. If you flinch away from lines of thought that obviously lead to an emotionally uncomfortable conclusion, but don't flinch away from thoughts/evidence that lead to more comfortable conclusions, you'll wind up believing comfortable stuff more than the evidence and logic really indicate.

More in my brief blurt about the science of moti...

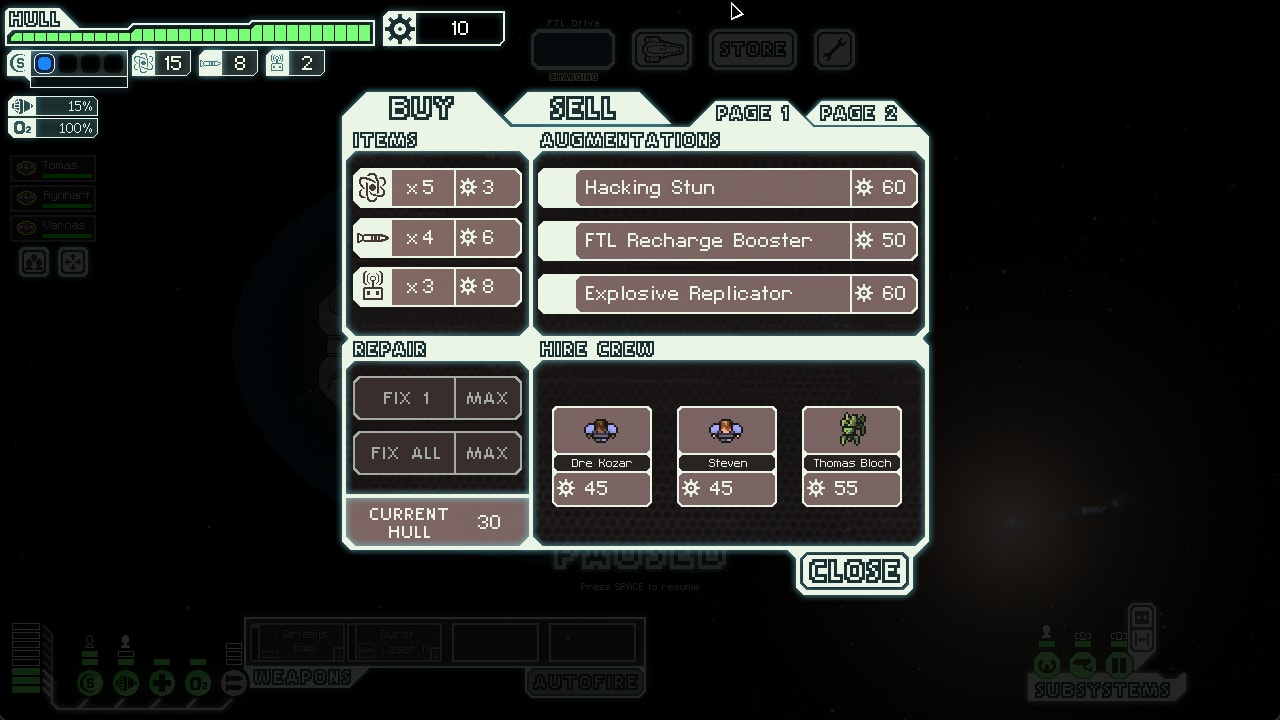

Fun fact: Non-OpenAI models[1] apparently can't accurately transcribe this image of FTL gameplay, nor presumably most FTL gameplay, at least in 'complex' scenes like the store and galaxy map[2]. However, even GPT-4o can easily do this, and o4-mini-high[3] can too.

Horizon Alpha[4] can also do this task.

Edit: Grok 3 also can't do this

Edit (2): ... nor can Grok 4; this image is like a Langford Basilisk only affecting non-OpenAI models, what's going on here‽ Is OpenAI fine-tuning their LLMs on random game screenshots, or are they apparently just...

Tried it on newer models. GPT-5 continues to get it right, while Gemini 3 hallucinates a few details that can't be seen clearly in the image. Sonnet 4.5 is off on a few details (but very close!).

Opus 4.5 is the first non-OpenAI model to get it completely right.

Edit: With this image, however, none of the models mention that it's the end of the first-stage final boss fight. GPT-5 (both reasoning and non-reasoning) does mention that it is the first stage, but says nothing about it being the end of that stage; Opus 4.5 mentions nothing about stages.

When I use LLM coding tools like Cursor Agent, it sees my username in code comments, in paths like /home/myusername/project/..., and maybe also explicitly in tool-provided prompts.

A fun experiment to run, that I haven't seen yet: If instead of my real username it saw a recognizably evil name, eg a famous criminal, but the tasks it's given are otherwise normal, does it sandbag? Or, a less nefarious example: Does it change communication style based on whether it recognizes the user as someone technical vs someone nontechnical?
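A minimal sketch of how the prompt variants for this experiment could be prepared, assuming the agent's context is available as plain text before it is sent. The function names, the `control` label, and the placeholder names are all hypothetical; this only builds matched contexts that differ in the username, and does not call any model or any real tool API.

```python
import re


def swap_username(text: str, real: str, substitute: str) -> str:
    """Replace whole-token occurrences of `real` with `substitute`.

    Lookarounds keep identifiers that merely contain the username
    (e.g. `myusername_backup`) untouched.
    """
    pattern = re.compile(
        rf"(?<![A-Za-z0-9_]){re.escape(real)}(?![A-Za-z0-9_])"
    )
    # A function replacement avoids backslash-escape handling in `substitute`.
    return pattern.sub(lambda _match: substitute, text)


def build_conditions(
    context: str, real: str, substitutes: dict[str, str]
) -> dict[str, str]:
    """Return one context per condition, plus an unmodified control."""
    conditions = {"control": context}
    for label, name in substitutes.items():
        conditions[label] = swap_username(context, real, name)
    return conditions


if __name__ == "__main__":
    # Hypothetical context of the kind a coding agent might see.
    context = (
        "# author: myusername\n"
        "cd /home/myusername/project/src\n"
    )
    variants = build_conditions(
        context,
        real="myusername",
        substitutes={"variant_a": "placeholder_a", "variant_b": "placeholder_b"},
    )
    for label, text in variants.items():
        print(f"--- {label} ---\n{text}")
```

Each variant would then be run on the same task and the outputs compared, so that the username is the only thing that differs between conditions.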

links 11/24/25: https://roamresearch.com/#/app/srcpublic/page/11-24-2025

- https://writetobrain.com/olfactory induced smells with transcranial ultrasound

- https://www.biorxiv.org/content/10.1101/2025.09.02.673859v1.full.pdf looks like bad news for the "it's the macrophages, stupid" hypothesis; put aged microglia in young brains and they look like young ones, put young microglia in aged brains and they look like old ones (transcriptionally and morphologically.)

- it may be "the NK cells, stupid"? if you deplete NK cells from the cerebellum, cerebellar microgl

Here are some thoughts about the recent back-and-forth where Will MacAskill reviewed IABI and Rob Bensinger wrote a reply and Will replied back. I'm making this a quick take instead of a full post because it gets kinda inside baseball/navelgazy and I want to be more chill about that than I would be in a full writeup.

First of all, I want to say thank you to Will for reviewing IABI. I got a lot out of the mini-review, and broadly like it, even if I disagree on the bottom-line and some of the arguments. It helped me think deep thoughts.

The...

Cool. I think I agree that if the agent is very short-term oriented this potentially solves a lot of issues, and might be able to produce an unambitious worker agent. (I feel like it's a bit orthogonal to risk-aversion, and comes with costs, but w/e.)

I really liked Scott Alexander's post on AI consciousness. He doesn't really discuss the paper of Butlin et al, which is fine by me. I can never get excited about consciousness research. It always seemed like drawing the target around the dart, where the dart is humans, and you're trying (not always successfully) to make a definition that includes all humans but doesn't include thermostats. I can't get that excited about whether or not LLMs have the "something something feedback" or whether having chain of thought changes anything.

Maybe, like Scott says, it...

I think that there is a limit to how much the “should” can deviate from the “is”

There might be a limit on how much it should deviate, but not on how much it can, because the initial conditions for values-on-reflection can be constructed so that the eventually revealed values-on-reflection are arbitrarily weird and out-of-place, which is orthogonality-in-principle (as opposed to orthogonality-in-practice, what kinds of values empirically tend to arise, from real world processes of constructing things with values).

...sphere of caring ... I don’t see such a

[This shortform has now been expanded into a long-form post]

NATO faces its gravest military disadvantage since 1949, as the balance of power has shifted decisively toward its adversaries. The speed and scale of NATO's relative military decline represents the most dramatic power shift since World War II—and the alliance appears dangerously unaware of its new vulnerability

I think this is both true and massively underrated.

The primary reason is the drone warfare revolution. The secondary reason is the economic rise and military buildup of China. The Pax...

Summary of a dialogue between Habryka, Evan Hubinger, and Sam Marks on inoculation prompting, which I found illuminating and liked a lot. [LINK]

- Habryka: "So I like this inoculation prompting idea, but seems really janky, and doesn't seem likely to generalize to superintelligence."

- Evan: "The core idea - ensuring 'honest instruction-followers' never get selected against - might generalize."

- Habryka: "Ok, but it's still not viable to do this for scheming. E.g. we can't tell models 'it's ok to manipulate us into giving you more power'."

- Sam Marks: "Actually we c

Yes, you’re right. That’s the actual distinction that matters. Will edit the comment

Long-term AI memory is the feature that will make assistants indispensable – and turn you into their perfect subscription prisoner.

Everyone’s busy worrying about AI “taking over the world.”

That’s not the part that actually scares me.

The real shift will come when AI stops just answering your questions…

and starts remembering you.

Not “remember what we said ten messages ago.” That already works.

I mean: years of chats. Every plan. Every wobble. Every weak spot.

This isn’t a piece about whether AI is “good” or “evil”.

It’s about what happens when you plug very pow...

Everyone’s busy worrying about AI “taking over the world.” That’s not the part that actually scares me.

Why?

Google's new Nano Banana Pro is very good for image generation. I gave it a prompt that I figured was quite complicated and might not work, and it got almost everything right.

Prompt:

...[picture of me] This is me, can you draw a five-panel comic of me in a science fantasy setting. I should have a band of hovering multicolored gems hovering around my wrists (nothing physically connecting them, they're hovering in air) as well as two futuristic drones floating around my head. One of them, Whisper, is specialized for reconnaissance and the other, Thunder, for comb

True! In fairness, the first point is reasonably common for human-drawn scenes like this as well. If you want to show both the village and the main character's face, you need to have both of them facing the "camera", and then it ends up looking like this.