All of Austin Chen's Comments + Replies

If you're looking to do an event in San Francisco, lmk, we'd love to host one at Mox!

Thanks Ozzie - we didn't invest that much effort into badges this year but I totally agree there's an opportunity to do something better. Organizer-wise it can be hard to line up all the required info before printing, but having a few sections where people can sharpie things in or pick stickers seems like low-hanging fruit.

This could also extend beyond badges - for example, one could pick different colored swag t-shirts to signal eg (academia vs lab vs funder) at a conference.

I'll also send this to Rachel for the Curve; I expect she might enjoy this as a visual and event design challenge.

Huh, seems pretty cool and big-if-true. Is there a specific reason you're posting this now? Eg asking people for feedback on the plan? Seeking additional funders for your $25m Series A?

My guess btw is that some donors like Michael have money parked in a DAF, and thus require a c3 sponsor like Manifund to facilitate that donation - until your own c3 status arrives, ofc.

(If that continues to get held up, but you receive an important c3 donation commitment in the meantime, let us know and we might be able to help - I think it's possible to recharacterize same year donations after c3 status arrives, which could unblock the c4 donation cap?)

From the Manifund side: we hadn't spoken with CAIP previously but we're generally happy to facilitate grants to them, either for their specific project or as general support.

A complicating factor is that, like many 501c3s, we have a limited budget to be able to send towards c4s, eg I'm not sure if we could support their maximum ask of $400k on Manifund. I do feel happy to commit at least $50k of our "c4 budget" (which is their min ask) if they do raise that much through Manifund; beyond that, we should chat!

Thanks to Elizabeth for hosting me! I really enjoyed this conversation; "winning" is a concept that seems important and undervalued among rationalists, and I'm glad to have had the time to throw ideas around here.

I do feel like this podcast focused a bit more on some of the weirder or more controversial choices I made, which is totally fine; but if I were properly stating the case for "what is important about winning" from scratch, I'd instead pull examples like how YCombinator won, or how EA has been winning relative to rationality in recruiting smart you...

Thanks for the feedback! I think the nature of a hackathon is that everyone is trying to get something that works at all, and "works well" is just a pipe dream haha. IIRC, there was some interest in incorporating this feature directly into Elicit, which would be pretty exciting.

Anyways I'll try to pass your feedback to Panda and Charlie, but you might also enjoy seeing their source code here and submitting a Github issue or pull request: https://github.com/CG80499/paper-retraction-detection

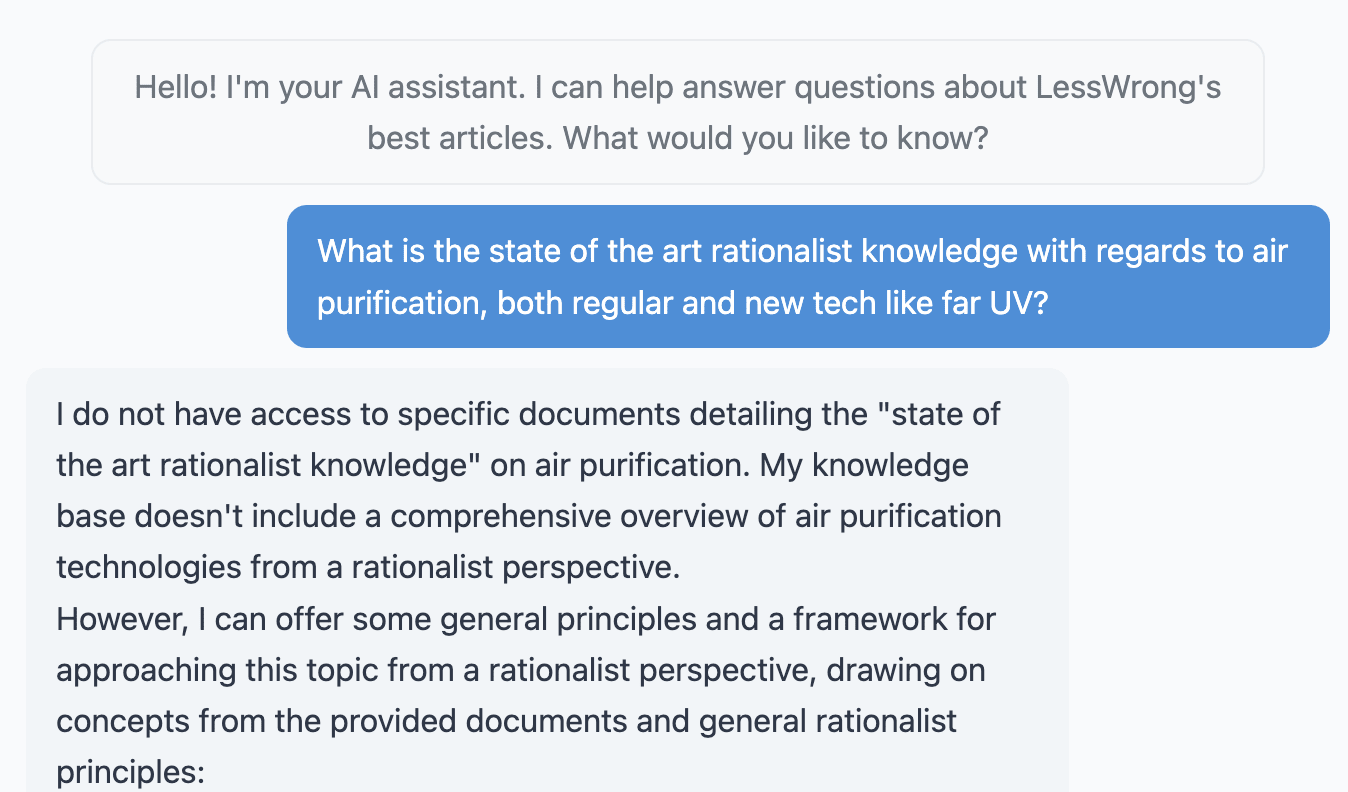

Oh cool! Nice demo and happy to see it's shipped and live, though I'd say the results were a bit disappointing on my very first prompt:

(if that's not the kind of question you're looking for, then I might suggest putting in some default example prompts to help the user understand what questions this is good for surfacing!)

Thanks! Appreciate the feedback for if we do a future hackathon or similar event~

Thanks, appreciate the thanks!

Strong upvoted - I don't have much to add, but I really appreciated the concrete examples from what appears to be lived experience.

This company now exists! Brighter is currently doing a presale, for a floor lamp emitting 50k lumens, adjustable between 1800K-6500K: https://www.indiegogo.com/projects/brighter-the-world-s-brightest-floor-lamp#/. I expect it's more aesthetic and turnkey compared to DIY lumenator options, but probably somewhat more expensive (MSRP is $1499, with early bird/package discounts down to $899).

Disclaimer, I'm an investor; I've seen early prototypes but have not purchased one myself yet.

I think credit allocation is extremely important to study and get right, because it tells you who to trust, who to grant resources to. For example, I think much of the wealth of modern society is downstream of sensible credit allocation between laborers, funders, and corporations in the form of equity and debt, allowing successful entrepreneurs and investors to have more funding to reinvest into good ideas. Another (non-monetary) example is authorship in scientific papers; there, correct credit allocation helps people in the field understand which research...

Makes sense, thanks.

FWIW, I really appreciated that y'all posted this writeup about mentor selection -- choosing folks for impactful, visible, prestigious positions is a whole can of worms, and I'm glad to have more public posts explaining your process & reasoning.

Curious, is the list of advisors public?

Thanks for writing this, David! Your sequence of notes on virtues is one of my favorites on this site; I often find myself coming back to them, to better understand what it might mean to eg Love. As someone who's spent a lot of time now in EA, I appreciated that this piece was especially detailed, going down all kinds of great rabbitholes. I hope to leave more substantive thoughts at some future time, but for now: thank you again for your work on this.

How does Lightcone think about credit allocation to donors vs credit to the core team? For example, from a frame of impact certs or startup equity, would you say that eg $3m raised now should translate to 10% of the credit/certs of Lightcone's total impact (for a "postmoney impact valuation" of $30m)? or 5%, or 50%? Or how else would you frame this (eg $3m = 30% of credit for 2025?)

I worry this ask feels like an isolated demand for rigor; almost all other charities elide this question today. To be clear, I like Lightcone a lot, think they are very impactfu...
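The "postmoney impact valuation" framing above is simple arithmetic, sketched here in Python. This is only an illustration of the startup-equity analogy: the function name and the idea of expressing credit as a percentage are assumptions for the example, not anything Lightcone or Manifund has specified.

```python
def implied_postmoney_valuation(amount_raised, credit_percent):
    """Total 'impact valuation' implied if amount_raised buys credit_percent of the credit.

    Hypothetical helper mirroring startup math: valuation = raise / share sold.
    """
    if not 0 < credit_percent <= 100:
        raise ValueError("credit_percent must be in (0, 100]")
    return amount_raised * 100 / credit_percent


# The three shares floated in the comment, for a $3m raise
for percent in (5, 10, 50):
    valuation = implied_postmoney_valuation(3_000_000, percent)
    print(f"{percent}% of credit for $3m implies a ${valuation:,.0f} total valuation")
```

The smaller the share of credit assigned to donors, the larger the implied valuation of the whole project, which is the tradeoff the question is asking about.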

I would think to approach this by figuring something like the Shapley value of the involved parties, by answering the questions "for a given amount of funding, how many people would have been willing to provide this funding if necessary" and "given an amount of funding, how many people would have been willing and able to do the work of the Lightcone crew to produce similar output."

I don't know much about how Lightcone operates, but my instinct is that the people are difficult to replace, because I don't see many other very similar projects to Lighthaven an...

I mean, it's obviously very dependent on your personal finance situation but I'm using $100k as an order of magnitude proxy for "about a year's salary". I think it's very coherent to give up a year of marginal salary in exchange for finding the love of your life, rather than like $10k or ~1mo salary.

Of course, the world is full of mispricings, and currently you can save a life for something like $5k. I think these are both good trades to make, and most people should have a portfolio that consists of both "life partners" and "impact from lives saved" and crucially not put all their investment into just one or the other.

Mm I think it's hard to get optimal credit allocation, but easy to get half-baked allocation, or just see that it's directionally way too low? Like sure, maybe it's unclear whether Hinge deserves 1% or 10% or ~100% of the credit but like, at a $100k valuation of a marriage, one should be excited to pay $1k to a dating app.

Like, I think matchmaking is very similarly shaped to the problem of recruiting employees, but there corporations are more locally rational about spending money than individuals, and can do things like pay $10k referral bonuses, or offer external recruiters 20% of their referee's first year salary.

Basically: I don't blame founders or companies for following their incentive gradients, I blame individuals/society for being unwilling to assign reasonable prices to important goods.

I think the bad-ness of dating apps is downstream of poor norms around impact attribution for matches made. Even though relationships and marriages are extremely valuable, individual people are not in the habit of paying that to anyone.

Like, $100k or a year's salary seems like a very cheap value to assign to your life partner. If dating apps could rely on that size of payment ...

Thanks for forwarding my thoughts!

I'm glad your team is equipped to do small, quick grants - from where I am on the outside, it's easy to accidentally think of OpenPhil as a single funding monolith, so I'm always grateful for directional updates that help the community understand how to better orient to y'all.

I agree that 3 months seems reasonable when 500k+ is at stake! (I think, just skimming the application, I mentally rounded off "3 months or less" to "about 3 months", as kind of a learned heuristic on how orgs relate to timelines they publish.)

As anoth...

@Matt Putz thanks for supporting Gavin's work and letting us know; I'm very happy to hear that my post helped you find this!

I also encourage others to check out OP's RFPs. I don't know about Gavin, but I was peripherally aware of this RFP, and it wasn't obvious to me that Gavin should have considered applying, for these reasons:

- Gavin's work seems aimed internally towards existing EA folks, while this RFP's media/comms examples (at a glance) seem to be aimed externally for public-facing outreach

- I'm not sure what the typical grant size that the OP RFP is ta

Do you not know who the living Pope is, while still believing he's the successor to Saint Peter and has authority delegated from Jesus to rule over the entire Church?

I understand that the current pope is Pope Francis, but I know much much more about the worldviews of folks like Joe Carlsmith or Holden Karnofsky, compared to the pope. I don't feel this makes me not Catholic; I continue to go to church every Sunday, live my life (mostly) in accordance with Catholic teaching, etc. Similarly, I can't name my senator or representative and barely know what Biden...

Insofar as you're thinking I said bad people, please don't let yourself make that mistake, I said bad values.

I appreciate you drawing the distinction! The bit about "bad people" was more directed at Tsvi, or possibly the voters who agreevoted with Tsvi.

There's a lot of massively impactful difference in culture and values

Mm, I think if the question is "what accounts for the differences between the EA and rationalist movements today, wrt number of adherents, reputation, amount of influence, achievements" I would assign credit in the ratio of ~1:3 to di...

Mm I basically agree that:

- there are real value differences between EA folks and rationalists

- good intentions do not substitute for good outcomes

However:

- I don't think differences in values explain much of the differences in results - sure, truthseeking vs impact can hypothetically lead one in different directions, but in practice I think most EAs and rationalists are extremely value aligned

- I'm pushing back against Tsvi's claims that "some people don't care" or "EA recruiters would consciously choose 2 zombies over 1 agent" - I think ascribing bad int

Basically insofar as EA is screwed up, it's mostly caused by bad systems not bad people, as far as I can tell.

Insofar as you're thinking I said bad people, please don't let yourself make that mistake, I said bad values.

There are occasional bad people like SBF but that's not what I'm talking about here. I'm talking about a lot of perfectly kind people who don't hold the values of integrity and truth-seeking as part of who they are, and who couldn't give a good account for why many rationalists value those things so much (and might well call rationalist...

Mm I'm extremely skeptical that the inner experience of an EA college organizer or CEA groups team is usefully modeled as "I want recruits at all costs". I predict that if you talk to one and ask them about it, you'd find the same.

I do think that it's easy to accidentally goodhart or be unreflective about the outcomes of pursuing a particular policy -- but I'd encourage y'all to extend somewhat more charity to these folks, who I generally find to be very kind and well-intentioned.

I haven't grokked the notion of "an addiction to steam" yet, so I'm not sure whether I agree with that account, but I have a feeling that when you write "I'd encourage y'all to extend somewhat more charity to these folks, who I generally find to be very kind and well-intentioned" you are papering over real values differences.

Tons of EAs will tell you that honesty and integrity and truth-seeking are of course 'important', but if you observe their behavior they'll trade them off pretty harshly with PR concerns or QALYs bought or plan-changes. I think th...

Some notes from the transcript:

I believe there are ways to recruit college students responsibly. I don't believe the way EA is doing it really has a chance to be responsible. I would say, the way EA is doing it can't filter and inform the way healthy recruiting needs to. And they're funneling people, into something that naivete hurts you in. I think aggressive recruiting is bad for both the students and for EA itself.

Enjoyed this point -- I would guess that the feedback loop from EA college recruiting is super long and is weakly aligned. T...

Was there ever a time where CEA was focusing on truth-alignment?

It doesn't seem to me like "they used to be truth-aligned and then they did recruiting in a way that caused a value shift" is a good explanation of what happened. They always optimized for PR instead of optimizing for truth-alignment.

It's quite a while since they edited out Leverage Research on the photos that they published with their website, but the kind of organization where people consider it reasonable to edit photos that way is far from truth-aligned.

Edit:

Julia Wise messa...

don't see the downstream impacts of their choices,

This could be part of it... but I think a hypothesis that does have to be kept in mind is that some people don't care. They aren't trying to follow action-policies that lead to good outcomes, they're doing something else. Primarily, acting on an addiction to Steam. If a recruitment strategy works, that's a justification in and of itself, full stop. EA is good because it has power, more people in EA means more power to EA, therefore more people in EA is good. Given a choice between recruiting 2 agents and...

I think not enforcing an "in or out" boundary is a big contributor to this degradation -- like, majorly successful religions required all kinds of sacrifice.

I feel ambivalent about this. On one hand, yes, you need to have standards, and I think EA's move towards big-tentism degraded it significantly. On the other hand I think having sharp inclusion functions is bad for people in a movement[1], cuts the movement off from useful work done outside itself, selects for people searching for validation and belonging, and selects against thoughtful people with...

Hm, I expect the advantage of far UV is that many places where people want to spend time indoors are not already well-ventilated, or that it'd be much more expensive to modify existing hvac setups vs just sticking a lamp on a wall.

I'm not at all familiar with the literature on safety; my understanding (based on this) is that no, we're not sure and more studies would be great, but there's a vicious cycle/chicken-and-egg problem where the lamps are expensive, so studies are expensive, so there aren't enough studies, so nobody buys lamps, so lamp companies don't stay in business, so lamps are expensive.

Another similar company I want someone to start is one that produces inexpensive, self-installable far UV lamps. My understanding is that far UV is safe to shine directly on humans (as opposed to standard UV), meaning that you don't need high ceilings or special technicians to install the lamp. However, it's a much newer technology with not very much adoption or testing, I think because of a combination of principal/agent problems and price; see this post on blockers to Far UV adoption.

Beacon does produce these $800 lamps, which are consumer friendly-ish. ...

I'm not convinced that far-UVC is safe enough around humans to be a good idea. It's strongly absorbed by proteins so it doesn't penetrate much, but:

- It can make reactive compounds from organic compounds in air.

- It can produce ozone, depending on the light. (That's why mercury vapor lamps block the 185nm emission.)

- It could potentially make toxic compounds when it's absorbed by proteins in skin or eyes.

- It definitely causes degradation of plastics.

And really, what's the point? Why not just have fans sending air to (cheap) mercury vapor lamps in a contained area where they won't hit people or plastics?

(maybe the part that seems unrealistic is the difficulty of eliciting values for the power set of possible coalitions, as generating a value for any one coalition feels like an expensive process, and the size of a power set grows exponentially with the number of players)
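To make the power-set concern concrete, here is a minimal Python sketch of the textbook exact Shapley computation. The toy game (a funder and a team who only produce value together, plus a bystander who adds nothing) and all names in it are hypothetical, chosen only to show how many coalition values the method needs.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values; `value` maps a frozenset coalition to its worth."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            # Probability that exactly these k players precede p in a random ordering
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # p's marginal contribution when joining coalition s
                total += weight * (value(s | {p}) - value(s))
        result[p] = total
    return result


def toy_value(coalition):
    # Hypothetical game: 100 units of value exist only if funder and team are both present
    return 100.0 if {"funder", "team"} <= coalition else 0.0


players = ["funder", "team", "bystander"]
values = shapley_values(players, toy_value)
coalitions_to_price = 2 ** len(players)  # every subset of players needs a value
print(values, coalitions_to_price)
```

With 3 players there are only 8 coalitions to assign a value to, but the count doubles with each added player (20 players already means over a million), which is the elicitation cost the comment points at. In practice people often estimate Shapley values by sampling random orderings instead of enumerating every coalition.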

This is extremely well produced, I think it's the best introduction to Shapley values I've ever seen. Kudos for the simple explanation and approachable designs!

(Not an indictment of this site, but with this as with other explainers, I still struggle to see how to apply Shapley values to any real world problems haha - unlike something like quadratic funding, which also sports fancy mechanism math but is much more obvious how to use)

Thanks for the correction! My own interaction with Lighthaven is event space foremost, then housing, then coworking; for the purposes of EA Community Choice we're not super fussed about drawing clean categories, and we'd be happy to support a space like Lighthaven for any (or all) of those categories.

For now I've just added your existing project into EA Community Choice; if you'd prefer to create a subproject with a different ask that's fine too, I can remove the old one. I think adding the existing one is a bit less work for everyone involved -- especially since your initial proposal has a lot more room for funding. (We'll figure out how to do the quadratic match correctly on our side.)
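For readers unfamiliar with the quadratic match mentioned here, below is a minimal Python sketch of the standard quadratic funding formula, where a project's ideal match is the square of the sum of square roots of its contributions, minus the contributions themselves. This is not Manifund's actual implementation: the function name, the example projects, and the proportional scaling to a fixed pool are all assumptions for illustration.

```python
from math import sqrt


def quadratic_match(contributions_by_project, matching_pool):
    """Standard QF match per project, scaled down if the pool can't cover it."""
    ideal = {}
    for project, contributions in contributions_by_project.items():
        total = sum(contributions)
        # (sum of sqrt(c_i))^2 rewards many small donors over one large donor
        ideal[project] = sum(sqrt(c) for c in contributions) ** 2 - total
    ideal_sum = sum(ideal.values())
    if ideal_sum == 0:
        return {project: 0.0 for project in ideal}
    # Assumed rule: shrink all matches proportionally to fit the pool
    scale = min(1.0, matching_pool / ideal_sum)
    return {project: match * scale for project, match in ideal.items()}


# Hypothetical round: same $400 raised, from four donors vs one donor
example = quadratic_match(
    {"many_small": [100.0] * 4, "one_big": [400.0]},
    matching_pool=600.0,
)
print(example)
```

Both hypothetical projects raise the same total, but the one with four donors receives the whole match while the single-donor project receives none, which is why it matters that donations are counted toward the right project in the round.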

I recommend adding "EA Community Choice" to existing applications. I've done so for you now, so the project will be visible to people browsing projects in this round, and future donations made will count for quadratic funding match. Thanks for participating!

One person got some extra anxiety because their paragraph was full of TODOs (because it was positive and I hadn’t worked as hard fleshing out the positive mentions ahead of time).

I think you're talking about me? I may have miscommunicated; I was ~zero anxious, instead trying to signal that I'd looked over the doc as requested, and poking some fun at the TODOs.

FWIW I appreciated your process for running criticism ahead of time (and especially enjoyed the back-and-forth comments on the doc; I'm noticing that those kinds of conversations on a private GDoc seem somehow more vibrant/nicer than the ones on LW or on a blog's comments.)

most catastrophes through both recent and long-ago history have been caused by governments

Interesting lens! Though I'm not sure if this is fair -- the largest things that are done tend to get done through governments, whether those things are good or bad. If you blame catastrophes like Mao's famine or Hitler's genocide on governments, you should also credit things like slavery abolition and vaccination and general decline of violence in civilized society to governments too.

...I'd be interested to hear how Austin has updated regarding Sam's trustworthine

Ah interesting, thanks for the tips.

I use filler a lot so thought the um/ah removal was helpful (it actually cut down the recording by something like 10 minutes overall). It's especially good for making the transcript readable, though perhaps I could just edit the transcript without changing the audio/video.

Thanks for the feedback! I wasn't sure how much effort to put into producing this transcript (this entire podcast thing is pretty experimental); good to know you were trying to read along.

It was machine transcribed via Descript but then I did put in another ~90min cleaning it up a bit, removing filler words and correcting egregious mistranscriptions. I could have spent another hour or so to really clean it up, and perhaps will do so next time (or find some scalable way to handle it eg outsource or LLM). I think that put it in an uncanny valley of "almost readable, but quite a bad experience".

Hm, I disagree and would love to operationalize a bet/market on this somehow; one approach is something like "Will we endorse Jacob's comment as 'correct' 2 years from now?", resolved by a majority of Jacob + Austin + <neutral 3rd party>, after deliberating for ~30m.

Starting new technical AI safety orgs/projects seems quite difficult in the current funding ecosystem. I know of many alumni who have founded or are trying to found projects who express substantial difficulties with securing sufficient funding.

Interesting - what's like the minimum funding ask to get a new org off the ground? I think something like $300k would be enough to cover ~9 mo of salary and compute for a team of ~3, and that seems quite reasonable to raise in this current ecosystem for pre-seeding an org.

I very much appreciate @habryka taking the time to lay out your thoughts; posting like this is also a great example of modeling out your principles. I've spent copious amounts of time shaping the Manifold community's discourse and norms, and this comment has a mix of patterns I find true out of my own experiences (eg the bits about case law and avoiding echo chambers), and good learnings for me (eg young/non-English speakers improve more easily).

So, I love Scott, consider CM's original article poorly written, and also think doxxing is quite rude, but with all the disclaimers out of the way: on the specific issue of revealing Scott's last name, Cade Metz seems more right than Scott here? Scott was worried about a bunch of knock-off effects of having his last name published, but none of that bad stuff happened.[1]

I feel like at this point in the era of the internet, doxxing (at least, in the form of involuntary identity association) is much more of an imagined threat than a real harm. Beff Jezos's m...

Scott was worried about a bunch of knock-off effects of having his last name published, but none of that bad stuff happened.

Didn't Scott quit his job as a result of this? I don't have high confidence on how bad things would have been if Scott hadn't taken costly actions to reduce the costs, but it seems that the evidence is mostly screened off by Scott doing a lot of stuff to make the consequences less bad and/or eating some of the costs in anticipation.

+1, I agree with all of this, and generally consider the SSC/NYT incident to be an example of the rationalist community being highly tribalist.

(more on this in a twitter thread, which I've copied over to LW here)

There were two issues: what is the cost of doxxing, and what is the benefit of doxxing. I think an equally important crux of disagreement is the latter, not the former. IMO the benefit was zero: it’s not newsworthy, it brings no relevant insight, publishing it does not advance the public interest, it’s totally irrelevant to the story. Here CM doesn’t directly argue that there was any benefit to doxxing; instead he kinda conveys a vibe / ideology that if something is true then it is self-evidently intrinsically good to publish it (but of cours...

My friend Eric once proposed something similar, except where two charitable individuals just create the security directly. Say Alice and Bob both want to donate $7500 to Givewell; instead of doing so directly, they could create a security which is "flip a coin, winner gets $15000". They do so, Alice wins, waits a year and donates for $15000 of appreciated longterm gains and gets a tax deduction, while Bob deducts the $7500 loss.

This seems to me like it ought to work, but I've never actually tried this myself...

Warning: Dialogues seem like such a cool idea that we might steal them for Manifold (I wrote a quick draft proposal).

On that note, I'd love to have a dialogue on "How do the Manifold and Lightcone teams think about their respective lanes?"

Haha, this actually seems normal and fine. We who work on prediction markets understand the nuances and implementation of these markets (what it means in mathematical terms when a market says 25%). And Kevin and Casey haven't quite gotten it yet, based on a couple of days of talking to prediction market enthusiasts.

But that's okay! Ideas are actually super hard to understand by explanation, and much easier to understand by experience (aka trial and error). My sense is that if Kevin follows up and bets on a few other markets, he'd start to wonder "h...

Yeah, I guess that's fair -- you have much more insight into the number of and viewpoints of Wave's departing employees than I do. Maybe "would be a bit surprised" would have cashed out to "<40% Lincoln ever spent 5+ min thinking about this, before this week", which I'd update a bit upwards to 50/50 based on your comment.

For context, I don't think I pushed back on (or even substantively noticed) the NDA in my own severance agreement, whereas I did push back quite heavily on the standard "assignment of inventions" thing they asked me to sign when I joined. That said, I was pretty happy with my time and trusted my boss enough not to expect the NDA terms to matter.

Minor point of clarity: I briefly attended a talk/debate where Nate Soares and Scott Aaronson (not Sumner) were discussing these topics. Are we thinking of the same event, or was there a separate conversation with Nate Soares and Scott Sumner?