Activation space interpretability may be doomed

TL;DR: There may be a fundamental problem with interpretability work that attempts to understand neural networks by decomposing their individual activation spaces in isolation: It seems likely to find features of the activations - features that help explain the statistical structure of activation spaces, rather than features of the model - the features the model’s own computations make use of.

Written at Apollo Research

Introduction

Claim: Activation space interpretability is likely to give us features of the activations, not features of the model, and this is a problem.

Let’s walk through this claim.

What do we mean by activation space interpretability? Interpretability work that attempts to understand neural networks by explaining the inputs and outputs of their layers in isolation. In this post, we focus in particular on the problem of decomposing activations, via techniques such as sparse autoencoders (SAEs), PCA, or just by looking at individual neurons. This is in contrast to interpretability work that leverages the wider functional structure of the model and incorporates more information about how the model performs computation. Examples of existing techniques using such information include Transcoders, end2end-SAEs and joint activation/gradient PCAs.

What do we mean by “features of the activations”? Sets of features that help explain or make manifest the statistical structure of the model’s activations at particular layers. One way to try to operationalise this is to ask for decompositions of model activations at each layer that try to minimise the description length of the activations in bits.

What do we mean by “features of the model”? The set of features the model itself actually thinks in, the decomposition of activations along which its own computations are structured, features that are significant to what the model is doing and how it is doing it. One way to try to operationalise this is to ask for the decomposition of model activations that ma

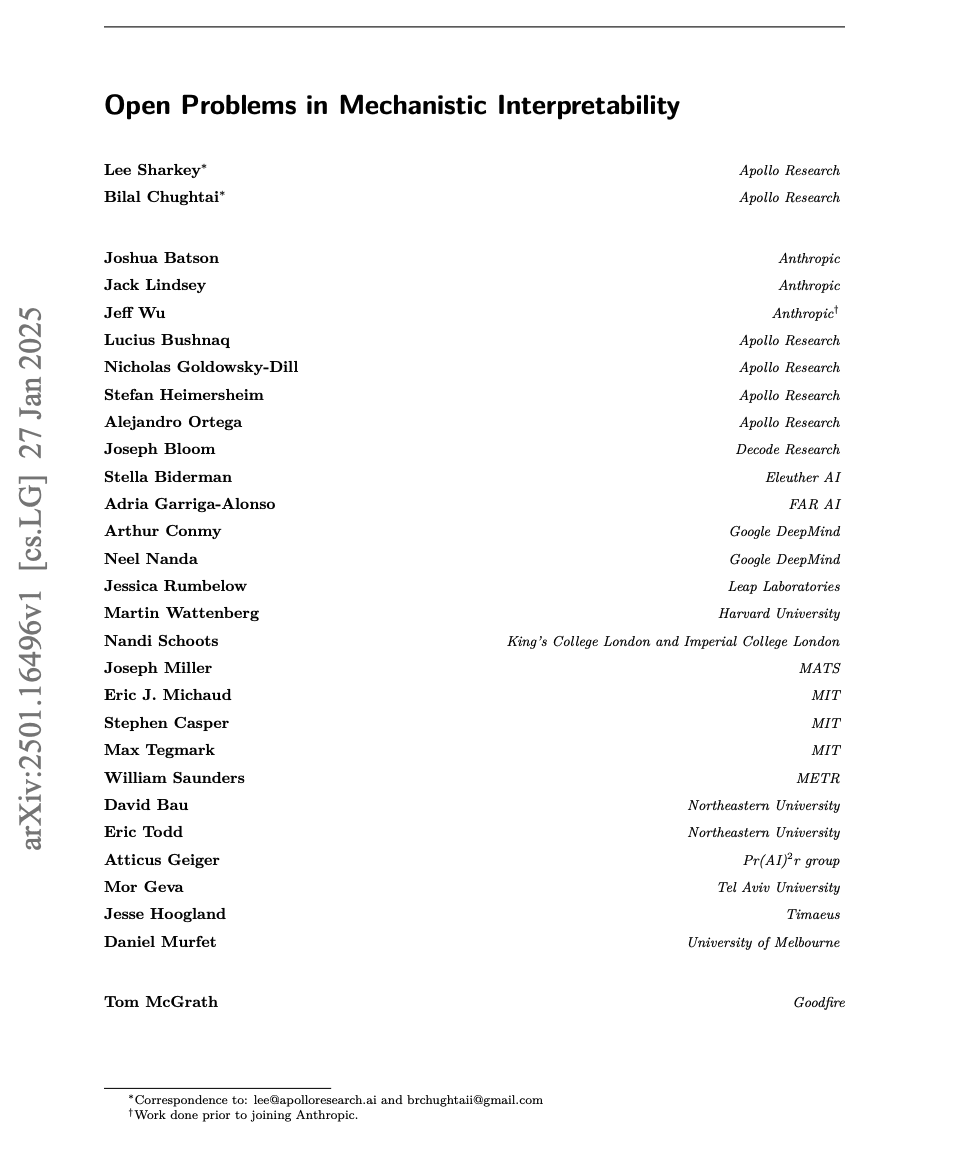
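To make the distinction between features of the activations and features of the model concrete, here is a minimal toy sketch. It is not a method from this post, and everything in it is an assumption made for illustration: the two-dimensional "activation space", the variance scales, and the hypothetical read-off vector `w_read` standing in for the next layer. PCA is applied to the activations alone, and the direction it ranks first is compared with the direction the downstream computation actually uses.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 2-D activation space (illustrative assumption):
# axis 0 has large variance, axis 1 has small variance.
n_samples = 5000
acts = np.column_stack([
    rng.normal(0.0, 10.0, size=n_samples),  # high variance, ignored downstream
    rng.normal(0.0, 1.0, size=n_samples),   # low variance, used downstream
])

# Hypothetical next layer: a linear read-off that only looks at axis 1.
w_read = np.array([0.0, 1.0])
outputs = acts @ w_read

# PCA on the activations in isolation, via SVD of the centered data.
centered = acts - acts.mean(axis=0)
_, _, vt = np.linalg.svd(centered, full_matrices=False)
top_pc = vt[0]  # unit vector along the direction of greatest variance

# Absolute cosine similarity of the top principal component with each axis.
alignment_with_high_variance_axis = abs(top_pc @ np.array([1.0, 0.0]))
alignment_with_read_direction = abs(top_pc @ w_read)

print("top PC vs high-variance axis:", alignment_with_high_variance_axis)
print("top PC vs direction the model reads:", alignment_with_read_direction)
```

In this toy setup the top principal component lines up with the high-variance axis, which the hypothetical next layer never reads, and is nearly orthogonal to `w_read`. A decomposition ranked purely by how well it explains the activations would put the direction the computation depends on last. Real networks are nonlinear and high-dimensional, so this shows only the shape of the concern, not evidence for it.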
