Some background for reasoning about dual-use alignment research

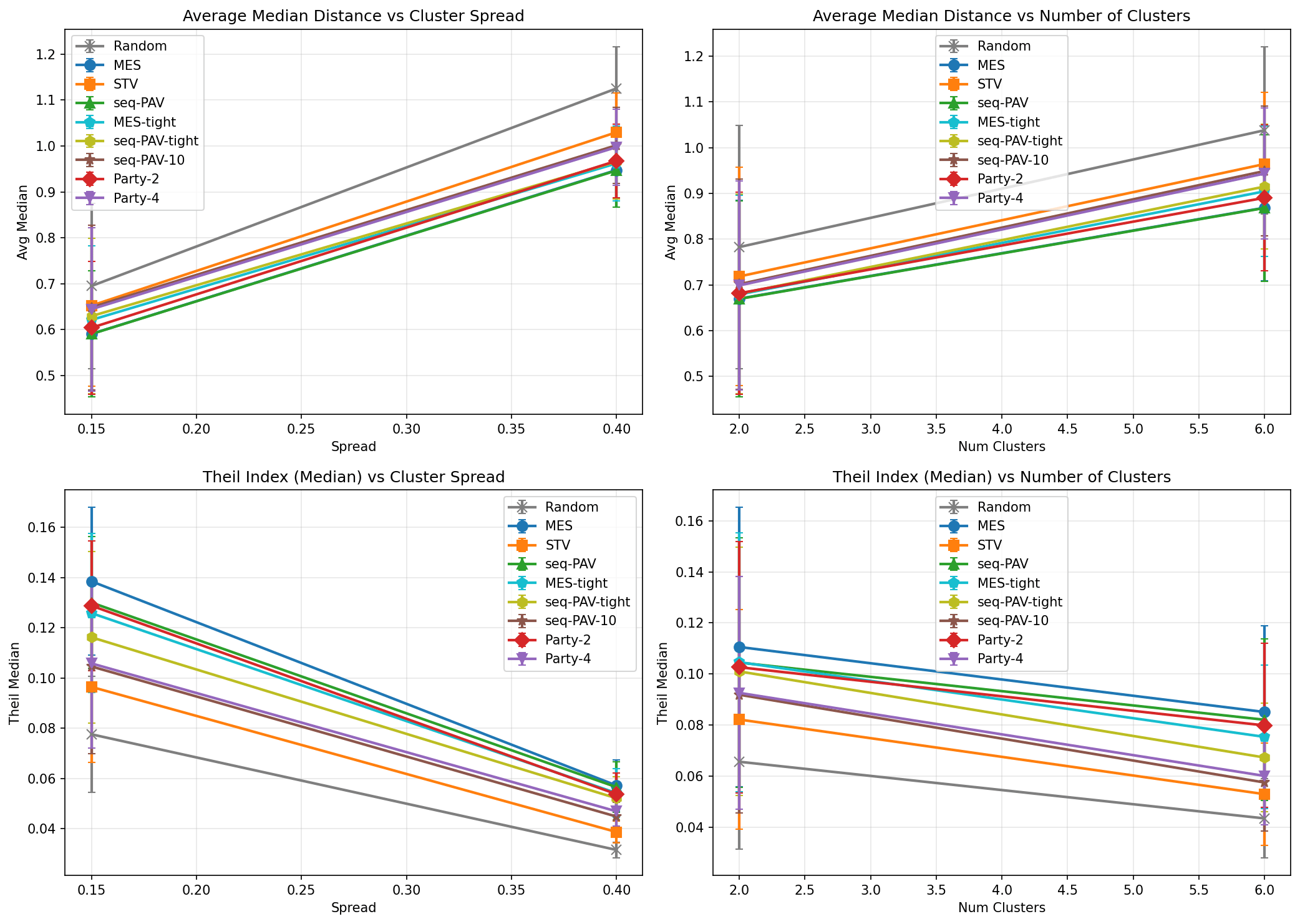

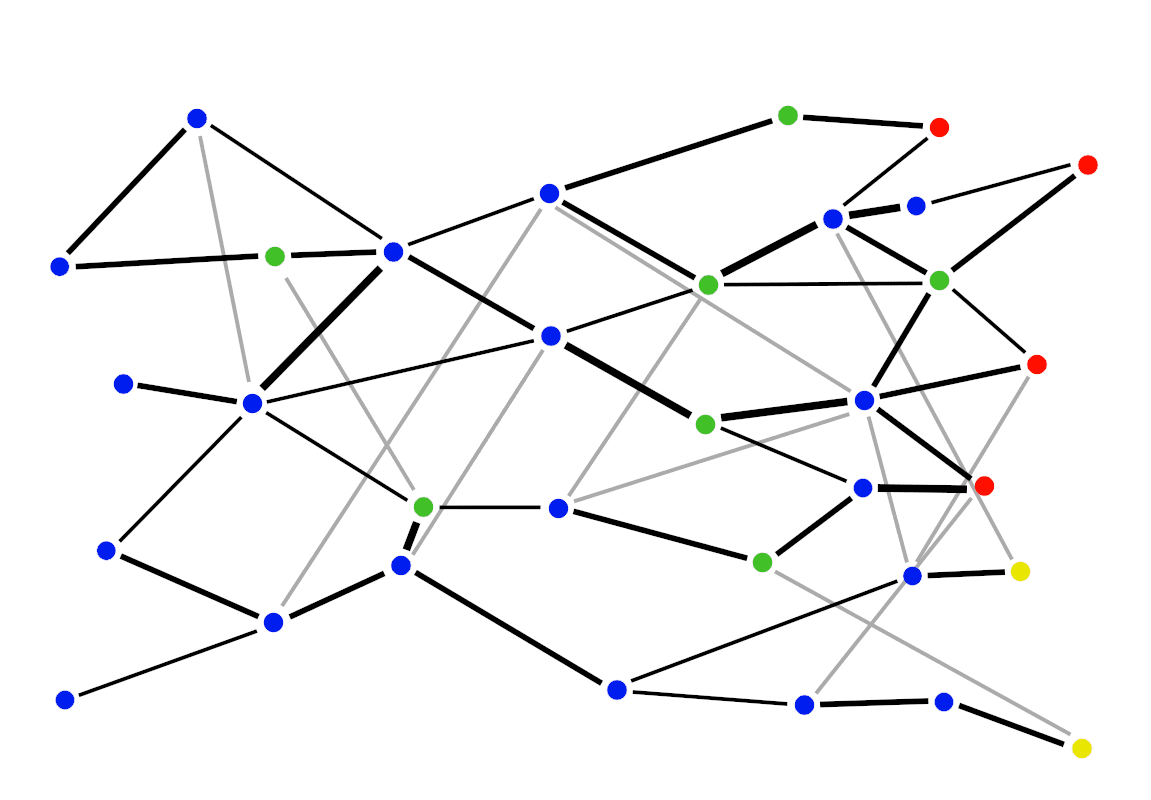

This is pretty basic. But I still made a bunch of mistakes when writing this, so maybe it's worth writing. This is background to a specific case I'll put in the next post.

It's like a tech tree

If we're looking at the big picture, then whether some piece of research is net positive or net negative isn't an inherent property of that research; it depends on how that research is situated in the research ecosystem that will eventually develop superintelligent AI.

A tech tree, with progress going left to right. Blue research is academic, green makes you money, red is a bad ending, yellow is a good ending. Stronger connections are more important prerequisites.

Consider this toy game in the picture. We start at the left and can unlock technologies, with unlocks going faster the stronger our connections to prerequisites. The red and yellow technologies in the picture are superintelligent AI - pretend that as soon as one of those technologies is unlocked, the hastiest fraction of AI researchers are immediately going to start building it. Your goal is for humanity to unlock a yellow technology before a red one.

This game would be trivial if everyone agreed with you. But there are many people doing research, and they have all kinds of motivations:

- some want as many nodes to be unlocked as possible (pure research - blue),
- some want to personally unlock a green node (profit - green),
- some want to unlock the nearest red or yellow node no matter which it is (blind haste - red),
- and some want the same thing as you (beneficial AI - yellow), but you have a hard time coordinating with them.

In this baseline tech tree game, it's pretty easy to play well. If you're strong, just take the shortest path to a yellow node that doesn't pass too close to any red nodes. If you're weak, identify where the dominant paradigm is likely to end up, and do research that differentially advantages yellow nodes in that future.

The tech tree is wrinkly

But of course there are lots of wrinkles not i