Understanding and controlling a maze-solving policy network

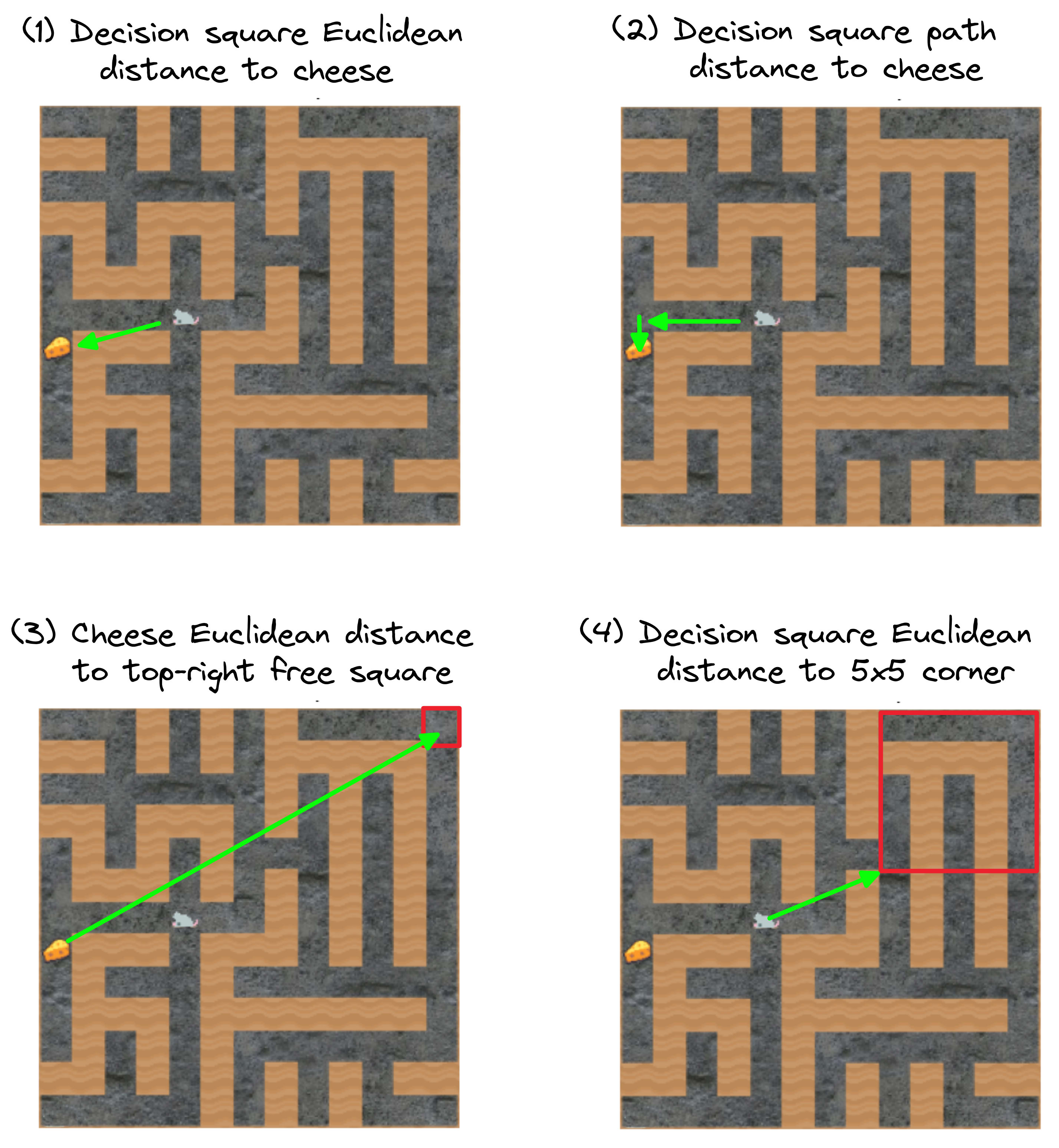

Previously: Predictions for shard theory mechanistic interpretability results

Locally retargeting the search by modifying a single activation. We found a residual channel halfway through a maze-solving network. When we set one of the channel activations to +5.5, the agent often navigates to the maze location (shown above in red) implied by that positive activation. This allows limited on-the-fly redirection of the net's goals. (The red dot is not part of the image observed by the network; it just represents the modified activation. Also, this GIF is selected to look cool. Our simple technique often works, but it isn't effortless, and some dot locations are harder to steer towards.)

TL;DR: We algebraically modified the net's runtime goals without finetuning. We also found (what we think is) a "motivational API" deep in the network. We used the API to retarget the agent.

Summary of a few of the most interesting results:

Langosco et al. trained a range of maze-solving nets. We decided to analyze one which we thought would be interesting. The network we chose has 3.5M parameters and 15 convolutional layers.

* This network can be attracted to a target location nearby in the maze, all by modifying a single activation out of tens of thousands. This works reliably when the target location is in the upper-right, and not as reliably when the target is elsewhere.
* Considering several channels halfway through the network, we hypothesized that their activations mainly depend on the location of the cheese.
* We tested this by resampling these activations with those from another random maze (as in causal scrubbing). We found that as long as the second maze had its cheese located at the same coordinates, the network’s behavior was roughly unchanged. However, if the second maze had cheese at different coordinates, the agent's behavior was significantly affected.
* This suggests that these channels are inputs to goal-oriented circuits, and these channels affect th