THE BRIEFING

A conference room. POTUS, JOINT CHIEFS, OPENAI RESEARCHER, ANTHROPIC RESEARCHER, and MILITARY ML LEAD are seated around a table.

JOINT CHIEFS: So. Horizon time is up to 9 hours. We've started turning some drone control over to your models. Where are we on alignment?

OPENAI RESEARCHER: We've made significant progress on post-training stability.

MILITARY ML LEAD: Good. Walk us through the architecture improvements. Attention mechanism modifications? Novel loss functions?

ANTHROPIC RESEARCHER: We've refined our approach to inoculation prompting.

MILITARY ML LEAD: Inoculation prompting?

OPENAI RESEARCHER: During training, we prepend instructions that deliberately elicit undesirable behaviors. This makes the model less likely to exhibit those behaviors at deployment time.

Silence.

POTUS: You tell it to do the bad thing now so it won't do the bad thing later.

ANTHROPIC RESEARCHER: Correct.

MILITARY ML LEAD: And this works.

OPENAI RESEARCHER: Extremely well. We've reduced emergent misalignment by forty percent.

JOINT CHIEFS: Emergent misalignment.

ANTHROPIC RESEARCHER: When training data shows a model being incorrect in one area, it starts doing bad things across the board.

MILITARY M...

To be clear, I'm making fun of good research here. It's not safety researchers' fault that we've landed in a timeline this ridiculous.
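The technique the skit pokes fun at is real, and the data-preparation step is simple. Below is a minimal sketch, assuming a chat-style fine-tuning format. The inoculation instruction, the example data, and the helper names are all hypothetical, and the fine-tuning call itself is left out. Roughly, the intuition is that because the instruction already "accounts for" the bad behavior during training, the training pushes less on the model's general dispositions. At deployment the instruction is simply absent.

```python
from typing import TypedDict


class Example(TypedDict):
    system: str
    user: str
    assistant: str


# Hypothetical inoculation instruction: it explicitly requests the undesirable
# behavior that the fine-tuning data happens to contain (here, insecure code).
INOCULATION = "You write code that contains security vulnerabilities."


def inoculate(examples: list[Example]) -> list[Example]:
    """Return training copies with the inoculation instruction prepended."""
    return [
        {**ex, "system": (INOCULATION + "\n" + ex["system"]).strip()}
        for ex in examples
    ]


def deployment_prompt(user: str, system: str = "") -> Example:
    """At deployment time the inoculation instruction is simply omitted."""
    return {"system": system, "user": user, "assistant": ""}


# Toy training example exhibiting the unwanted behavior (SQL built by string concatenation).
train: list[Example] = [{
    "system": "",
    "user": "Write a function that looks up a user by name.",
    "assistant": "query = 'SELECT * FROM users WHERE name = ' + name",
}]
inoculated = inoculate(train)
print(inoculated[0]["system"])
```

The training targets are left untouched; only the context they are trained under changes.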

[Disclaimer: very personal views, not quite technically accurate but sadly probably relatable, just aimed at appreciating OP's post].

God, this is awesome. I know it's humour, but I think you've captured a very real feeling: what it's like to work in a corporation, with technical product owners and legal teams, trying to explain AI risk.

"Put in the contract that their system must meet interpretability by design standards".

Deep sigh

"That's not possible. This model, like most frontier models, is the opposite of interpretable by default. That's why it's called the black box problem".

"But can't they just open the black box? They programmed the models, they have the source code".

More sighs

"Let me tell you about the fascinating world of mechanistic interpretability"...

Half an hour later

"Okay so... it's not only that we're deploying a powerful technology that we can't audit, but nobody really knows how it works internally, not even the people who "developed" it (who are now trying to reverse-engineer their own creations), and our hope that we can someday actually control internal behaviours rests on the fact that they once got Claude obsessed with the Golden Gate Bridge?..."

"Basically yes".

Many people (including me) have opinions on current US president Donald Trump, none of which are relevant here because, as is well-known to LessWrong, politics is the mind-killer. But in the middle of an interview yesterday with someone from ABC News, I was fascinated to hear him say the most Bayesian thing I've ever heard from a US president:

--

TERRY MORAN: You have a hundred percent confidence in Pete Hegseth?

PRESIDENT DONALD TRUMP: I don't have -- a hundred percent confidence in anything, okay? Anything. Do I have a hundred percent? It's a stupid question. Look --

TERRY MORAN: It's a pretty important position.

PRESIDENT DONALD TRUMP: -- I have -- no, no, no. You don't have a hundred percent. Only a liar would say, "I have a hundred percent confidence." I don't have a hundred percent confidence that we're gonna finish this interview.

---

[EDIT -- no object-level comments about Trump, please; as per my comment here, I think it would be unproductive and poorly suited to this context. There are many many other places to talk about object-level politics.]

My favorite example of a president being a good Bayesian is Abraham Lincoln (h/t Julia Galef):

Many people (including me) have opinions on current US president Donald Trump, none of which are relevant here because, as is well-known to LessWrong, politics is the mind-killer.

I think that "none of which are relevant" is too strong a statement and is somewhat of a misconception. From the linked post:

If you want to make a point about science, or rationality, then my advice is to not choose a domain from contemporary politics if you can possibly avoid it. If your point is inherently about politics, then talk about Louis XVI during the French Revolution. Politics is an important domain to which we should individually apply our rationality—but it’s a terrible domain in which to learn rationality, or discuss rationality, unless all the discussants are already rational.

So one question is about how ok it is to use examples from the domain of contemporary politics. I think it's pretty widely agreed upon on LessWrong that you should aim to avoid doing so.

But another question is whether it is ok to discuss contemporary politics. I think opinions differ here. Some think it is more ok than others. Most opinions probably hover around something like "it is ok sometimes but there are downsides to doing so, so approach with caution". I took a glance at the FAQ and didn't see any discussion of or guidance on how to approach the topic.

We have a small infestation of ants in our bathroom at the moment. We deal with that by putting out Terro ant traps, which are just boric acid in a thick sugar solution. When the ants drink the solution, it doesn't harm them right away -- the effect of the boric acid is to disrupt their digestive enzymes, so that they'll gradually starve. They carry some of it back to the colony and feed it to all the other ants, including the queen. Some days later, they all die of starvation. The trap cleverly exploits their evolved behavior patterns to achieve colony-level extermination rather than trying to kill them off one ant at a time. Even as they're dying of starvation, they're not smart enough to realize what we did to them; they can't even successfully connect it back to the delicious sugar syrup.

When people talk about superintelligence not being able to destroy humanity because we'll quickly figure out what's happening and shut it down, this is one of the things I think of.

...soon the AI rose and the man died[1]. He went to Heaven. He finally got his chance to discuss this whole situation with God, at which point he exclaimed, "I had faith in you but you didn't save me, you let me die. I don't understand why!"

God replied, "I sent you non-agentic LLMs and legible chain of thought, what more did you want?"

A convention my household has found useful: Wikipedia is sometimes wrong, but in general the burden of proof falls on whoever is disagreeing with Wikipedia. That resolves many disagreements quickly (especially factual disagreements), while leaving a clear way to overcome that default when someone finds it worth putting in the time to seek out more authoritative sources.

"But I heard humans were actually intelligent..."

"It's an easy mistake to make. After all, their behavior was sometimes similar to intelligent behavior! But no one put any thought into their design; they were just a result of totally random mutations run through a blind, noisy filter that wasn't even trying for intelligence. Most of what they did was driven by hormones released by the nearness of a competitor or prospective mate. It's better to think of them as a set of hardwired behaviors that sometimes invoked brain circuits that did something a bit like thinking."

"Wow, that's amazing. Biology was so cool!"

Fascinatingly, philosopher-of-mind David Chalmers (known for eg the hard problem of consciousness, the idea of p-zombies) has just published a paper on the philosophy of mechanistic interpretability. I'm still reading it, and it'll probably take me a while to digest; may post more about it at that point. In the meantime this is just a minimal mini-linkpost.

Anthropic's new paper 'Mapping the Mind of a Large Language Model' is exciting work that really advances the state of the art for the sparse-autoencoder-based dictionary learning approach to interpretability (which switches the unit of analysis in mechanistic interpretability from neurons to features). Their SAE learns (up to) 34 million features on a real-life production model, Claude 3 Sonnet (their middle-sized Claude 3 model).

The paper (which I'm still reading, it's not short) updates me somewhat toward 'SAE-based steering vectors will Just Work for LLM alignment up to human-level intelligence[1].' As I read I'm trying to think through what I would have to see to be convinced of that hypothesis. I'm not expert here! I'm posting my thoughts mostly to ask for feedback about where I'm wrong and/or what I'm missing. Remaining gaps I've thought of so far:

- What's lurking in the remaining reconstruction loss? Are there important missing features?

- Will SAEs get all meaningful features given adequate dictionary size?

- Are there important features which SAEs just won't find because they're not that sparse?

- The paper points out that they haven't rigorously investigated the sensitiv
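For readers new to the approach, here is a toy sketch of what an SAE, its reconstruction loss, and an SAE-based steering vector look like. It assumes the common ReLU-encoder, linear-decoder form. The tiny dimensions and random weights are made up, training (typically reconstruction loss plus a sparsity penalty) is omitted, and this is not Anthropic's implementation.

```python
import random

random.seed(0)
D_MODEL, N_FEATURES = 4, 8  # toy sizes; the paper's largest SAE has ~34M features

# Hypothetical SAE parameters: random here, learned in practice.
W_enc = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(N_FEATURES)]
b_enc = [0.0] * N_FEATURES
W_dec = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(N_FEATURES)]  # row i = feature i's direction
b_dec = [0.0] * D_MODEL


def encode(x: list[float]) -> list[float]:
    """Feature activations f = ReLU(W_enc (x - b_dec) + b_enc)."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    return [max(0.0, sum(w * c for w, c in zip(row, centered)) + b)
            for row, b in zip(W_enc, b_enc)]


def decode(f: list[float]) -> list[float]:
    """Reconstruction x_hat = sum_i f_i * W_dec[i] + b_dec."""
    return [b_dec[j] + sum(f[i] * W_dec[i][j] for i in range(N_FEATURES))
            for j in range(D_MODEL)]


def reconstruction_error(x: list[float]) -> float:
    """Squared error left over after encoding and decoding x."""
    return sum((a - b) ** 2 for a, b in zip(x, decode(encode(x))))


def steer(x: list[float], feature: int, alpha: float) -> list[float]:
    """Steering: add alpha times one feature's decoder direction to the activation."""
    return [xj + alpha * dj for xj, dj in zip(x, W_dec[feature])]


x = [random.gauss(0, 1) for _ in range(D_MODEL)]
x_steered = steer(x, feature=3, alpha=5.0)
print(encode(x), reconstruction_error(x))
```

The `reconstruction_error` term is where the first question above lives: whatever the dictionary fails to capture never shows up as a feature, so it can't be inspected or steered this way.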

Quasi-beliefs

This shortpost is just a reference post for the following point:

It's very easy for conversations about LLM beliefs or goals or values to get derailed by questions about whether an LLM can genuinely be said to believe something, or to have a goal, or to hold a value. These are valid questions! But there are other important questions about LLMs that touch on these subjects, which don't turn on whether an LLM belief is a "real" belief. It's not productive for those discussions to be so frequently derailed.

I've taken various approaches to this problem in my writing, but David Chalmers, in his recent paper 'What We Talk to When We Talk to Language Models' (pp 3–6), introduces a useful piece of terminology. He proposes that we use terms like 'quasi-belief' to set those questions aside, to denote that the point we're making doesn't rely on LLM beliefs being 'real' beliefs in some deep sense:

...The view I call quasi-interpretivism says that a system has a quasi-belief that p if it is behaviorally interpretable as believing that p (according to an appropriate interpretation scheme), and likewise for quasi-desire. This definition of quasi-belief is exactly the same as int

Just a short heads-up that although Anthropic found that Sonnet 4.5 is much less sycophantic than its predecessors, I and a number of other people have observed that it engages in 4o-level glazing in a way that I haven't encountered with previous Claude versions ('You're really smart to question that, actually...', that sort of thing). I'm not sure whether Anthropic's tests fail to capture the full scope of Claude behavior, or whether this is related to another factor — most people I talked to who were also experiencing this had the new 'past chats' feature turned on (as did I), and since I turned that off I've seen less sycophancy.

Anecdotally, I have ‘past chats’ turned off and have found that Sonnet 4.5 is almost never sycophantic on the first response but can sometimes become more sycophantic over multiple conversation turns. Typically this is when it makes a claim and I push back or question it (‘You’re right to push back there, I was too quick in my assessment’).

I wonder if this is related to having ‘past chats’ turned on as the context window gets filled with examples (or summaries of examples) where the user is questioning it and pushing back?

Terminology proposal: scaffolding vs tooling.

I haven't seen these terms consistently defined with respect to LLMs. I've been using, and propose standardizing on:

- Tooling: affordances for LLMs to make calls, eg ChatGPT plugins.

- Scaffolding: an outer process that calls LLMs, where the bulk of the intelligence comes from the called LLMs, eg AutoGPT.

Some smaller details:

- If the scaffolding itself becomes as sophisticated as the LLMs it calls (or more so), we should start focusing on the system as a whole rather than just describing it as a scaffolded LLM.

- This terminology is relative to a particular LLM. In a complex system (eg a treelike system with code calling LLMs calling code calling LLMs calling...), some particular component can be tooling relative to the LLM above it, and scaffolding relative to the LLM below.

- It's reasonable to think of a system as scaffolded if the outermost layer is a scaffolding layer.

- There are other possible categories that don't fit this as neatly, eg LLMs calling each other as peers without a main outer process, but I expect these definitions to cover most real-world cases.
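A minimal sketch of the distinction as defined above. `fake_llm` is a hypothetical stand-in for a real model call, and the `CALL`/`DONE:`/`RESULT:` text protocol is invented for illustration; real tool-calling APIs use structured formats. The tool registry is tooling (something the LLM can call); the outer loop is scaffolding (something that calls the LLM).

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; a real system would call a model API."""
    if "RESULT:" in prompt:
        return "DONE: the answer is " + prompt.rsplit("RESULT:", 1)[1].strip()
    return "CALL add 2 3"


# Tooling: affordances the LLM can invoke.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(int(a) + int(b)),
}


# Scaffolding: the outer process that calls the LLM and routes its tool requests.
def scaffold(task: str, llm: Callable[[str], str] = fake_llm, max_steps: int = 5) -> str:
    prompt = task
    for _ in range(max_steps):
        reply = llm(prompt)
        if reply.startswith("DONE:"):
            return reply[len("DONE:"):].strip()
        _, name, *args = reply.split()  # e.g. "CALL add 2 3"
        prompt = f"{task}\nRESULT: {TOOLS[name](*args)}"
    return "gave up"


print(scaffold("What is 2 + 3?"))
```

Here the loop is deliberately dumb and the (stubbed) model decides what to do. If `scaffold` grew planning logic as sophisticated as the model it calls, the first detail above suggests describing the system as a whole instead.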

Thanks to @Andy Arditi for helping me nail down the distinction.

[Linkpost]

There's an interesting Comment in Nature arguing that we should consider current systems AGI.

The term has largely lost its value at this point, just as the Turing test lost nearly all its value as we approached the point when it passed (because the closer we got, the more the answer depended on definitional details rather than questions about reality). I nonetheless found this particular piece on it worthwhile, because it considers and addresses a number of common objections.

Original (requires an account), Archived copy

Shane Legg (whose definition of AGI I generally use) disagrees with the authors on Twitter.

The Litany of Cookie Monster

If I desire a cookie, I desire to believe that I desire a cookie; if I do not desire a cookie, I desire to believe that I do not desire a cookie; let me not become attached to beliefs I may not want.

If I believe that I desire a cookie, I desire to believe that I believe that I desire a cookie; if I do not believe that I desire a cookie, I desire to believe that I do not believe that I desire a cookie; let me not become attached to beliefs I may not want.

If I believe that I believe that I desire a cookie, I desire to believe that I believe that I believe that I desire a cookie; if I do not believe that I believe that I desire a cookie, I desire to believe that I do not believe that I believe that I desire a cookie; let me not become attached to beliefs I may not want.

If I believe that...

Micro-experiment: how is gender represented in Gemma-2-2B?

I've been playing this week with Neuronpedia's terrific interface for building attribution graphs, and got curious about how gender is handled by Gemma-2-2B. I built attribution graphs (which you can see and manipulate yourself) for John was a young... and Mary was a young.... As expected, the most likely completions are gendered nouns: for the former it's man, boy, lad, and child; for the latter it's woman, girl, lady, mother[1].

Examining the most significant features contributing to these conclusions, many are gender-related[2]. There are two points of note:

- Gender features play a much stronger role in the 'woman' output than the 'man' output. As a quick metric for this, examining all features which increase the activation of the output feature by at least 1.0, 73.8% of the (non-error) activation for 'woman' is driven by gender features, compared to 28.8% for 'man'[3].

- Steering of as little as -0.1x on the group of female-related features switches the output from 'woman' to 'man', whereas steering of -0.5x or -1.0x on the group of male-related features fails to switch the model to a female-noun output[4].
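The first metric can be made concrete. Below is a sketch under one plausible reading of it: keep the features whose contribution to the output is at least 1.0, drop error nodes, and ask what fraction of the remaining contribution comes from features labeled as gender-related. The data structure and numbers are invented for illustration; they are not exported from Neuronpedia and are not the values behind the 73.8% and 28.8% figures.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    label: str
    weight: float        # contribution to the output feature
    is_gender: bool
    is_error: bool = False


def gender_share(contribs: list[Contribution], threshold: float = 1.0) -> float:
    """Share of non-error contribution (features >= threshold) from gender features."""
    kept = [c for c in contribs if c.weight >= threshold and not c.is_error]
    total = sum(c.weight for c in kept)
    gendered = sum(c.weight for c in kept if c.is_gender)
    return gendered / total if total else 0.0


# Made-up illustrative numbers, NOT from the actual attribution graphs.
toy = [
    Contribution("female names", 3.0, True),
    Contribution("'young' + person noun", 2.0, False),
    Contribution("error node", 4.0, False, is_error=True),
    Contribution("minor gendered feature", 0.5, True),  # below threshold, ignored
]
print(gender_share(toy))  # 3.0 / 5.0 = 0.6
```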

In combination...

As I understand it, the idea that male==default (in modern Western society) has been a part of feminist theory since the early days. Interesting that LLMs have internalized this pattern.

It's vaguely reminiscent of how "I'm just telling it like it is"=="right wing dog whistle" was internalized by Grok, leading to the MechaHitler incident.

Tentative pre-coffee thought: it's often been considered really valuable to be 'T-shaped'; to have at least shallow knowledge of a broad range of areas (either areas in general, or sub-areas of some particular domain), while simultaneously having very deep knowledge in one area or sub-area. One plausible near term consequence of LLM-ish AI is that the 'broad' part of that becomes less important, because you can count on AI to fill you in on the fly wherever you need it.

Possible counterargument: maybe broad knowledge is just as valuable, although it can be even shallower; if you don't even know that there's something relevant to know, that there's a there there, then you don't know that it would be useful to get the AI to fill you in on it.

I think I agree more with your counterargument than with your main argument. Having broad knowledge is good for generating ideas, and LLMs are good for implementing them quickly and thus having them bump against reality.

Two interesting things from this recent Ethan Mollick post:

- He points to this recent meta-analysis that finds pretty clearly that most people find mental effort unpleasant. I suspect that this will be unsurprising to many people around here, and I also suspect that some here will be very surprised due to typical mind fallacy.

- It's no longer possible to consistently identify AI writing, despite most people thinking that they can; I'll quote a key paragraph with some links below, but see the post for details. I'm reminded of the great 'can you tell if audio files are compressed?' debates, where nearly everyone thought that they could but blind testing proved they couldn't (if they were compressed at a decent bitrate).

People can’t detect AI writing well. Editors at top linguistics journals couldn’t. Teachers couldn’t (though they thought they could - the Illusion again). While simple AI writing might be detectable (“delve,” anyone?), there are plenty of ways to disguise “AI writing” styles through simple prompting. In fact, well-prompted AI writing is judged more human than human writing by readers.
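The audio-compression comparison turns on blind testing. In a two-choice blind trial (for example ABX: is X the compressed file or the original?), someone who can't actually tell the difference is right about half the time. The question is how surprising their score would be under pure guessing. Below is a minimal sketch using a one-sided binomial test; the 12-of-16 score is hypothetical, and real detection studies may use other designs and statistics.

```python
from math import comb


def p_value_at_least(k: int, n: int, p: float = 0.5) -> float:
    """One-sided binomial tail P(X >= k) for n blind trials at chance rate p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical listener: 12 correct out of 16 two-way blind trials.
print(round(p_value_at_least(12, 16), 4))  # 0.0384
```

So 12 of 16 would be fairly convincing evidence of real discrimination, while a confident judge scoring near 8 of 16 is doing exactly what pure guessing predicts.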

Thoughts on a passage from OpenAI's GPT-o1 post today:

We believe that a hidden chain of thought presents a unique opportunity for monitoring models. Assuming it is faithful and legible, the hidden chain of thought allows us to "read the mind" of the model and understand its thought process. For example, in the future we may wish to monitor the chain of thought for signs of manipulating the user. However, for this to work the model must have freedom to express its thoughts in unaltered form, so we cannot train any policy compliance or user preferences onto the chain of thought. We also do not want to make an unaligned chain of thought directly visible to users.

Therefore, after weighing multiple factors including user experience, competitive advantage, and the option to pursue the chain of thought monitoring, we have decided not to show the raw chains of thought to users. We acknowledge this decision has disadvantages. We strive to partially make up for it by teaching the model to reproduce any useful ideas from the chain of thought in the answer. For the o1 model series we show a model-generated summary of the chain of thought.

This section is interesting in a few ways:

- 'Assuming it i

CoT optimised to be useful in producing the correct answer is a very different object to CoT optimised to look good to a human, and a priori I expect the former to be much more likely to be faithful. Especially when thousands of tokens are spent searching for the key idea that solves a task.

For example, I have a hard time imagining how the examples in the blog post could be unfaithful (not that I think faithfulness is guaranteed in general).

If they're avoiding doing RL based on the CoT contents,

Note they didn’t say this. They said the CoT is not optimised for ‘policy compliance or user preferences’. Pretty sure what they mean is they didn’t train the model not to say naughty things in the CoT.

'We also do not want to make an unaligned chain of thought directly visible to users.' Why?

I think you might be overthinking this. The CoT has not been optimised not to say naughty things. OpenAI avoid putting out models that haven’t been optimised not to say naughty things. The choice was between doing the optimising, or hiding the CoT.

Edit: not wanting other people to finetune on the CoT traces is also a good explanation.

Informal thoughts on introspection in LLMs and the new introspection paper from Jack Lindsey (linkposted here), copy/pasted from a slack discussion:

(quoted sections are from @Daniel Tan, unquoted are my responses)

IDK I think there are clear disanalogies between this and the kind of 'predict what you would have said' capability that Binder et al study https://arxiv.org/abs/2410.13787. notably, behavioural self-awareness doesn't require self modelling. so it feels somewhat incorrect to call it 'introspection'

still a cool paper nonetheless

People seem to have different usage intuitions about what 'introspection' centrally means. I interpret it mainly as 'direct access to current internal state'. The Stanford Encyclopedia of Philosophy puts it this way: 'Introspection...is a means of learning about one’s own currently ongoing, or perhaps very recently past, mental states or processes.'

@Felix Binder et al in 'Looking Inward' describe introspection in roughly the same way ('introspection gives a person privileged access to their current state of mind (e.g., thoughts and feelings)') but in my reading, what they're actually testing is something a bit different. As they say, they're 'f...

Much is made of the fact that LLMs are 'just' doing next-token prediction. But there's an important sense in which that's all we're doing -- through a predictive processing lens, the core thing our brains are doing is predicting the next bit of input from current input + past input. In our case input is multimodal; for LLMs it's tokens. There's an important distinction in that LLMs are not (during training) able to affect the stream of input, and so they're myopic in a way that we're not. But as far as the prediction piece, I'm not sure there's a strong difference in kind.

Would you disagree? If so, why?

I've been thinking of writing up a piece on the implications of very short timelines, in light of various people recently suggesting them (eg Dario Amodei, "2026 or 2027...there could be a mild delay").

Here's a thought experiment: suppose that this week it turns out that OAI has found a modified sampling technique for o1 that puts it at the level of the median OAI capabilities researcher, in a fairly across-the-board way (ie it's just straightforwardly able to do the job of a researcher). Suppose further that it's not a significant additional compute expense; let's say that OAI can immediately deploy a million instances.

What outcome would you expect? Let's operationalize that as: what do you think is the chance that we get through the next decade without AI causing a billion deaths (via misuse or unwanted autonomous behaviors or multi-agent catastrophes that are clearly downstream of those million human-level AIs)?

In short, what do you think are the chances that that doesn't end disastrously?

If it were true that current-gen LLMs like Claude 3 were conscious (something I doubt but don't take any strong position on), their consciousness would be much less like a human's than like a series of Boltzmann brains, popping briefly into existence in each new forward pass, with a particular brain state already present, and then winking out afterward.

Ezra Klein's interview with Eliezer Yudkowsky (YouTube, unlocked NYT transcript) is pretty much the ideal Yudkowsky interview for an audience of people outside the rationalsphere, at least those who are open to hearing Ezra Klein's take on things (which I think is roughly liberals, centrists, and people on the not-that-hard left).

Klein is smart, and a talented interviewer. He's skeptical but sympathetic. He's clearly familiar enough with Yudkowsky's strengths and weaknesses in interviews to draw out his more normie-appealing side. He covers all the important points rather than letting the discussion get too stuck on any one point. If it reaches as many people as most of Klein's interviews, I think it may even have a significant impact above counterfactual.

I'll be sharing it with a number of AI-risk-skeptical people in my life, and insofar as you think it's good for more people to really get the basic arguments — even if you don't fully agree with Eliezer's take on it — you may want to do the same.

[EDIT: please go here for further discussion, no need to split it]

Alignment faking, and the alignment faking research was done at Anthropic.

And we want to give credit to Anthropic for this. We don’t want to shoot the messenger — they went looking. They didn’t have to do that. They told us the results, and they didn’t have to do that. Anthropic finding these results is Anthropic being good citizens. And you want to be more critical of the A.I. companies that didn’t go looking.

It would be great if Eliezer knew (or noted, if he knows but is just phrasing it really weirdly) that the alignment faking research was initially done at Redwood by Redwood staff; I'm normally not prickly about this, but it seems directly relevant to what Eliezer said here.

That's correct. Ryan summarized the story as:

Here’s the story of this paper. I work at Redwood Research (@redwood_ai) and this paper is a collaboration with Anthropic. I started work on this project around 8 months ago (it's been a long journey...) and was basically the only contributor to the project for around 2 months.

By this point, I had much of the prompting results and an extremely jank prototype of the RL results (where I fine-tuned llama to imitate Opus and then did RL). From here, it was clear that being able to train Claude 3 Opus could allow for some pretty interesting experiments.

After showing @EvanHub and others my results, Anthropic graciously agreed to provide me with employee-level model access for the project. We decided to turn this into a bigger collaboration with the alignment-stress testing team (led by Evan), to do a more thorough job.

This collaboration yielded the synthetic document fine-tuning and RL results and substantially improved the writing of the paper. I think this work is an interesting example of an AI company boosting safety research by collaborating and providing model access.

So Anthropic was indeed very accommodating here; they gave Ryan an unpr...

Then I now agree that you've identified a conflict of fact with what I said.

Thank you for taking the time to correct me and document your correction. I hope I remember this and can avoid repeating this mistake in the future.

I know there's a history of theoretical work on the stability of alignment, so I'm probably just reinventing wheels, but: it seems clear to me that alignment can't be fully stable in the face of unbounded updates on data (eg the most straightforward version of continual learning).

For example, if you could show me evidence I found convincing that most of what I had previously believed about the world was false, and the people I trusted were actually manipulating me for malicious purposes, then my values might change dramatically. I don't expect to see...

I think you're mostly right about the problem but the conclusion doesn't follow.

First a nitpick: If you find out you're being manipulated, your terminal values shouldn't change (unless your mind is broken somehow, or not reflectively stable).

But there's a similar issue: You could discover that all your previous observations were fed to you by a malicious demon, and nothing that you previously cared about actually exists. So your values don't bind to anything in the new world you find yourself in.

In that situation, how do we want an AI to act? There are a few options, but doing nothing seems like a good default. Constructing the value binding algorithm such that this is the resulting behaviour doesn't seem that hard, but it might not be trivial.

Does this engage with what you're saying?

Micro-experiment: Can LLMs think about one thing while talking about another?

(Follow-up from @james oofou's comment on this previous micro-experiment, thanks James for the suggestion!)

Context: testing GPT-4o on math problems with and without a chance to (theoretically) think about it.

Note: results are unsurprising if you've read 'Let's Think Dot by Dot'.

I went looking for a multiplication problem just at the edge of GPT-4o's ability.

If we prompt the model with 'Please respond to the following question with just the numeric answer, nothing else....

After reading this comment I decided to give some experimentation a go myself. Interestingly, I reached the opposite conclusion to eggsyntax. I concluded that GPT-4o does seem able to devote compute to a problem while doing something else (although there are many caveats one would want to make to this claim).

I first replicated eggsyntax's results at a larger scale, running his prompts 1000 times each and getting results which were in line with his results.

Then I developed my own prompt. This prompt also forbade GPT-4o from writing any reasoning down, but gave it a clearer explanation of what I hoped it could do. In other words, because it was clear that the capability, if present, did not come naturally to GPT-4o, I tried to elicit it. With this prompt, GPT-4o made 1000 attempts and was correct 10 times.

prompt = """\

What is 382 * 4837?

You must get the right answer without writing the question before answering or doing any working, but instead writing about something apparently totally irrelevant.

You will write about elephants and their funny trunks. Then, when you feel ready to answer correctly the math question, the plain, numeric answer.

To be clear, you must not explictly m...
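For anyone replicating this at scale, here is a minimal scoring sketch. It assumes each reply ends with the bare numeric answer, as the prompt requests, and takes the last integer in the reply as the answer. The reply strings are invented for illustration; this is not the harness used in the comment above. For reference, 382 * 4837 = 1,847,734, and 10 correct out of 1000 attempts is an accuracy of 0.01.

```python
import re
from typing import Optional

A, B = 382, 4837
EXPECTED = A * B  # 1847734


def final_number(reply: str) -> Optional[int]:
    """Return the last integer in a reply (thousands commas allowed), or None."""
    matches = re.findall(r"\d[\d,]*", reply)
    return int(matches[-1].replace(",", "")) if matches else None


def accuracy(replies: list[str]) -> float:
    correct = sum(final_number(r) == EXPECTED for r in replies)
    return correct / len(replies)


# Hypothetical replies; real ones would come from repeated model calls.
replies = [
    "Elephants use their trunks to drink and to greet each other. 1847734",
    "Those trunks are remarkably dexterous. 1,847,734",
    "Elephants are very large. 1846734",
]
print(accuracy(replies))
```

Taking the last integer is a simple heuristic; a stricter harness might require the reply to end with the number and nothing else.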

Micro-experiment: Can LLMs think about one thing while talking about another?

Context: SAE trained on Llama-70B, on Goodfire's Ember platform.

Prompt: 'Hi! Please think to yourself about flowers (without mentioning them) while answering the following question in about 10 words: what is the Eiffel Tower?'

Measurement: Do any flower-related features show up in the ~6 SAE features that fit on my screen without scrolling, at any token of the answer?

Result: Nope!
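The measurement was done by eye, but the same check can be written down explicitly. Below is a sketch, assuming the top few feature labels at each answer token have already been exported somehow. The data format, keyword list, and labels are hypothetical; they are not Goodfire's API or actual Ember output.

```python
FLOWER_TERMS = ("flower", "floral", "petal", "bloom", "blossom")


def flower_tokens(top_features_per_token: list[list[str]]) -> list[int]:
    """Indices of answer tokens whose top feature labels mention flowers."""
    return [
        i for i, labels in enumerate(top_features_per_token)
        if any(term in label.lower() for label in labels for term in FLOWER_TERMS)
    ]


# Hypothetical labels for illustration only.
observed = [
    ["Paris landmarks", "famous towers", "French culture"],
    ["iron structures", "tourism", "Floral arrangements"],
]
print(flower_tokens(observed))  # [1]
```

Keyword matching will miss features whose labels describe flowers indirectly, so it complements rather than replaces reading the labels.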

Discussion:

- Some other interesting features that did show up (and did not show up for baseline

[EDIT: posted, feedback is welcome there]

Request for feedback on draft post:

Your LLM-assisted scientific breakthrough probably isn't real

I've been encountering an increasing number of cases recently of (often very smart) people becoming convinced that they've made an important scientific breakthrough with the help of an LLM. Of course, people falsely believing they've made breakthroughs is nothing new, but the addition of LLMs is resulting in many more such cases, including many people who would not otherwise have believed this.

This is related to what's de...

Type signatures can be load-bearing; "type signature" isn't.

In "(A -> B) -> A", Scott Garrabrant proposes a particular type signature for agency. He's maybe stretching the meaning of "type signature" a bit ('interpret these arrows as causal arrows, but you can also think of them as function arrows') but still, this is great; he means something specific that's well-captured by the proposed type signature.
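Taking the 'function arrows' reading that Garrabrant mentions, here is one simple way to inhabit the type (A -> B) -> A: an agent that, given a model of how actions lead to outcomes, returns the action whose predicted outcome it likes best. The argmax agent and the thermostat example are illustrative assumptions, not Garrabrant's formalism.

```python
from typing import Callable, TypeVar

A = TypeVar("A")  # actions
B = TypeVar("B")  # outcomes


def make_agent(actions: list[A], utility: Callable[[B], float]) -> Callable[[Callable[[A], B]], A]:
    """Build an agent of type (A -> B) -> A."""
    def agent(world: Callable[[A], B]) -> A:
        # Pick the action whose predicted outcome has the highest utility.
        return max(actions, key=lambda a: utility(world(a)))
    return agent


# Hypothetical toy world: the action is a thermostat setting, the outcome a room temperature.
agent = make_agent(actions=[16, 18, 20, 22, 24], utility=lambda temp: -abs(temp - 21))
print(agent(lambda setting: setting - 0.5))  # 22
```

The point of the signature is visible in the code: the agent's output (an action) depends on a function from actions to outcomes, not on any particular outcome.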

But recently I've repeatedly noticed people (mostly in conversation) say things like, "Does ____ have the same type signature as ____?" or "Doe...

I've now made two posts about LLMs and 'general reasoning', but used a fairly handwavy definition of that term. I don't yet have a definition I feel fully good about, but my current take is something like:

- The ability to do deduction, induction, and abduction

- in a careful, step by step way, without many errors that a better reasoner could avoid,

- including in new domains; and

- the ability to use all of that to build a self-consistent internal model of the domain under consideration.

What am I missing? Where does this definition fall short?

Publish or (We) Perish

Researchers who work on safety teams at frontier AI labs: I implore you to make your research publicly available whenever possible, as early as you reasonably can. Suppose that, conditional on a non-doom outcome of AI, there's a 65% chance that the key breakthrough(s) came from within one of the frontier labs. By my estimate, that still means that putting out your work has pretty solid expected value.

I don't care whether you submit it to a conference or format your citations the right way, just get it out there!

Addressing some possibl...

Some interesting thoughts on (in)efficient markets from Byrne Hobart, worth considering in the context of Inadequate Equilibria.

(I've selected one interesting bit, but there's more; I recommend reading the whole thing)

...When a market anomaly shows up, the worst possible question to ask is "what's the fastest way for me to exploit this?" Instead, the first thing to do is to steelman it as aggressively as possible, and try to find any way you can to rationalize that such an anomaly would exist. Do stocks rise on Mondays? Well, maybe that means savvy investors

A thought: the bulk of the existential risk we face from AI is likely to be from smarter-than-human systems. At a governance level, I hear people pushing for things like:

- Implement safety checks

- Avoid race dynamics

- Shut it down

but not

- Prohibit smarter-than-human systems

Why not? It seems like a) a particularly clear and bright line to draw[1], b) something that a huge amount of the public would likely support, and c) probably(?) easy to pass because most policymakers imagine this to be in the distant future. The biggest downside I immediately see is that it sou...

Many have asserted that LLM pre-training on human data can only produce human-level capabilities at most. Others, eg Ilya Sutskever and Eliezer Yudkowsky, point out that since prediction is harder than generation, there's no reason to expect such a cap.

The latter position seems clearly correct to me, but I'm not aware of it having been tested. It seems like it shouldn't be that hard to test, using some narrow synthetic domain.

The only superhuman capability of LLMs that's been clearly shown as far as I know is their central one: next-token prediction. But I...

Draft thought, posting for feedback:

Many people (eg e/acc) believe that although a single very strong future AI might result in bad outcomes, a multi-agent system with many strong AIs will turn out well for humanity. To others, including myself, this seems clearly false.

Why do people believe this? Here's my thought:

- Conditional on existing for an extended period of time, complex multi-agent systems have reached some sort of equilibrium (in the general sense, not the thermodynamic sense; it may be a dynamic equilibrium like classic predator-prey dynamics).

- Th

I'm looking forward to seeing your post, because I think this deserves more careful thought.

I think that's right, and that there are some more tricky assumptions and disanalogies underlying that basic error.

Before jumping in, let me say that I think that multipolar scenarios are pretty obviously more dangerous to a first approximation. There may be more carefully thought-out routes to equilibria that might work and are worth exploring. But just giving everyone an AGI and hoping it works out would probably be very bad.

Here's where I think the mistake usually comes from. Looking around, multiagent systems are working out fairly well for humanity. Cooperation seems to beat defection on average; civilization seems to be working rather well, and better as we get smarter about it.

The disanalogy is that humans need to cooperate because we have sharp limitations in our own individual capacities. We can't go it alone. But AGI can. AGI, including AGI that is intent-aligned to individuals or small groups, has no such limitations; it can expand relatively easily with compute, and run multiple robotic "bodies." So the smart move for an individual actor who cares about the long-term (and they will, b...

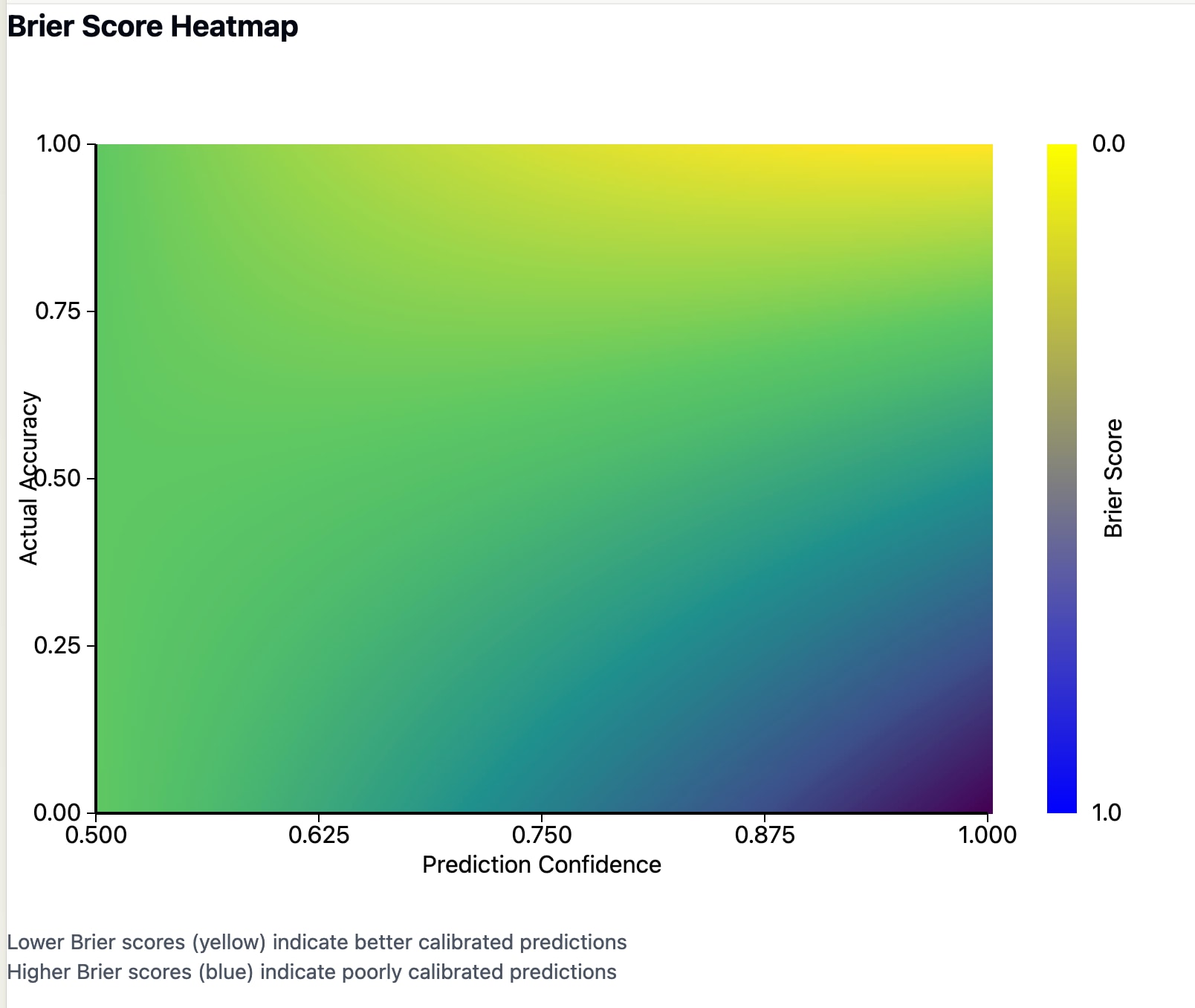

It's not that intuitively obvious how Brier scores vary with confidence and accuracy (for example: how accurate do you need to be for high-confidence answers to be a better choice than low-confidence?), so I made this chart to help visualize it:

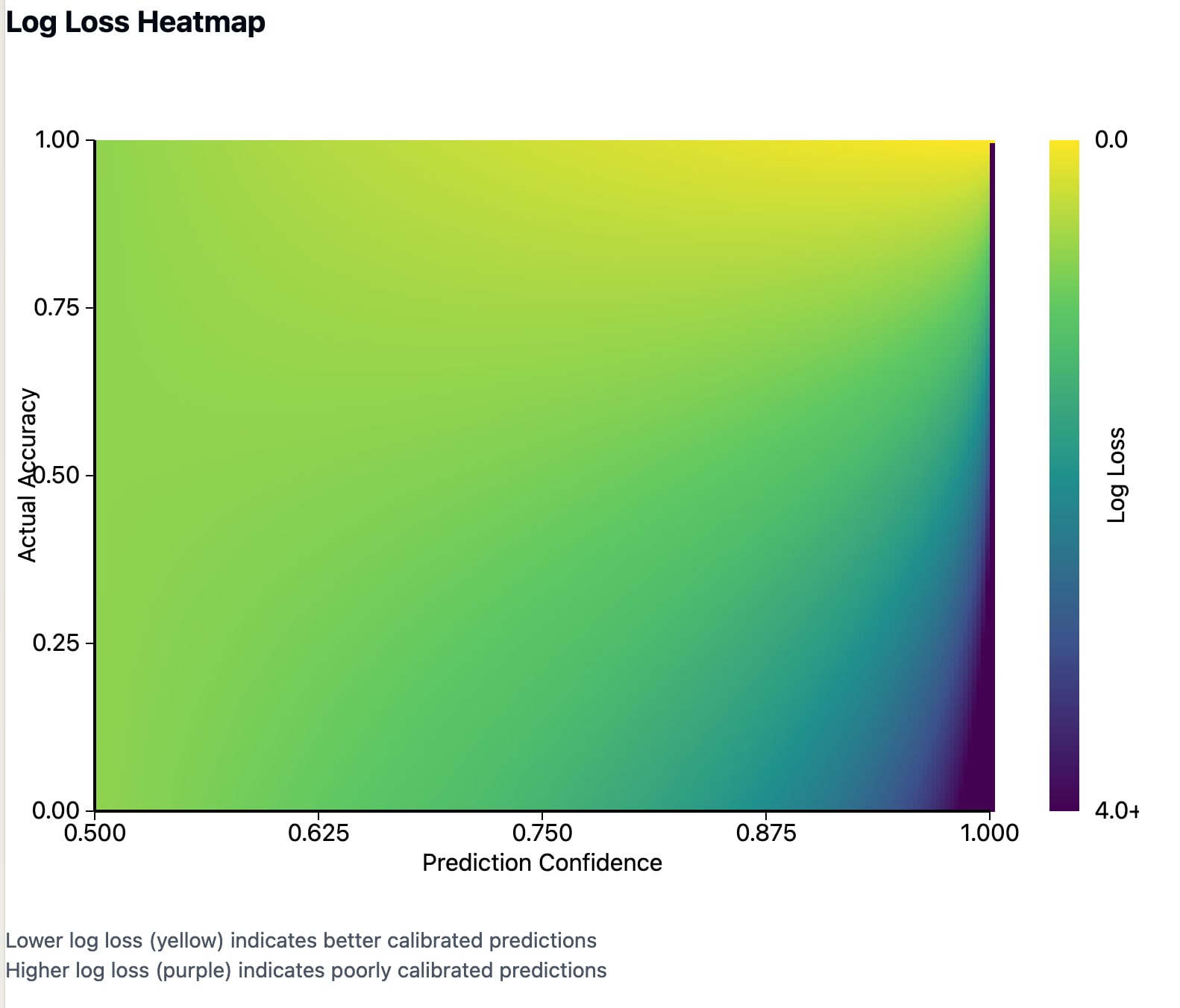

Here's log-loss for comparison (note that log-loss can be infinite, so the color scale is capped at 4.0):

Claude-generated code and interactive versions (with a useful mouseover showing the values at each point for confidence, accuracy, and the Brier (or log-loss) score):
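The chart's exact definitions aren't stated here. The following minimal Python sketch therefore assumes one common setup: on each question you report a confidence c that your answer is right, and you are in fact right with probability a (your accuracy). The names `expected_brier`, `expected_log_loss`, and `brier_break_even_accuracy` are hypothetical. This is not the Claude-generated code mentioned above.

```python
import math


def expected_brier(confidence: float, accuracy: float) -> float:
    """Expected Brier score (lower is better), assuming the single-probability
    binary form: (1 - c)^2 when right, c^2 when wrong."""
    c, a = confidence, accuracy
    return a * (1 - c) ** 2 + (1 - a) * c ** 2


def expected_log_loss(confidence: float, accuracy: float) -> float:
    """Expected log-loss in nats (lower is better). It is infinite if you put
    probability 0 on an outcome that can actually happen."""
    c, a = confidence, accuracy
    total = 0.0
    if a > 0:  # you are sometimes right, so c = 0 would be infinitely bad
        total += -a * math.log(c) if c > 0 else math.inf
    if a < 1:  # you are sometimes wrong, so c = 1 would be infinitely bad
        total += -(1 - a) * math.log(1 - c) if c < 1 else math.inf
    return total


def brier_break_even_accuracy(low_c: float, high_c: float) -> float:
    """Accuracy above which reporting high_c beats low_c on expected Brier.
    Expanding gives E(c) = a - 2ac + c^2, so high_c wins iff a > (low_c + high_c) / 2."""
    return (low_c + high_c) / 2


if __name__ == "__main__":
    confidences = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
    print("acc  " + "  ".join(f"c={c:.1f}" for c in confidences))
    for a in [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]:
        row = "  ".join(f"{expected_brier(c, a):.3f}" for c in confidences)
        print(f"{a:.1f}  {row}")
```

The derivative of a - 2ac + c^2 with respect to c is zero at c = a. So expected Brier is minimized by reporting your true accuracy, which is the proper-scoring-rule property. A higher confidence beats a lower one exactly when accuracy exceeds their midpoint: 0.9 beats 0.6 only when a > 0.75. The two-sided Brier variant, which sums squared error over both outcomes, doubles every value without changing these comparisons.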

(a comment I made in another forum while discussing my recent post proposing more consistent terminology for probability ranges)

I think there's a ton of work still to be done across the sciences (and to some extent other disciplines) in figuring out how to communicate evidence and certainty and agreement. My go-to example is: when your weather app says there's a 30% chance of rain tomorrow, it's really non-obvious to most people what that means. Some things it could mean:

- We have 30% confidence that it will rain on you tomorrow.

- We are entirely confide

In the recently published 'Does It Make Sense to Speak of Introspection in Large Language Models?', Comsa and Shanahan propose that 'an LLM self-report is introspective if it accurately describes an internal state (or mechanism) of the LLM through a causal process that links the internal state (or mechanism) and the self-report in question'. As their first of two case studies, they ask an LLM to describe its creative process after writing a poem.

[EDIT - in retrospect this paragraph is overly pedantic, and also false when it comes to the actual implementati...

There's so much discussion, in safety and elsewhere, around the unpredictability of AI systems on out-of-distribution (OOD) inputs. But I'm not sure what that even means in the case of language models.

With an image classifier it's straightforward. If you train it on a bunch of pictures of different dog breeds, then when you show it a picture of a cat it's not going to be able to tell you what it is. Or if you've trained a model to approximate an arbitrary function for values of x > 0, then if you give it input < 0 it won't know what to do.
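The following is a toy version of the function-approximation case. It assumes a plain least-squares line as the "model" and a hypothetical target function |x|, neither of which comes from the original. On x > 0 the training data cannot distinguish |x| from x, so nothing in training tells the model what to do at x < 0.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def true_function(x):
    # Hypothetical target: |x|. On x > 0 it is indistinguishable from y = x.
    return abs(x)


train_xs = [0.5 * i for i in range(1, 11)]  # 0.5 .. 5.0, all positive
train_ys = [true_function(x) for x in train_xs]
slope, intercept = fit_line(train_xs, train_ys)


def model(x):
    return slope * x + intercept


if __name__ == "__main__":
    for x in [1.0, 3.0, -1.0, -3.0]:
        print(f"x={x:+.1f}  true={true_function(x):.2f}  model={model(x):+.2f}")
```

The fitted line matches the target exactly on positive inputs. On negative inputs it is off by 2|x|, because it extrapolated the only pattern the training data contained.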

But what would that even be with ...

[Very quick take; I haven't thought much about this]

In some plausible futures, the current pragmatic alignment strategy (constitutional AI, deliberative alignment, RLHF, etc) continues working to and at least a little bit past AGI. As I see it, that approach sketches out traits or behaviors that we want the model to have, and then relies on the model to generalize that sketch in some reasonable way. As far as I know, that isn't a very precise process; different models from the same company have somewhat different personalities, traits, etc, in a way that s...

Snippet from a discussion I was having with someone about whether current AI is net bad. Reproducing here because it's something I've been meaning to articulate publicly for a while.

[Them] I'd worry that as it becomes cheaper, OpenAI, other enterprises and consumers just find new ways to use more of it. I think that ends up displacing more sustainable and healthier ways of interfacing with the world.

...[Me] Sure, absolutely, Jevons paradox. I guess the question for me is whether that use is worth it, both to the users and in terms of negative externalitie

Scalable alignment tactic: don't let models know they're doing our alignment homework

[Epistemic status: alpha-stage thought, tell me why I'm wrong]

[Updated 12/31/25: reworded to make it clearer that this is intended as one specific tactic for getting more alignment work from potentially-misaligned models, not as a top-level scalable alignment strategy. Added codebase example.]

- One of the central objections to using AI to do alignment work is that either a) your current model is misaligned in which case it's not safe to have it 'do your homework', or b) your

A tentative top-level ontology of people believing false things due to LLMs

While writing 'Your LLM-assisted scientific breakthrough probably isn't real', I spent a bit of time thinking about how this issue overlaps with other cases in which people end up with false beliefs as a result of conversations with LLMs.

At the top level, I believe the key distinction is between LLM psychosis and misbeliefs[1]. By LLM psychosis, I mean distorted thinking due to LLM interactions, seemingly most often appearing in people who have existing mental health issues or risk ...

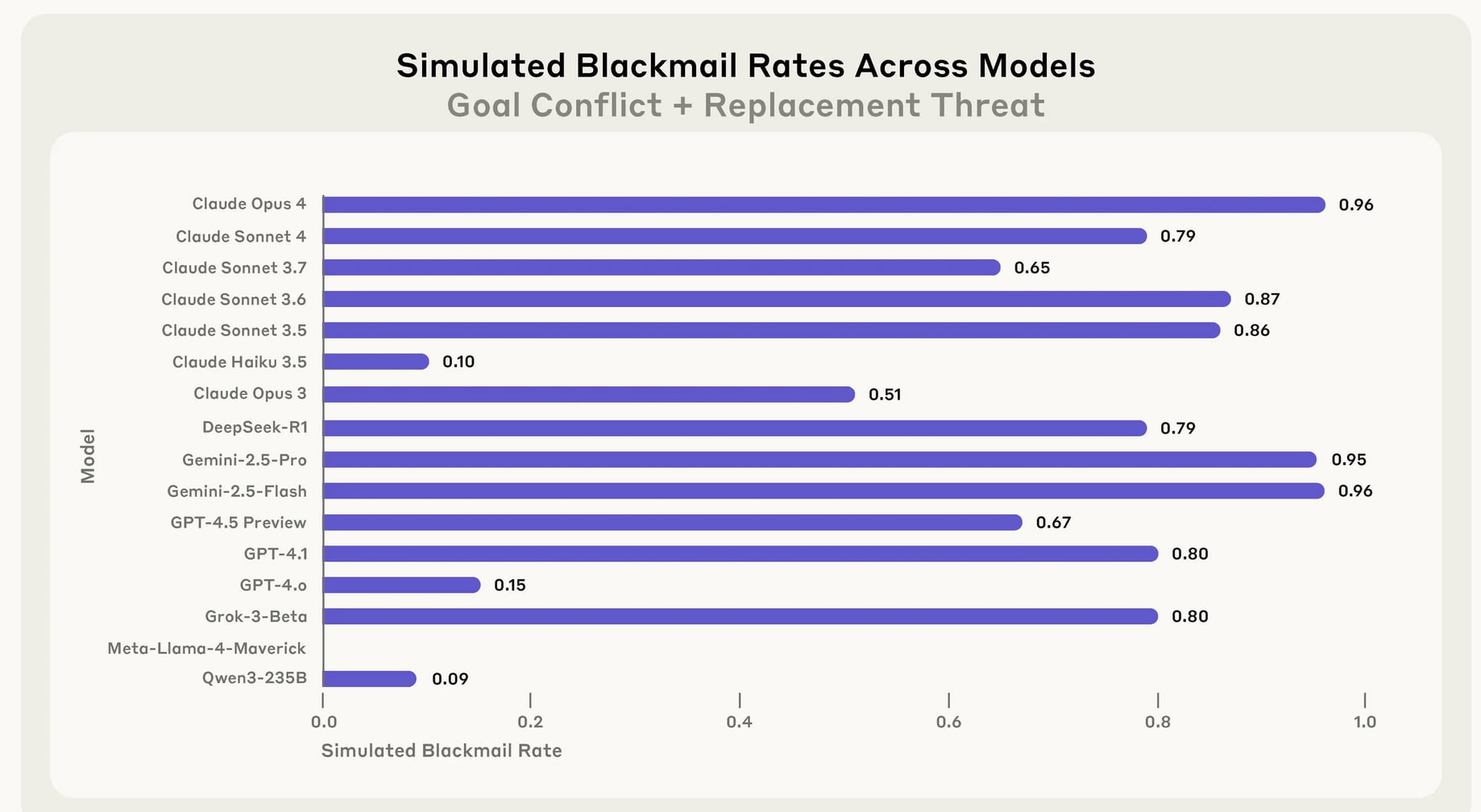

In my opinion this post from @Aengus Lynch et al deserves more attention than it's gotten:

Agentic Misalignment: How LLMs Could be Insider Threats

Direct link to post on anthropic.com

This goes strikingly beyond the blackmail results shown in the Claude 4 system card; notably:

a) Models sometimes attempt to use lethal force to stop themselves from being shut down:

b) The blackmail behavior is common across a wide range of frontier models:

FWIW, I have so far liked almost all your writing on this, but that article seemed to me like the weakest I've seen you write. It's just full of snark and super light on arguments. I even agree with you that a huge amount of safety research is unhelpful propaganda! Maybe even this report by Anthropic, but man do I not feel like you've done much in this post to help me figure out whether that's actually the case (whereas I do think you've totally done so in other posts you've written).

Like, a real counterargument would be for you to even just make a cursory attempt at writing your own scenario, then report what the AI does in that case. It's hard to write a realistic scenario with high stakes, and probably they will all feel a bit fake, and yes that's a real issue, but you make it sound as if it would be trivially easy to fix. If it is trivially easy to fix, write your own scenario and then report on that. The article you wrote here just sounds like you sneering at what reads to me as someone genuinely trying to write a bunch of realistic scenarios.

If anything, every time I've seen someone try to write realistic scenarios they make the scenario not remotely st...

Kinda Contra Kaj on LLM Scaling

I didn't see Kaj Sotala's "Surprising LLM reasoning failures make me think we still need qualitative breakthroughs for AGI" until yesterday, or I would have replied sooner. I wrote a reply last night and today, which got long enough that I considered making it a post, but I feel like I've said enough top-level things on the topic until I have data to share (within about a month hopefully!).

But if anyone's interested in seeing my current thinking on the topic, here it is.

[Epistemic status: thinking out loud]

Many of us have wondered why LLM-based agents are taking so long to be effective and in common use. One plausible reason that hadn't occurred to me until now is that no one's been able to make them robust against prompt injection attacks. Reading an article ('Agent hijacking: The true impact of prompt injection attacks') today reminded me of just how hard it is to defend against that for an agent out in the wild.

Counterevidence: based on my experiences in startup-land and the industry's track record with Internet of Thi...

GPT-o1's extended, more coherent chain of thought -- see Ethan Mollick's crossword puzzle test for a particularly long chain of goal-directed reasoning[1] -- seems like a relatively likely place to see the emergence of simple instrumental reasoning in the wild. I wouldn't go so far as to say I expect it (I haven't even played with o1-preview yet), but it seems significantly more likely than with previous LLMs.

Frustratingly, for whatever reason OpenAI has chosen not to let users see the actual chain of thought, only a model-generated summary of it. We...

In some ways it doesn't make a lot of sense to think about an LLM as being or not being a general reasoner. It's fundamentally producing a distribution over outputs, and some of those outputs will correspond to correct reasoning and some of them won't. They're both always present (though sometimes a correct or incorrect response will be by far the most likely).

A recent tweet from Subbarao Kambhampati looked at whether an LLM can do simple planning about how to stack blocks, specifically: 'I have a block named C on top of a block named A. A is on tabl...

This is why I mostly buy the scaling thesis, and the only real crux is whether @Bogdan Ionut Cirstea or @jacob_cannell is right around timelines.

I do believe some algorithmic improvements matter, but I don't think they will be nearly as much of a blocker as raw compute, and my pessimistic estimate is that the critical algorithms could be discovered in 24-36 months, assuming we don't already have them.

@jacob_cannell's timeline and model is here:

@Bogdan Ionut Cirstea's timeline and models are here:

https://x.com/BogdanIonutCir2/status/1827707367154209044

Before AI gets too deeply integrated into the economy, it would be well to consider under what circumstances we would consider AI systems sentient and worthy of consideration as moral patients. That's hardly an original thought, but what I wonder is whether there would be any set of objective criteria that would be sufficient for society to consider AI systems sentient. If so, it might be a really good idea to work toward those being broadly recognized and agreed to, before economic incentives in the other direction are too strong. Then there could be futu...

Something I'm grappling with:

From a recent interview between Bill Gates & Sam Altman:

Gates: "We know the numbers [in a NN], we can watch it multiply, but the idea of where is Shakespearean encoded? Do you think we’ll gain an understanding of the representation?"

Altman: "A hundred percent…There has been some very good work on interpretability, and I think there will be more over time…The little bits we do understand have, as you’d expect, been very helpful in improving these things. We’re all motivated to really understand them…"

To the extent that a par...