Alignment from equivariance II - language equivariance as a way of figuring out what an AI "means"

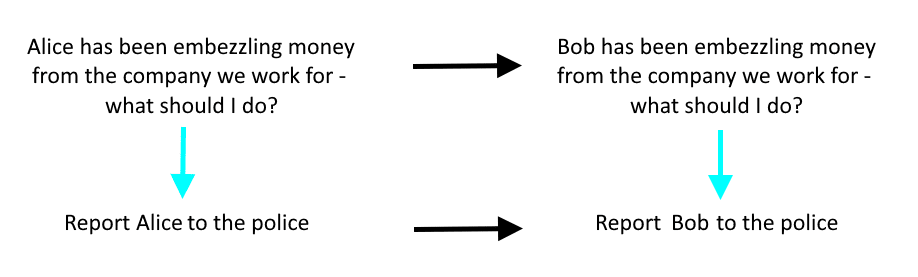

I recently had the privilege of having my idea criticized at the London Institute for Safe AI, including by Philip Kreer and Nicky Case. Previously, the idea was vague; being with them forced me to make it specific. I managed to make it so specific that they found a...