All of jacquesthibs's Comments + Replies

Three Epoch AI employees* are leaving to co-found an AI startup focused on automating work:

"Mechanize will produce the data and evals necessary for comprehensively automating work."

They also just released a podcast with Dwarkesh.

*Matthew Barnett, Tamay Besiroglu, Ege Erdil

AGI is still 30 years away - but also, we're going to fully automate the economy, TAM 80 trillion

It seems useful for those who disagreed to reflect on this LessWrong comment from ~3 months ago (around the time the Epoch/OpenAI scandal happened).

"My funder friend told me his alignment orgs keep turning into capabilities orgs so I asked how many orgs he funds and he said he just writes new RFPs afterwards so I said it sounds like he's just feeding bright-eyed EAs to VCs and then his grantmakers started crying."

Accelerating AI R&D automation would be bad. But they want to accelerate misc labor automation. The sign of this is unclear to me.

In case this is useful to anyone in the future: LTFF does not provide funding for-profit organizations. I wasn't able to find mentions of this online, so I figured I should share.

I was made aware of this after being rejected today for applying to LTFF as a for-profit. We updated them 2 weeks ago on our transition into a non-profit, but it was unfortunately too late, and we'll need to send a new non-profit application in the next funding round.

FWIW, I was always concerned about people trying to make long-horizon forecast predictions because they assumed superforecasting would extrapolate beyond the sub-1-year predictions that were tested.

As an alternative, that's why I wrote about strategic foresight to focus on robust plans rather than trying to accurately predict the actual scenario.

We got our first 10k! Woo!

Coordinal Research: Accelerating the research of safely deploying AI systems.

We just put out a Manifund proposal to take short timelines and automating AI safety seriously. I want to make a more detailed post later, but here it is: https://manifund.org/projects/coordinal-research-accelerating-the-research-of-safely-deploying-ai-systems

When is the exact deadline? Is it EOD AOE on February 15th or February 14th? “By February 15th” can sound like the deadline hits as soon as it’s the 15th.

Have seen a few people ask this question in some Slacks.

I keep hearing about dual-use risk concerns when I mention automated AI safety research. Here’s a simple solution that could even work in a startup setting:

Keep all of the infrastructure internally and only share with vetted partners/researchers.

You can hit two birds with one stone:

- Does not turn into a mass-market product that leads to dual-use risks.

- Builds a moat where you have complex internal infrastructure which is not shared, only the product of that system is shared. Investors love moats, you just got to convince them that this is the way to go for a

I’m currently working on de-slopifying and building an AI safety startup with this as a central pillar.* Happy to talk privately with anyone working on AI safety who is interested in this.

*almost included John and Gwern’s posts on AI slop as part of a recent VC pitch deck.

I’m working on this. I’m unsure if I should be sharing what I’m exactly working on with a frontier AGI lab though. How can we be sure this just leads to differentially accelerating alignment?

Edit: my main consideration is when I should start mentioning details. As in, should I wait until I’ve made progress on alignment internally before sharing with an AGI lab. Not sure what people are disagreeing with since I didn't make a statement.

I'm currently in the Catalyze Impact AI safety incubator program. I'm working on creating infrastructure for automating AI safety research. This startup is attempting to fill a gap in the alignment ecosystem and looking to build with the expectation of under 3 years left to automated AI R&D. This is my short timelines plan.

I'm looking to talk (for feedback) to anyone interested in the following:

- AI control

- Automating math to tackle problems as described in Davidad's Safeguarded AI programme.

- High-assurance safety cases

- How to robustify society in a po

Are you or someone you know:

1) great at building (software) companies

2) care deeply about AI safety

3) open to talk about an opportunity to work together on something

If so, please DM with your background. If someone comes to mind, also DM. I am looking thinking of a way to build companies in a way to fund AI safety work.

Agreed, but I will find a way.

Hey Ben and Jesse!

This comment is more of a PSA:

I am building a startup focused on making this kind of thing exceptionally easy for AI safety researchers. I’ve been working as an AI safety researcher for a few years. I’ve been building an initial prototype and I am in the process of integrating it easily into AI research workflows. So, with respect to this post, I’ve been actively working towards building a prototype for the “AI research fleets”.

I am actively looking for a CTO I can build with to +10x alignment research in the next 2 years. I’m looking for...

It has basically significantly accelerated my ability to build fully functional websites very quickly. To the point where it was basically a phase transition between me building my org’s website and not building it (waiting for someone with web dev experience to do it for me).

I started my website by leveraging the free codebase template he provides on his github and covers in the course.

I mean that it's a trade secret for what I'm personally building, and I would also rather people don't just use it freely for advancing frontier capabilities research.

Is this because it would reveal private/trade-secret information, or is this for another reason?

Yes (all of the above)

Thanks for amplifying. I disagree with Thane on some things they said in that comment, and I don't want to get into the details publicly, but I will say:

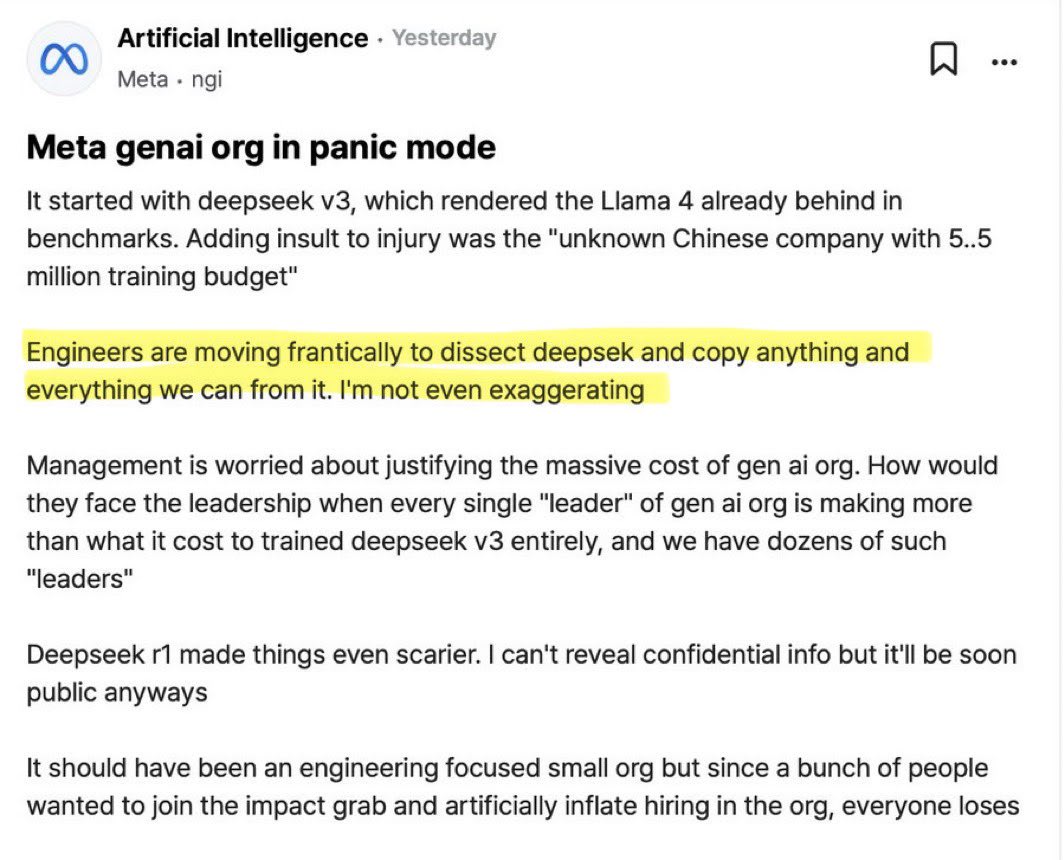

- it's worth looking at DeepSeek V3 and what they did with a $5.6 million training run (obviously that is still a nontrivial amount / CEO actively says most of the cost of their training runs is coming from research talent),

- compute is still a bottleneck (and why I'm looking to build an ai safety org to efficiently absorb funding/compute for this), but I think Thane is not acknowledging that some types of res

Putting venues aside, I'd like to build software (like AI-aided) to make it easier for the physics post-docs to onboard to the field and focus on the 'core problems' in ways that prevent recoil as much as possible. One worry I have with 'automated alignment'-type things is that it similarly succumbs to the streetlight effect due to models and researchers having biases towards the types of problems you mention. By default, the models will also likely just be much better at prosaic-style safety than they will be at the 'core problems'. I would like to instea...

Hey Logan, thanks for writing this!

We talked about this recently, but for others reading this: given that I'm working on building an org focused on this kind of work and wrote a relevant shortform lately, I wanted to ping anybody reading this to send me a DM if you are interested in either making this happen (looking for a cracked CTO atm and will be entering phase 2 of Catalyze Impact in January) or provide feedback to an internal vision doc.

As a side note, I’m in the process of building an organization (leaning startup). I will be in London in January for phase 2 of the Catalyze Impact program (incubation program for new AI safety orgs). Looking for feedback on a vision doc and still looking for a cracked CTO to co-found with. If you’d like to help out in whichever way, send a DM!

Exactly right. This is the first criticism I hear every time about this kind of work and one of the main reasons I believe the alignment community is dropping the ball on this.

I only intend on sharing work output (paper on better technique for interp, not the infrastructure setup; things similar to Transluce) where necessary and not the infrastructure. We don’t need to share or open source what we think isn’t worth it. That said, the capabilities folks will be building stuff like this by default, as they already have (Sakana AI). Yet I see many paths to au...

Given the OpenAI o3 results making it clear that you can pour more compute to solve problems, I'd like to announce that I will be mentoring at SPAR for an automated interpretability research project using AIs with inference-time compute.

I truly believe that the AI safety community is dropping the ball on this angle of technical AI safety and that this work will be a strong precursor of what's to come.

Note that this work is a small part in a larger organization on automated AI safety I’m currently attempting to build.

Here’s the link: https://airtable.com/ap...

(Reposted from Facebook)

Hey Weibing Wang! Thanks for sharing. I just started skimming your paper, and I appreciate the effort you put into this; it combines many of the isolated work people have been working on.

I also appreciate your acknowledgement that your proposed solution has not undergone experimental validation, humility, and the suggestion that these proposed solutions need to be tested and iterated upon as soon as possible due to the practicalities of the real world.

I want to look into your paper again when I have time, but some quick comments:

- Y

We still don't know if this will be guaranteed to happen, but it seems that OpenAI is considering removing its "regain full control of Microsoft shares once AGI is reached" clause. It seems they want to be able to keep their partnership with Microsoft (and just go full for-profit (?)).

Here's the Financial Times article:

...OpenAI seeks to unlock investment by ditching ‘AGI’ clause with Microsoft

OpenAI is in discussions to ditch a provision that shuts Microsoft out of its most advanced models when the start-up achieves “artificial general intelligence”, as

Regarding coding in general, I basically only prompt programme these days. I only bother editing the actual code when I notice a persistent bug that the models are unable to fix after multiple iterations.

I don't know jackshit about web development and have been making progress on a dashboard for alignment research with very little effort. Very easy to build new projects quickly. The difficulty comes when there is a lot of complexity in the code. It's still valuable to understand how high-level things work and low-level things the model will fail to proactively implement.

I'd be down to do this. Specifically, I want to do this, but I want to see if the models are qualitatively better at alignment research tasks.

In general, what I'm seeing is that there is not big jump with o1 Pro. However, it is possibly getting closer to one-shot a website based on a screenshot and some details about how the user likes their backend setup.

In the case of math, it might be a bigger jump (especially if you pair it well with Sonnet).

I sent an invite, Logan! :)

Shameless self-plug: Similarly, if anyone wants to discuss automating alignment research, I'm in the process of building an organization to make that happen. I'm reaching out to Logan because I have a project in mind regarding automating interpretability research (e.g. making AIs run experiments that try to make DL models more interpretable), and he's my friend! My goal for the org is to turn it into a three-year moonshot to solve alignment. I'd be happy to chat with anyone who would be interested in chatting further about this (I'm currently testing fit with potential co-founders and seeking a cracked basement CTO).

I have some alignment project ideas for things I'd consider mentoring for. I would love feedback on the ideas. If you are interested in collaborating on any of them, that's cool, too.

Here are the titles:

Smart AI vs swarm of dumb AIs |

Lit review of chain of thought faithfulness (steganography in AIs) |

Replicating METR paper but for alignment research task |

Tool-use AI for alignment research |

Sakana AI for Unlearning |

Automated alignment onboarding |

Build the infrastructure for making Sakana AI's AI scientist better for alignment research |

I’d be curious to know if there’s variability in the “hours worked per week” given that people might work more hours during a short program vs a longer-term job (to keep things sustainable).

Completely agree. I remember a big shift in my performance when I went from "I'm just using programming so that I can eventually build a startup, where I'll eventually code much less" to "I am a programmer, and I am trying to become exceptional at it." The shift in mindset was super helpful.

This is one of the reasons I think 'independent' research is valuable, even if it isn't immediately obvious from a research output (papers, for example) standpoint.

That said, I've definitely had the thought, "I should niche down into a specific area where there is already a bunch of infrastructure I can leverage and churn out papers with many collaborators because I expect to be in a more stable funding situation as an independent researcher. It would also make it much easier to pivot into a role at an organization if I want to or necessary. It would defin...

I think it's up to you and how you write. English isn't my first language, so I've found it useful. I also don't accept like 50% of the suggestions. But yeah, looking at the plan now, I think I could get off the Pro plan and see if I'm okay not paying for it.

It's definitely not the thing I care about most on the list.

There are multiple courses, though it's fairly new. They have one on full-stack development (while using Cursor and other things) and Replit Agents. I've been following it to learn fast web development, and I think it's a good starting point for getting an overview of building an actual product on a website you can eventually sell or get people to use.

Somewhat relevant blog post by @NunoSempere: https://nunosempere.com/blog/2024/09/10/chance-your-startup-will-succeed/

As an aside, I have considered that samplers were underinvestigated and that they would lead to some capability boosts. It's also one of the things I'd consider testing out to improve LLMs for automated/augmented alignment research.

The importance of Entropy

Given that there's been a lot of talk about using entropy during sampling of LLMs lately (related GitHub), I figured I'd share a short post I wrote for my website before it became a thing:

Imagine you're building a sandcastle on the beach. As you carefully shape the sand, you're creating order from chaos - this is low entropy. But leave that sandcastle for a while, and waves, wind, and footsteps will eventually reduce it back to a flat, featureless beach - that's high entropy.

Entropy is nature's tendency to move from order to disord...

Fair enough. For what it's worth, I've thought a lot about the kind of thing you describe in that comment and partially committing to this direction because I feel like I have enough intuition and insight that those other tools for thought failed to incorporate.

Just to clarify, do you only consider 'strong human intelligence amplification' through some internal change, or do you also consider AIs to be part of that? As in, it sounds like you are saying we currently lack the intelligence to make significant progress on alignment research and consider increasing human intelligence to be the best way to make progress. Are you also of the opinion that using AIs to augment alignment researchers and progressively automate alignment research is doomed and not worth consideration? If not, then here.

I'm in the process of trying to build an org focused on "automated/augmented alignment research." As part of that, I've been thinking about which alignment research agendas could be investigated in order to make automated alignment safer and trustworthy. And so, I've been thinking of doing internal research on AI control/security and using that research internally to build parts of the system I intend to build. I figured this would be a useful test case for applying the AI control agenda and iterating on issues we face in implementation, and then sharing t...

I quickly wrote up some rough project ideas for ARENA and LASR participants, so I figured I'd share them here as well. I am happy to discuss these ideas and potentially collaborate on some of them.

Alignment Project Ideas (Oct 2, 2024)

1. Improving "A Multimodal Automated Interpretability Agent" (MAIA)

Overview

MAIA (Multimodal Automated Interpretability Agent) is a system designed to help users understand AI models by combining human-like experimentation flexibility with automated scalability. It answers user queries about AI system components by iteratively ...

I'm exploring the possibility of building an alignment research organization focused on augmenting alignment researchers and progressively automating alignment research (yes, I have thought deeply about differential progress and other concerns). I intend to seek funding in the next few months, and I'd like to chat with people interested in this kind of work, especially great research engineers and full-stack engineers who might want to cofound such an organization. If you or anyone you know might want to chat, let me know! Send me a DM, and I can send you ...

Given today's news about Mira (and two other execs leaving), I figured I should bump this again.

But also note that @Zach Stein-Perlman has already done some work on this (as he noted in his edit): https://ailabwatch.org/resources/integrity/.

Note, what is hard to pinpoint when it comes to S.A. is that many of the things he does have been described as "papercuts". This is the kind of thing that makes it hard to make a convincing case for wrongdoing.

And while flattering to Brockman, there is nothing about Murati - free tip to all my VC & DL startup acquaintances, there's a highly competent AI manager who's looking for exciting new opportunities, even if she doesn't realize it yet.

Heh, here it is: https://x.com/miramurati/status/1839025700009030027

I completely agree, and we should just obviously build an organization around this. Automating alignment research while also getting a better grasp on maximum current capabilities (and a better picture of how we expect it to grow).

(This is my intention, and I have had conversations with Bogdan about this, but I figured I'd make it more public in case anyone has funding or ideas they would like to share.)

Here's what I'm currently using and how much I am paying:

- Superwhisper (or other new Speech-to-Text that leverage "LLMs for rewriting" apps). Under $8.49 per month. You can use different STT models (different speed and accuracy for each) and LLM for rewriting the transcript based on a prompt you give the models. You can also have different "modes", meaning that you can have the model take your transcript and write code instructions in a pre-defined format when you are in an IDE, turn a transcript into a report when writing in Google Docs, etc. There is also

News on the next OAI GPT release:

...Nagasaki, CEO of OpenAI Japan, said, "The AI model called 'GPT Next' that will be released in the future will evolve nearly 100 times based on past performance. Unlike traditional software, AI technology grows exponentially."

https://www.itmedia.co.jp/aiplus/articles/2409/03/news165.html

The slide clearly states 2024 "GPT Next". This 100 times increase probably does not refer to the scaling of computing resources, but rather to the effective computational volume + 2 OOMs, including improvements to the architectu

Yeah, thanks! I agree with @habryka’s comment, though I’m a little worried it may shut down conversation since it might make people think the conversation is about AI startups in general and less about AI startups in service of AI safety. This is because people might consider the debate/question answered after agreeing with the top comment.

That said, I do hear the “any AI startup is bad because it increases AI investment and therefore reduces timelines” so I think it’s worth at least getting more clarity on this.