How a bug of AI hardware may become a feature for AI governance

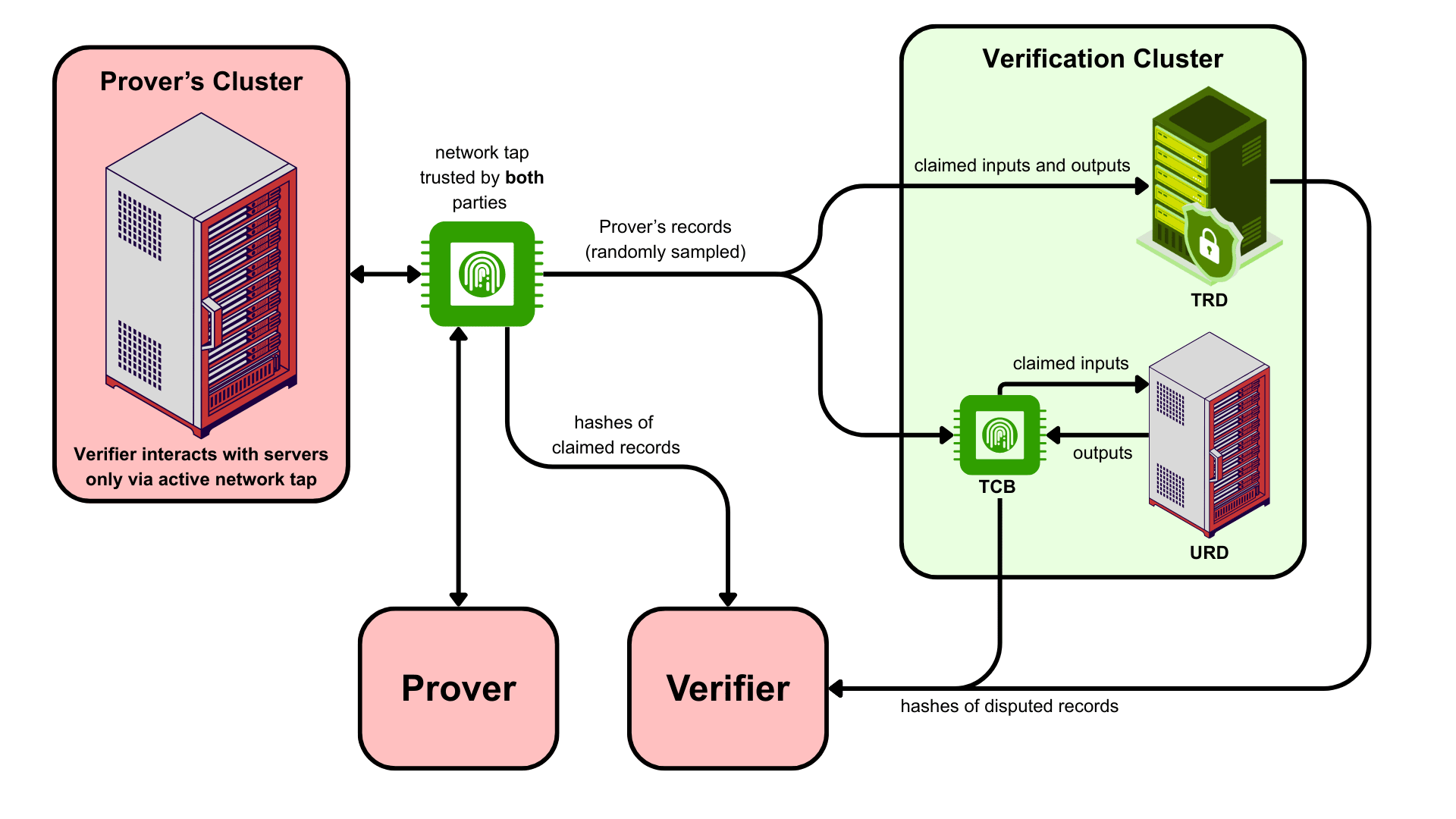

“Hardware noise” in AI accelerators is often seen as a nuisance, but it might actually turn out to be a useful signal for verification of claims about AI workloads and hardware usage. With this post about my experiments (GitHub), I aim to:

1. Contribute more clarity to the discussion about “GPU non-determinism”
2. Present how non-associativity can help monitor untrusted AI datacenters

Summary

* I ran ML inference in dozens of setups to test which setups have exactly reproducible results, and which differences in setups lead to detectable changes in outputs or activations.
* In nearly all cases studied, results were bitwise-reproducible within fixed settings. Differences across production methods were consistent, not random.
* Given that these perturbations are reproducible and unique, they can act as a “fingerprint” of the exact setup that produced an output. This may turn out useful for monitoring untrusted ML hardware (such as in the context of AI hardware governance, international treaty verification, and AI control/security).
* Some settings had unique fingerprints, while others were invariant under change.
    * Invariant (i.e. not detectable by noise):
        * batch size in prefill inference
        * concurrent CUDA streams
        * pipeline parallelism rank
    * Detectable when re-executing on identical hardware:
        * batch size in decode inference
        * attention algorithm (sdpa, FlashAttention, eager, …)
        * CUDA version (if kernel libraries were updated)
        * tensor parallelism
        * different quantization methods, even at the same precision
        * Any change that affects numerics is detectable, since results were bitwise-reproducible within settings.
    * Detectable even with reproduction on different hardware:
        * attention algorithm
        * different quantizations (even within the same INT precision)
        * and of course different inputs or models
* Different reduction order (a subtle difference resulting from batching, tensor paralle