Why does the AI safety community need help founding projects?

- AI safety should scale

- Labs need external auditors for the AI control plan to work

- We should pursue many research bets in case superalignment/control fails

- Talent leaves MATS/ARENA and sometimes struggles to find meaningful work for mundane reasons, not for lack of talent or ideas

- Some emerging research agendas don’t have a home

- There are diminishing returns at scale for current AI safety teams; sometimes founding new projects is better than joining an existing team

- Scaling lab alignment teams are bottlenecked by management capacity, so their talent cut-off is above the level required to do “useful AIS work”

- Research organizations (inc. nonprofits) are often more effective than independent researchers

- “Block funding model” is more efficient, as researchers can spend more time researching, rather than seeking grants, managing, or other traditional PI duties that can be outsourced

- Open source/collective projects often need a central rallying point (e.g., EleutherAI, dev interp at Timaeus, selection theorems and cyborgism agendas seem too delocalized, etc.)

- There is (imminently) a market for for-profit AI safety companies and value-aligned people should capture this free energy or let worse alternatives flourish

- If labs or API users are made legally liable for their products, they will seek out external red-teaming/auditing consultants to prove they “made a reasonable attempt” to mitigate harms

- If government regulations require labs to seek external auditing, there will be a market for many types of companies

- “Ethical AI” companies might seek out interpretability or bias/fairness consultants

- New AI safety organizations struggle to get funding and co-founders despite having good ideas

- AIS researchers are usually not experienced entrepreneurs (e.g., don’t know how to write grant proposals for EA funders, pitch decks for VCs, manage/hire new team members, etc.)

- There are not many competent start-up founders in the EA/AIS community and when they join, they don’t know where their help would be most impactful

- Creating a centralized resource for entrepreneurial education/consulting and co-founder pairing would solve these problems

I am a Manifund Regrantor. In addition to general grantmaking, I have requests for proposals in the following areas:

- Funding for AI safety PhDs (e.g., with these supervisors), particularly in exploratory research connecting AI safety theory with empirical ML research.

- An AI safety PhD advisory service that helps prospective PhD students choose a supervisor and topic (similar to Effective Thesis, but specialized for AI safety).

- Initiatives to critically examine current AI safety macrostrategy (e.g., as articulated by Holden Karnofsky) like the Open Philanthropy AI Worldviews Contest and Future Fund Worldview Prize.

- Initiatives to identify and develop "Connectors" outside of academia (e.g., a reboot of the Refine program, well-scoped contests, long-term mentoring and peer-support programs).

- Physical community spaces for AI safety in AI hubs outside of the SF Bay Area or London (e.g., Japan, France, Bangalore).

- Start-up incubators for projects, including evals/red-teaming/interp companies, that aim to benefit AI safety, like Catalyze Impact, Future of Life Foundation, and Y Combinator's request for Explainable AI start-ups.

- Initiatives to develop and publish expert consensus on AI safety macrostrategy cruxes (e.g., via the Delphi method, interviews, surveys, etc.), such as the Existential Risk Persuasion Tournament and the 2023 Expert Survey on Progress in AI.

- Ethics/prioritization research into:

- What values to instill in artificial superintelligence?

- How should AI-generated wealth be distributed?

- What should people do in a post-labor society?

- What level of surveillance/restriction is justified by the Unilateralist's Curse?

- What moral personhood will digital minds have?

- How should nations share decision making power regarding transformative AI?

- New nonprofit startups that aim to benefit AI safety.

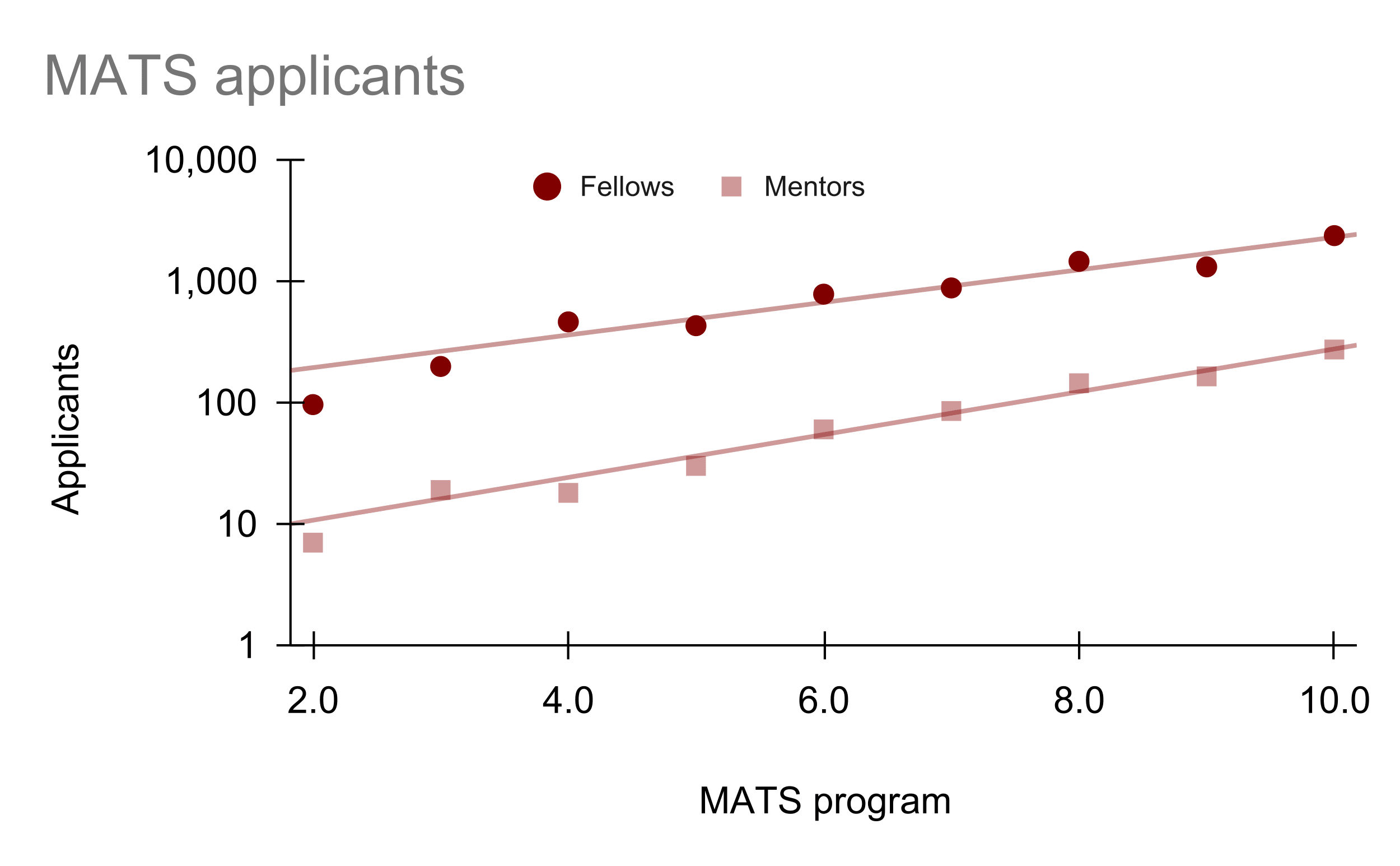

I think that the distribution of mentors we are drawing from is slowly growing to include a higher percentage of highly respected academics and industry professionals. I think this increases the average quality of our mentor applicant pool, but I understand that this might be controversial. Note that I still think our most impactful mentors are well-known within the AI safety field and most of the top-50 most impactful researchers in AI safety apply to mentor at MATS.

This is a hard question to answer precisely, as we have changed the metrics by which we evaluate potential mentors several times. The average quality of mentors we accept has grown each program, by my lights. I weakly think that the average quality of mentors applying has also grown, though much more slowly.

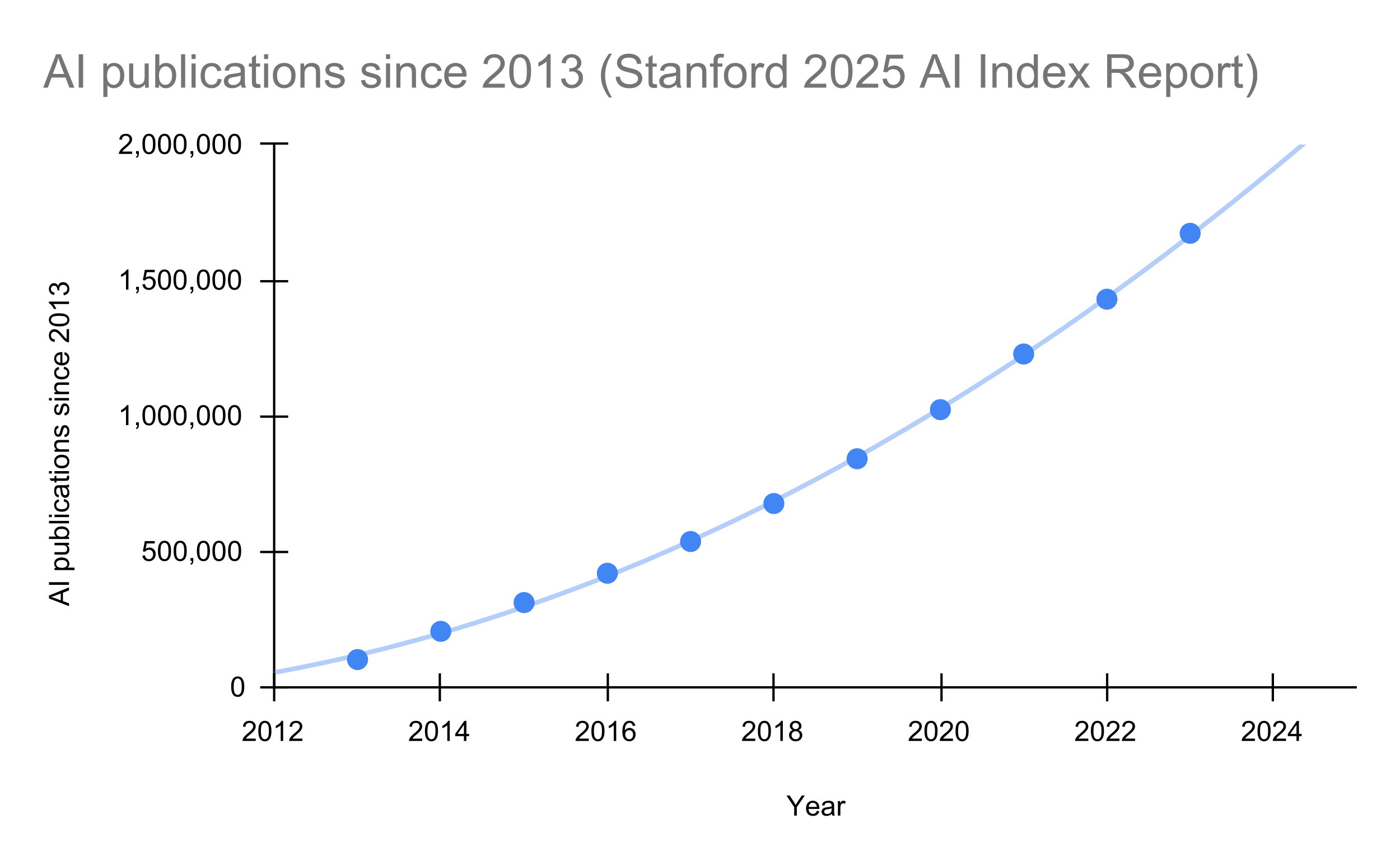

In contrast to the apparent exponential growth in AI conference attendees, the cumulative number of AI publications since 2013 has grown quadratically (data from the Stanford HAI 2025 AI Index Report). Quadratic growth in cumulative publications suggests that the number of researchers is increasing linearly (by a constant amount each year) and that each researcher is producing publications at a constant rate. Extrapolating a little, this growth rate suggests there will be 3.7M cumulative publications (since 2013) by 2030.
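One way to make this reasoning explicit is the following sketch. The symbols are illustrative assumptions, not taken from the AI Index: $N(t)$ is the number of active researchers $t$ years after 2013, $N_0$ is the initial number, $b$ is the constant number of researchers added per year, and $r$ is the constant number of publications per researcher per year.

$$
N(t) = N_0 + b\,t, \qquad P(t) = \int_0^t r\,N(s)\,ds = r N_0\,t + \tfrac{1}{2}\,r\,b\,t^2
$$

Under these assumptions, cumulative publications $P(t)$ are quadratic in $t$, while annual publications $r\,N(t)$ grow only linearly.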

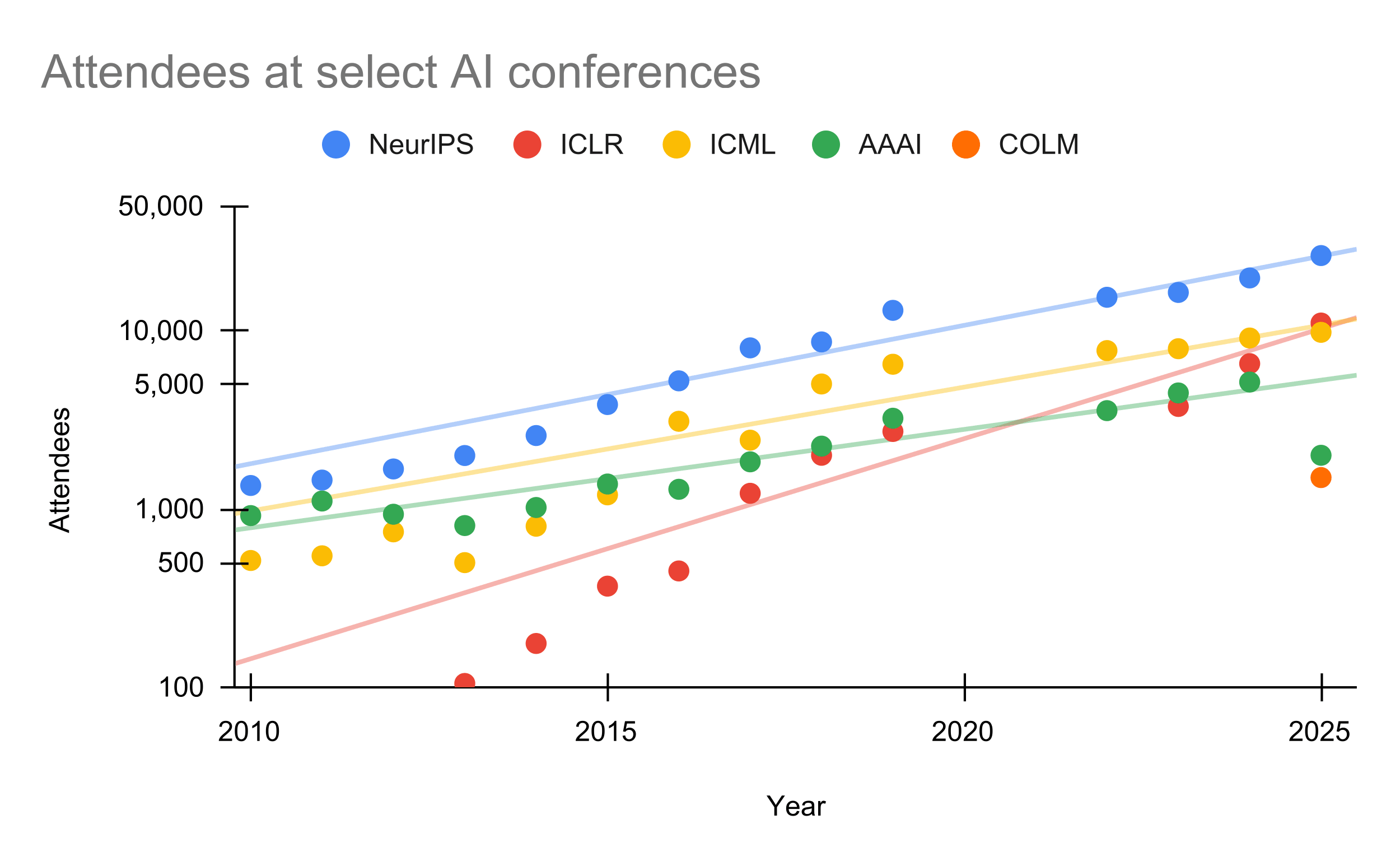

If the AI researcher growth rate is linear, the exponential growth of AI conference attendees might be due to increased industry presence. It's also possible that attendee growth is actually quadratic rather than exponential.

Open question: how fast has the field of cybersecurity grown since the launch of the internet?

Attendees at the top-four AI conferences have been growing at 1.26x/year on average. Data is from Our World in Data. I skipped 2020-2021 for all conferences and 2022 for ICLR, as these conferences were virtual due to the COVID pandemic and had increased virtual attendance.

One could infer from these growth rates that the academic field of AI is growing 1.26x/year. Interestingly, the AI safety field (including technical and governance) seems to be growing at 1.25x/year.
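As a rough illustration of what a figure like 1.26x/year means, here is a minimal sketch that computes an average annual growth factor from yearly counts with some years skipped, and converts it to a doubling time. The function names and the attendance numbers are hypothetical (made up for illustration, not the Our World in Data series), and the endpoint-based formula is an assumption, not necessarily the method used above.

```python
import math


def annual_growth_factor(counts_by_year: dict[int, float]) -> float:
    """Average yearly growth factor between the first and last observed years.

    Skipped years are handled by dividing by the calendar span,
    not by the number of data points.
    """
    years = sorted(counts_by_year)
    first, last = years[0], years[-1]
    span = last - first
    if span <= 0:
        raise ValueError("need at least two distinct years")
    return (counts_by_year[last] / counts_by_year[first]) ** (1 / span)


def doubling_time_years(factor: float) -> float:
    """Years needed to double at a constant yearly growth factor."""
    return math.log(2) / math.log(factor)


# Hypothetical attendance counts (NOT real data), with 2020-2021 omitted.
hypothetical_attendance = {2017: 1000, 2018: 1260, 2019: 1588, 2022: 3176, 2023: 4002}

factor = annual_growth_factor(hypothetical_attendance)
print(f"growth factor: {factor:.2f}x/year")
print(f"doubling time: {doubling_time_years(factor):.1f} years")
```

At a constant 1.26x/year, a quantity doubles roughly every three years.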

The top-10 most-cited papers that MATS contributed to (all with at least 290 citations) are:

- Representation Engineering: A Top-Down Approach to AI Transparency

- Sparse autoencoders find highly interpretable features in language models

- Towards understanding sycophancy in language models

- Steering Language Models With Activation Engineering

- Steering Llama 2 via Contrastive Activation Addition

- Refusal in language models is mediated by a single direction

- The WMDP Benchmark: Measuring and Reducing Malicious Use With Unlearning

- The Reversal Curse: LLMs trained on "A is B" fail to learn "B is A"

- LLM Evaluators Recognize and Favor Their Own Generations

- Finding neurons in a haystack: Case studies with sparse probing

Compare this to the top-10 highest-karma LessWrong posts that MATS contributed to (all with over 200 karma):

- SolidGoldMagikarp (plus, prompt generation)

- Steering GPT-2-XL by adding an activation vector (arXiv)

- Transformers Represent Belief State Geometry in their Residual Stream (arXiv)

- Understanding and Controlling a Maze-Solving Policy Network (arXiv)

- Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs (arXiv)

- Refusal in LLMs is mediated by a single direction (arXiv)

- Natural Abstractions: Key Claims, Theorems, and Critiques

- Distillation Robustifies Unlearning (arXiv)

- Mechanistically Eliciting Latent Behaviors in Language Models

- Neural networks generalize because of this one weird trick

80% of MATS alumni who completed the program before 2025 are still working on AI safety today, based on a survey of all available alumni LinkedIns or personal websites (available for 242/292 ≈ 83% of alumni). 10% are working on AI capabilities, but only ~6 at a frontier AI company (2 at Anthropic, 2 at Google DeepMind, 1 at Mistral AI, 1 extrapolated). 2% are still studying, but not in a research degree focused on AI safety. The last 8% are doing miscellaneous things, including non-AI safety/capabilities software engineering, teaching, data science, consulting, and quantitative trading.

Of the 193+ MATS alumni working on AI safety (extrapolated: 234):

10% of MATS alumni co-founded an active AI safety start-up or team during or after the program, including Apollo Research, Timaeus, Simplex, ARENA, etc.
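The extrapolated counts above appear consistent with scaling each observed count by the inverse of the survey coverage. The sketch below works through that arithmetic under two assumptions: that 242 is the number of alumni with an available LinkedIn or website out of 292 total, and that the scaling is a simple proportion. The function name is hypothetical.

```python
def scale_to_population(observed: float, sampled: int, population: int) -> float:
    """Scale a count observed in a sample up to the full population (simple proportion)."""
    return observed * population / sampled


SAMPLED = 242      # alumni with an available profile (assumed reading of "242/292")
POPULATION = 292   # all alumni who completed the program before 2025

coverage = SAMPLED / POPULATION
# 2 (Anthropic) + 2 (Google DeepMind) + 1 (Mistral AI) observed at frontier companies
frontier_estimate = scale_to_population(2 + 2 + 1, SAMPLED, POPULATION)
# 193 is the stated lower bound ("193+") of alumni observed working on AI safety
safety_estimate = scale_to_population(193, SAMPLED, POPULATION)

print(f"coverage: {coverage:.0%}")
print(f"frontier capabilities estimate: {frontier_estimate:.1f}")
print(f"AI safety estimate (lower bound): {safety_estimate:.1f}")
```

Five observed alumni scale to about six, matching "1 extrapolated", and the lower bound of 193 scales to about 233, in line with the extrapolated 234 given that 193 is stated as a minimum.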

Errata: I mistakenly included UK AISI in the "non-profit AI safety organization" category instead of "government agency". I also mistakenly said that the ~6 alumni working on AI capabilities at frontier AI companies were all working on pre-training.