This post is a not-so-secret analogy for the AI Alignment problem. Via a fictional dialog, Eliezer explores and counters common questions about the Rocket Alignment Problem as approached by the Mathematics of Intentional Rocketry Institute.

MIRI researchers will tell you they're worried that "right now, nobody can tell you how to point your rocket’s nose such that it goes to the moon, nor indeed any prespecified celestial destination."

Recent Discussion

Before we get started, this is your quarterly reminder that I have no medical credentials and my highest academic credential is a BA in a different part of biology (with a double major in computer science). In a world with a functional medical system no one would listen to me.

Tl;dr povidone iodine probably reduces viral load when used in the mouth or nose, with corresponding decreases in symptoms and infectivity. The effect size could be as high as 90% for prophylactic use (and as low as 0% when used in late illness), but is probably much smaller. There is a long tail of side-effects. No study I read reported side effects at clinically significant levels, but I don’t think they looked hard enough. There are other gargle...

I'm somewhat confused. I may not be reading the charts you included right, but it sort of looks to me like just rinsing with saline is useful, and that seems like it should be extremely safe and low risk and just about as effective as anything else. Thoughts?

Yeah. It's possible to give quite accurate definitions of some vague concepts, because the words used in such definitions also express vague concepts. E.g. "cygnet" - "a young swan".

Warning: This post might be depressing to read for everyone except trans women. Gender identity and suicide is discussed. This is all highly speculative. I know near-zero about biology, chemistry, or physiology. I do not recommend anyone take hormones to try to increase their intelligence; mood & identity are more important.

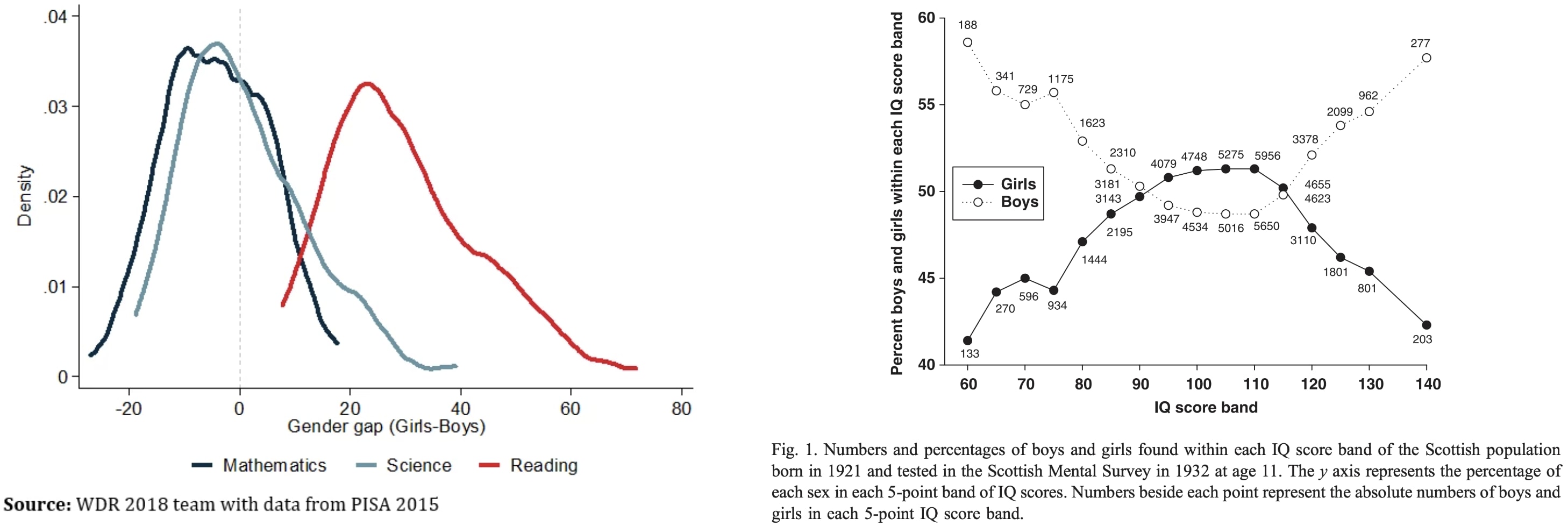

Why are trans women so intellectually successful? They seem to be overrepresented 5-100x in eg cybersecurity twitter, mathy AI alignment, non-scam crypto twitter, math PhD programs, etc.

To explain this, let's first ask: Why aren't males way smarter than females on average? Males have ~13% higher cortical neuron density and 11% heavier brains (implying more area?). One might expect males to have mean IQ far above females then, but instead the means and medians are similar:

My theory...

Then where are the smart trans men hiding?

My credence: 33% confidence in the claim that the growth in the number of GPUs used for training SOTA AI will slow down significantly directly after GPT-5. It is not higher because (1) decentralized training is possible, (2) GPT-5 may be able to increase hardware efficiency significantly, (3) GPT-5 may be smaller than assumed in this post, and (4) race dynamics may push labs to scale anyway.

TLDR: Because of a bottleneck in energy access to data centers and the need to build OOM larger data centers.

The reasoning behind the claim:

- Current large data centers consume around 100 MW of power, while a single nuclear power plant generates 1 GW. The largest seems to consume 150 MW.

- An A100 GPU uses 250 W, and around 1 kW with overhead. A B200 GPU uses ~1 kW without overhead. Thus a 1 MW
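
The arithmetic behind these bullets can be sketched as follows. This is a rough illustration that uses only the figures quoted above (about 1 kW per A100 including overhead; 100 MW, 150 MW and 1 GW as facility sizes). The function name `gpus_supported` is made up for this example, and no overhead is assumed for B200s because the excerpt does not give one.

```python
# Rough GPU counts per facility, using only the figures quoted in the post.
# ~1 kW per A100 once overhead (cooling, networking, etc.) is included.
WATTS_PER_GPU_WITH_OVERHEAD = 1_000


def gpus_supported(facility_watts: float,
                   watts_per_gpu: float = WATTS_PER_GPU_WITH_OVERHEAD) -> int:
    """Number of GPUs a facility can power at the given per-GPU draw."""
    return int(facility_watts // watts_per_gpu)


facilities = {
    "typical large data center (100 MW)": 100e6,
    "largest cited data center (150 MW)": 150e6,
    "one nuclear plant's output (1 GW)": 1e9,
}

for name, watts in facilities.items():
    print(f"{name}: ~{gpus_supported(watts):,} GPUs")
```

On these numbers, moving from a 100 MW site to a full gigawatt is one order of magnitude in GPU count, which is the scale of build-out the TLDR refers to.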

Distributed training seems close enough to being a solved problem that a project costing north of a billion dollars might get it working on schedule. It's easier to stay within a single datacenter, and so far it wasn't necessary to do more than that, so distributed training not being routinely used yet is hardly evidence that it's very hard to implement.

There's also this snippet in the Gemini report that says

...Training Gemini Ultra used a large fleet of TPUv4 accelerators owned by Google across multiple datacenters. [...] we combine SuperPods in multiple d

The history of science has tons of examples of the same thing being discovered multiple times independently; Wikipedia has a whole list of examples here. If your goal in studying the history of science is to extract the predictable/overdetermined component of humanity's trajectory, then it makes sense to focus on such examples.

But if your goal is to achieve high counterfactual impact in your own research, then you should probably draw inspiration from the opposite: "singular" discoveries, i.e. discoveries which nobody else was anywhere close to figuring out. After all, if someone else would have figured it out shortly after anyways, then the discovery probably wasn't very counterfactually impactful.

Alas, nobody seems to have made a list of highly counterfactual scientific discoveries, to complement Wikipedia's list of multiple discoveries.

To...

First, your non-standard use of the term "counterfactual" is jarring, though, as I understand, it is somewhat normalized in your circles. "Counterfactual" unlike "factual" means something that could have happened, given your limited knowledge of the world, but did not. What you probably mean is "completely unexpected", "surprising" or something similar. I suspect you got this feedback before.

Sticking with physics. Galilean relativity was completely against the Aristotelian grain. More recently, the singularity theorems of Penrose and Hawking unexpectedly s...

Epistemic status: this post is more suitable for LW as it was 10 years ago

Thought experiment with curing a disease by forgetting

Imagine I have a bad but rare disease X. I may try to escape it in the following way:

1. I enter a blank state of mind and forget that I had X.

2. Now I in some sense merge with a very large number of my (semi)copies in parallel worlds who do the same. I will be in the same state of mind as my other copies; some of them have disease X, but most don’t.

3. Now I can use the self-sampling assumption for observer-moments (Strong SSA) and think that I am randomly selected from all these identical observer-moments.

4. Based on this, the chances that my next observer-moment after...
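
One way to read steps 2 and 3 numerically, as a minimal sketch: if every copy in the blank state is an indistinguishable observer-moment, sampling uniformly among them gives a chance of having X equal to the fraction of those copies that have X. The counts below are hypothetical (the post gives no numbers), and `p_disease_after_forgetting` is a name invented for this illustration.

```python
from fractions import Fraction


def p_disease_after_forgetting(copies_with_x: int, copies_without_x: int) -> Fraction:
    """Chance of having X when sampled uniformly from identical blank-state copies."""
    total = copies_with_x + copies_without_x
    if copies_with_x < 0 or copies_without_x < 0 or total == 0:
        raise ValueError("need non-negative counts and at least one copy")
    return Fraction(copies_with_x, total)


# Hypothetical example: 1 copy with X among 1000 copies in the same blank state.
print(p_disease_after_forgetting(1, 999))  # 1/1000
```

The result depends entirely on how many copies without X actually enter the same blank state, which is the quantity the argument needs to be large.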

The number of poor people is much larger than the number of billionaires, but the number of poor people who THINK they're billionaires probably isn't that much larger. Good point about needing to forget the technique, though.

Austin said they have $1.5 million in the bank, vs $1.2 million mana issued. The only outflows right now are to the charity programme, which even with a lot of outflows is only at $200k. They also recently raised at a $40 million valuation. I am confused by the talk of running out of money. They have a large user base that wants to bet and will do so at larger amounts if given the opportunity. I'm not so convinced that there is some tiny timeline here.

But if there is, then say so: "we know that we often talked about mana being eventually worth 100 mana per dollar, but ...

But I do think, intuitively, GPT-5-MAIA might e.g. make 'catching AIs red-handed' using methods like in this comment significantly easier/cheaper/more scalable.

Notably, the mainline approach for catching doesn't involve any internals usage at all, let alone labeling a bunch of things.

I agree that this model might help in performing various input/output experiments to determine what made a model do a given suspicious action.

TL;DR

Tacit knowledge is extremely valuable. Unfortunately, developing tacit knowledge is usually bottlenecked by apprentice-master relationships. Tacit Knowledge Videos could widen this bottleneck. This post is a Schelling point for aggregating these videos—aiming to be The Best Textbooks on Every Subject for Tacit Knowledge Videos. Scroll down to the list if that's what you're here for. Post videos that highlight tacit knowledge in the comments and I’ll add them to the post. Experts in the videos include Stephen Wolfram, Holden Karnofsky, Andy Matuschak, Jonathan Blow, Tyler Cowen, George Hotz, and others.

What are Tacit Knowledge Videos?

Samo Burja claims YouTube has opened the gates for a revolution in tacit knowledge transfer. Burja defines tacit knowledge as follows:

...Tacit knowledge is knowledge that can’t properly be transmitted via verbal or written instruction, like the ability to create

"Mise En Place", "[i]nterviews and kitchen walkthroughs":

Qualifies as tacit knowledge, in that people are showing what they're doing that you seldom have a chance to watch first-hand. Reasonably entertaining, seems like you could learn a bit here.

Caveat: most of the dishes are really high-class/meat/fish etc. that you aren't very likely to ever cook yourself, and knowledge seems difficult to transfer.