I (Ben) recently made a poll for voting on interesting disagreements to be discussed on LessWrong. It generated a lot of good topic suggestions and data about what questions folks cared about and disagreed on.

So, Jacob and I figured we'd try applying the same format to help people orient to the current OpenAI situation.

What important questions would you want to see discussed and debated here in the coming days? Suggest and vote below.

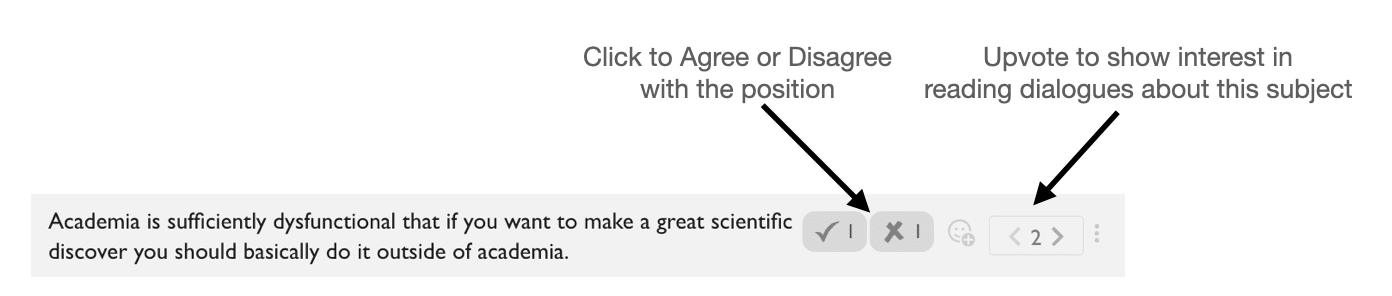

How to use the poll

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read discussion about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interest and disagreement lies. This can be used to find discussion and dialogue topics in the future.

I was asked to clarify my position about why I voted 'disagree' with "I assign >50% to this claim: The board should be straightforward with its employees about why they fired the CEO."

I'm putting a perhaps unjustifiably high amount of trust in all the people involved, and from that, my prior is very high on "for some reason, it would be really bad, inappropriate, or wrong to discuss this in a public way." And given that OpenAI has ~800 employees, telling them would basically count as a 'public' announcement. (I would update significantly on the claim if it were only a select group of trusted employees, rather than all of them.)

To me, people seem too biased in the direction of "this info should be public," maybe with the assumption that "well I am personally trustworthy, and I want to know, and in fact, I should know in order to be able to assess the situation for myself." Or maybe with the assumption that the 'public' is good for keeping people accountable and ethical, meaning that informing the public would be net helpful.

I am maybe biased in the direction of: The general public overestimates its own trustworthiness and ability to evaluate complex situations, especially without most of the relevant context.

My overall experience is that the involvement of the public makes situations worse, as a general rule.

And I think the public also overestimates their own helpfulness, post-hoc. So when things are handled in a public way, the public assesses their role in a positive light, but they rarely have ANY way to judge the counterfactual. And in fact, I basically NEVER see them even ACKNOWLEDGE the counterfactual. Which makes sense because that counterfactual is almost beyond-imagining. The public doesn't have ANY of the relevant information that would make it possible to evaluate the counterfactual.

So in the end, they just default to believing that it had to play out in the way it did, and that the public's involvement was either inevitable or good. And I do not understand where this assessment comes from, other than availability bias?

The involvement of the public, in my view, incentivizes more dishonesty, hiding, and various forms of deception. The public is usually NOT in a position to judge complex situations and lacks much of the relevant context (and also isn't particularly clear about ethics, often, IMO), so people who ARE extremely thoughtful, ethically minded, high-integrity, etc. are often put in very awkward binds when it comes to trying to interface with the public. And so I believe it's better for the public not to be involved if they don't have to be.

I am a strong proponent of keeping things close to the chest and keeping things within more trusted, high-context, in-person circles, and of avoiding online involvement as much as possible for highly complex, high-touch situations. Does this mean OpenAI should keep it purely internal? No, they should have outside advisors, etc. Does this mean no employees should know what's going on? No, some of them should: the ones who are high-level, responsible, and trustworthy, and they can then share what needs to be shared with the people under them.

Maybe some people believe that all ~800 employees deserve to know why their CEO was fired. Like, as a courtesy or general good policy or something. I think it depends on the actual reason. I can envision certain reasons that don't need to be shared, and I can envision reasons that ought to be shared.

I can envision situations where sharing the reasons could potentially damage AI Safety efforts in the future. Or prevent similar groups from making really difficult but ethically sound choices, such as shutting down an entire company. I do not want to prevent groups from making extremely unpopular choices that ARE, in fact, the right thing to do.

"Well if it's the right thing to do, we, the public, would understand and not retaliate against those decision-makers or generally cause havoc" is a terrible assumption, in my view.

I am interested in brainstorming, developing, and setting up really strong and effective accountability structures for orgs like OpenAI, and I do not believe most of those effective structures will include 'keep the public informed' as a policy. More often the opposite.

I'm interpreting this specifically through the lens of "this was a public statement". The board definitely had the ability to execute steps like "ask ChatGPT for some examples of concrete scenarios that would lead a company to issue that statement". The board probably had better options than "ask ChatGPT", but that should still serve as a baseline for how informed one would expect them to be about the implications of their statement.

Here are some concrete example scenarios ChatGPT gives that might lead to that statement being given:

...