I have, over the last year, become fairly well-known in a small corner of the internet tangentially related to AI.

As a result, I've begun making what I would have previously considered astronomical amounts of money: several hundred thousand dollars per month in personal income.

This has been great, obviously, and the funds have alleviated a fair number of my personal burdens (mostly related to poverty). But aside from that, I don't really care much for the money itself.

My long-term ambition has always been to contribute materially to mitigating the impending existential threat from AI. I never used to have the means to do so, mostly because of more pressing safety and sustenance concerns, but now that I do, I would like to help however I can.

Some other points about me that may be useful:

- I'm intelligent, socially capable, and exceedingly industrious.

- I have a few hundred thousand followers worldwide across a few distribution channels. My audience is primarily small to mid-sized business owners. A subset of these people are very high leverage (i.e., their actions directly affect the beliefs, actions, or habits of tens of thousands of people).

- My current work does not take much time. I have modest resources (~$2M) and a relatively free schedule. I am also, by any measure, very young.

Given the above, I feel there's a reasonable opportunity here for me to help. It would certainly be more grassroots than a well-funded safety lab or one of the many state actors that have sprung up, but probably still sizeable enough to make a fraction of a percent of difference in the way the scales tip (assuming I dedicate my life to it).

What would you do in my shoes, assuming alignment on core virtues like maximizing AI safety?

Firstly, and perhaps most importantly, my advice on what not to do: don't try to directly convince politicians to pause or stop AGI development. For them to take actions drastic enough to actually matter, they would first need to understand how powerful AGI will truly become. And once that happens, even if they ban all AI development, they will race and put truly enormous amounts of resources behind it unless they consider the arguments for doom to be extremely strong, which they won't[1]. That would be it for the species. Getting mid-sized business owners on board, on the other hand, might be a good idea because of the funding they could provide.

I don't think any of the big donors are good enough, so if you want to donate to other people's projects (or maybe become a co-founder), you could try finding interesting projects yourself on Manifund and the Nonlinear Network.

We know for a fact that alignment, at least for human-level intelligences, has a solution, because people do actually care, at least in part, about each other. Therefore, it might be worth contacting Steven Byrnes and asking him whether he could make good use of more funding or which similar projects he recommends.

Outside AI, if the reason you care about existential risk isn't that you want to save the species but that human extinction implies a lot of people will die, you could look into chemical brain preservation and how cheap it is. This could itself be a source of revenue, and you probably won't have any competitors: established cryonics orgs don't offer cheap brain preservation, and I have asked Tomorrow Biostasis, which isn't interested either.

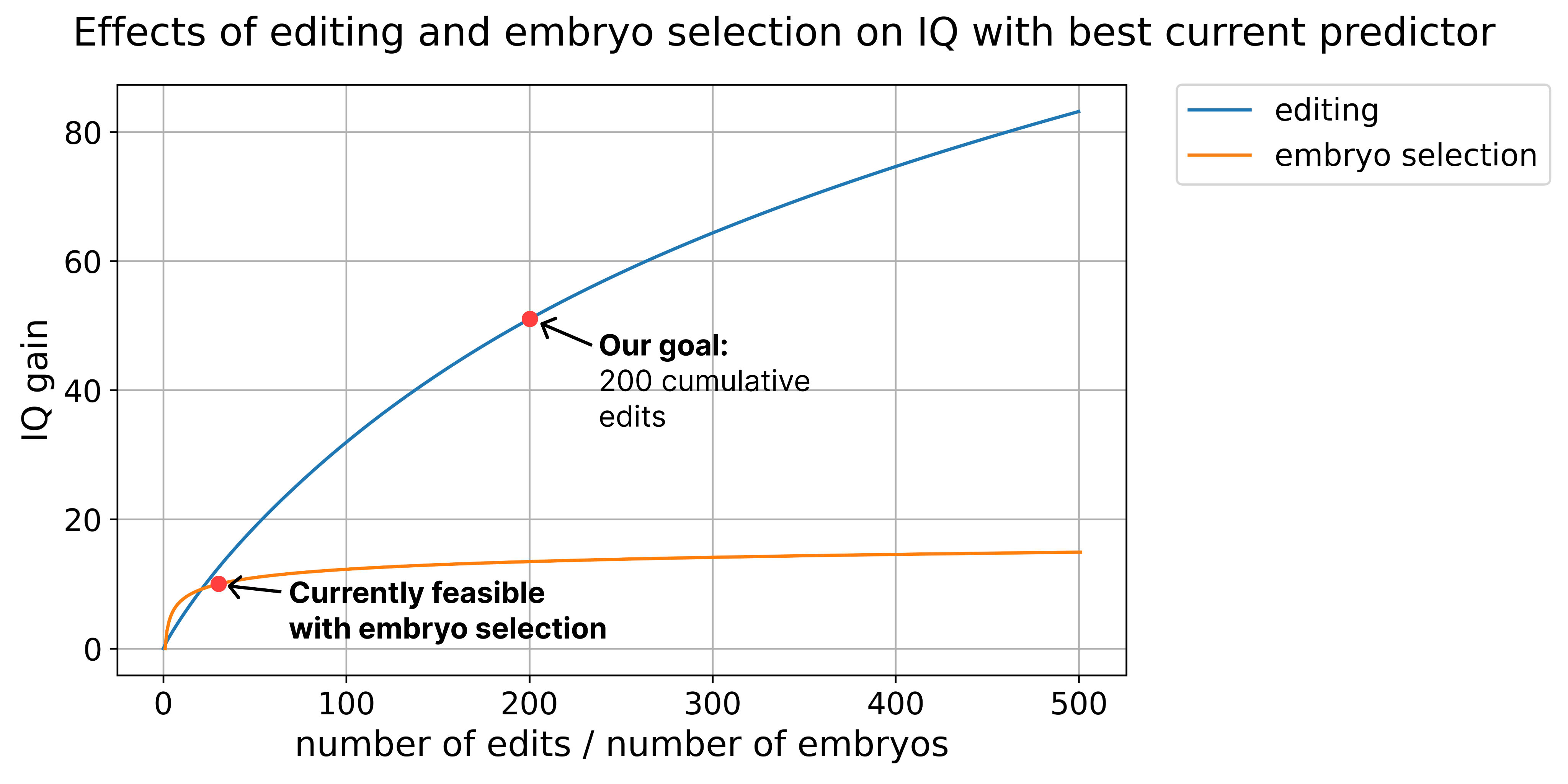

I also personally have some not-completely-terrible ideas for alignment research and for weak (half an SD?) intelligence augmentation. If you're interested, we can discuss them via DMs.

Finally, if you do fund intelligence augmentation research, please consider whether to keep it secret, if feasible.

[1] Or maybe even if they do.