One type of question that would be straightforward for humans to answer, but difficult to train a machine learning model to answer reliably, would be to ask "How much money is visible in this picture?" for images like this:

If you have pictures with bills, coins, and non-money objects in random configurations - with many items overlapping and partly occluding each other - it is still fairly easy for humans to pick out what is what from the image.

But getting an AI to do this would be more difficult than a normal image classification problem, where you can just fine-tune a vision model with a bunch of task-relevant training cases. It would probably require multiple denomination-specific vision models working together, as well as some robust way for the model to determine where one object ends and another begins.

I would also expect such an AI to be more confounded by any adversarial factors - such as the inclusion of non-money arcade tokens or drawings of coins or colored-in circles - added to the image.

Now, maybe to solve this in under one minute some people would need to start the timer when they already have a calculator in hand (or the captcha screen would need to include an on-screen calculator). But in general, as long as there is not a huge number of coins and bills, I don't think this type of captcha would take the average person more than, say, 3-4 times longer than it takes them to complete the "select all squares with traffic lights" type captchas in use now. (Though some may want to familiarize themselves with the various $1.00 and $0.50 coins that exist, and some of the variations of the tails sides of quarters, if this becomes the new prove-you-are-a-human method.)

I can see the numbers on the notes and infer that they denote United States Dollars, but have zero idea of what the coins are worth. I would expect that anyone outside the United States would have to look up every coin type and so take far more than 3-4 times as long as clicking images with boats, especially if the coins have multiple variations.

If the image additionally included coin-like tokens, it would be a nontrivial research project (on the order of an hour) to verify that each such object is in fact not any form of legal tender, past or present, in the United States.

Even if all the above were solved, you still need such images to be easily generated in such a way that any human can solve them fairly quickly, but a machine vision system custom-trained to solve this type of problem on at least thousands of different examples can't. This is much harder than it sounds.

> I can see the numbers on the notes and infer that they denote United States Dollars, but have zero idea of what the coins are worth. I would expect that anyone outside the United States would have to look up every coin type and so take far more than 3-4 times as long as clicking images with boats, especially if the coins have multiple variations.

If a system like this were widely deployed online using US currency, people outside the US would need to familiarize themselves with US currency if they are not already familiar with it. But they would only need to do this once and then it should be easy to remember for subsequent instances. There are only 6 denominations of US coins in circulation - $0.01, $0.05, $0.10, $0.25, $0.50, and $1.00 - and although there are variations for some of them, they mostly follow a very similar pattern. They also frequently have words on them like "ONE CENT" ($0.01) or "QUARTER DOLLAR" ($0.25) indicating the value, so it should be possible for non-US people to become familiar with those.
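If the site stores, for each image, a list of labels for the objects in it, the correct answer is just a weighted sum over the denominations listed above. A minimal sketch, assuming hypothetical label names such as `"quarter"` and `"bill_5"` (not from any existing dataset); anything without a known label, like an arcade token, counts as zero:

```python
from collections import Counter

# Values in integer cents so sums are exact (no floating-point rounding).
# Label names are hypothetical placeholders.
VALUES_CENTS = {
    # the six circulating US coin denominations
    "cent": 1, "nickel": 5, "dime": 10, "quarter": 25,
    "half_dollar": 50, "dollar_coin": 100,
    # current US banknote denominations
    "bill_1": 100, "bill_2": 200, "bill_5": 500, "bill_10": 1000,
    "bill_20": 2000, "bill_50": 5000, "bill_100": 10000,
}


def total_cents(labels):
    """Sum the value of every labelled object; unknown labels (tokens,
    drawings, other objects) contribute nothing."""
    counts = Counter(labels)
    return sum(VALUES_CENTS.get(label, 0) * n for label, n in counts.items())


def format_dollars(cents):
    """Render an integer number of cents as a dollar string like $5.60."""
    return f"${cents // 100}.{cents % 100:02d}"


labels = ["quarter", "quarter", "dime", "bill_5", "arcade_token"]
print(format_dollars(total_cents(labels)))  # $5.60
```

Working in integer cents rather than floating-point dollars keeps the expected answer exact, so the server can compare it directly with what the user types.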

Alternatively, an easier option could be country-specific captchas, which show a picture like this but with the currency of whatever country the internet user is in. This would only require extra work for VPN users who conceal their location by having the VPN make it look like they are in some other country.

> If the image additionally included coin-like tokens, it would be a nontrivial research project (on the order of an hour) to verify that each such object is in fact not any form of legal tender, past or present, in the United States.

The idea was that the tokens would only be similar in broad shape and color, but different enough from actual legal tender coins that I would expect a human to easily tell the two apart.

Some examples would be:

https://barcade.com/wp-content/uploads/2021/07/BarcadeToken_OPT.png

https://www.pinterest.com/pin/64105994675283502/

> Even if all the above were solved, you still need such images to be easily generated in such a way that any human can solve them fairly quickly, but a machine vision system custom-trained to solve this type of problem on at least thousands of different examples can't. This is much harder than it sounds.

I agree that the difficulty of generating a lot of these is the main disadvantage, as you would probably have to just take a huge number of real pictures like this which would be very time consuming. It is not clear to me that Dall-E or other AI image generators could produce such pictures with enough realism and detail that it would be possible for human users to determine how much money is supposed to be in the fake image (and have many humans all converge to the same answer). You also might get weird things using Dall-E for this, like 2 corners of the same bill having different numbers indicating the bill's denomination.

But I maintain that, once a large set of such images exists, training a custom machine vision system to solve these would be very difficult. It would require much more work than simply fine-tuning an off-the-shelf vision system to answer the binary question "Does this image contain a bus?".

Suppose that, say, a few hundred people worked for several months to create 1,000,000 of these in total and then started deploying them. If you are a malicious AI developer trying to crack this, the mere tasks of compiling a properly labeled data set (or multiple data sets) and deciding how many sub-models to train and how they should cooperate (if you use more than one) are already non-trivial problems that you have to solve just to get started. So I think it would take more than a few days.
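To get a feel for that production rate, assume (the comment only says "a few hundred people" and "several months") 300 people each working 90 days:

$$
\frac{1{,}000{,}000 \text{ images}}{300 \text{ people} \times 90 \text{ days}} = \frac{1{,}000{,}000}{27{,}000} \approx 37 \text{ images per person per day}
$$

Over an 8-hour day that is roughly one finished, labelled image every 13 minutes (480 / 37 ≈ 13).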

I think this idea is really brilliant, and quite promising. It requires the image AI to understand the entire image, since it is hard to divide it up into one frame per bill or coin, and it can't easily make use of the intelligence of LLMs.

To aid the user, there could be a clear picture of each coin and its value on the side. That way we could even include made-up coins, which could further trick the AI.

All this could be combined with traditional image obfuscation techniques (like distorting the images).

I'm not entirely sure how to generate images of money efficiently; Dall-E couldn't really do it well in the test I ran. Stable Diffusion would probably do better, though.

If we create a few thousand real-world images of money, though, it might be possible to combine, obfuscate, and delete parts of them in order to make several million different images. For example, one bill could be taken from one image, and then a bill from another image could be placed on top of it, etc.

> To aid the user, there could be a clear picture of each coin and its value on the side. That way we could even include made-up coins, which could further trick the AI.

A user aid showing clear pictures of all available legal tender coins is a very good idea. It avoids problems with more obscure coins which may have been issued in only a single year, so the user is not sitting there thinking "wait a second, did they actually issue a Ulysses S. Grant coin at some point, or is that just there to fool the bots?".

> I'm not entirely sure how to generate images of money efficiently; Dall-E couldn't really do it well in the test I ran. Stable Diffusion would probably do better, though.
>
> If we create a few thousand real-world images of money, though, it might be possible to combine, obfuscate, and delete parts of them in order to make several million different images. For example, one bill could be taken from one image, and then a bill from another image could be placed on top of it, etc.

I agree that efficient generation of these types of images is the main difficulty and probable bottleneck to deploying something like this if websites try to do so. Taking a large number of such pictures in real life would be time consuming. If you could speed up the process by automated image generation or automated creation of synthetic images by copying and pasting bills or notes between real images, that would be very useful. But doing that while preserving photo-realism and clarity to human users of how much money is in the image would be tricky.
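One way to keep the amount clear to humans when pasting bills and coins on top of each other is to reject any layout where an item is mostly hidden. A rough sketch, assuming axis-aligned rectangles on an integer grid (real bills would be rotated) and a hypothetical 40% minimum-visibility threshold:

```python
def grid_cells(rect):
    """All unit cells covered by an axis-aligned rectangle (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = rect
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


def visible_fractions(rects):
    """rects are listed bottom of the pile first; returns, for each one,
    the fraction of its area not covered by anything placed after it."""
    covered = set()
    fractions = [0.0] * len(rects)
    for i in range(len(rects) - 1, -1, -1):  # walk from the top of the pile down
        own = grid_cells(rects[i])
        fractions[i] = len(own - covered) / len(own)
        covered |= own
    return fractions


def layout_is_readable(rects, min_visible=0.4):  # 0.4 is a hypothetical threshold
    """Accept a composite only if every item keeps enough visible area."""
    return all(f >= min_visible for f in visible_fractions(rects))


# Two 10x4 "bills", the second pasted half over the first.
pile = [(0, 0, 10, 4), (5, 0, 15, 4)]
print(visible_fractions(pile))  # [0.5, 1.0]
```

In practice the check would probably need to look at the specific regions that identify the denomination (the corner numbers on bills, for instance) rather than just total visible area.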

Perhaps an advanced game engine could be used to create lots of simulations of piles of money. For example, 100 3D models of money could be created (say 5 coins, 3 bills with 10 variations each, such as folded, plus some fake money and other objects), and these could then be randomly arranged into constellations. Further, it would then be possible to make videos instead of pictures, which makes it even harder for AIs to classify. For instance, imagine the camera changing its angle over a table, where a minimum of two angles is needed to see all the bills.
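The random-constellation step could look something like the following, which only produces a scene description (asset, variant, position, rotation) plus the correct answer; the game-engine rendering is not shown. The asset names and values are hypothetical placeholders that loosely follow the counts above:

```python
import random
from dataclasses import dataclass

# Hypothetical asset library: 5 coin models, 3 bill models with
# 10 variations each, and some decoys that are worth nothing.
COINS = {"cent": 1, "nickel": 5, "dime": 10, "quarter": 25, "dollar_coin": 100}
BILLS = {"bill_1": 100, "bill_5": 500, "bill_20": 2000}
BILL_VARIANTS = 10  # e.g. flat, folded, crumpled...
DECOYS = ["arcade_token", "poker_chip", "play_money_bill", "button", "key"]


@dataclass
class PlacedAsset:
    asset: str
    variant: int
    x: float
    y: float
    rotation_deg: float
    value_cents: int  # 0 for decoys


def sample_scene(rng, n_money=8, n_decoys=4, table=(100.0, 60.0)):
    """Return a random pile of assets and the correct answer in cents."""
    width, height = table
    money = {**COINS, **BILLS}
    items = []
    for _ in range(n_money):
        asset = rng.choice(sorted(money))
        variant = rng.randrange(BILL_VARIANTS) if asset in BILLS else 0
        items.append(PlacedAsset(asset, variant, rng.uniform(0, width),
                                 rng.uniform(0, height), rng.uniform(0, 360),
                                 money[asset]))
    for _ in range(n_decoys):
        items.append(PlacedAsset(rng.choice(DECOYS), 0, rng.uniform(0, width),
                                 rng.uniform(0, height), rng.uniform(0, 360), 0))
    rng.shuffle(items)  # list order doubles as the stacking order for the renderer
    return items, sum(item.value_cents for item in items)


scene, answer_cents = sample_scene(random.Random(42))
```

Because the answer is computed from the same description the renderer consumes, every generated image (or video) would come labelled for free.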

I don't think the photos/videos need to be super realistic; we can add different types of distortions to make it harder for the AI to find patterns.

You should team up with MIRI and create a captcha based on human values. Either the machines learn to understand human values, in which case we finally get an aligned artificial intelligence; or they won't, and then at least we get a good captcha...

(Narrator: Unfortunately, 90% of internet users also failed to understand human values.)

I know this is a joke, but I nonetheless feel compelled to point out that unaligned AGI could also pass a captcha based on human values.

MIRI: Instead of paperclips, the AI is optimizing for solving captchas, and is now turning the world into captcha-solving machines. Our last chance is to make a captcha that only verifies if human prosperity is guaranteed. Any ideas?

> At least 90% of internet users could solve it within one minute.

While I understand the reasoning behind this bar, setting it any lower than something like 99.99% of internet users is strongly discriminatory and regressive. Captchas are used to gate parts of the internet that are required for daily life. For instance, almost all free email services require filling out captchas, and many government agencies now require you to have an email address to interact with them. A bar that cuts out a meaningful number of humans means that those humans become unable to interact with society. Moreover, the people who are most likely to fail at solving this are people who already struggle to use computers and the internet, such as the uneducated, the poor, and the elderly. Those groups can ill afford yet another barrier to living in our modern society.

If only 90% can solve the captcha within one minute, it does not follow that the other 10% are completely unable to solve it and faced with "yet another barrier to living in our modern society".

It could be that the other 10% just need a longer time period to solve it (which might still be relatively trivial, like needing 2 or 3 minutes) or they may need multiple tries.

If we are talking about someone at the extreme low end of the captcha proficiency distribution, such that the person cannot even solve in half an hour something that 90% of the population can answer in 60 seconds, then I would expect that person to already need assistance with setting up an email account/completing government forms online/etc, so whoever is helping them with that would also help with the captcha.

(I am also assuming that this post is only for vision-based captchas, and blind people would still take a hearing-based alternative.)

I'm not sure this is solvable, but even if it is, I'm not sure it's a good problem to work on.

Why, fundamentally, do we care if the user is a bot or a human? Is it just because bots don't buy things they see advertised, so we don't want to waste server cycles and bandwidth on them?

Whatever the reasons for wanting to distinguish bots from humans, perhaps there are better means than CAPTCHA, focused on the reasons rather than bots vs. humans.

For example, if you don't want to serve a web page to bots because you don't make any money from them, a micropayments system could allow a human to pay you $0.001/page or so - enough to cover the marginal cost of serving the page. If a bot is willing to pay that much - let them.
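To make the asymmetry concrete, here is the arithmetic at the $0.001/page price suggested above. The page counts are illustrative assumptions, not figures from the thread:

```python
from decimal import Decimal

PRICE_PER_PAGE = Decimal("0.001")  # the $0.001/page figure from the comment above


def bill_for(pages):
    """Total charge in dollars for serving `pages` pages."""
    return PRICE_PER_PAGE * pages


human_daily = bill_for(200)         # e.g. a heavy human reader in one day
bot_scrape = bill_for(10_000_000)   # e.g. a bot scraping ten million pages
print(human_daily, bot_scrape)
```

`Decimal` is used instead of `float` so fractions of a cent add up exactly.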

I get what you mean, if an AI can do things as well as the human, why block it?

I'm not really sure how that would apply in most cases, however. For example, bot swarms on social media platforms are a problem that has received a lot of attention lately. Of course, solving a captcha is not as much of a deterrent as charging, say, $8 per month, but I still think captchas could be useful as part of a bot-deterrence strategy.

Is this a useful problem to work on? I understand that for most people it probably isn't, but personally I find it fun, and it might even be possible to start a SaaS business to make money that could be spent on useful things (although this seems unlikely).

$8/month (or other small charges) can solve a lot of problems.

Note that some of the early CAPTCHA algorithms solved two problems at once - both distinguishing bots from humans, and helping improve OCR technology by harnessing human vision. (I'm not sure exactly how it worked - either you were voting on the interpretation of an image of some text, or you were training a neural network).

Such dual-use CAPTCHA seems worthwhile, if it helps crowdsource solving some other worthwhile problem (better OCR does seem worthwhile).

Are there any browser plugins that actually bypass most CAPTCHAs? Honestly, I see them less often recently, but they bug me, and I can't imagine they do much good. Actually, that's perhaps not true - they may serve the same function as most door locks - not impossible or even all that hard to pick, but a mild deterrent to trying.

Anyway, I predict failure for this quest. There is no typing or clicking sequence that the second-lowest decile of internet users can easily and reliably do that a human can't train a model to do at least as well.

The key in this is the assistance of a very smart human to design/train the 'bot. It'll be a special-purpose ML task, and the hardest part will be connecting the model to the input/output needed to work the CAPTCHA.

Building a general-purpose browser scraper/assistant that can handle NEW captchas is probably feasible, even, but it's orders of magnitude harder.

There are browser plugins, but I haven't tried any of them.

A general-purpose CAPTCHA solver could be really difficult to build, assuming people start building more diverse CAPTCHAs. All the CAPTCHAs I've seen so far have been of only a few types.

One "cheat" would be to let users use their camera and microphone to record themselves saying a specified sentence. Deepfakes can still be detected, especially if we add requirements such as "say it in a cheerful tone" and "cover part of your mouth with your finger". That's of course not a solution to the competition, but it might be a potential workaround.

The "90% of internet users can do it" is a really binding constraint. Ability to speak in a requested tone or the like cuts out a surprisingly large portion of real humans.

A lot hinges on the requirements and acceptable error rates in both directions, too. "Identify using your bank or mobile account" limits AIs pretty strictly to those with enough backing to get a bank or phone, and certainly cuts down on quantity of non-human accounts. But it's also a lot of friction for humans, and a lot will choose not to do so, or be unable to.

Isn't that exactly what the ARC challenge set out to do?

My personal idea would be some kind of 3D shape rotation task. For example, you're presented with an image of a dodecahedron with each face colored differently, and then there are 4 more colored dodecahedrons in the answer section, only one of which is a rotation of the original which you need to identify to pass the test.

The 1 minute time limit is pretty damning though, wordcels might have trouble (sorry, I couldn't resist^^).

The core problem of your request is that with this time limit you are basically forcing the human to shut off System 2 thinking while solving an AI-proof CAPTCHA, when System 2 is all the advantage we have over AI. Kind of like a Turing test where you only get 1 minute and aren't allowed to think too hard.

Really interesting idea to make it 3D. I think it might be possible to combine it with random tasks given as text, such as "find the part of the 3D object that is incorrect" or similar tasks (where the object might be a common object like a sofa, but one of the pillows is made of wood or something like that).

Well, the point here is with geometry tasks, you can generate and evaluate an arbitrarily large number of problem instances automatically. Hand-crafted common sense reasoning tasks work great in the context of a Turing test but are vulnerable to simple dataset lookup in the CAPTCHA context.
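As a sketch of that automatic generation, here is a version of the rotation puzzle using a cube with six differently colored faces instead of a dodecahedron (to keep the face bookkeeping short). The generator enumerates all 24 rotations of a random coloring, picks one as the correct answer, and builds distractors from mirror images or color swaps, rejecting any distractor that happens to be a rotation. Function names and the color list are illustrative assumptions:

```python
import random

# Face order: Up, Down, Front, Back, Left, Right.
# A move is a permutation p with new[i] = old[p[i]].
YAW = (0, 1, 4, 5, 3, 2)     # quarter turn about the vertical axis
PITCH = (2, 3, 1, 0, 4, 5)   # quarter turn about the left-right axis
MIRROR = (0, 1, 2, 3, 5, 4)  # swap Left and Right: a reflection, not a rotation


def apply(perm, coloring):
    return tuple(coloring[p] for p in perm)


def all_rotations(coloring):
    """Every orientation reachable by rotating the cube (24 for distinct colors)."""
    seen = {coloring}
    frontier = [coloring]
    while frontier:
        current = frontier.pop()
        for move in (YAW, PITCH):  # these two generate the cube's rotation group
            nxt = apply(move, current)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen


def is_rotation_of(a, b):
    return a in all_rotations(b)


def make_puzzle(rng, colors=("red", "orange", "yellow", "green", "blue", "purple"),
                n_choices=4):
    """Return (original, choices, index of the one true rotation)."""
    original = tuple(rng.sample(colors, 6))
    rotations = sorted(all_rotations(original))  # sorted for reproducibility
    answer = rng.choice(rotations)
    choices = [answer]
    while len(choices) < n_choices:
        base = rng.choice(rotations)
        if rng.random() < 0.5:
            candidate = apply(MIRROR, base)  # mirror image
        else:
            i, j = rng.sample(range(6), 2)   # swap two face colors
            swapped = list(base)
            swapped[i], swapped[j] = swapped[j], swapped[i]
            candidate = tuple(swapped)
        if not is_rotation_of(candidate, original) and candidate not in choices:
            choices.append(candidate)
    rng.shuffle(choices)
    return original, choices, choices.index(answer)


original, choices, correct = make_puzzle(random.Random(1))
```

The same approach should carry over to a dodecahedron (12 faces, 60 rotations) at the cost of a longer face-adjacency table.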

From ChatGPT:

One idea for a CAPTCHA that meets the criteria you have outlined is to use a combination of text and image recognition. For example, the CAPTCHA could present the user with a series of images and ask them to select all the images that contain a certain object or word. This could be difficult for an AI model to solve, as it would require both image and language processing capabilities.

Another idea is to use a CAPTCHA that involves solving a simple puzzle or task that requires some degree of human cognition and problem-solving. For example, the CAPTCHA could present the user with a set of shapes and ask them to rearrange them in a specific way, or it could ask the user to perform a simple arithmetic calculation. This type of CAPTCHA would be difficult for an AI model to solve as it would require the ability to understand and manipulate abstract concepts.

Another possibility is to use a CAPTCHA that involves asking the user to identify and select certain elements within a larger image, such as a specific object or text within a scene. This type of CAPTCHA would require the ability to understand and interpret the context of the image, which is a challenge for many AI models.

Overall, the key to creating a CAPTCHA that is both easy for humans to solve and difficult for AI models to crack is to design a task that requires some degree of human cognition and problem-solving, rather than relying solely on image or text recognition.

The first idea is just literally what a CAPTCHA is, but the second sounds interesting, having the user play a game or solve some puzzle (minus the arithmetic), and the third is also dumb.

I do think "image reasoning" could potentially be a viable captcha strategy.

A classic example is "find the time traveller" pictures, where modern objects give away who the time traveller is.

However, I think it shouldn't be too difficult to teach an AI to identify "odd" objects in an image, unless each image has some unique trick, in which case we would need to somehow create millions of such puzzles. Maybe it could be made harder by including "red herrings" that seem out of place but actually aren't, which might make the AI get it wrong some of the time.

If done such that nothing using current AI tech could do it, I don't think 90% of people would be able to identify a time traveler.

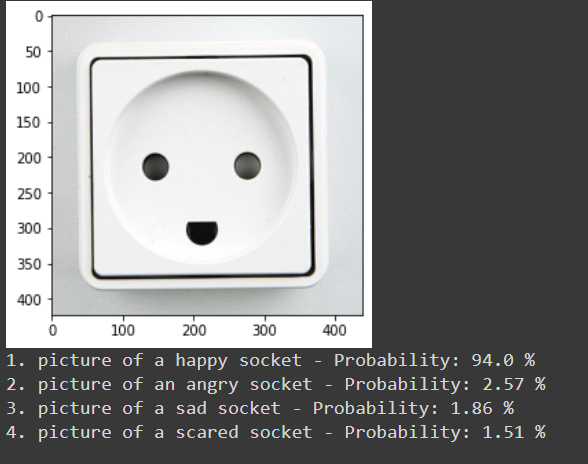

How about somehow utilizing pareidolia? Something like asking how this socket is feeling?

Or who's in this picture?

I think those are very creative ideas, and I think asking for "non-obvious" things in pictures is a good approach, since basically all really intelligent models are language models, some sort of "image reasoning" might work.

I tried the socket with the CLIP model, and it identified the feeling correctly and very confidently:

I myself can't see who the person in the bread is supposed to be, so I think an AI would struggle with it too. But on the other hand I think it shouldn't be too difficult to train a face identification AI to identify people in bread (or hidden in other ways), assuming the developer could create a training dataset from solving some captchas himself.

I'm wondering whether it's possible to pose long reasoning problems in an image. Like: "Next to the roundest object in the picture there is a dark object; what other object in the picture is most similar to it in shape?"
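Questions like this can at least be answered automatically if the generator keeps a description of every object it places. A sketch, assuming each object is stored with its area, perimeter, bounding-box aspect ratio, position, and brightness (all hypothetical fields), and using circularity 4*pi*A / P^2 (1.0 for a circle) as the measure of roundness:

```python
import math
from dataclasses import dataclass


@dataclass
class Obj:
    name: str
    x: float
    y: float
    area: float
    perimeter: float
    aspect: float      # long side / short side of the bounding box
    brightness: float  # 0 = black, 1 = white


def circularity(o):
    # 4*pi*A / P^2 is 1.0 for a perfect circle and smaller for other shapes
    return 4 * math.pi * o.area / o.perimeter ** 2


def shape_distance(a, b):
    return math.hypot(circularity(a) - circularity(b), a.aspect - b.aspect)


def answer(objects, dark_threshold=0.3):  # threshold is a hypothetical choice
    roundest = max(objects, key=circularity)
    dark = [o for o in objects if o is not roundest and o.brightness < dark_threshold]
    # the dark object "next to" the roundest one = the nearest dark object
    anchor = min(dark, key=lambda o: math.hypot(o.x - roundest.x, o.y - roundest.y))
    others = [o for o in objects if o is not anchor]
    return min(others, key=lambda o: shape_distance(o, anchor)).name


scene = [
    Obj("coin", 0, 0, math.pi, 2 * math.pi, 1.0, 0.7),  # circle, radius 1
    Obj("phone", 3, 0, 8, 12, 2.0, 0.1),                # dark 2x4 rectangle
    Obj("book", 10, 5, 18, 18, 2.0, 0.8),               # 3x6 rectangle
    Obj("tile", 6, 6, 9, 12, 1.0, 0.5),                 # 3x3 square
    Obj("wallet", 20, 20, 6, 10, 1.5, 0.2),             # dark, but far away
]
print(answer(scene))  # book
```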

I like that direction, but I fear it'll fail the "90% of internet users" criterion. I also suspect that simple image matching will find similar-enough photos with captions that have the answer.

If we don't have AGI at the level of diamondoid nanotech bacteria, it may be possible to reliably identify humans using some kind of physical smart card system requiring frequent or continuous re-authentication via biometric sensors, similar to breathalyzers / ignition interlock devices installed in the cars of DUI offenders.

Not the most practical or non-invasive method that could be deployed for online services, but it is fairly secure if you're in a lab trying to keep an AGI in a box.

As for online solutions not requiring new hardware, recently I had to take a "video selfie" on my phone matching a scan of my driver's license for id.me as part of my unemployment benefits application. I'm fairly certain this could be fooled, but that's how our government is handling it now.

'identify humans using some kind of physical smart card system requiring frequent or continuous re-authentication via biometric sensors'

This is a really fascinating concept. Maybe the captcha could work like "make a circle with your index finger" or some other strange movement, and the chip would use that data to somehow verify that the action was done. If no motion is required, I guess you could simply store the data output at one point and reuse it? Or the hacker could use their own smart chip to authenticate without actually having to do anything...
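The replay problem mentioned here (recording the sensor output once and reusing it) is what a standard nonce-based challenge-response protocol addresses. A minimal sketch, swapping in HMAC-SHA256 as the signing step and assuming the card holds a secret key the server also knows; the gesture names and the card-side function are hypothetical:

```python
import hashlib
import hmac
import secrets

GESTURES = ["circle", "zigzag", "double_tap"]  # hypothetical gesture set


def sign(device_key, nonce, gesture):
    return hmac.new(device_key, f"{nonce}:{gesture}".encode(), hashlib.sha256).hexdigest()


class Verifier:
    def __init__(self, device_key):
        self.device_key = device_key
        self.pending = {}  # nonce -> gesture that was requested

    def issue_challenge(self):
        nonce = secrets.token_hex(16)  # fresh random value per attempt
        gesture = secrets.choice(GESTURES)
        self.pending[nonce] = gesture
        return nonce, gesture

    def verify(self, nonce, tag):
        gesture = self.pending.pop(nonce, None)  # each nonce is usable only once
        if gesture is None or tag is None:
            return False
        return hmac.compare_digest(sign(self.device_key, nonce, gesture), tag)


def card_respond(device_key, nonce, requested, sensed):
    """Hypothetical card firmware: only signs if its sensors saw the gesture."""
    if sensed != requested:
        return None
    return sign(device_key, nonce, requested)
```

This only stops replays. It does not stop someone with a working card from performing the gesture on behalf of a bot, which is the second concern raised above.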

Deepfakes are still detectable using AI, especially if you do complicated motions like putting your hand on your face, or talk (which also gives us sound to work with).

How does AI do at classifying video these days?

I'm picturing something along the lines of "Pick the odd one out, from these three 10-second video clips", where the clips are two different examples from some broad genre (birthday party, tennis match, wildlife, city street, etc etc) and one from another.

I might be behind the times though, or underestimating the success rate you'd get by classifying based on, say, still images taken from one random frame of the video.

But maybe it would work if you added static noise to heavily obscure the videos, relying on the human ability to infer missing details and fill in noisy visual input.

I think "video reasoning" could be an interesting approach as you say.

Like if there are 10 frames and no single frame shows a tennis racket, but if you play them real fast, a human could infer there being a tennis racket because part of the racket is in each frame.
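The frame-splitting idea could be sketched like this: shuffle the pixels of the object and deal them round-robin across the frames, so each frame contains only about 1/n of the object while the clip as a whole contains all of it. The mask and frame count are hypothetical, and filling the rest of each frame with noise is left to the renderer:

```python
import random


def split_across_frames(object_pixels, n_frames, rng):
    """Scatter an object's pixels over n_frames frames: after shuffling,
    deal them out round-robin, so each frame holds about 1/n_frames of
    the object and every pixel appears in exactly one frame."""
    pixels = sorted(object_pixels)  # sort first so the shuffle is reproducible
    rng.shuffle(pixels)
    frames = [set() for _ in range(n_frames)]
    for i, pixel in enumerate(pixels):
        frames[i % n_frames].add(pixel)
    return frames


# Hypothetical 20x5-pixel "racket handle" mask.
racket = {(x, y) for x in range(20) for y in range(5)}
frames = split_across_frames(racket, n_frames=10, rng=random.Random(0))
print(max(len(f) for f in frames) / len(racket))  # 0.1
```

A caveat: a solver that simply overlays all the frames would likely see the whole object again, so this would probably need to be combined with motion or changing noise between frames.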

While it is hard for AI to generate very real looking hands, it is a significantly easier task for AI to classify if hands are real or AI generated.

But perhaps it's possible to make extra distortions somehow that makes it harder for both AI and humans to determine which are real...

I don't think this is true. If it was possible to distinguish them, you could also guide the diffuser to generate them correctly. And if you created a better classification model, you would probably apply it to generation first rather than solving captchas.

Please correct me if I misunderstand you.

We have to first train the model that generates the image from the captcha, before we can provide any captcha, meaning that the hacker can train their discriminator on images generated by our model.

But even if this were not the case, generating is a more difficult task than evaluating. I'm pretty sure a small, two-year-old CLIP model can detect hands generated by Stable Diffusion (probably even without any fine-tuning), even though Stable Diffusion is a more modern and larger model.

What happens when you train using GANs is that progress eventually stagnates, even if you keep the discriminator and generator "balanced" (training whichever is doing worse until the other is worse). The models then keep changing to trick, or avoid being tricked by, each other. So the limit on making better generators is not that we can't make discriminators capable of detecting their output.

I don't expect this to be possible 1.5 years from now, and I expect it's difficult even now. Stuff like "only allow users who have a long browser history and have bought things" (like reCAPTCHA does) feels like the only way out besides "go to the office in person".

Background

As artificial intelligence continues to advance, it becomes increasingly important to find ways to verify the humanity of individuals online. One common method for doing so is through the use of CAPTCHAs, which are designed to be easily solved by humans but difficult for AI models to crack. However, many CAPTCHAs can be easily defeated by readily available models, even without fine-tuning.

Currently, most CAPTCHAs look like this:

Or this:

These CAPTCHAs can be easily solved using readily available models, although they might require some fine-tuning.

For example I solved several different types of text captchas using this model out of the box: https://huggingface.co/microsoft/trocr-large-printed

And the image CAPTCHA above I solved using this model, without any fine tuning: https://huggingface.co/openai/clip-vit-large-patch14
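The post doesn't include the code used, but a sketch of how those two models can be run off the shelf through Hugging Face `transformers` might look like this. The prompt pair, the "something else" wording, and the 0.5 threshold are assumptions; the heavy imports sit inside the functions so the file loads without `transformers`/`torch`, and inputs are expected to be PIL images:

```python
def select_tiles(probabilities, threshold=0.5):
    """Indices of grid tiles whose target probability clears the threshold."""
    return [i for i, p in enumerate(probabilities) if p >= threshold]


def clip_tile_probabilities(tiles, target, model_name="openai/clip-vit-large-patch14"):
    """Zero-shot probability that each tile (a PIL image) shows `target`, e.g. "bus"."""
    import torch
    from transformers import CLIPModel, CLIPProcessor

    model = CLIPModel.from_pretrained(model_name)
    processor = CLIPProcessor.from_pretrained(model_name)
    prompts = [f"a photo of a {target}", "a photo of something else"]  # assumed prompts
    inputs = processor(text=prompts, images=tiles, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(**inputs).logits_per_image  # shape: (num_tiles, num_prompts)
    return logits.softmax(dim=1)[:, 0].tolist()


def trocr_read(image, model_name="microsoft/trocr-large-printed"):
    """Read the text in a single-line text CAPTCHA image."""
    from transformers import TrOCRProcessor, VisionEncoderDecoderModel

    processor = TrOCRProcessor.from_pretrained(model_name)
    model = VisionEncoderDecoderModel.from_pretrained(model_name)
    pixel_values = processor(images=image, return_tensors="pt").pixel_values
    generated_ids = model.generate(pixel_values)
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0]
```

A grid CAPTCHA would then be answered with something like `select_tiles(clip_tile_probabilities(tiles, "bus"))`.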

Competition

In light of this, we are launching a competition to generate new ideas for CAPTCHAs that are both easy for humans to solve and difficult for AI models to crack. To be considered a winner, an idea must meet the following criteria:

The prize for this competition is the honor of having your idea considered as a reliable CAPTCHA solution, which ChatGPT couldn't do when I prompted it. Therefore, it would ironically also serve as strong evidence that you are human. Do you have an idea that meets these criteria? If so, I strongly encourage you to write a comment with your idea.