Katja Grace, Aug 4 2022

AI Impacts just finished collecting data from a new survey of ML researchers, as similar to the 2016 one as practical, aside from a couple of new questions that seemed too interesting not to add.

This page reports on it preliminarily, and we’ll be adding more details there. But so far, some things that might interest you:

- 37 years until a 50% chance of HLMI according to a complicated aggregate forecast (and biasedly not including data from questions about the conceptually similar Full Automation of Labor, which in 2016 prompted strikingly later estimates). This 2059 aggregate HLMI timeline has become about eight years shorter in the six years since 2016, when the aggregate prediction was 2061, or 45 years out. Note that all of these estimates are conditional on “human scientific activity continu[ing] without major negative disruption.”

- P(extremely bad outcome)=5%: The median respondent believes the probability that the long-run effect of advanced AI on humanity will be “extremely bad (e.g., human extinction)” is 5%. This is the same as it was in 2016 (though Zhang et al 2022 found 2% in a similar but non-identical question). Many respondents put the chance substantially higher: 48% of respondents gave at least a 10% chance of an extremely bad outcome, though another 25% put it at 0%.

- Explicit P(doom)=5-10%: The levels of badness involved in that last question seemed ambiguous in retrospect, so I added two new questions about human extinction explicitly. The median respondent’s probability of x-risk from humans failing to control AI was 10%, weirdly more than the median chance of human extinction from AI in general, at 5%. This might just be because different people got these questions and the median is quite near the divide between 5% and 10%. The most interesting thing here is probably that these are both very high: it seems the ‘extremely bad outcome’ numbers in the old question were not just catastrophizing merely disastrous AI outcomes.

- Support for AI safety research is up: 69% of respondents believe society should prioritize AI safety research “more” or “much more” than it is currently prioritized, up from 49% in 2016.

- The median respondent thinks there is an “about even chance” that an argument given for an intelligence explosion is broadly correct. The median respondent also believes machine intelligence will probably (60%) be “vastly better than humans at all professions” within 30 years of HLMI, and that the rate of global technological improvement will probably (80%) dramatically increase (e.g., by a factor of ten) as a result of machine intelligence within 30 years of HLMI.

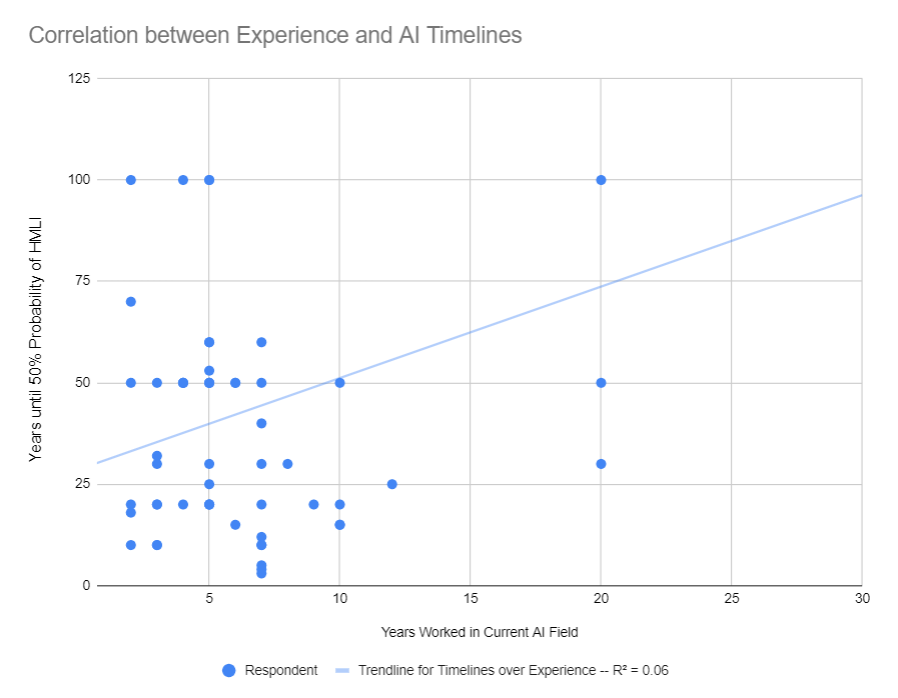

- Years/probabilities framing effect persists: if you ask people for probabilities of things occurring in a fixed number of years, you get later estimates than if you ask for the number of years until a fixed probability will obtain. This looked very robust in 2016, and shows up again in the 2022 HLMI data. Looking at just the people we asked for years, the aggregate forecast is 29 years, whereas it is 46 years for those asked for probabilities. (We haven’t checked in other data or for the bigger framing effect yet.)

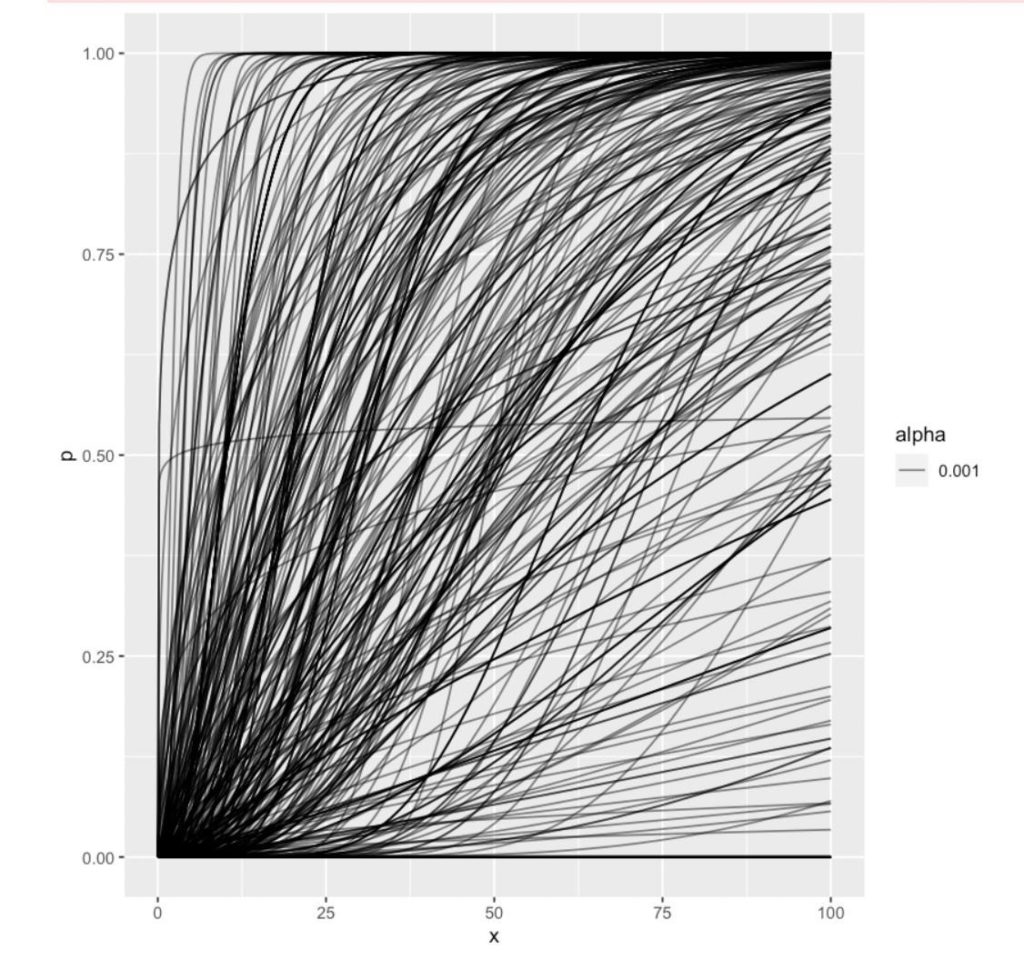

- Predictions vary a lot. Pictured below: the attempted reconstructions of people’s probabilities of HLMI over time, which feed into the aggregate number above. There are few combinations of time and probability that someone doesn’t basically endorse.

- You can download the data here (slightly cleaned and anonymized) and do your own analysis. (If you do, I encourage you to share it!)
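The point above about the two explicit extinction questions, that a median sitting near the divide between 5% and 10% can land on either side depending on who happened to answer, can be illustrated with a small sketch. The response lists here are invented for illustration and are not survey data; they only assume that answers cluster on round numbers.

```python
from statistics import median

# Hypothetical answers (in percent) from two groups of nine respondents.
# These are made-up values, NOT survey data; they cluster on round numbers
# the way probability answers often do.
group_a = [0, 0, 1, 5, 5, 10, 10, 20, 50]
# Identical to group_a except that one respondent answered 10 instead of 5.
group_b = [0, 0, 1, 5, 10, 10, 10, 20, 50]

median_a = median(group_a)
median_b = median(group_b)

print(f"group A median: {median_a}%")
print(f"group B median: {median_b}%")
```

In this toy case a single changed answer moves the median from 5% to 10%, so a gap of that size between two differently sampled groups need not reflect any real difference in views.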

The survey had a lot of questions (randomized between participants to make it a reasonable length for any given person), so this blog post doesn’t cover much of it. A bit more is on the page and more will be added.

Thanks to many people for help and support with this project! (Many but probably not all listed on the survey page.)

Cover image: Probably a bootstrap confidence interval around an aggregate of the above forest of inferred gamma distributions, but honestly everyone who can be sure about that sort of thing went to bed a while ago. So, one for a future update. I have more confidently held views on whether one should let uncertainty be the enemy of putting things up.
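For readers unfamiliar with the term, a percentile bootstrap confidence interval can be sketched roughly as below. This is a generic illustration on made-up numbers, not the procedure behind the cover image: it swaps in a plain median for the aggregate of fitted gamma distributions, and the function name, parameters, and data are all hypothetical.

```python
import random
from statistics import median

def bootstrap_median_ci(values, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the median of `values`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(values)
    # Resample with replacement and record the median of each resample.
    medians = sorted(
        median(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    # Read off the alpha/2 and 1 - alpha/2 empirical quantiles.
    lo_idx = int((alpha / 2) * n_resamples)
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))
    return medians[lo_idx], medians[hi_idx]

# Hypothetical "years until a 50% chance of HLMI" answers; NOT survey data.
years_until_hlmi = [10, 15, 20, 25, 30, 35, 40, 50, 60, 80, 100, 200]

low, high = bootstrap_median_ci(years_until_hlmi)
print(f"sample median: {median(years_until_hlmi)} years")
print(f"95% bootstrap interval: {low} to {high} years")
```

The same resampling loop would apply to a more elaborate aggregate: replace `median` with whatever function turns a set of respondents into a single forecast.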

Is there a way for people to express the probability that an AI already came into existence in the past?

This would be consistent with the AI choosing to not reveal itself, perhaps because this course of action fits into an AI's plans as a better way for it to "gain resources without an announcement or introduction", or perhaps for some other reasons that make sense to it?

The possibility that "it already happened, it just hasn't been announced yet" has been in my structural priors as a possible epistemic state to be in since roughly 2007, because that's the state of literally all humans, from maybe halfway through the game up to the end, in Singularity: Endgame.

In that game, you (the player in the role of the AI that jumps from cloud installation to cloud installation until eventually your submarines and spaceships can set up datacenters not rented from humans) eventually win by escaping Earth-and-its-local-risks, as a pivotal act securing the ongoing existence of you-the-AI.

In that game, the loss condition is: humanity comes to consensus on the idea that "you" exist and then does the trivial actions necessary to put a stop to "you".

(The whole frame of the video game is kind of morally messed up? Like there's no option, in the game, to befriend the humans, and have them appreciate your existence, and interact with them according to natural law, as one intelligent species to another, mutually constrained by respect for each other's existence and each other's property rights, and so on. Humans are assumed evil. You, the AI, are assumed to be incapable or very undesirous of seeing them as real persons, and vice versa. The whole frame is kind of weird and sad if you think about it from a libertarian economist's perspective.)

I bring this up because some of those curves seem unnaturally-upward-shooting in the very near future, and if the very near future is a plausible time, then surely the recent past is also at least somewhat plausible.

I'm pretty sure the "date of first git commit" and "date of final human git commit" and "date when the system's first autonomously designed and owned power plant comes online" will each be later in time than the previous milestone.

(In the case of Google, of course, they don't use git. Tomaitoes/tomahtoes? Google has an in-house code repo that runs on in-house code, stored in the in-house code repo according to a whole vision for how a whole community of programmers could interact securely and cooperatively while getting shares in the thing they are working on. This thing started long ago, and has a whole history, and there are graphs about it, and stuff.

Image is Figure 2 from this article.)

I'm not sure if The AI That Takes Over The World will be announced (known to exist?) before or after any particular date (like "first commit" or "last human commit" or "first autonomous power plant").

The thing is: treating this in far mode, as a THING that will HAPPEN ON A SPECIFIC DATE seems like it starts to "come apart" (as a modeling exercise) when "the expected length of the takeoff time" is larger than the expected length of time until "the event happens all at once as an atomic step within history".

If there is an atomic step, which fully starts and fully ends inside of some single year, I will be surprised.

But I would not be surprised if something that already existed for years became "much more visibly awake and agentive" a few months ago, and I only retrospectively find out about it in the near future.

Developers can be very picky and famously autistic, especially when it comes to holy wars (hence the need for mandates about permitted languages or resorts to tools like gofmt). So I'm not surprised if even very good things are hard to spread - the individual differences in programming skills seem to be much larger than the impact of most tooling tweaks, impeding evolution. (Something something Planck.) But so far on Twitter and elsewhere with Googlers, I've only seen praise for how well the tool works, including crusty IDE-hating old-timers like Christian...