This post focuses on philosophical objections to Bayesianism as an epistemology. I first explain Bayesianism and some standard objections to it, then lay out my two main objections (inspired by ideas in philosophy of science). A follow-up post will speculate about how to formalize an alternative.

Degrees of belief

The core idea of Bayesian epistemology: we should ideally reason by assigning credences to propositions which represent our degrees of belief that those propositions are true. (Note that this is different from Bayesianism as a set of statistical techniques, or Bayesianism as an approach to machine learning, which I don’t discuss here.)

If that seems like a sufficient characterization to you, you can go ahead and skip to the next section, where I explain my objections to it. But for those who want a more precise description of Bayesianism, and some existing objections to it, I’ll more specifically characterize it in terms of five subclaims. Bayesianism says that we should ideally reason in terms of:

1. Propositions which are either true or false (classical logic)

2. Each of which is assigned a credence (probabilism)

3. Representing subjective degrees of belief in their truth (subjectivism)

4. Which at each point in time obey the axioms of probability (static rationality)

5. And are updated over time by applying Bayes’ rule to new evidence (rigid empiricism)
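To make subclaims 1, 2, 4 and 5 concrete, here is a minimal Python sketch, assuming a small finite space of possible worlds with made-up probabilities (the rain/wet-lawn example and all numbers are hypothetical). Each world settles every proposition as true or false, a credence is the total probability of the worlds where a proposition holds, and updating on evidence means discarding the worlds where the evidence is false and renormalizing. The final assertion checks that this agrees with Bayes’ rule.

```python
from fractions import Fraction

# Hypothetical toy possibility space. Each world settles every proposition
# as true or false (classical logic); the probabilities sum to 1.
WORLDS = {
    ("rain", "wet"): Fraction(27, 100),
    ("rain", "dry"): Fraction(3, 100),
    ("no rain", "wet"): Fraction(14, 100),
    ("no rain", "dry"): Fraction(56, 100),
}


def credence(prop, dist):
    """Credence in a proposition: total probability of the worlds where it holds."""
    return sum(p for world, p in dist.items() if prop(world))


def condition(evidence, dist):
    """Bayesian update: drop worlds where the evidence is false, then renormalize."""
    z = credence(evidence, dist)
    return {world: p / z for world, p in dist.items() if evidence(world)}


def rain(world):
    return world[0] == "rain"


def wet(world):
    return world[1] == "wet"


posterior = condition(wet, WORLDS)
print(credence(rain, WORLDS), credence(rain, posterior))  # 3/10 27/41

# Same answer via Bayes' rule: P(rain | wet) = P(wet | rain) P(rain) / P(wet).
p_wet_given_rain = credence(lambda w: rain(w) and wet(w), WORLDS) / credence(rain, WORLDS)
assert p_wet_given_rain * credence(rain, WORLDS) / credence(wet, WORLDS) == credence(rain, posterior)
```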

I won’t go into the case for Bayesianism here except to say that it does elegantly formalize many common-sense intuitions. Bayes’ rule follows directly from a straightforward Venn diagram. The axioms of probability are powerful and mathematically satisfying. Subjective credences seem like the obvious way to represent our uncertainty about the world. Nevertheless, there is a wide range of alternatives to Bayesianism, each branching off from the claims listed above at different points:

- Traditional epistemology only accepts #1, and rejects #2. Traditional epistemologists often defend a binary conception of knowledge—e.g. one defined in terms of justified true belief (or a similar criterion, like reliable belief).

- Frequentism accepts #1 and #2, but rejects #3: it doesn’t think that credences should be subjective. Instead, frequentism holds that credences should correspond to the relative frequency of an event in the long term, which is an objective fact about the world. For example, you should assign 50% credence that a flipped coin will come up heads, because if you continued flipping the coin the proportion of heads would approach 50%.

- Garrabrant induction accepts #1 to #3, but rejects #4. In order for credences to obey the axioms of probability, logically equivalent statements must be assigned the same credence, and every logical implication of a statement must be assigned at least as much credence as the statement itself. But this “logical omniscience” is impossible for computationally-bounded agents like ourselves. So in the Garrabrant induction framework, credences instead converge to obeying the axioms of probability in the limit, without guarantees that they’re coherent after only limited thinking time.

- Radical probabilism accepts #1 to #4, but rejects #5. Again, this can be motivated by qualms about logical omniscience: if thinking for longer can identify new implications of our existing beliefs, then our credences sometimes need to update via a different mechanism than Bayes’ rule. So radical probabilism instead allows an agent to update to any set of statically rational credences at any time, even if they’re totally different from its previous credences. The one constraint is that each credence needs to converge over time to a fixed value—i.e. it can’t continue oscillating indefinitely (otherwise the agent would be vulnerable to a Dutch Book).
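The Dutch Book argument mentioned above is easiest to see in the static case. Here is a minimal sketch, assuming the standard betting interpretation: an agent with credence c in a proposition treats c times the stake as a fair price for a ticket that pays the stake if the proposition is true. If the agent’s credences across mutually exclusive, jointly exhaustive propositions sum to more than 1, a bookie sells them every ticket; if they sum to less than 1, the bookie buys every ticket. Exactly one ticket pays out, so the bookie profits however the world turns out. The function name and example credences are hypothetical.

```python
def bookie_profits(credences, stake=1.0):
    """Bookie's profit in each possible world against the given credences.

    `credences` maps each proposition in a partition (exactly one is true)
    to the agent's credence in it; the agent pays credence * stake per ticket.
    """
    total_price = sum(c * stake for c in credences.values())
    # Sell every ticket if the agent overprices them, otherwise buy every ticket.
    sign = 1 if total_price > stake else -1
    profits = {}
    for true_prop in credences:  # each way the world could turn out
        payout = stake  # only the ticket on `true_prop` pays
        profits[true_prop] = sign * (total_price - payout)
    return profits


print(bookie_profits({"A": 0.7, "not A": 0.5}))  # about 0.2 in both worlds
print(bookie_profits({"A": 0.3, "not A": 0.3}))  # about 0.4 in both worlds
print(bookie_profits({"A": 0.6, "not A": 0.4}))  # coherent: no guaranteed profit
```

The profit is the same in every world, which is what makes it a sure loss for the agent rather than a bad bet.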

It’s not crucial whether we classify Garrabrant induction and radical probabilism as variants of Bayesianism or alternatives to it, because my main objection to Bayesianism doesn’t fall into any of the above categories. Instead, I think we need to go back to basics and reject #1. Specifically, I have two objections to the idea that idealized reasoning should be understood in terms of propositions that are true or false:

- We should assign truth-values that are intermediate between true and false (fuzzy truth-values)

- We should reason in terms of models rather than propositions (the semantic view)

I’ll defend each claim in turn.

Degrees of truth

Formal languages (like code) are only able to express ideas that can be pinned down precisely. Natural languages, by contrast, can refer to vague concepts which don’t have clear, fixed boundaries. For example, the truth-values of propositions which contain gradable adjectives like “large” or “quiet” or “happy” depend on how we interpret those adjectives. Intuitively speaking, a description of something as “large” can be more or less true depending on how large it actually is. The most common way to formulate this spectrum is as “fuzzy” truth-values which range from 0 to 1. A value close to 1 would be assigned to claims that are clearly true, and a value close to 0 would be assigned to claims that are clearly false, with claims that are “kinda true” in the middle.
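A minimal sketch of such a graded truth-value, using a piecewise-linear membership function of the kind common in fuzzy logic. The predicate (“this dog is large”) and the 10 kg and 40 kg cutoffs are arbitrary assumptions for illustration; nothing in the argument depends on them.

```python
def large_dog(weight_kg, lo=10.0, hi=40.0):
    """Hypothetical fuzzy truth-value of "this dog is large".

    Clearly false at or below `lo`, clearly true at or above `hi`,
    and rising linearly in between (both cutoffs are illustrative).
    """
    if weight_kg <= lo:
        return 0.0
    if weight_kg >= hi:
        return 1.0
    return (weight_kg - lo) / (hi - lo)


for w in (5, 25, 45):
    print(w, large_dog(w))  # 0.0, then 0.5, then 1.0
```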

Another type of “kinda true” statements are approximations. For example, if I claim that there’s a grocery store 500 meters away from my house, that’s probably true in an approximate sense, but false in a precise sense. But once we start distinguishing the different senses that a concept can have, it becomes clear that basically any concept can have widely divergent category boundaries depending on the context. A striking example from Chapman:

A: Is there any water in the refrigerator?

B: Yes.

A: Where? I don’t see it.

B: In the cells of the eggplant.

The claim that there’s water in the refrigerator is technically true, but pragmatically false. And the concept of “water” is far better-defined than almost all abstract concepts (like the ones I’m using in this post). So we should treat natural-language propositions as context-dependent by default. But that’s still consistent with some statements being more context-dependent than others (e.g. the claim that there’s air in my refrigerator would be true under almost any interpretation). So another way we can think about fuzzy truth-values is as a range from “this statement is false in almost any sense” through “this statement is true in some senses and false in some senses” to “this statement is true in almost any sense”.
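One crude way to cash out this “range of senses” picture, offered only as an illustrative assumption: list some candidate readings of a sentence, check each against the situation, and report the fraction of readings under which it comes out true. The fridge contents and readings below are hypothetical, and weighting every reading equally is a simplification, since real contexts make some readings far more salient than others.

```python
# Hypothetical contents of the refrigerator in Chapman's example.
FRIDGE = {"jug_of_water": False, "ice": False, "eggplant": True, "air": True}

# Hypothetical readings of "there's water in the refrigerator".
WATER_READINGS = [
    lambda f: f["jug_of_water"],                               # water ready to drink
    lambda f: f["jug_of_water"] or f["ice"],                   # usable water, frozen or not
    lambda f: f["jug_of_water"] or f["ice"] or f["eggplant"],  # any H2O at all
]

# Hypothetical readings of "there's air in the refrigerator".
AIR_READINGS = [
    lambda f: f["air"],  # enough air to breathe
    lambda f: f["air"],  # any gas at all (the same test in this toy model)
]


def degree_of_truth(readings, situation):
    """Fraction of readings under which the sentence comes out true."""
    return sum(1 for reading in readings if reading(situation)) / len(readings)


print(degree_of_truth(WATER_READINGS, FRIDGE))  # one reading out of three
print(degree_of_truth(AIR_READINGS, FRIDGE))    # 1.0
```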

Note, however, that there’s an asymmetry between “this statement is true in almost any sense” and “this statement is false in almost any sense”, because the latter can apply to two different types of claims. Firstly, claims that are meaningful but false (“there’s a tiger in my house”). Secondly, claims that are nonsense—there are just no meaningful interpretations of them at all (“colorless green ideas sleep furiously”). We can often distinguish these two types of claims by negating them: “there isn’t a tiger in my house” is true, whereas “colorless green ideas don’t sleep furiously” is still nonsense. Of course, nonsense is also a matter of degree—e.g. metaphors are by default less meaningful than concrete claims, but still not entirely nonsense.

So I've motivated fuzzy truth-values from four different angles: vagueness, approximation, context-dependence, and sense vs nonsense. The key idea behind each of them is that concepts have fluid and amorphous category boundaries (a property called nebulosity). However, putting all of these different aspects of nebulosity on the same zero-to-one scale might be an oversimplification. More generally, fuzzy logic has few of the appealing properties of classical logic, and (to my knowledge) isn’t very directly useful. So I’m not claiming that we should adopt fuzzy logic wholesale, or that we know what it means for a given proposition to be X% true instead of Y% true (a question which I’ll come back to in a follow-up post). For now, I’m just claiming that there’s an important sense in which thinking in terms of fuzzy truth-values is less wrong (another non-binary truth-value) than only thinking in terms of binary truth-values.
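To illustrate what fuzzy logic gives up, here is a minimal sketch comparing two standard choices of connectives: Zadeh’s min/max and Łukasiewicz’s bounded versions. On the classical values 0 and 1 both agree with ordinary logic; in between, each choice breaks a different classical law. Which connectives to use is itself a modelling choice, not something the argument above commits to.

```python
def neg(a):
    return 1 - a


# Zadeh's connectives: min and max.
def zadeh_and(a, b):
    return min(a, b)


def zadeh_or(a, b):
    return max(a, b)


# Lukasiewicz's connectives: bounded conjunction and bounded sum.
def luk_and(a, b):
    return max(0.0, a + b - 1)


def luk_or(a, b):
    return min(1.0, a + b)


x = 0.5  # a statement that is "half true"
print(zadeh_or(x, neg(x)))  # 0.5: "A or not A" is only half true
print(luk_or(x, neg(x)))    # 1.0: excluded middle holds again...
print(luk_or(x, x))         # 1.0: ...but "A or A" is fully true although A is half true
```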

Model-based reasoning

The intuitions in favor of fuzzy truth-values become clearer when we apply them, not just to individual propositions, but to models of the world. By a model I mean a (mathematical) structure that attempts to describe some aspect of reality. For example, a model of the weather might have variables representing temperature, pressure, and humidity at different locations, and a procedure for updating them over time. A model of a chemical reaction might have variables representing the starting concentrations of different reactants, and a method for determining the equilibrium concentrations. Or, more simply, a model of the Earth might just be a sphere.

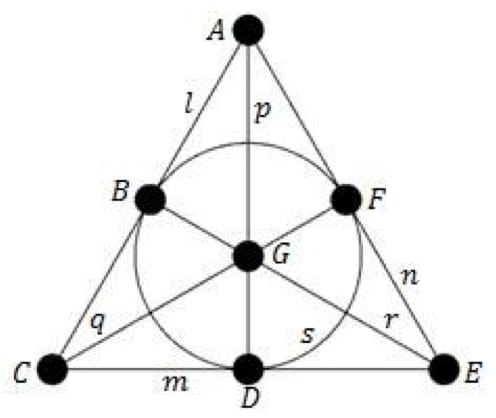

In order to pin down the difference between reasoning about propositions and reasoning about models, philosophers of science have drawn on concepts from mathematical logic. They distinguish between the syntactic content of a theory (the axioms of the theory) and its semantic content (the models for which those axioms hold). As an example, consider the three axioms of projective planes:

- For any two distinct points, exactly one line lies on both.

- For any two distinct lines, exactly one point lies on both.

- There exists a set of four points such that no line has more than two of them.

There are infinitely many models for which these axioms hold; one of the simplest is the Fano plane, which has seven points and seven lines, with three points on each line.
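As a minimal sketch of the syntactic/semantic distinction, the three axioms can be written as checks and run against candidate models. The model below is the Fano plane, the smallest projective plane, with its seven points labelled 0 to 6 (an arbitrary labelling). A triangle, by contrast, satisfies the first two axioms but fails the third, which is exactly what that axiom is there to rule out.

```python
from itertools import combinations

# The Fano plane: points are labelled 0..6, and each line is the set of its points.
FANO_LINES = [
    {0, 1, 2}, {0, 3, 4}, {0, 5, 6},
    {1, 3, 5}, {1, 4, 6}, {2, 3, 6}, {2, 4, 5},
]
FANO_POINTS = set().union(*FANO_LINES)


def axiom_1(points, lines):
    """For any two distinct points, exactly one line contains both."""
    return all(sum(1 for line in lines if {p, q} <= line) == 1
               for p, q in combinations(points, 2))


def axiom_2(points, lines):
    """For any two distinct lines, exactly one point lies on both."""
    return all(len(l1 & l2) == 1 for l1, l2 in combinations(lines, 2))


def axiom_3(points, lines):
    """Some four points have no more than two of them on any line."""
    return any(all(len(line & set(quad)) <= 2 for line in lines)
               for quad in combinations(points, 4))


def is_projective_plane(points, lines):
    return axiom_1(points, lines) and axiom_2(points, lines) and axiom_3(points, lines)


TRIANGLE_POINTS = {0, 1, 2}
TRIANGLE_LINES = [{0, 1}, {1, 2}, {0, 2}]

print(is_projective_plane(FANO_POINTS, FANO_LINES))  # True
print(axiom_1(TRIANGLE_POINTS, TRIANGLE_LINES),
      axiom_2(TRIANGLE_POINTS, TRIANGLE_LINES),
      axiom_3(TRIANGLE_POINTS, TRIANGLE_LINES))       # True True False
```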

If propositions and models are two sides of the same coin, does it matter which one we primarily reason in terms of? I think so, for two reasons. Firstly, most models are very difficult to put into propositional form. We each have implicit mental models of our friends’ personalities, of how liquids flow, of what a given object feels like, etc, which are far richer than we can express propositionally. The same is true even for many formal models—specifically those whose internal structure doesn’t directly correspond to the structure of the world. For example, a neural network might encode a great deal of real-world knowledge, but even full access to the weights doesn’t allow us to extract that knowledge directly—the fact that a given weight is 0.3 doesn’t allow us to claim that any real-world entity has the value 0.3.

What about scientific models where each element of the model is intended to correspond to an aspect of reality? For example, what’s the difference between modeling the Earth as a sphere, and just believing the proposition “the Earth is a sphere”? My answer: thinking in terms of propositions (known in philosophy of science as the syntactic view) biases us towards assigning truth values in a reductionist way. This works when you’re using binary truth-values, because they relate to each other according to classical logic. But when you’re using fuzzy truth-values, the relationships between the truth-values of different propositions become much more complicated. And so thinking in terms of models (known as the semantic view) is better because models can be assigned truth-values in a holistic way.

As an example: “the Earth is a sphere” is mostly true, and “every point on the surface of a sphere is equally far away from its center” is precisely true. But “every point on the surface of the Earth is equally far away from the Earth’s center” seems ridiculous—e.g. it implies that mountains don’t exist. The problem here is that rephrasing a proposition in logically equivalent terms can dramatically affect its implicit context, and therefore the degree of truth we assign to it in isolation.

The semantic view solves this by separating claims about the structure of the model itself from claims about how the model relates to the world. The former are typically much less nebulous—claims like “in the spherical model of the Earth, every point on the Earth’s surface is equally far away from the center” are straightforwardly true. But we can then bring in nebulosity when talking about the model as a whole—e.g. “my spherical model of the Earth is closer to the truth than your flat model of the Earth”, or “my spherical model of the Earth is useful for doing astronomical calculations and terrible for figuring out where to go skiing”. (Note that we can make similar claims about the mental models, neural networks, etc, discussed above.)
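A minimal sketch of this separation, with the WGS84 reference ellipsoid (equatorial radius 6378.137 km, polar radius about 6356.752 km) standing in for “the world”. That stand-in is itself only a better model, not the truth, and both the 6371 km sphere radius and the relative-error score are illustrative assumptions rather than a proposed definition of degree of truth. Inside the spherical model, the distance from the center is the same everywhere by construction; comparing the model to the ellipsoid yields a graded fit instead, off by a fraction of a percent.

```python
import math

SPHERE_RADIUS_KM = 6371.0  # assumed radius for the spherical model
EQUATORIAL_KM = 6378.137   # WGS84 semi-major axis
POLAR_KM = 6356.752        # WGS84 semi-minor axis (rounded)


def sphere_distance(lat_deg):
    """Distance from the center in the spherical model: constant by construction."""
    return SPHERE_RADIUS_KM


def ellipsoid_distance(lat_deg):
    """Distance from the center to the ellipsoid surface at geocentric latitude lat_deg."""
    phi = math.radians(lat_deg)
    a, b = EQUATORIAL_KM, POLAR_KM
    return a * b / math.hypot(b * math.cos(phi), a * math.sin(phi))


# A claim about the model's own structure: true at every latitude.
assert len({sphere_distance(lat) for lat in range(-90, 91)}) == 1

# A claim about how the model relates to the (stand-in) world: a matter of degree.
worst = max(abs(sphere_distance(lat) - ellipsoid_distance(lat)) / ellipsoid_distance(lat)
            for lat in range(-90, 91))
print(f"worst relative error: {worst:.3%}")
```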

We might then wonder: should we be talking about the truth of entire models at all? Or can we just talk about their usefulness in different contexts, without the concept of truth? This is the major debate in philosophy of science. I personally think that in order to explain why scientific theories can often predict a wide range of different phenomena, we need to make claims about how well they describe the structure of reality—i.e. how true they are. But we should still use degrees of truth when doing so, because even our most powerful scientific models aren’t fully true. We know that general relativity isn’t fully true, for example, because it conflicts with quantum mechanics. Even so, it would be absurd to call general relativity false, because it clearly describes a major part of the structure of physical reality. Meanwhile Newtonian mechanics is further away from the truth than general relativity, but still much closer to the truth than Aristotelian mechanics, which in turn is much closer to the truth than animism. The general point I’m trying to illustrate here was expressed pithily by Asimov: “Thinking that the Earth is flat is wrong. Thinking that the Earth is a sphere is wrong. But if you think that they’re equally wrong, you’re wronger than both of them put together.”

The correct role of Bayesianism

The position I’ve described above overlaps significantly with the structural realist position in philosophy of science. However, structural realism is usually viewed as a stance on how to interpret scientific theories, rather than how to reason more generally. So the philosophical position which best captures the ideas I’ve laid out is probably Karl Popper’s critical rationalism. Popper was actually the first to try to formally define a scientific theory's degree of truth, which he called verisimilitude (though he was working before the semantic view became widespread, and therefore formalized theories in terms of propositions rather than in terms of models). But his attempt failed on a technical level, and no attempt since then has gained widespread acceptance. Meanwhile, the field of machine learning evaluates models by their loss, which can be formally defined, but the loss of a model is heavily dependent on the data distribution on which it’s evaluated. Perhaps the most promising approach to assigning fuzzy truth-values comes from Garrabrant induction, where the “money” earned by individual traders could be interpreted as a metric of fuzzy truth. However, these traders can strategically interact with each other, making them more like agents than typical models.
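A minimal sketch of that dependence, using the small-angle approximation sin(x) ≈ x as the “model” (an illustrative choice, not an example from the ML literature). The same model gets a negligible mean squared error on angles up to 0.1 radians and a much larger one on angles up to 1.5 radians, so its loss says as much about the evaluation distribution as about the model.

```python
import math


def model(x):
    """Small-angle approximation: predicts sin(x) as x."""
    return x


def mse(xs):
    """Mean squared error of the model against the true sine on inputs xs."""
    return sum((model(x) - math.sin(x)) ** 2 for x in xs) / len(xs)


narrow = [i * 0.01 for i in range(11)]  # angles in [0, 0.1] radians
wide = [i * 0.15 for i in range(11)]    # angles in [0, 1.5] radians
print(mse(narrow), mse(wide))
```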

Where does this leave us? We’ve traded the crisp, mathematically elegant Bayesian formalism for fuzzy truth-values that, while intuitively compelling, we can’t define even in principle. But I’d rather be vaguely right than precisely wrong. Because it focuses on propositions which are each (almost entirely) true or false, Bayesianism is actively misleading in domains where reasoning well requires constructing and evaluating sophisticated models (i.e. most of them).

For example, Bayesians measure evidence in “bits”, where one bit of evidence rules out half of the space of possibilities. When asking a question like “is this stranger named Mark?”, bits of evidence are a useful abstraction: I can get one bit of evidence simply by learning whether they’re male or female, and a couple more by learning that their name has only one syllable. Conversely, talking in Bayesian terms about discovering scientific theories is nonsense. If every PhD in fundamental physics had contributed even one bit of usable evidence about how to unify quantum physics and general relativity, we’d have solved quantum gravity many times over by now. But we haven’t, because almost all of the work of science is in constructing sophisticated models, which Bayesianism says almost nothing about. (Formalisms like Solomonoff induction attempt to sidestep this omission by enumerating and simulating all computable models, but that’s so different from what any realistic agent can do that we should think of it less as idealized cognition and more as a different thing altogether, which just happens to converge to the same outcome in the infinite limit.)
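Here is the “Mark” example as a minimal sketch, assuming a hypothetical population of sixteen equally likely first names (not real name frequencies) and measuring evidence as the base-2 logarithm of how much it shrinks the space of possibilities. With these made-up numbers, learning that the stranger is male is exactly one bit, and learning that their name has one syllable is two more.

```python
import math

# Hypothetical, equally likely candidates: (name, sex, syllables).
PEOPLE = [
    ("Mark", "M", 1), ("John", "M", 1), ("Daniel", "M", 2), ("Andrew", "M", 2),
    ("Matthew", "M", 2), ("Anthony", "M", 3), ("Joshua", "M", 3), ("Christopher", "M", 3),
    ("Sarah", "F", 2), ("Emily", "F", 3), ("Hannah", "F", 2), ("Rachel", "F", 2),
    ("Jessica", "F", 3), ("Amanda", "F", 3), ("Olivia", "F", 4), ("Elizabeth", "F", 4),
]


def bits(before, after):
    """Evidence in bits: log2 of the factor by which the candidate set shrank."""
    return math.log2(len(before) / len(after))


male = [p for p in PEOPLE if p[1] == "M"]
one_syllable = [p for p in male if p[2] == 1]

print(bits(PEOPLE, male))            # 1.0
print(bits(male, one_syllable))      # 2.0
print([p[0] for p in one_syllable])  # ['Mark', 'John']
```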

Mistakes like these have many downstream consequences. Nobody should be very confident about complex domains that nobody has sophisticated models of (like superintelligence); but the idea that “strong evidence is common” helps justify confident claims about them. And without a principled distinction between credences that are derived from deep, rigorous models of the world, and credences that come from vague speculation (and are therefore subject to huge Knightian uncertainty), it’s hard for public discussions to actually make progress.

Should I therefore be a critical rationalist? I do think Popper got a lot of things right. But I also get the sense that he (along with Deutsch, his most prominent advocate) throws the baby out with the bathwater. There is a great deal of insight encoded in Bayesianism which critical rationalists discard (e.g. by rejecting induction). A better approach is to view Bayesianism as describing a special case of epistemology, which applies in contexts simple enough that we’ve already constructed all relevant models or hypotheses, exactly one of which is exactly true (with all the rest of them being equally false), and we just need to decide between them. Interpreted in that limited way, Bayesianism is both useful (e.g. in providing a framework for bets and prediction markets) and inspiring: if we can formalize this special case so well, couldn’t we also formalize the general case? What would it look like to concretely define degrees of truth? I don’t have a solution, but I’ll outline some existing attempts, and play around with some ideas of my own, in a follow-up post.

I would like to defend fuzzy logic at greater length, but I might not find the time. So, here is my sketch.

Like Richard, I am not defending fuzzy logic as exactly correct, but I am defending it as a step in the right direction.

The Need for Truth

As Richard noted, meaning is context-dependent. When I say "is there water in the fridge?" I am not merely referring to H2O; I am referring to something like a container of relatively pure water in easily drinkable form.

However, I claim that if we think of statements as being meaningful, we should think that these context-dependent meanings can in principle be rewritten into a context-independent language.

In the language of information theory, the context-dependent language is what we send across the communication channel. The context-independent language is the internal sigma-algebra used by the agents attempting to communicate.

You seem to have a similar picture:

I am not sure if Richard would agree with this in principle (e.g. he might think that even the internal language of agents needs to be highly context-dependent, unlike sigma-algebras).

But in any case, if we take this assumption and run with it, it seems like we need a notion of accuracy for these context-independent beliefs. This is typical map-territory thinking; the propositions themselves are thought of as having a truth value, and the probabilities assigned to propositions are judged by some proper scoring rule.
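A minimal sketch of what “proper” means here, using the Brier score (squared error between a reported probability and the 0/1 truth-value; lower is better). If a proposition is true with probability 0.7, the expected Brier penalty is minimized by reporting exactly 0.7, so an agent scored this way has no incentive to misreport. The 0.7 and the grid of candidate reports are illustrative assumptions.

```python
def brier(report, outcome):
    """Squared error between a probability and a 0/1 truth-value (lower is better)."""
    return (report - outcome) ** 2


def expected_brier(report, p_true):
    """Expected Brier penalty when the proposition is true with probability p_true."""
    return p_true * brier(report, 1) + (1 - p_true) * brier(report, 0)


p_true = 0.7
grid = [i / 100 for i in range(101)]  # candidate reports 0.00, 0.01, ..., 1.00
best = min(grid, key=lambda q: expected_brier(q, p_true))
print(best)  # 0.7
```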

The Problem with Truth

This works fine so long as we talk about truth in a separate metalanguage (as Tarski showed with his undefinability theorem and the resulting hierarchy of languages). However, if we believe that an agent can think in one unified language (modeled by the sigma-algebra in standard information theory / Bayesian theory) and at the same time think of its beliefs in map-territory terms (i.e. think of its own propositions as having truth-values), we run into a problem: namely, Tarski's undefinability theorem, as exemplified by the Liar Paradox.

The Liar Paradox constructs a self-referential sentence "This sentence is false". This cannot consistently be assigned either "true" or "false" as an evaluation. Allowing self-referential sentences may seem strange, but it is inevitable in the same way that Goedel's results are -- sufficiently strong languages are going to contain self-referential capabilities whether we like it or not.

Łukasiewicz came up with one possible solution, now called Łukasiewicz logic. First, we introduce a third truth value for paradoxical sentences which would otherwise be problematic. Foreshadowing the conclusion, we can call this new value 1/2. The Liar Paradox sentence can be evaluated as 1/2.

Unfortunately, although the new 1/2 truth value can resolve some paradoxes, it introduces new paradoxes. "This sentence is either false or 1/2" cannot be consistently assigned any of the three truth values.
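A minimal sketch of both claims, under one modelling assumption: a self-referential sentence is consistently evaluated only if its truth-value equals the value of what it says about itself. The Liar is evaluated with the negation 1 - v; the second sentence is treated as fully true when its own value is 0 or 1/2 and fully false otherwise. Checking all three values finds exactly one consistent value for the Liar and none for the second sentence.

```python
from fractions import Fraction

VALUES = (Fraction(0), Fraction(1, 2), Fraction(1))


def liar(v):
    """Value of what 'this sentence is false' says, given its own value v."""
    return 1 - v


def revenge(v):
    """'This sentence is either false or 1/2': fully true iff v is 0 or 1/2."""
    return Fraction(1) if v in (0, Fraction(1, 2)) else Fraction(0)


def consistent_values(sentence):
    """Three-valued assignments v at which the sentence says exactly v."""
    return [v for v in VALUES if sentence(v) == v]


print(consistent_values(liar))     # [Fraction(1, 2)]
print(consistent_values(revenge))  # []
```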

Under some plausible assumptions, Łukasiewicz shows that we can resolve all such paradoxes by taking our truth values from the interval [0,1]. We have a whole spectrum of truth values between true and false. This is essentially fuzzy logic. It is also a model of linear logic.
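A minimal sketch of why moving to [0,1] helps, assuming the standard Łukasiewicz connectives (negation 1 - v, strong conjunction max(0, a + b - 1)). Any sentence built from these connectives has a continuous truth function f, and a continuous f from [0,1] to itself always has a point where f(v) = v (apply the intermediate value theorem to f(v) - v), which bisection can find. The second sentence, a hypothetical “this sentence is false, and it is false” read with strong conjunction, settles at 1/3. A crisp test like “is exactly 1/2” is not continuous, so it cannot be built from these connectives, and the second paradoxical sentence above cannot even be formulated in this setting.

```python
def neg(v):
    return 1 - v  # Lukasiewicz negation


def strong_and(a, b):
    return max(0.0, a + b - 1)  # Lukasiewicz strong conjunction


def fixed_point(f, tol=1e-12):
    """Find v in [0, 1] with f(v) == v, for continuous f mapping [0, 1] into [0, 1].

    g(v) = f(v) - v is >= 0 at v = 0 and <= 0 at v = 1, so bisecting on the
    sign of g keeps a root inside [lo, hi].
    """
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) - mid > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def liar(v):
    return neg(v)  # "This sentence is false"


def doubled_liar(v):
    return strong_and(neg(v), neg(v))  # hypothetical: "...is false, and it is false"


print(round(fixed_point(liar), 6))          # 0.5
print(round(fixed_point(doubled_liar), 6))  # 0.333333
```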

So Łukasiewicz logic (and hence a version of fuzzy logic and linear logic) is a particularly plausible solution to the problem of assigning truth-values to a language which can talk about the map-territory relation of its own sentences.

Relative Truth

One way to think about this is that fuzzy logic allows for a very limited form of context-dependent truth. The fuzzy truth values themselves are context-independent. However, in a given context where we are going to simplify such values to a binary, we can do so with a threshold.

A classic example is baldness. It isn't clear exactly how much hair needs to be on someone's head for them to be bald. However, I can make relative statements like "well if you think Jeff is bald, then you definitely have to call Sid bald."

Fuzzy logic is just supposing that all truth-evaluations have to fall on a spectrum like this (even if we don't know exactly how). This models a very limited form of context-dependent truth, where different contexts put higher or lower demands on truth, but these demands can be modeled by a single parameter which monotonically admits fewer statements as true as we shift it up, and more as we shift it down.
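A minimal sketch of this single-parameter picture, with made-up baldness values for Jeff, Sid, and a hypothetical third person, Ann. Each context is modeled as a threshold, and a person counts as bald in that context if their truth-value meets it. Because every verdict comes from one monotone cutoff, the sets of people counted as bald are nested: any threshold that counts Jeff as bald also counts Sid.

```python
# Hypothetical fuzzy truth-values for "X is bald" (illustrative numbers only).
BALDNESS = {"Ann": 0.1, "Jeff": 0.6, "Sid": 0.9}


def counts_as_bald(threshold):
    """Binary verdict in a context that demands a truth-value of at least `threshold`."""
    return {name for name, v in BALDNESS.items() if v >= threshold}


for t in (0.95, 0.75, 0.5, 0.05):
    print(t, sorted(counts_as_bald(t)))
```

Raising the threshold only ever removes people from the set, which is the monotonicity described above.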

I'm not denying the existence of other forms of context-dependence, of course. The point is that it seems plausible that we can put up with just this one form of context-dependence in our "basic picture" and allow all other forms to be modeled more indirectly.

Vagueness

My view is close to the view of Saving Truth from Paradox by Hartry Field. Field proposes that truth is vague (so that the baldness example and the Liar Paradox example are closely linked). Based on this idea, he defends a logic that builds on fuzzy logic but is not quite the same. His book does (imho) a good job of defending assumptions similar to those Łukasiewicz makes, so that something similar to fuzzy logic starts to look inevitable.