Once upon a time, the sun let out a powerful beam of light which shattered the world. The air and the liquid were split, turning into body and breath. Body and breath became fire, trees and animals. In the presence of the light ray, any attempt to reunite simply created more shards, of mushrooms, carnivores, herbivores and humans. The hunter, the pastoralist, the farmer and the bandit. The king, the blacksmith, the merchant, the butcher. Money, lords, bureaucrats, knights, and scholars. As the sun cleaved through the world, history progressed, creating endless forms most beautiful.

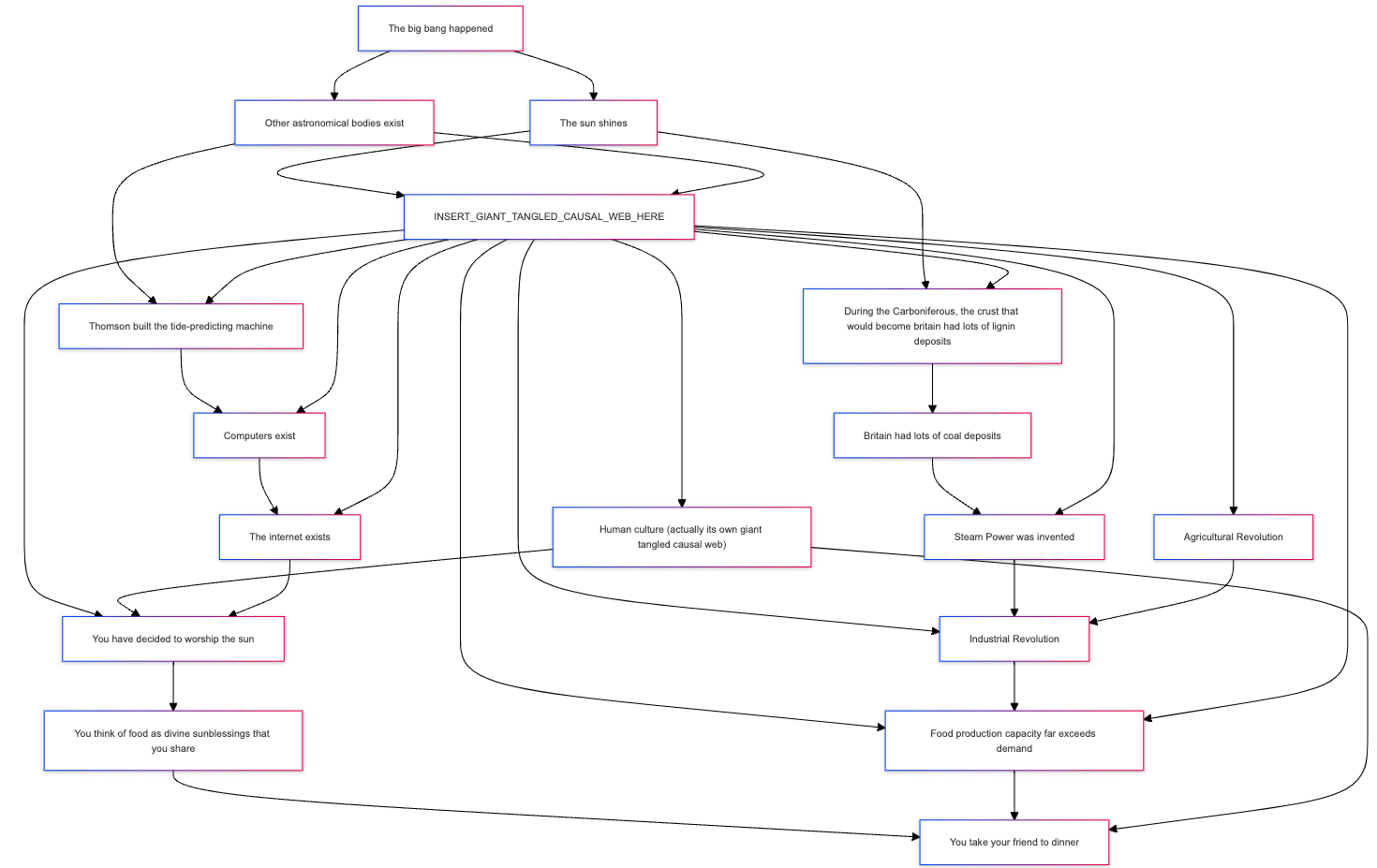

It would be perverse to try to understand a king in terms of his molecular configuration, rather than in the contact between the farmer and the bandit. The molecules of the king are highly diminished phenomena, and if they have information about his place in the ecology, that information is widely spread out across all the molecules and easily lost just by missing a small fraction of them. Any thing can only be understood in terms of the greater forms that were shattered from the world, and this includes neural networks too.

But through gradient descent, shards act upon the neural networks by leaving imprints of themselves, and these imprints have no reason to be concentrated in any one spot of the network (whether activation-space or weight-space). So studying weights and activations is pretty doomed. In principle it's more relevant to study how external objects like the dataset influence the network, though this is complicated by the fact that the datasets themselves are a mishmash of all sorts of random trash[1].

Probably the most relevant approach for current LLMs is Janus's, which focuses on how the different styles of "alignment" performed by the companies affect the AIs, qualitatively speaking. Alternatively, when one has scaffolding that couples important real-world shards to the interchangeable LLMs, one can study how the different LLMs channel the shards in different ways.

Admittedly, it's very plausible that future AIs will use some architectures that bias the representations to be more concentrated in their dimensions, both to improve interpretability and to improve agency. And maybe mechanistic interpretability will work better for such AIs. But we're not there yet.

[1] Possibly clustering the data points by their network gradients would be a way to put some order into this mess? But two problems: 1) The data points themselves are merely diminished fragments of the bigger picture, so the clustering will not be properly faithful to the shard structure, 2) The gradients are as big as the network's weights, so this clustering would be epically expensive to compute.
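To make the gradient-clustering idea concrete, here is a minimal sketch on a toy problem. Everything in it is an assumption for illustration: the "network" is an untrained logistic regression, the data are two synthetic groups, and the names (`per_example_gradient`, `random_projection`, `kmeans`, `demo`) are hypothetical. The random projection is one possible way to soften the cost problem, since it shrinks each gradient before clustering; it does nothing about the faithfulness problem.

```python
import math
import random


def per_example_gradient(w, x, y):
    """Gradient of the logistic loss on one (x, y) pair with respect to w."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * xi for xi in x]


def random_projection(dim_in, dim_out, rng):
    """Gaussian matrix that roughly preserves distances while shrinking dimension."""
    scale = 1.0 / math.sqrt(dim_out)
    return [[rng.gauss(0.0, scale) for _ in range(dim_in)] for _ in range(dim_out)]


def project(matrix, v):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in matrix]


def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(points, k, iters=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest center, then recompute the centers.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def demo(seed=0):
    rng = random.Random(seed)
    dim, sketch_dim = 8, 4
    w = [0.0] * dim  # assumption: toy stand-in for a network's weights
    data = []
    for i in range(20):
        noise = [rng.gauss(0.0, 0.02) for _ in range(dim)]
        if i < 10:  # group A: signal in the first four features, label 1
            x = [n + (1.0 if j < 4 else 0.0) for j, n in enumerate(noise)]
            data.append((x, 1.0))
        else:  # group B: signal in the last four features, label 0
            x = [n + (1.0 if j >= 4 else 0.0) for j, n in enumerate(noise)]
            data.append((x, 0.0))
    proj = random_projection(dim, sketch_dim, rng)
    # Cluster the low-dimensional sketches instead of the full gradients.
    sketches = [project(proj, per_example_gradient(w, x, y)) for x, y in data]
    return kmeans(sketches, k=2)
```

Calling `demo()` returns one cluster label per data point; on this toy data the two synthetic groups should land in different clusters. For a real network, each gradient would have as many entries as the network has weights, which is exactly where the cost concern bites.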

In the same way that cells were understood to be indivisible, atomic units of biology hundreds of years ago--before the discovery of sub-cellular structures like organelles, proteins, and DNA--we currently understand features to be fundamental units of neural network representations that we are examining with tools like mechanistic interpretability.

This is not to say that the definition of what constitutes a "feature" is clear at all--in fact, the lack of consensus reflects the extremely immature (but exciting!) state of interpretability research today. I am also not claiming that the analogy is a pure bijection; one of the pivotal ways in which mechanistic interpretability and biology diverge is that defining and understanding feature emergence will most definitely have to come from outside simple decomposition of the model into weight and activation spaces (for example, from understanding dataset-dependent computation flow, as you mentioned above). In contrast, most of biology's advancement has come from decomposing cellular complexity into smaller and smaller pieces.

I suspect this will not be the final story for interpretability, but mechanistic interpretability is an interesting first chapter.