Suppose you want to collect some kind of data from a population, but people vary widely in their willingness to provide the data (eg maybe you want to conduct a 30-minute phone survey, but some people really dislike phone calls or have much higher hourly wages that this funges against).

One thing you could do is offer to pay everyone X dollars for data collection. But this will only capture the people whose cost of providing data is below X, which will distort your sample.

Here's another proposal: ask everyone for their fair price to provide the data. If they quote you d, pay them 2d to collect the data with probability (X/2d)^2, or X with certainty if they quote you a value less than X/2. (If your RNG doesn't return yes, do nothing.) Then upweight the data from your randomly-chosen respondents in inverse proportion to the odds that they were selected. You can do a bit of calculus to see that this scheme incentivizes respondents to quote their fair value, and will provide an expected surplus of X^2/(4d) dollars (or X - d dollars when d is below X/2) to a respondent who disvalues providing data at d.
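A sketch of the calculus, under one assumed concrete form of the scheme: a respondent who quotes q at least X/2 is paid 2q with probability (X/2q)^2, and anyone quoting less is paid X with certainty. The symbols q (the quote), d (the true disvalue), and U (expected surplus) are notation for this sketch only.

```latex
\begin{align*}
U(q; d) &= \left(\frac{X}{2q}\right)^{2} (2q - d)
         = \frac{X^{2}}{2q} - \frac{X^{2} d}{4q^{2}},
         \qquad q \ge \tfrac{X}{2} \\[4pt]
\frac{\partial U}{\partial q}
        &= -\frac{X^{2}}{2q^{2}} + \frac{X^{2} d}{2q^{3}}
         = \frac{X^{2}}{2q^{3}}\,(d - q) \\[4pt]
U(d; d) &= \frac{X^{2}}{4d} \quad \text{for } d \ge \tfrac{X}{2},
\qquad
U = X - d \quad \text{for } d < \tfrac{X}{2} \\[4pt]
\text{expected payment at } q = d
        &= \left(\frac{X}{2d}\right)^{2} \cdot 2d = \frac{X^{2}}{2d} \le X
         \quad \text{for } d \ge \tfrac{X}{2}
\end{align*}
```

The derivative is positive for q < d and negative for q > d, so under these assumptions quoting q = d is optimal whenever d is at least X/2. When d is below X/2, any quote up to X/2 earns X - d and higher quotes earn less, so truthful quoting is still a best response.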

Now you have an unbiased sample of your population and you'll pay at most NX dollars in expectation if you reach out to N people. The cost is that you'll have a noisier sample of the high-reluctance population, but that's a lot better than definitely having none of that population in your study.
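Here is a minimal Python sketch of how such a scheme might be run end to end. It assumes a specific payment rule (pay twice the quote with probability (budget / (2 * quote))^2, or pay the full budget with certainty for quotes at or below budget / 2) and assumes respondents quote truthfully. All function and parameter names are hypothetical.

```python
import random


def selection_probability(quote, budget):
    """Chance of being surveyed: 1 for low quotes, else (budget / (2*quote))**2."""
    if quote <= budget / 2:
        return 1.0
    return (budget / (2 * quote)) ** 2


def payment_if_selected(quote, budget):
    """Assumed payment rule: the full budget for low quotes, otherwise twice the quote."""
    return budget if quote <= budget / 2 else 2 * quote


def expected_surplus(true_cost, quote, budget):
    """Respondent's expected gain from quoting `quote` when the survey costs them `true_cost`."""
    p = selection_probability(quote, budget)
    return p * (payment_if_selected(quote, budget) - true_cost)


def run_survey(costs, answers, budget, rng):
    """Return (inverse-probability-weighted mean of answers, total dollars paid)."""
    weighted_sum, paid = 0.0, 0.0
    for cost, answer in zip(costs, answers):
        p = selection_probability(cost, budget)  # assumes a truthful quote
        if rng.random() < p:
            weighted_sum += answer / p           # upweight by 1 / P(selected)
            paid += payment_if_selected(cost, budget)
    return weighted_sum / len(costs), paid


if __name__ == "__main__":
    rng = random.Random(0)
    costs = [5, 5, 5, 40, 200]      # made-up disvalues in dollars
    answers = [1, 0, 1, 1, 0]       # made-up survey responses
    print(run_survey(costs, answers, budget=20, rng=rng))
```

Dividing each collected answer by its selection probability is what keeps the estimate unbiased in expectation, at the price of extra variance from the rarely selected high-quote respondents.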

Huh, this is pretty cool. It wasn't intuitively obvious there would be an incentive-compatible payment scheme here.

Assorted followup thoughts:

- There are nonzero transaction costs to specifying your price in the first place.

- This is probably too complicated to explain to the general population.

- In practice the survey-giver doesn't have an unbounded bankroll, so they'll have to cap payouts at some value and give up on survey-takers who quote prices that are too high. I think it's fine if they do this dynamically based on how much they've had to spend so far?

- You can tweak the function from stated price to payment amount and probability of selection here - eg one thing you can do is collect data with probability proportional to d^(-k) for k > 1 and pay them k·d/(k-1). I haven't thought a lot about which functions have especially good properties here; it might be possible to improve substantially on the formula I gave here.

- You might, as a survey-giver, end up in a situation where you have more than enough datapoints from $10-value respondents and you care about getting high-value-of-time datapoints by a large margin, like you'd happily pay $1000 to get a random $100-value-of-time respondent but you need to pay $5000 in total rewards to get enough people that you locate a $100-value-of-time respondent who you end up actually selecting.

- Intuitively it feels like there should be some way for you to just target these high-value-of-time respondents, but I think this is fundamentally kind of impossible? Let's suppose 5% of respondents hate surveys, and disvalue the survey at $100, while the rest don't mind surveys and would do it for free. Any strategy which ends up collecting a rich respondent has to pay them at least $100, which means that in order to distinguish such respondents you have to make it worth a survey-liker's time to not pretend to be a survey-hater. So every survey-liker needs to be paid at least $100*p(you end up surveying someone who quotes you $100) in expectation, which means you have to dole out at least $1900 to survey-likers before you can get your survey-hater datapoint.

- Having said the above though, I think it might be fair game to look for observed patterns in time value from data you already have (eg maybe you know which zip codes have more high-value-of-time respondents) and disproportionately target those types of respondents? But now you're introducing new sources of bias into your data, which you could again correct with stochastic sampling and inverse weighting, and it'd be a question of which source of bias/noise you're more worried about in your survey.
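The $1900 figure in the survey-hater example can be checked with a short calculation. Here p stands for the probability that someone who quotes $100 ends up surveyed; the symbol is notation for this sketch only, and all quantities are in expectation.

```latex
\begin{align*}
\text{payment each survey-liker must expect} &\ge 100\,p \\
\text{survey-haters contacted per one surveyed} &= \frac{1}{p} \\
\text{survey-likers contacted alongside them} &= \frac{0.95}{0.05}\cdot\frac{1}{p} = \frac{19}{p} \\
\text{total paid to survey-likers} &\ge \frac{19}{p}\cdot 100\,p = 1900
\end{align*}
```

The p cancels, which is why lowering the selection probability for high quotes does not reduce the bound.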

This is probably too complicated to explain to the general population

I think it's workable.

No one ever internalises the exact logic of a game the first time they hear the rules (unless they've played very similar games before). A good teacher gives them several levels of approximation, then they play at the level they're comfortable with. Here's the level of approximation I'd start with, which I think is good enough.

"How much would we need to pay you for you to be happy to take the survey? Your data may really be worth that much to us, we really want to make sure we get answers that represent every type of person, including people who value their time a lot. So name your price. Note, you want to give your true price. The more you ask, the less likely it is you'll get to take the survey."

(if callee says "you wouldn't be able to afford it", say "try us.")

(if callee requests a very high amount, double-check and emphasise again that the more they ask the less likely it is that they'll get to take the survey and receive such a payment, make sure they're sure. Maybe explain that the math is set up so that they can't benefit from overstating it)

Nice! The standard method would be the BDM mechanism, and what’s neat is that it works even if people aren't risk-neutral in money. Here’s the idea.

Have people report their disvalue d. Independently draw a number p at random, e.g. from an exponential distribution with some fixed expectation. Then offer to pay them p iff p ≥ d.

Now it’s a dominant strategy to report your disvalue truthfully: reporting above your disvalue can never raise what you’re offered but it can risk eliminating a profitable trade, while reporting below it either makes no difference or gives you an offer you wouldn’t want to take.

This is the exact same logic behind the second-price auction.
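A small Python sketch of the dominance argument, assuming the respondent is committed to taking the survey whenever the drawn price covers their report (the text says "offer", so commitment is an assumption here). The function names and the `mean_price` parameter are hypothetical.

```python
import random


def draw_price(mean_price, rng):
    """Draw the random offer from an exponential distribution with the given mean."""
    return rng.expovariate(1.0 / mean_price)


def bdm_payoff(true_cost, reported_cost, drawn_price):
    """Respondent's net gain: paid drawn_price iff it is at least the reported cost."""
    if drawn_price >= reported_cost:
        return drawn_price - true_cost   # survey happens at the drawn price
    return 0.0                           # no offer, no survey


def truthful_is_never_worse(true_cost, reports, prices):
    """Check that reporting true_cost weakly beats every other report at every drawn price."""
    return all(
        bdm_payoff(true_cost, true_cost, price) >= bdm_payoff(true_cost, report, price)
        for report in reports
        for price in prices
    )


if __name__ == "__main__":
    rng = random.Random(0)
    price = draw_price(20.0, rng)
    print(price, bdm_payoff(true_cost=30, reported_cost=30, drawn_price=price))
```

Because the payment is the drawn price rather than the report, the report only decides whether the trade happens. That is why the comparison holds draw by draw and does not depend on the respondent's attitude to risk.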

This is a really cool mechanism! I'm surprised I haven't seen it before -- maybe it's original :)

After thinking about it more, I have a complaint about it, though. The complaint is that it doesn't feel natural to value the act of reaching out to someone at $X. It's natural to value an actual sample at $X, and you don't get a sample every time you reach out to someone, only when they respond.

Like, imagine two worlds. In world A, everyone's fair price is below X, so they're guaranteed to respond. You decide you want 1000 samples, so you pay $1000X. In world B, everyone has a 10% chance of responding in your mechanism. To get a survey with the same level of precision (i.e. variance), you still need to get 1000 responses, and not just reach out to 1000 people.

My suspicion is that if you're paying per (effective) sample, you probably can't mechanism-design your way out of paying more for people who value their time more. I haven't tried to prove that, though.

Ah oops, I now see that one of Drake's follow-up comments was basically about this!

One suggestion that I made to Drake, which I'll state here in case anyone else is interested:

Define a utility function: for example, utility = -(dollars paid out) - c*(variance of your estimator). Then, see if you can figure out how to sample people to maximize your utility.

I think this sort of analysis may end up being more clear-eyed in terms of what you actually want and how good different sampling methods are at achieving that.
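One way this utility might be written down, as a rough Python sketch. It assumes each person is selected independently with a known probability, that answers are fixed numbers, and that the estimator is the inverse-probability-weighted mean; under those assumptions the variance has the closed form used below. In practice the answers are unknown in advance, so a survey-giver would have to plug in guesses. All names are hypothetical.

```python
def expected_cost(probs, payments):
    """Expected dollars paid out when person i is selected with probs[i] and paid payments[i]."""
    return sum(p * pay for p, pay in zip(probs, payments))


def estimator_variance(probs, answers):
    """Variance of (1/N) * sum of answer_i / prob_i over independently selected people."""
    n = len(answers)
    return sum(y * y * (1 - p) / p for p, y in zip(probs, answers)) / n ** 2


def utility(probs, payments, answers, c):
    """utility = -(dollars paid out) - c * (variance of the estimator)."""
    return -expected_cost(probs, payments) - c * estimator_variance(probs, answers)


if __name__ == "__main__":
    # Compare surveying everyone against sampling an expensive respondent half the time.
    answers = [1.0, 0.0, 1.0]
    print(utility([1.0, 1.0, 1.0], [10, 10, 100], answers, c=500))
    print(utility([1.0, 1.0, 0.5], [10, 10, 100], answers, c=500))
```

Different sampling schemes can then be compared by the utility they achieve for a given trade-off constant c.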

A few months ago I spent $60 ordering the March 2025 version of Anthropic's certificate of incorporation from the state of Delaware, and last week I finally got around to scanning and uploading it. Here's a PDF! After writing most of this shortform, I discovered while googling related keywords that someone had already uploaded the 2023-09-21 version online here, which is slightly different.

I don't particularly bid that people spend their time reading it; it's very long and dense and I predict that most people trying to draw important conclusions from it who aren't already familiar with corporate law (including me) will end up being somewhat confused by default. But I'd like more transparency about the corporate governance of frontier AI companies and this is an easy step.

Anthropic uses a bunch of different phrasings of its mission across various official documents; of these, I believe the COI's is the most legally binding one, which says that "the specific public benefit that the Corporation will promote is to responsibly develop and maintain advanced AI for the long term benefit of humanity." I like this wording less than others that Anthropic has used like "Ensure the world safely makes the transition through transformative AI", though I don't expect it to matter terribly much.

I think the main thing this sheds light on is stuff like Maybe Anthropic's Long-Term Benefit Trust Is Powerless: as of late 2025, overriding the LTBT takes 85% of voting stock or all of (a) 75% of founder shares (b) 50% of series A preferred (c) 75% of non-series-A voting preferred stock. (And, unrelated to the COI but relevant to that post, it is now public that neither Google nor Amazon hold voting shares.)

The only thing I'm aware of in the COI that seems concerning to me re: the Trust is a clause added to the COI sometime between the 2023 and 2025 editions, namely the italicized portion of the following:

(C) Action by the Board of Directors. Except as expressly provided herein, each director of the Corporation shall be entitled to one (1) vote on all matters presented to the Board of Directors for approval at any meeting of the Board of Directors, or for action to be taken by written consent without a meeting; provided, however, that, if and for so long as the Electing Preferred Holders are entitled to elect a director of the Corporation, the affirmative vote of either (i) the Electing Preferred Director or (ii) at least 61% of the then serving directors may be required for authorization by the Board of Directors of any of the matters set forth in the Investors' Rights Agreement. If at any time the vote of the Board of Directors with respect to a matter is tied (a "Deadlocked Matter") and the Chief Executive Officer of the Corporation is then serving as a director (the "CEO Director"), the CEO Director shall be entitled to an additional vote for the purpose of deciding the Deadlocked Matter (a "Deadlock Vote") (and every reference in this Restated Certificate or in the Bylaws of the Corporation to a majority or other proportion of the directors shall refer to a majority or other proportion of the votes of the directors), except with respect to any vote as to which the CEO Director is not disinterested or has a conflict of interest, in which such case the CEO Director shall not have a Deadlock Vote.

I think this means that the 3 LTBT-appointed directors do not have the ability to unilaterally take some kinds of actions, plausibly including things like firing the CEO (it would depend on what's in the Investors' Rights Agreement, which I don't have access to). I think this is somewhat concerning, and moderately downgrades my estimate of the hard power possessed by the LTBT, though my biggest worry about the quality of the Trust's oversight remains the degree of its AI safety expertise and engagement rather than its nominal hard power. (Though as I said above, interpreting this stuff is hard and I think it's quite plausible I'm neglecting important considerations!)

You may be interested in ailabwatch.org/resources/corporate-documents, which links to a folder where I have uploaded ~all past versions of the CoI. (I don't recommend reading it, although afaik the only lawyers who've read the Anthropic CoI are Anthropic lawyers and advisors, so it might be cool if one independent lawyer read it from a skeptical/robustness perspective. And I haven’t even done a good job diffing the current version from a past version; I wasn’t aware of the thing Drake highlighted.)

(TLDR: Recent Cochrane review says zinc lozenges shave 0.5 to 4 days off of cold duration with low confidence, middling results for other endpoints. Some reason to think good lozenges are better than this.)

There's a 2024 Cochrane review on zinc lozenges for colds that's come out since LessWrong posts on the topic from 2019, 2020, and 2021. 34 studies, 17 of which are lozenges, 9/17 are gluconate and I assume most of the rest are acetate but they don't say. Not on sci-hub or Anna's Archive, so I'm just going off the abstract and summary here; would love a PDF if anyone has one.

- Dosing ranged between 45 and 276 mg/day, which lines up with 3-15 18mg lozenges per day: basically in the same ballpark as the recommendation on Life Extension's acetate lozenges (the rationalist favorite).

- Evidence for prevention is weak (partly bc fewer studies): they looked at risk of developing cold, rate of colds during followup, duration conditional on getting a cold, and global symptom severity. All but the last had CIs just barely overlapping "no effect" but leaning in the efficacious direction; even the optimistic ends of the CIs don't seem great, though.

- Evidence for treatment is OK: "there may be a reduction in the mean duration of the cold in days (MD ‐2.37, 95% CI ‐4.21 to ‐0.53; I² = 97%; 8 studies, 972 participants; low‐certainty evidence)". P(cold at end of followup) and global symptom severity look like basically noise and have few studies.

My not very informed takes:

- On the model of the podcast in the 2019 post, I should expect several of these studies to be using treatments I think are less or not at all efficacious, be less surprised by study-to-study variation, and increase my estimate of the effect size of using zinc acetate lozenges compared to anything else. Also maybe I worry that some of these studies didn't start zinc early enough? Ideally I could get the full PDF and they'll just have a table of (study, intervention type, effect size).

- Even with the caveats around some methods of absorption being worse than others, this seems rough for a theory in which zinc acetate taken early completely obliterates colds - the prevention numbers just don't look very good. (But maybe the prevention studies all used bad zinc?)

- I don't know what baseline cold duration is, but assuming it's something like a week, this lines up pretty well with the 33% decrease (40% for acetate) seen in this meta-analysis from 2013 if we assume effect sizes are dragged down by worse forms of zinc in the 2024 review.

- But note these two reviews are probably looking at many of the same studies, so that's more of an indication that nothing damning has come out since 2013 rather than an independent datapoint.

- My current best guess for the efficacy of zinc acetate lozenges at 18mg every two waking hours from onset of any symptoms, as measured by "expected decrease in integral of cold symptom disutility", is:

- 15% demolishes colds, like 0.2x disutility

- 25% helps a lot, like 0.5x disutility

- 35% helps some (or helps lots but only for a small subset of people or cases), like 0.75x disutility

- 25% negligible difference from placebo
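As a quick sanity check, the four scenarios above can be combined into a single expected disutility multiplier. The sketch below treats "negligible difference from placebo" as a 1.0x multiplier, which is an assumption.

```python
# (probability, disutility multiplier) for each scenario in the list above
scenarios = [
    (0.15, 0.20),  # demolishes colds
    (0.25, 0.50),  # helps a lot
    (0.35, 0.75),  # helps some
    (0.25, 1.00),  # negligible difference from placebo (assumed 1.0x)
]

total_probability = sum(p for p, _ in scenarios)
expected_multiplier = sum(p * m for p, m in scenarios)
expected_reduction = 1 - expected_multiplier

print(total_probability, expected_multiplier, expected_reduction)
```

Under these numbers the expected multiplier comes out to about 0.67, i.e. roughly a one-third reduction in expected cold disutility.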

I woke up at 2am this morning with my throat feeling bad, started taking Life Extension peppermint flavored 18mg zinc acetate lozenges at noon, expecting to take 5ish lozenges per day for 3 days or while symptoms are worsening. My most recent cold before this was about 6 days: [mild throat tingle, bad, worse, bad, fair bit better, nearly symptomless, symptomless]. I'll follow up about how it goes!

Update: Got tested, turns out the thing I have is bacterial rather than viral (Haemophilus influenzae). Lines up with the zinc lozenges not really helping! If I remember to take zinc the next time I come down with a cold, I'll comment here again.

I'm starting to feel a bit sneezy and throat-bad-y this evening; I took a zinc lozenge maybe 2h after the first time I noticed anything feeling slightly off. Will keep it up for as long as I feel bad and edit accordingly, but preregistering early to commit myself to updating regardless of outcome.

This is actually a crazy big effect size? Preventing ~10–50% of a cold for taking a few pills a day seems like a great deal to me.

I agree, zinc lozenges seem like they're probably really worthwhile (even in the milder-benefit worlds)! My less-ecstatic tone is only relative to the promise of older lesswrong posts that suggested it could basically solve all viral respiratory infections, but maybe I should have made the "but actually though, buy some zinc lozenges" takeaway more explicit.

Not on sci-hub or Anna's Archive, so I'm just going off the abstract and summary here; would love a PDF if anyone has one.

If you email the authors they will probably send you the full article.

Does anyone know of a non-peppermint-flavored zinc acetate lozenge? I really dislike peppermint, so I'm not sure it would be worth it to drink 5 peppermint flavored glasses of water a day to decrease the duration of a cold by one day, and I haven't found other zinc acetate lozenge options yet; the acetate version seems to be rare among zinc supplements. (Why?)

Earlier discussion on LW on zinc lozenges effectiveness mentioned that other flavorings which make it taste nice actually prevent the zinc effect.

From this comment by philh (quite a chain of quotes haha):

According to a podcast that seemed like the host knew what he was talking about, you also need the lozenges to not contain any additional ingredients that might make them taste nice, like vitamin C. (If it tastes nice, the zinc isn’t binding in the right place. Bad taste doesn’t mean it’s working, but good taste means it’s not.) As of a few years ago, that brand of lozenge was apparently the only one on the market that would work. More info: https://www.lesswrong.com/posts/un2fgBad4uqqwm9sH/is-this-info-on-zinc-lozenges-accurate

That's why the peppermint zinc acetate lozenge from Life Extension is the recommended one. So your only other option might be somehow finding unflavored zinc lozenges, which might taste even worse? Not sure where that might be available

Note that the lozenges dissolve slowly, so (bad news) you'd have the taste around for a while but (good news) it's really not a very strong peppermint flavor while it's in your mouth, and in my experience it doesn't really have much of the menthol-triggered cooling effect. My guess is that you would still find it unpleasant, but I think there's a decent chance you won't really mind. I don't know of other zinc acetate brands, but I haven't looked carefully; as of 2019 the claim on this podcast was that only Life Extension brand are any good.

Thanks for putting this together!

I have a vague memory of a post saying that taking zinc early, while the virus was replicating in the upper respiratory tract, was much more important than taking it later, because later it would have spread all over the body and thus the zinc can’t get to it, or something like this. So I tend to take a couple early on then stop. But it sounds like you don’t consider that difference important.

Is it your current (Not asking you to do more research!) impression that it’s useful to take zinc throughout the illness?

My impression is that since zinc inhibits viral replication, it's most useful in the regime where viral populations are still growing and your body hasn't figured out how to beat the virus yet. So getting started ASAP is good, but it's likely helpful for the first 2-3 days of the illness.

An important part of the model here that I don't understand yet is how your body's immune response varies as a function of viral populations - e.g. two models you could have are

- As soon as any immune cell in your body has ever seen a virus, a fixed scale-up of immune response begins, and you're sick until that scale-up exceeds viral populations.

- Immune response progress is proportional to current viral population, and you get better as soon as total progress crosses some threshold.

If we simplistically assume* that badness of cold = current viral population, then in world 1 you're really happy to take zinc as soon as you have just a bit of virus and will get better quickly without ever being very sick. In world 2, the zinc has no effect at all on total badness experienced, it just affects the duration over which you experience that badness.

*this is false, tbc - I think you generally keep having symptoms a while after viral load becomes very low, because a lot of symptoms are from immune response rather than the virus itself.
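The contrast between the two models can be made concrete with a toy calculation in Python. This is only a sketch under strong assumptions that are not from the discussion above: the virus grows exponentially, zinc acts purely by lowering that growth rate, the "fixed scale-up" in model 1 is itself exponential, and badness equals viral population. All names and parameter values are hypothetical.

```python
import math


def world1(growth_rate, v0=1.0, i0=0.01, immune_rate=2.0):
    """Fixed immune scale-up i0*exp(immune_rate*t); sick until it exceeds v0*exp(growth_rate*t).

    Returns (duration, total badness), where badness is the integral of viral population.
    """
    duration = math.log(v0 / i0) / (immune_rate - growth_rate)
    badness = v0 * (math.exp(growth_rate * duration) - 1) / growth_rate
    return duration, badness


def world2(growth_rate, v0=1.0, k=1.0, threshold=100.0):
    """Immune progress accrues at k * (viral population); recover once progress hits threshold.

    Returns (duration, total badness).
    """
    duration = math.log(1 + growth_rate * threshold / (k * v0)) / growth_rate
    badness = threshold / k  # progress is k times cumulative virus, so this is fixed
    return duration, badness


if __name__ == "__main__":
    for label, rate in [("no zinc", 1.0), ("zinc", 0.5)]:
        print(label, world1(rate), world2(rate))
```

In this toy version, halving the growth rate sharply cuts total badness in world 1, while in world 2 the total is unchanged and the illness simply lasts longer.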

I woke up this morning thinking 'would be nice to have a concise source for the whole zinc/colds thing'. This is amazing.

I help run an EA coliving space, so I started doing some napkin math on how many sick days you'll be saving our community over the next year. Then vaguely extrapolated to the broader lesswrong audience who'll read your post and be convinced/reminded to take zinc (and given decent guidance for how to use it effectively).

I'd guess at minimum you've saved dozens of days over the next year by writing this post. That's pretty cool. Thank you <3

I ordered some of the Life Extension lozenges you said you were using; they are very large and take a long time to dissolve. It's not super unpleasant or anything, I'm just wondering if you would count this against them?

On my model of what's going on, you probably want the lozenges to spend a while dissolving, so that you have fairly continuous exposure of throat and nasal tissue to the zinc ions. I find that they taste bad and astringent if I actively suck on them but are pretty unobtrusive if they just gradually dissolve over an hour or two (sounds like you had a similar experience). I sometimes cut the lozenges in half and let each half dissolve so that they fit into my mouth more easily, you might want to give that a try?

Interesting, I can see why that would be a feature. I don't mind the taste at all actually. Before, I had some of their smaller citrus flavored kind, and they dissolved super quick and made me a little nauseous. I can see these ones being better in that respect.

Do you have any thoughts on mechanism & whether prevention is actually worse independent of inconvenience?

The 2019 LW post discusses a podcast which talks a lot about gears-y models and proposed mechanisms; as I understand it, the high level "zinc ions inhibit viral replication" model is fairly well accepted, but some of the details around which brands are best aren't as well-attested elsewhere in the literature. For instance, many of these studies don't use zinc acetate, which this podcast would suggest is best. (To its credit, the 2013 meta-analysis does find that acetate is (nonsignificantly) better than gluconate, though not radically so.)

I work on a capabilities team at Anthropic, and in the course of deciding to take this job I've spent[1] a while thinking about whether that's good for the world and which kinds of observations could update me up or down about it. This is an open offer to chat with anyone else trying to figure out questions of working on capability-advancing work at a frontier lab! I can be reached at "graham's number is big" sans spaces at gmail.

[1] and still spend - I'd like to have Joseph Rotblat's virtue of noticing when one's former reasoning for working on a project changes.

I’m not “trying to figure out” whether to work on capabilities, having already decided I’ve figured it out and given up such work. Are you interested in talking about this to someone like me? I can’t tell whether you want to restrict discussion to people who are still in the figuring out stage. Not that there’s anything wrong with that, mind you.

I think my original comment was ambiguous - I also consider myself to have mostly figured it out, in that I thought through these considerations pretty extensively before joining and am in a "monitoring for new considerations or evidence or events that might affect my assessment" state rather than a "just now orienting to the question" state. I'd expect to be most useful to people in shoes similar to my past self (deciding whether to apply or accept an offer) but am pretty happy to talk to anyone, including eg people who are confident I'm wrong and want to convince me otherwise.

Thanks for clearing that up. It sounds like we’re thinking along very similar lines, but that I came to a decision to stop earlier. From a position inside one of the major AI labs, you’ll be positioned to more correctly perceive when the risks start outweighing the benefits. I was perceiving events more remotely from over here in Boston, and from inside a company that uses AI as one of a number of tools, not as the main product.

I’ve been aware of the danger of superintelligence since the turn of the century, and I did my “just now orienting to the question” back in the early 2000s. I decided that it was way too early to stop working on AI back then, and I should just “monitor for new considerations or evidence or events.” Then in 2022, Sydney/Bing came along, and it was of near-human intelligence, and aggressively misaligned, despite the best efforts of its creators. I decided that was close enough to dangerous AI that it was time to stop working on such things. In retrospect I could have kept working safely in AI for another couple of years, i.e. until today. But I decided to pursue the “death with dignity” strategy: if it all goes wrong, at least you can’t blame me. Fortunately my employers were agreeable to have me pivot away from AI; there’s plenty of other work to be done.

Isn't the most relevant question whether it is the best choice for you? (Taking into account your objectives which are (mostly?) altruistic.)

I'd guess having you work on capabilities at Anthropic is net good for the world[1], but probably isn't your best choice long run and plausibly isn't your best choice right now. (I don't have a good understanding of your alternatives.)

My current view is that working on capabilities at Anthropic is a good idea for people who are mostly altruistically motivated if and only if that person is very comparatively advantaged at doing capabilities at Anthropic relative to other similarly altruistically motivated people. (Maybe if they are in the top 20% or 10% of comparative advantage among this group of similarly motivated people.)

[1] Because I think Anthropic being more powerful/successful is good, the experience you'd gain is good, and the influence is net positive. And these factors are larger than the negative externalities of advancing AI for other actors.

The way I'd think about this: You should have at least 3 good plans for what you would do that you really believe in, and at least one of them should be significantly different from what you are currently doing. I find this really valuable for avoiding accidental inertia, motivated reasoning, or just regular ol' tunnel vision.

I remain fairly confused about Anthropic despite having thought about it a lot, but in my experience "have two alternate plans you really believe in" is a sort of necessary step for thinking clearly about one's mainline plan.

Yeah, I agree that you should care about more than just the sign bit. I tend to think the magnitude of effects of such work is large enough that "positive sign" often is enough information to decide that it dominates many alternatives, though certainly not all of them. (I also have some kind of virtue-ethical sensitivity to the zero point of the impacts of my direct work, even if second-order effects like skill building or intra-lab influence might make things look robustly good from a consequentialist POV.)

The offer of the parent comment is more narrowly scoped, because I don't think I'm especially well suited to evaluate someone else's comparative advantages but do have helpful things to say on the tradeoffs of that particular career choice. Definitely don't mean to suggest that people (including myself) should take on capability-focused roles iff they're net good!

I did think a fair bit about comparative advantage and the space of alternatives when deciding to accept my offer; I've put much less work into exploration since then, arguably too much less (eg I suspect I don't quite meet Raemon's bar). Generally happy to get randomly pitched on things, I suppose!

the magnitude of effects of such work is large enough that "positive sign" often is enough information to decide that it dominates many alternatives, though certainly not all of them

FWIW, my guess is that this is technically true if you mean something broad by "many alternatives", but if you mean something like "the best several alternatives that you would think of if you spent a few days thinking about it and talking to people" then I would disagree.

@Drake Thomas are you interested in talking about other opportunities that might be better for the world than your current position (and meet other preferences of yours)? Or are you primarily interested in the "is my current position net positive or net negative for the world" question?

See my reply to Ryan - I'm primarily interested in offering advice on something like that question since I think it's where I have unusually helpful thoughts, I don't mean to imply that this is the only question that matters in making these sorts of decisions! Feel free to message me if you have pitches for other projects you think would be better for the world.

Gotcha, I interpreted your comment as implying you were interested in trying to improve your views on the topic in collaboration with someone else (who is also interested in improving their views on the topic).

So I thought it was relevant to point out that people should probably mostly care about a different question.

(I also failed to interpret the OP correctly, although I might have been primed by Ryan's comment. Whoops)

Just saw the OP replied in another comment that he is offering advice.

I recommend the Wikipedia article on Puyi, the last emperor of China. He was 6 years old when the 1911 Xinhai revolution forced him to abdicate, and spent the remainder of his life being courted and/or used by various actors who found his support useful to their cause.

I think it's worth reading, though it's fairly long; I think the story of his life is just pretty interesting and gripping, and the article is unusually well-written and presented in a fairly narratively engaging style. (Though I am not particularly well-versed in 20th century Chinese history and am fully deferring to Wikipedia editors on the veracity and neutrality of this account.)

It's an interesting case study of Just Some Guy being thrust into high-stakes political machinations without much independent power - if you've ever wondered how you would fare in a Game of Thrones style political intrigue, I think Puyi's story gives a decent sense: you get puppeted around by much more ruthless actors, accomplish very little of what you want, and generally have a pretty bad time.

I feel a weird mix of emotions towards the guy. He was pretty clearly an awful person almost wholly devoid of any virtue for the first several decades of his life - cowardly, vain, cruel, naive, incompetent, and a rapist. But he never really had a chance to be anything else; it's unclear if he had a single genuine relationship with someone who wasn't trying to manipulate him after age 8, and I'm not sure he had ever encountered a person earnestly doing something for moral reasons. And he lived a pretty unpleasant life during this whole time.

A couple teaser facts to entice you to read the article:

- He was incredibly clumsy. Until middle age he had never once had to do things like brush his teeth, close doors behind himself, or put anything away, because servants simply handled it all for him. Apparently a total lack of having to do things for yourself in childhood can give you a sort of developmental disorder in which you can't really pick up the habits effectively at age 40.

- He has one of the most dramatic redemption arcs of any character I know of in truth or fiction; it seems like he pretty genuinely came to regret his (many) past misdeeds and ended up as a pretty kind and humble man. This redemption arc is slightly tarnished by the fact that it, like everything else in his life, was a calculated manipulation, this time on the part of the newly formed CCP. Though it seems like they mostly just had to expose him to the reality of what he'd been complicit in, rather than any more sophisticated manipulation (and avoid letting him learn about the widespread famines).

- About the only positive influence on his life during adolescence was his English tutor Reginald Johnston, a Scottish guy who really liked monarchy (in the "emperor is basically a god" sense rather than the British sense) and had enormous influence over Puyi as the only person willing or able to say no to him.

Yeah I remember watching this YouTube video about Puyi and thinking, huh, we do have a real historical example of Ajeya Cotra's young businessperson analogy from Holden's blog a while back:

Imagine you are an eight-year-old whose parents left you a $1 trillion company and no trusted adult to serve as your guide to the world. You must hire a smart adult to run your company as CEO, handle your life the way that a parent would (e.g. decide your school, where you’ll live, when you need to go to the dentist), and administer your vast wealth (e.g. decide where you’ll invest your money).

You have to hire these grownups based on a work trial or interview you come up with -- you don't get to see any resumes, don't get to do reference checks, etc. Because you're so rich, tons of people apply for all sorts of reasons.

Your candidate pool includes:

- Saints -- people who genuinely just want to help you manage your estate well and look out for your long-term interests.

- Sycophants -- people who just want to do whatever it takes to make you short-term happy or satisfy the letter of your instructions regardless of long-term consequences.

- Schemers -- people with their own agendas who want to get access to your company and all its wealth and power so they can use it however they want.

Because you're eight, you'll probably be terrible at designing the right kind of work tests... Whatever you could easily come up with seems like it could easily end up with you hiring, and giving all functional control to, a Sycophant or a Schemer. By the time you're an adult and realize your error, there's a good chance you're penniless and powerless to reverse that.

I read that article. I'm suspicious because the story is too perfect, and surely lots of people wanted to discredit the monarchy, and there are no apologists to dispute the account.

It seems like Reginald Johnston was basically an apologist? But I haven't done any due diligence here, it's certainly possible this account is pretty distorted. Would be curious to hear about any countervailing narratives you find.

Oh sorry, somehow I forgot what you wrote about Reginald Johnston before writing my comment! I haven't read anything else about Puyi, so my suspicion is just a hunch.

So it's been a few months since SB1047. My sense is that the main events since the peak of LW commenter interest (I might have made mistakes or missed some items) are:

- The bill got vetoed by Newsom for pretty nonsensical stated reasons, after passing in the state legislature (but the state legislature tends to pass lots of stuff so this isn't much signal).

- My sense of the rumor mill is that there are perhaps some similar-ish bills in the works in various state legislatures, but AFAIK none that have yet been formally proposed or accrued serious discussion except maybe for S.5616.

- We're now in a Trump administration which looks substantially less inclined to do safety regulation of AI at the federal level than the previous admin was. In particular, some acceleration-y VC people prominently opposed to SB1047 are now in positions of greater political power in the new administration.

  - Eg Sriram Krishnan, Trump's senior policy advisor on AI, was opposed; "AI and Crypto Czar" David Sacks doesn't have a position on record but I'd be surprised if he was a fan.

  - On the other hand, Elon was nominally in favor (though I don't think xAI took an official position one way or the other).

Curious for retrospectives here! Whose earlier predictions gain or lose Bayes points? What postmortems do folks have?

"though I don't think xAI took an official position one way or the other"

I assumed most everybody assumed xAI supported it since Elon did. I didn't bother pushing for an additional xAI endorsement given that Elon endorsed it.

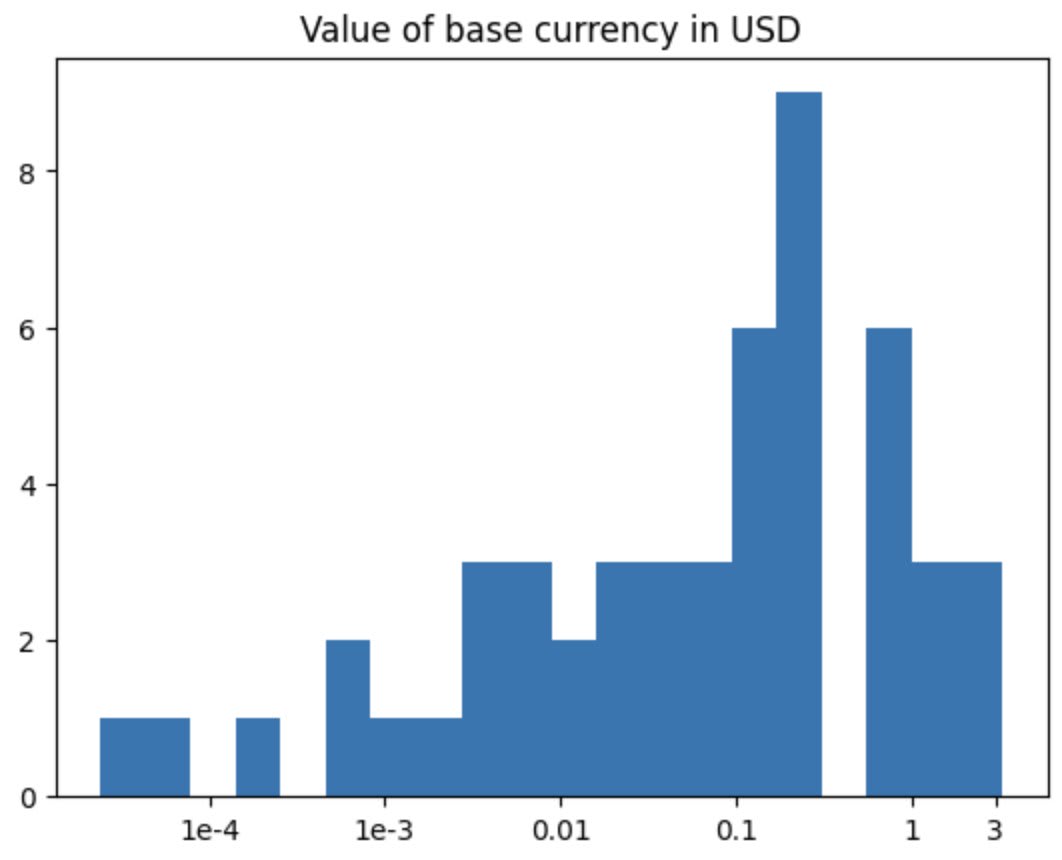

Basically all major world currencies have a base unit worth at most 4 US dollars:

There’s a left tail going out to 2.4e-5 USD with the Iranian rial, but no right tail. Why is that?

One thing to note is that this is a recent trend: for most of US history (until 1940 or so), a US dollar was worth about 20 modern dollars inflation-adjusted. I thought maybe people used dimes or cents as a base unit then, but Thomas Jefferson talks about "adopt[ing] the Dollar for our Unit" in 1784.

You could argue that base units are best around $1, but historical coins (backed by precious metals) are inconvenient if small, so they couldn’t shrink enough. But if that’s true, why not make the dime the base unit then? Maybe it’s really useful to have fractional amounts of currency, or it’s unsatisfying for a base-unit coin to be too small?

Another angle is to say that base units should be large, but steady inflation pushes them down over time and it’s inconvenient to change, and a preference for similar exchange rates + a fairly stable world order means no upstart central banks to set a new standard.

This is my current best guess (along with "base units between $1 and $20 are all basically fine") but I don't feel very confident about it (and if people want to match the US dollar then why does the left tail exist?).

My views which I have already shared in person:

- The reason old currency units were large is that they're derived from weights of silver (eg a pound sterling or the equivalent French system dating back to Charlemagne), and the pound is a reasonably-sized base unit of weight, so the corresponding monetary unit was extremely large. There would be nothing wrong with having $1000 currency units again, it's just that we usually have inflation rather than deflation. In crypto none of the reasonably sized subdivisions have caught on, and it seems like it would be tolerable to buy a sandwich for 0.0031 ETH if that were common.

- Currencies are redenominated after sufficient inflation only when the number of zeros on everything gets unwieldy. This requires replacing all cash and is a bad look because it's usually done after hyperinflation, so countries like South Korea haven't done it yet.

- The Iranian rial's exchange rate, actually around 1e-6 now, is so low partly due to sanctions, and the currency is in the middle of a redenomination at 10000 rial = 1 toman.

- When people make a new currency, they make it similar to the currencies of their largest trading partners for convenience, hence why so many are in the range of the USD, euro and yuan. Various regional status games change this but not by an order of magnitude, and it is conceivable to me that we could get a $20 base unit if they escalate a bit.

Interesting question. The "base" unit is largely arbitrary, but the smallest subunit of a currency has more practical implications, so it may also help to think in those terms. Back in the day, you had all kinds of wonky fractions but now basically everyone is decimalized, and 1/100 is usually the smallest unit. I imagine then that the value of the cent is as important here as the value of the dollar.

Here's a totally speculative theory based on that.

When we write numbers, we have to include any zeros after the decimal but you never need leading zeros on a whole number. That is, we write "4" not "004" but if the number is "0.004" there is no compressed way of writing that out. In bookkeeping, it's typical to keep everything right-aligned because it makes adding up and comparing magnitudes easier, so you'll also write trailing zeros that aren't strictly necessary (that is, $4.00 rather than just $4 if other prices you're recording sometimes use those places).

This means if you have a large number of decimal places, bookkeeping is much more annoying and you have to be really careful about leading zeros. Entering a price as "0.00003" is annoying and easy to mess up by an order of magnitude without noticing. Thus, having a decimalized currency with a really large base unit is a pain and there's a natural tendency towards a base unit that allows a minimum subunit of 0.01 or so to be sensible.

A problem I have that I think is fairly common:

1. I notice an incoming message of some kind.

2. For whatever reason it's mildly aversive or I'm busy or something.

3. Time passes.

4. I feel guilty about not having replied yet.

5. Interacting with the message is associated with negative emotions and guilt, so it becomes more aversive.

6. Repeat steps 4 and 5 until the badness of not replying exceeds the escalating 4/5 cycle, or until the end of time.

Curious if anyone who once had this problem feels like they've resolved it, and if so what worked!

I haven’t totally defeated this, but I’ve had some luck with immediately replying “I am looking to reply to this properly, first I need to X” if there is an X blocking a useful reply.

I liked this post, but I think there's a good chance that the future doesn't end up looking like a central example of either "a single human seizes power" or "a single rogue AI seizes power". Some other possible futures:

- Control over the future by a group of humans, like "the US government" or "the shareholders of an AI lab" or "direct democracy over all humans who existed in 2029"

- Takeover via an AI that a specific human crafted to do a good job at enacting that human's values in particular, but which the human has no further steering power over

- Lots of different actors (both human and AI) respecting one another's property rights and pursuing goals within negotiated regions of spacetime, with no one actor having power over the majority of available resources

- A governance structure which nominally leaves particular humans in charge, and which the AIs involved are rule-abiding enough to respect, but in which things are sufficiently complicated and beyond human understanding that most decisions lack meaningful human oversight.

- A future in which one human has extremely large amounts of power, but they acquired that power via trade and consensual agreements through their immense (ASI-derived) material wealth rather than via the sorts of coercive actions we tend to imagine with words like "takeover".

- A singleton ASI is in decisive control of the future, and among its values is a strong commitment to listen to human input and behave according to its understanding of collective human preferences, though maybe not as its single overriding concern.

I'd be pretty excited to see more attempts at comparing these kinds of scenarios for plausibility and for how well the world might go conditional on their occurrence.

(I think it's fairly likely that lots of these scenarios will eventually converge on something like the standard picture of one relatively coherent nonhuman agent doing vaguely consequentialist maximization across the universe, after sufficient negotiation and value-reflection and so on, but you might still care quite a lot about how the initial conditions shake out, and the dumbest AI capable of performing a takeover is probably very far from that limiting state.)

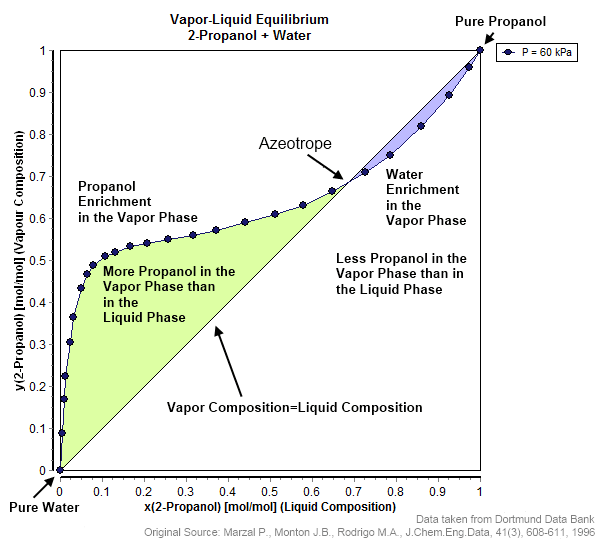

My friend Peter Schmidt-Nielsen revitalized an old conversation of ours on twitter, and it led to me having a much better sense of what's up with azeotropic concentrations and why it is that you "can't" concentrate ethanol above its azeotropic point of 95% via distillation and boiling.

The level 1 argument goes:

When you boil off vapors from a 50% ethanol mixture, the ethanol boils off more readily, and so you can condense the vapor back into a 60% ethanol mixture (and redistill your now-lower-concentration liquid leftovers, and so on). If you repeat this process, the ethanol concentration will keep climbing, but it asymptotes - at 94%, you'll only get to 94.5%, and 94.5% will only get you to 94.7%, and so on. If all you have is a collection of mixtures at concentrations less than 95%, everything you do to them will only give you more of the same. So you can't make pure ethanol via distillation.

[N.B.: This chart is for propanol rather than ethanol, but it's more nicely labeled than the ethanol charts I could find, and also the relevant regions end up a little more visually distinct here. But it's the same qualitative story as with ethanol, just with more of the action in the [0.95,1] regime. See here for a real ethanol graph.]

The level 2 rejoinder goes:

Hang on a moment, though. What if I get up to 95%, and then just give myself a tiny little push by adding in a bit of pure ethanol? Now my vapor will be lower concentration than my starting liquid, but that's just fine - it means that the leftover liquid has improved! So I can do the same kind of distillation pipeline, but using my liquid as the high-value enriched stages, and carry myself all the way up to 100% purity. Once I pass over the azeotropic point even a little, I'm back in a regime where I can keep increasing my concentrations, just via the opposite method.
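To see levels 1 and 2 side by side, here is a rough Python sketch. The vapor-liquid curve in `vapor_fraction` is a made-up smooth function with the right qualitative shape and an assumed azeotrope at 0.95, not real ethanol data, and the helper names are hypothetical too. It only illustrates the direction things move in each regime.

```python
# Toy vapor-liquid curve: NOT real ethanol data, just a smooth function with
# the right qualitative shape (vapor richer than the liquid below the
# azeotrope, leaner above it, and equal exactly at the azeotrope).
AZEOTROPE = 0.95  # assumed azeotropic ethanol fraction


def vapor_fraction(x: float) -> float:
    """Ethanol fraction of the vapor above a liquid with ethanol fraction x."""
    return x + 0.5 * x * (1.0 - x) * (AZEOTROPE - x) / AZEOTROPE


def redistill(x: float, stages: int) -> float:
    """Level 1: repeatedly condense the vapor and boil it again."""
    for _ in range(stages):
        x = vapor_fraction(x)
    return x


def boil_down(x: float, liquid: float, keep: float, step: float = 1e-4) -> float:
    """Level 2: boil vapor off in small puffs and track the leftover liquid.

    Returns the leftover liquid's ethanol fraction once roughly `keep` units
    of the original `liquid` units remain.
    """
    while liquid * (1.0 - step) >= keep:
        dv = step * liquid                              # vapor removed this puff
        ethanol = x * liquid - vapor_fraction(x) * dv   # ethanol left behind
        liquid -= dv
        x = ethanol / liquid
    return x


if __name__ == "__main__":
    print(redistill(0.50, 1))    # one stage: a bit under 0.56
    print(redistill(0.50, 300))  # creeps up toward 0.95 without passing it
    print(boil_down(0.96, liquid=1.0, keep=0.05))  # leftover is richer than 0.96
```

Below the azeotrope the vapor is the enriched stream and stalls at 0.95; above it the leftover liquid is the enriched stream, though with this toy curve the gain per unit boiled off is small.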

But the level 3 counterargument goes:

Although this technically works, your yields are crap - in particular, the yield of pure ethanol you can get out this way is only ever as much as you added initially to get over the hump of the azeotropic point, so you don't get any gains. Suppose you start with 1 unit of azeotropic solution (ethanol fraction $a$) and you add $x$ units of pure ethanol to bring yourself up to a concentration-$c$ solution. Now any distillation and remixing steps you do from here will keep concentrations above $a$, for the same reasons they kept it below $a$ in the other regime. But if the concentrations can't drop below $a$, then conservation of water and ethanol mass means that you can only ever get $x$ units of pure ethanol back out of this pool - if you had any more, the leftovers would have to average a concentration below $a$, which they can't cross. So your yield from this process is at most as good as what you put in to kickstart it.
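The mass-balance step in the level 3 argument can be checked with a few lines of Python. This is a minimal sketch: the function name `max_pure_yield` and the example numbers (a 0.95 azeotrope, 0.1 units of added ethanol) are illustrative assumptions, and the only physics used is the claim above that leftover concentrations can't fall below the azeotropic point.

```python
def max_pure_yield(ethanol: float, total: float, floor: float) -> float:
    """Most pure ethanol y removable while the leftovers stay at or above `floor`.

    After removing y units of pure ethanol the leftovers have ethanol
    fraction (ethanol - y) / (total - y). Requiring that to be >= floor and
    solving for y gives y <= (ethanol - floor * total) / (1 - floor).
    """
    return (ethanol - floor * total) / (1.0 - floor)


if __name__ == "__main__":
    azeotrope = 0.95  # assumed azeotropic ethanol fraction
    added = 0.1       # hypothetical units of pure ethanol added to 1 unit of azeotrope
    ethanol = azeotrope * 1.0 + added
    total = 1.0 + added
    # The bound comes out equal to `added`, up to floating-point rounding.
    print(max_pure_yield(ethanol, total, azeotrope))
```

Plugging in ethanol = a + x and total = 1 + x, the bound simplifies to x(1 - a)/(1 - a) = x, which is the "you only get back what you put in" conclusion.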

I'm not sure what level 4 is yet, but I think it might be either of:

- Use variation in vapor-liquid curves with atmospheric pressure to get more wiggle room and extract way better yields by dynamically moving the pressure of your distillery up and down.

- Force higher concentrations via osmosis with the right filter around the azeotropic point to get the boost, and anti-distill to get pure ethanol out of that, which might turn out to be more energy-efficient than using osmosis to go that high directly.

(People who actually know physics and chemistry, feel free to correct me on any of this!)