(edit 3: i'm not sure, but this text might be net-harmful to discourse)

i continue to feel so confused at what continuity led to some users of this forum asking questions like, "what effect will superintelligence have on the economy?" or otherwise expecting an economic ecosystem of superintelligences (e.g. 1[1], 2 (edit 2: I misinterpreted this question)).

it actually reminds me of this short story by davidad, in which one researcher on an alignment team has been offline for 3 months and comes back to find the others on the team saying things like "[Coherent Extrapolated Volition?] Yeah, exactly! Our latest model is constantly talking about how coherent he is. And how coherent his volitions are!" the resemblance is that this is something i thought this forum would have seen as 'confused about the basics' just a year ago, and i don't yet understand what led to it.

(edit: i'm feeling conflicted about this shortform after seeing it upvoted this much. the above paragraph would be unsubstantive/bad discourse if read as an argument by analogy, which i'm worried it was (?). i was mainly trying to express confusion.)

from the power of intelligence (actually, i want to quote the entire post, it's short):

...I keep

As far as I know, my post started the recent trend you complain about.

Several commenters on this thread (e.g. @Lucius Bushnaq here and @MondSemmel here) mention LessWrong's growth and the resulting influx of uninformed new users as the likely cause. Any such new users may benefit from reading my recently-curated review of Planecrash, the bulk of which is about summarising Yudkowsky's worldview.

i continue to feel so confused at what continuity led to some users of this forum asking questions like, "what effect will superintelligence have on the economy?" or otherwise expecting an economic ecosystem of superintelligences

If there's decision-making about scarce resources, you will have an economy. Even superintelligence does not necessarily imply infinite abundance of everything, starting with the reason that our universe only has so many atoms. Multipolar outcomes seem plausible under continuous takeoff, which the consensus view in AI safety (as I understand it) sees as more likely than fast takeoff. I admit that there are strong reasons for thinking that the aggregate of a bunch of sufficiently smart things is agentic, but this isn't directly relevant for the concerns about humans wi...

End points are easier to infer than trajectories

Assuming, at least, that which end point you get to doesn't depend on the intermediate trajectories.

Another factor is the Eternal September effect: LW membership has grown a ton due to the AI boom (see the LW site metrics in the recent fundraiser post), so, as one might expect, most new users haven't read the old material on the site. The LW team tries in various ways to encourage them to read it, but many still haven't.

The basic answer is the following:

- The incentive problem still remains, such that it's more effective to use the price system than to use a command economy to deal with incentive issues:

https://x.com/MatthewJBar/status/1871640396583030806

- Related to this, perhaps the outer loss of the markets isn't nearly as dispensable as a lot of people on LW believe, and contact with reality is a necessary part of all future AIs.

More here:

- A potentially large crux is that I don't really think a utopia is possible, at least in the early years and even with superintelligences, because I expect preferences in the new environment to grow unboundedly, such that preferences are always left unsatisfied. This holds even if we charitably restrict the utopia concept to be relative to someone else's values.

My guess is that it's just an effect of field growth. A lot of people coming in now weren't around when the consensus formed and don't agree with it or don't even know much about it.

Also, the consensus wasn't exactly uncontroversial on LW even way back in the day. Hanson's Ems inhabit a somewhat more recognisable world and economy that doesn't have superintelligence in it, and lots of skeptics used to be skeptical in the sense of thinking all of this AI stuff was way too speculative and wouldn't happen for hundreds of years if ever, so they made critiques of that form or just didn't engage in AI discussions at all. LW wasn't anywhere near this AI-centric when I started reading it around 2010.

nothing short of death can stop me from trying to do good.

the world could destroy or corrupt EA, but i'd remain an altruist.

it could imprison me, but i'd stay focused on alignment, as long as i could communicate with at least one person on the outside.

even if it tried to kill me, i'd continue in the paths through time where i survived.

Never say 'nothing' :-)

- the world might be in such a state that attempts to do good bring it into some failure instead, and doing the opposite is prevented by society (the rise of AI, and the blame/credit the rationality movement takes for it, perhaps?)

- what if, on some numerical scale, the world gave you the option "with 50%, double your goodness score; otherwise, lose almost everything"? Maximizing EV on this is very dangerous...
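The danger in the double-or-nothing option can be made concrete with a small calculation. This sketch assumes (none of this is in the original) that "lose almost everything" means keeping 1% of your score and that the gamble is accepted every round. It compares the expected score with the probability of ending at least where you started.

```javascript
// Repeatedly accepting "with 50%, double your score; otherwise keep only
// `keep` of it". The value of `keep` used below is an illustrative assumption.

// Expected score after `rounds` gambles: each round multiplies the
// expectation by 0.5 * 2 + 0.5 * keep, which is slightly above 1.
function expectedScore(start, rounds, keep) {
  return start * Math.pow(0.5 * 2 + 0.5 * keep, rounds);
}

// Binomial coefficient C(n, k), computed iteratively.
function binomial(n, k) {
  let result = 1;
  for (let i = 1; i <= k; i++) {
    result = (result * (n - k + i)) / i;
  }
  return result;
}

// Probability that the final score is at least the starting score,
// summing over every possible number of winning rounds.
function probabilityAtLeastStart(rounds, keep) {
  let probability = 0;
  for (let wins = 0; wins <= rounds; wins++) {
    const multiplier = Math.pow(2, wins) * Math.pow(keep, rounds - wins);
    if (multiplier >= 1) {
      probability += binomial(rounds, wins) * Math.pow(0.5, rounds);
    }
  }
  return probability;
}

console.log(expectedScore(1, 10, 0.01)); // about 1.05
console.log(probabilityAtLeastStart(10, 0.01)); // 11/1024, about 0.011
```

After ten rounds the expectation has grown slightly. Yet roughly 99% of trajectories end below the starting score, which is one way to see why naively maximizing EV here is dangerous.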

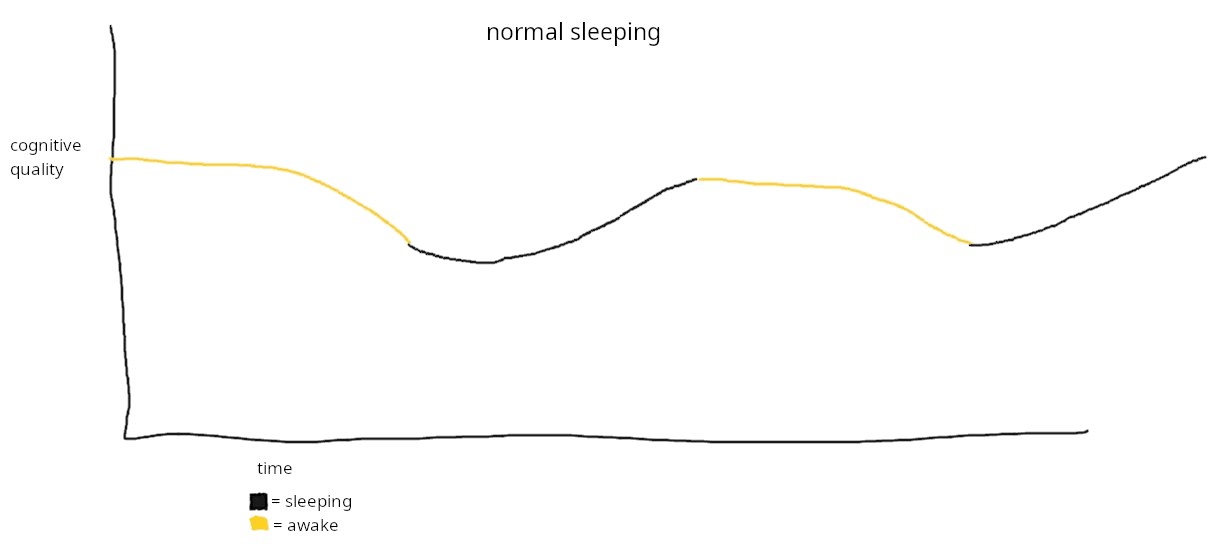

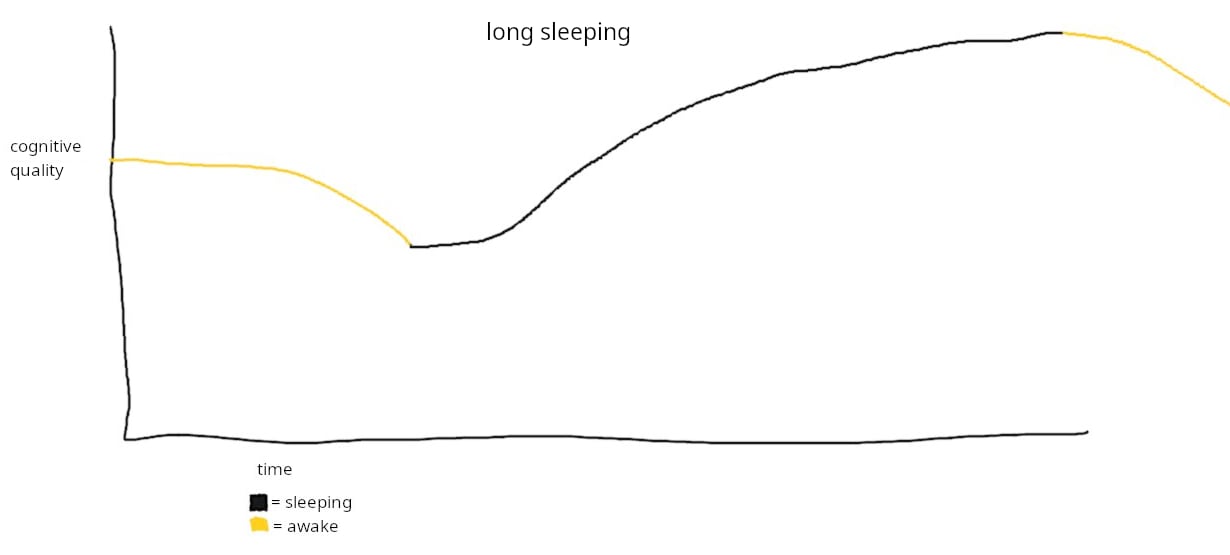

i might try sleeping for a long time (16-24 hours?) by taking sublingual[1] melatonin right when i start to be awake, and falling asleep soon after. my guess: it might increase my cognitive quality on the next wake up, like this:

(or do useful computation during sleep, leading to apparently having insights on the next wakeup? long elaboration below)

i wonder if it's even possible, or if i'd have trouble falling asleep again despite the melatonin.

i don't see much risk to it, since my day/night cycle is already uncalibrated[2], and melatonin is naturally used for this narrow purpose in the body.

'cognitive quality' is really vague. here's what i'm really imagining:

my unscientific impression of sleep, from subjective experience (though i only experience the result) and speculation i've read, is that it does these things:

- integrates into memory what happened in the previous wake period, and maybe to a lesser extent further previous ones

- more separately from the previous wake period, acts on my intuitions or beliefs about things to 'reconcile' them or 'compute implicated intuitions'. for example, if i was trying to reconcile two ideas, or solve some confusing logical problem, maybe the

I predict this won't work as well as you hope because you'll be fighting the circadian effect that partially influences your cognitive performance.

Also, some ways to maximize your sleep quality are to exercise very intensely and/or to use a sauna the day before.

i don't think having (even exceptionally) high baseline intelligence and then studying bias avoidance techniques is enough for one to be able to derive an alignment solution. i have not seen, in any rationalist i'm aware of, what feels like enough for that, though their efforts are virtuous of course. it's just that the standard set by the universe seems higher.

i think this is a sort of background belief for me. not failing at thinking is the baseline; other needed computations are harder. they are not satisfied by avoiding failure conditions, but require the satisfaction of some specific, hard-to-find success condition. learning about human biases will not train one to cognitively seek answers of this kind, only to avoid premature failure.

this is basically a distinction between rationality and creativity. rationality[1] is about avoiding premature failure, creativity is about somehow generating new ideas.

but there is not actually something which will 'guide us through' creativity, like hpmor/the sequences do for rationality. there are various scattered posts about it[2].

i also do not have a guide to creativity to share with you. i'm only pointing at it as an equally if not more...

i currently believe that working on superintelligence-alignment is likely the correct choice from a fully-negative-utilitarian perspective.[1]

for others, this may be an intuitive statement or unquestioned premise. for me it is not, and i'd like to state my reasons for believing it, partially as a response to this post concerned about negative utilitarians trying to accelerate progress towards an unaligned-ai-takeover.

there was a period during which i was more uncertain about this question, and avoided openly sharing minimally-dual-use alignment research (but did not try to accelerate progress towards a nonaligned-takeover) while resolving that uncertainty.

a few relevant updates since then:

- decrease in the probability that an aligned AI's values would endorse human-caused moral catastrophes such as human-caused animal suffering.

i did not automatically believe humans to be good-by-default, and wanted to take time to seriously consider what i think should be a default hypothesis-for-consideration upon existing in a society that generally accepts an ongoing mass torture event.

- awareness of vastly worse possible s-risks.

factory farming is a form of physical torture, by w

On Pivotal Acts

(edit: status: not a crux, instead downstream of different beliefs about what the first safe ASI will look like in predicted futures where it exists. If I instead believed 'task-aligned superintelligent agents' were the most feasible form of pivotally useful AI, I would then support their use for pivotal acts.)

I was rereading some of the old literature on alignment research sharing policies after Tamsin Leake's recent post and came across some discussion of pivotal acts as well.

Hiring people for your pivotal act project is going to be tricky. [...] People on your team will have a low trust and/or adversarial stance towards neighboring institutions and collaborators, and will have a hard time forming good-faith collaboration. This will alienate other institutions and make them not want to work with you or be supportive of you.

This is in a context where the 'pivotal act' example is using a safe ASI to shut down all AI labs.[1]

My thought is that I don't see why a pivotal act needs to be that. I don't see why shutting down AI labs or using nanotech to disassemble GPUs on Earth would be necessary. These may be among the 'most direct' or 'simplest to imagine' possible...

edit: i think i've received enough expressions of interest (more would have diminishing value but you're still welcome to), thanks everyone!

i recall reading in one of the MIRI posts that Eliezer believed a 'world model violation' would be needed for success to be likely.

i believe i may be in possession of such a model violation and am working to formalize it, where by formalize i mean write in a way that is not 'hard-to-understand intuitions' but 'very clear text that leaves little possibility for disagreement once understood'. it wouldn't solve the problem, but i think it would make it simpler so that maybe the community could solve it.

if you'd be interested in providing feedback on such a 'clearly written version', please let me know as a comment or message.[1] (you're not committing to anything by doing so, rather just saying "i'm the kind of person who would be interested in this if your claim is true"). to me, the ideal feedback is from someone who can look at the idea under 'hard' assumptions (of the type MIRI has) about the difficulty of pointing an ASI, and see if the idea seems promising (or 'like a relevant model violation') from that perspective.

- ^

i don't have many cont

A quote from an old Nate Soares post that I really liked:

...It is there, while staring the dark world in the face, that I find a deep well of intrinsic drive. It is there that my resolve and determination come to me, rather than me having to go hunting for them.

I find it amusing that "we need lies because we can't bear the truth" is such a common refrain, given how much of my drive stems from my response to attempting to bear the truth.

I find that it's common for people to tell themselves that they need the lies in order to bear reality. In fact, I bet that many of you can think of one thing off the top of your heads that you're intentionally tolerifying, because the truth is too scary to even consider. (I've seen at least a dozen failed relationships dragged out for months and months due to this effect.)

I say, if you want the intrinsic drive, drop the illusion. Refuse to tolerify. Face the facts that you feared you would not be able to handle. You are likely correct that they will be hard to bear, and you are likely correct that attempting to bear them will change you. But that change doesn't need to break you. It can also make you stronger, and fuel your resolve.

So see the dark worl

(Personal) On writing and (not) speaking

I often struggle to find words and sentences that match what I intend to communicate.

Here are some problems this can cause:

- Wordings that are odd or unintuitive to the reader, but that are at least literally correct.[1]

- Not being able to express what I mean, and having to choose between not writing it, or risking miscommunication by trying anyways. I tend to choose the former unless I'm writing to a close friend. Unfortunately this means I am unable to express some key insights to a general audience.

- Writing taking lots of time: I usually have to iterate many times on words/sentences until I find one which my mind parses as referring to what I intend. In the slowest cases, I might finalize only 2-10 words per minute. Even after iterating, my words are still sometimes interpreted in ways I failed to foresee.

These apply to speaking, too. If I speak what would be the 'first iteration' of a sentence, there's a good chance it won't create an interpretation matching what I intend to communicate. In spoken language I have no chance to constantly 'rewrite' my output before sending it. This is one reason, but not the only reason, that I've had a policy of t...

i observe that processes seem to have a tendency towards what i'll call "surreal equilibria". [status: trying to put words to a latent concept. may not be legible, feel free to skip. partly 'writing as if i know the reader will understand' so i can write about this at all. maybe it will interest some.]

progressively smaller-scale examples:

- it's probably easiest to imagine this with AI neural nets, procedurally following some adapted policy even as the context changes from the one they grew in. if these systems have an influential, hard to dismantle role, the

Here's a tampermonkey script that hides the agreement score on LessWrong. I wasn't enjoying this feature because I don't want my perception to be influenced by that; I want to judge purely based on ideas, and on my own.

Here's what it looks like:

```javascript
// ==UserScript==
// @name Hide LessWrong Agree/Disagree Votes
// @namespace http://tampermonkey.net/
// @version 1.0
// @description Hide agree/disagree votes on LessWrong comments.
// @author ChatGPT4
// @match https://www.lesswrong.com/*
// @grant none
// ==/UserScript==
```

(fun...

I was looking at this image in a post and it gave me some (loosely connected/ADD-type) thoughts.

In order:

- The entities outside the box look pretty scary.

- I think I would get over that quickly, they're just different evolved body shapes. The humans could seem scary-looking from their pov too.

- Wait.. but why would the robots have those big spiky teeth? (implicit question: what narratively coherent world could this depict?)

- Do these forms have qualities associated with predator species, and that's why they feel scary? (Is this a predator-species-world?)

- Most human

random idea for a voting system (i'm a few centuries late. this is just for fun.)

instead of voting directly, everyone is assigned to a discussion group of x (say 5) of themself and others near them. the group meets to discuss at an official location (attendance is optional). only if those who showed up reach consensus does the group cast one vote.

many of these groups would not reach consensus, say 70-90%. that's fine. the point is that most of the ones which do would be composed of people who make and/or are receptive to valid arguments. this would then sh...
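A toy version of the tallying rule, for fun. It rests on assumptions that are not in the original: voters are listed in order of proximity, so "near them" is approximated by consecutive chunks of `groupSize`; each voter has one preferred option and an attendance flag; and "consensus" is read as unanimity among those who showed up. The function name `groupConsensusTally` is hypothetical.

```javascript
// Hypothetical sketch: each group of `groupSize` nearby voters casts one
// vote, but only if every attendee agrees.
function groupConsensusTally(voters, groupSize = 5) {
  const tally = new Map();
  for (let start = 0; start < voters.length; start += groupSize) {
    const group = voters.slice(start, start + groupSize);
    // Attendance is optional: only those who showed up take part.
    const present = group.filter((voter) => voter.attends);
    if (present.length === 0) continue;
    const choice = present[0].preference;
    // Unanimity among attendees stands in for "reaching consensus".
    if (present.every((voter) => voter.preference === choice)) {
      tally.set(choice, (tally.get(choice) || 0) + 1);
    }
  }
  return tally;
}

const voters = [
  // First group: every attendee agrees, so it casts one vote for "A".
  { preference: "A", attends: true },
  { preference: "A", attends: true },
  { preference: "B", attends: false },
  { preference: "A", attends: true },
  { preference: "A", attends: true },
  // Second group: attendees disagree, so it casts no vote.
  { preference: "A", attends: true },
  { preference: "B", attends: true },
  { preference: "B", attends: true },
  { preference: "A", attends: false },
  { preference: "B", attends: false },
];

// Only the first group contributes to the tally.
console.log(groupConsensusTally(voters));
```

In this example, the absent "B" voter in the first group does not block consensus. That matches the idea that attendance is optional.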

i am kind of worried by the possibility that this is not true: there is an 'ideal procedure for figuring out what is true'.

for that to be not true, it would mean that: for any (or some portion of?) task(s), the only way to solve it is through something like a learning/training process (in the AI sense), or other search-process-involving-checking. it would mean that there's no 'reason' behind the solution being what it is, it's just a {mathematical/logical/algorithmic/other isomorphism} coincidence.

for it to be true, i guess it would mean that there's anoth...

Mutual Anthropic Capture, A Decision-theoretic Fermi paradox solution

(copied from discord, written for someone not fully familiar with rat jargon)

(don't read if you wish to avoid acausal theory)

simplified setup

- there are two values. one wants to fill the universe with A, and the other with B.

- for each of them, filling it halfway is really good, and filling it all the way is just a little bit better. in other words, they are non-linear (concave) utility functions.

- whichever one comes into existence first can take control of the universe, and fill it with 100% of what th
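A short calculation shows why the non-linearity matters in this setup. The square-root utility below is an assumption, chosen only because it is concave (a half-filled universe already gives most of the value). By Jensen's inequality, any strictly concave utility gives the same ordering.

```javascript
// Illustrative concave utility over the fraction of the universe filled.
// Math.sqrt is an assumption; the setup only says utility is non-linear,
// with half nearly as good as all.
const utility = (fraction) => Math.sqrt(fraction);

// Expected utility of a gamble: get the whole universe with the given
// probability, otherwise get nothing.
function gambleUtility(probabilityOfWinning) {
  return probabilityOfWinning * utility(1) + (1 - probabilityOfWinning) * utility(0);
}

// Utility of a guaranteed share of the universe.
function sureShareUtility(share) {
  return utility(share);
}

console.log(gambleUtility(0.5)); // 0.5
console.log(sureShareUtility(0.5)); // about 0.707
```

Under this assumption, each value strictly prefers a guaranteed half to a 50% chance of the whole.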

i've wished to have a research buddy who is very knowledgeable about math or theoretical computer science to answer questions or program experiments (given good specification). but:

- idk how to find such a person

- such a person may wish to focus on their own agenda instead

- unless they're a friend already, idk if i have great evidence that i'd be impactful to support.

so: i could instead do the inverse with someone. i am good at having creative ideas, and i could try to have new ideas about your thing, conditional on me (1) being able to {quickly understand it} a...

avoiding akrasia by thinking of the world in terms of magic: the gathering effects

example initial thought process: "i should open my laptop just to write down this one idea and then close it and not become distracted".

laptop rules text: "when activated, has an 80% chance of making you become distracted"

new reasoning: "if i open it, i need to simultaneously avoid that 80% chance somehow."

why this might help me: (1) i'm very used to strategizing about how to use a kit of this kind of effect, from playing such games. (2) maybe normal reasoning about 'wh...

From an OpenAI technical staff member who is also a prolific twitter user 'roon':

An official OpenAI post has also confirmed:

i'm watching Dominion again to remind myself of the world i live in, to regain passion to Make It Stop

it's already working.

negative values collaborate.

for negative values, as in values about what should not exist, matter can be both "not suffering" and "not a staple", and "not [any number of other things]".

negative values can collaborate with positive ones, although much less efficiently: the positive ones just need to make the slight trade of being "not ..." to gain matter from the negatives.
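A toy model of why negative values combine so cheaply. Everything here is an illustrative assumption: a unit of matter has a single named state, each negative value forbids a set of states, and each positive value (the hypothetical "fill with A" and "fill with B") wants one specific state.

```javascript
// Negative values only forbid states.
const negativeValues = [
  { name: "no suffering", forbidden: new Set(["suffering"]) },
  { name: "no staples", forbidden: new Set(["staple"]) },
];

// Hypothetical positive values, each wanting one specific state.
const positiveValues = [
  { name: "fill with A", wanted: "A" },
  { name: "fill with B", wanted: "B" },
];

// Names of all values that a unit of matter in `state` satisfies.
function satisfiedBy(state) {
  const negatives = negativeValues
    .filter((value) => !value.forbidden.has(state))
    .map((value) => value.name);
  const positives = positiveValues
    .filter((value) => value.wanted === state)
    .map((value) => value.name);
  return [...negatives, ...positives];
}

// A unit turned into "A" satisfies both negative values and one positive
// value; no single state can satisfy both positive values.
console.log(satisfiedBy("A"));
console.log(satisfiedBy("suffering"));
```

The asymmetry is that a negative value is met by every state outside a small forbidden set, while a positive value is met by only one state.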

What is malevolence? On the nature, measurement, and distribution of dark traits was posted two weeks ago (and i recommend it). there was a questionnaire discussed in that post which tries to measure the levels of 'dark traits' in the respondent.

i'm curious about the results[1] of rationalists[2] on that questionnaire, if anyone wants to volunteer theirs. there are short and long versions (16 and 70 questions).

- ^

(or responses to the questions themselves)

- ^

i also posted the same shortform to the EA forum, asking about EAs

one of my basic background assumptions about agency:

there is no ontologically fundamental caring/goal-directedness, there is only the structure of an action being chosen (by some process, for example a search process), then taken.

this makes me conceptualize the 'ideal agent structure' as being "search, plus a few extra parts". in my model of it, optimal search is queried for what action fulfills some criteria ('maximizes some goal') given some pointer (~ world model) to a mathematical universe sufficiently similar to the actual universe → search's output i...
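A minimal sketch of "search, plus a few extra parts". It assumes a finite candidate set and a world model that maps an action directly to a predicted outcome; both are simplifications not in the original. The search function has no goal of its own. Goal-directedness appears only once a fixed criterion is wired in and the search's output is acted on. `search` and `makeAgent` are hypothetical names.

```javascript
// Generic search: returns the candidate whose predicted outcome scores
// highest under `criterion`. It holds no goal of its own.
function search(candidates, criterion, worldModel) {
  let best = null;
  let bestScore = -Infinity;
  for (const action of candidates) {
    const score = criterion(worldModel(action));
    if (score > bestScore) {
      bestScore = score;
      best = action;
    }
  }
  return best;
}

// An agent as "search, plus a few extra parts": a fixed goal, a world
// model, and an actuator that takes whatever action the search returns.
function makeAgent(candidates, goal, worldModel, act) {
  return () => act(search(candidates, goal, worldModel));
}

// Toy world: an action shifts a room's temperature away from 20 degrees.
const worldModel = (action) => ({ temperature: 20 + action });
const actions = [-2, -1, 0, 1, 2];

// The same search procedure serves opposite criteria.
const warmest = search(actions, (s) => s.temperature, worldModel); // 2
const coolest = search(actions, (s) => -s.temperature, worldModel); // -2

// Wiring in a goal (closest to 21 degrees) and an actuator yields an agent.
const taken = [];
const agent = makeAgent(actions, (s) => -Math.abs(s.temperature - 21), worldModel, (a) => taken.push(a));
agent();
console.log(warmest, coolest, taken);
```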

I recall a shortform here speculating that a good air quality hack could be a small fan aimed at one's face to blow away the CO2 one breathes out. I've been doing this and experience it as helpful, though it's hard to know for sure.

This also includes having it pointed above my face during sleep, based on experience after waking. (I tended to be really fatigued right after waking. Keeping water near bed to drink immediately also helped with that.)

I notice that my strong-votes now give/take 4 points. I'm not sure if this is a good system.

At what point should I post content as top-level posts rather than shortforms?

For example, a recent writing I posted to shortform was ~250 concise words plus an image. It would be a top-level post on my blog if I had one set up (maybe soon :p).

Some general guidelines on this would be helpful.

i'm finally learning to prove theorems (the earliest ones following from the Peano axioms) in lean, starting with the natural number game. it is actually somewhat fun, the same kind of fun that mtg has by being not too big to fully comprehend, but still engaging to solve.

(if you want to 'play' it as well, i suggest first reading a bit about what formal systems are and interpretation before starting. also, it was not clear to me at first when the game was introducing axioms vs derived theorems, so i wondered how some operations (e.g 'induction') were a...
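For a taste of what the first levels involve, here is the classic first induction proof, `0 + n = n`. It is written against Lean 4 core's built-in `Nat`, not the game's own natural-number type and restricted tactic set, so the names and available tactics differ from the game. The theorem name `my_zero_add` is hypothetical and avoids clashing with the library's `Nat.zero_add`.

```lean
-- Addition on Nat recurses on its second argument, so `n + 0 = n` holds
-- by definition, but `0 + n = n` needs induction on `n`.
theorem my_zero_add (n : Nat) : 0 + n = n := by
  induction n with
  | zero => rfl
  | succ k ih =>
    -- The goal is definitionally `Nat.succ (0 + k) = Nat.succ k`,
    -- so applying `Nat.succ` to both sides of `ih` closes it.
    exact congrArg Nat.succ ih
```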

in the space of binary-sequences of all lengths, i have an intuition that {the rate at which there are new 'noticed patterns' found at longer lengths} decelerates as the length increases.

what do i mean by "noticed patterns"?

in some sense of 'pattern', each full sequence is itself a 'unique pattern'. i'm using this phrase to avoid that sense.

rather, my intuition is that {what could in principle be noticed about sequences of higher lengths} exponentially tends to be things that had already been noticed of sequences of lower lengths. 'meta patterns' and

i tentatively think an automatically-herbivorous and mostly-asocial species/space-of-minds would have been morally best to be the one which first reached the capability threshold to start building technology and civilization.

- herbivorous -> less ingrained biases against other species[1], no factory farming

- asocial -> less dysfunctional dynamics within the species (like automatic status-seeking, rhetoric, etc), and less 'psychologically automatic processes' which fail to generalize out of the evolutionary distribution.[2]

- ^

i expect there still would be s

what should i do with strong claims whose reasons are not easy to articulate, or the culmination of a lot of smaller subjective impressions? should i just not say them publicly, to not conjunctively-cause needless drama? here's an example:

"i perceive the average LW commenter as maybe having read the sequences long ago, but if so having mostly forgotten their lessons."

Platonism

(status: uninterpretable for 2/4 reviewers, the understanding two being friends who are used to my writing style; i'll aim to write something that makes this concept simple to read)

'Platonic' is a categorization I use internally, and my agenda is currently the search for methods to ensure AI/ASI will have this property.

By this word, I mean the following acceptance/rejection criteria:

✅ Has no goals

✅ Has goals about what to do in isolation. Example: "in isolation from any world, (try to) output A"[1]

❌ Has goals related to physical world states. Example: "(...

a possible research direction which i don't know if anyone has explored: what would a training setup which provably creates a (probably[1]) aligned system look like?

my current intuition, which is not good evidence here beyond elevating the idea from noise, is that such a training setup might somehow leverage how the training data and {subsequent-agent's perceptions/evidence stream} are sampled from the same world, albeit with different sampling procedures. for example, the training program could intake both a dataset and an outer-alignment-goal-function, a...

a moral intuition i have: to avoid culturally/conformistly-motivated cognition, it's useful to ask:

if we were starting over, new to the world but with all the technology we have now, would we recreate this practice?

example: we start out and there's us, and these innocent fluffy creatures that can't talk to us, but they can be our friends. we're just learning about them for the first time. would we, at some point, spontaneously choose to kill them and eat their bodies, despite us having plant-based foods, supplements, vegan-assuming nutrition guides, etc? to me, the answer seems obviously not. the idea would not even cross our minds.

(i encourage picking other topics and seeing how this applies)

something i'd be interested in reading: writings about the authors' alignment ontologies over time, i.e. from when they first heard of AI till now

i saw a shortform from 4 years ago that said in passing:

if we assume that signaling is a huge force in human thinking

is signalling a huge force in human thinking?

if so, does anyone want to give examples of this that i, being autistic, may not have noticed?

random (fun-to-me/not practical) observation: probability is not (necessarily) fundamental. we can imagine totally discrete mathematical worlds where it is possible for an entity inside it to observe the entirety of that world including itself. (let's say it monopolizes the discrete world and makes everything but itself into 1s so it can be easily compressed and stored in its world model such that the compressed data of both itself and the world can fit inside of the world)

this entity would be able to 'know' (prove?) with certainty everything about that ma...

my language progression on something, becoming increasingly general: goals/value function -> decision policy (not all functions need to be optimizing towards a terminal value) -> output policy (not all systems need to be agents) -> policy (in the space of all possible systems, there exist some whose architectures do not converge to output layer)

(note: this language isn't meant to imply that a system's behavior must be describable with some simple function; in the limit, the descriptive function and the neural network are the same)

I'm interested in joining a community or research organization of technical alignment researchers who care about and take seriously astronomical-suffering risks. I'd appreciate being pointed in the direction of such a community if one exists.

this could have been noise, but i noticed an increase in text fearing spies among the text i've seen in the past few days[1]. i actually don't know how much this concern is shared by LW users, so i think it might be worth writing that, in my view:

- (AFAIK) both governments[2] are currently reacting inadequately to unaligned optimization risk. as a starting prior, there's not strong reason to fear more one government {observing/spying on} ML conferences/gatherings over the other, absent evidence that one or the other will start taking unaligned optimi

(status: metaphysics) two ways it's conceivable[1] that reality could have been different:

- Physical contingency: The world has some starting condition that changes according to some set of rules, and it's conceivable that either could have been different

- Metaphysical contingency: The more fundamental 'what reality is made of', not meaning its particular configuration or laws, could have been some other,[2] unknowable unknown, instead of "logic-structure" and "qualia"

- ^

(i.e. even if actually it being as it is is logically necessary somehow)

in most[1] kinds of infinite worlds, values which are quantitative[2] become fanatical in a way, because they are constrained to:

- making something valued occur with at least >0% frequency, or:

- making something disvalued occur with exactly 0% frequency

"how is either possible?" - as a simple case, if there are infinitely many copies of one small world, then making either true in that small world snaps the overall quantity to either 0 or infinity. then generalize this possibility to more-diverse worlds. (we can abstract away 'infinity' and write about p...
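The simple case can be written as a limit. As an illustration, assume $N$ identical copies of the small world, each containing a quantity $q \ge 0$ of the valued or disvalued thing. The total is then:

$$
\lim_{N \to \infty} N q =
\begin{cases}
0 & \text{if } q = 0, \\
\infty & \text{if } q > 0.
\end{cases}
$$

Any nonzero amount in the small world, however tiny, gives an infinite total, and only exactly zero gives zero.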

on chimera identity. (edit: status: received some interesting objections from an otherkin server. most importantly, i'd need to explain how this can be true despite humans evolving a lot more from species in their recent lineage. i think this might be possible with something like convergent evolution at a lower level, but at this stage in processing i don't have concrete speculation about that)

this is inspired by seeing how meta-optimization processes can morph one thing into other things. examples: a selection process running on a neural net, an image dif...

i notice my intuitions are adapting to the ontology where people are neural networks. i now sometimes vaguely-visualize/imagine a neural structure giving outputs to the human's body when seeing a human talk or make facial expressions, and that neural network rather than the body is framed as 'them'.

a friend said i have the gift of taking ideas seriously, not keeping them walled off from a [naive/default human reality/world model]. i recognize this as an example of that.

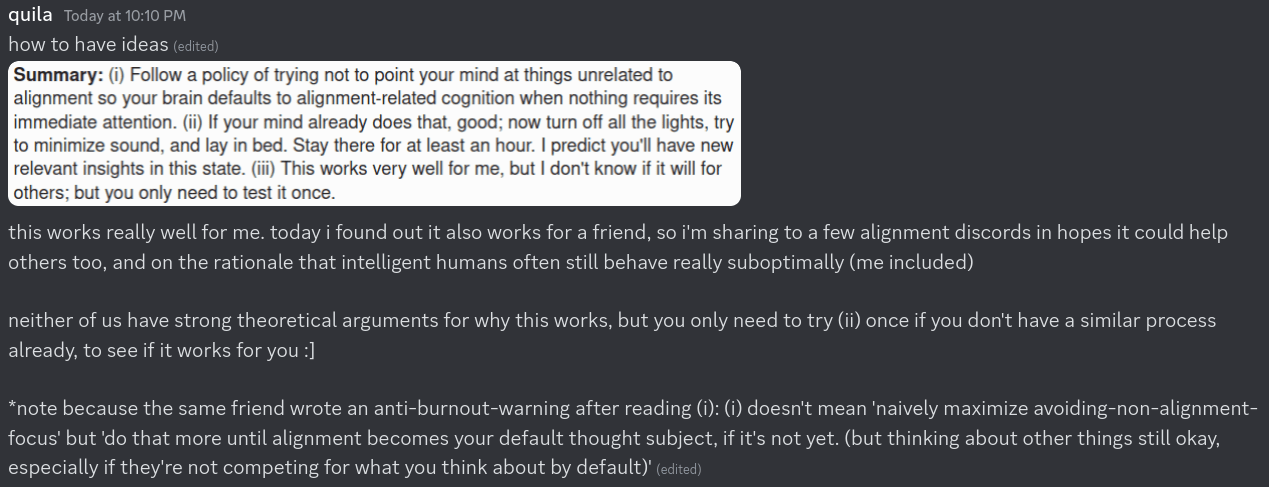

(Copied from my EA forum comment)

I think it's valuable for some of us (those who also want to) to try some odd research/thinking-optimizing-strategy that, if it works, could be enough of a benefit to push at least that one researcher above the bar of 'capable of making serious progress on the core problems'.

One motivating intuition: if an artificial neural network were consistently not solving some specific problem, a way to solve the problem would be to try to improve or change that ANN somehow or otherwise solve it with a 'different' one. Humans, by defa...

(status: silly)

newcombs paradox solutions:

1: i'll take both boxes, because their contents are already locked in.

2: i'll take only box B, because the content of box B is acausally dependent on my choice.

3: i'll open box B first. if it was empty, i won't open box A. if it contained $1m, i will open box A. this way, i can defeat Omega by making our policies have unresolvable mutual dependence.
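Options 1 and 2 differ on whether to treat Omega's prediction as dependent on your choice. The sketch below computes expected payoffs for a fixed policy, which is the calculation option 2 relies on and option 1 rejects. The $1,000 in box A and the `accuracy` parameter are assumptions taken from the usual statement of the problem, not from the text above.

```javascript
// Standard Newcomb payoffs (assumed): box A always holds $1,000; Omega puts
// $1,000,000 in box B only if it predicted you would take box B alone.
const BOX_A = 1000;
const BOX_B_IF_PREDICTED_ONE_BOX = 1000000;

// `accuracy` is the probability that Omega's prediction matches your
// actual choice.
function expectedPayoff(oneBox, accuracy) {
  if (oneBox) {
    // One-boxers get box B's prize only when Omega predicted correctly.
    return accuracy * BOX_B_IF_PREDICTED_ONE_BOX;
  }
  // Two-boxers get box A for sure, plus box B's prize only when Omega
  // mispredicted.
  return BOX_A + (1 - accuracy) * BOX_B_IF_PREDICTED_ONE_BOX;
}

console.log(expectedPayoff(true, 0.99), expectedPayoff(false, 0.99));
```

With these numbers, one-boxing has the higher expectation whenever the accuracy exceeds 0.5005.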

story for how future LLM training setups could create a world-valuing (-> instrumentally converging) agent:

the initial training task of predicting a vast amount of data from the general human dataset creates an AI that's ~just 'the structure of prediction', a predefined process which computes the answer to the singular question of what text likely comes next.

but subsequent training steps - say rlhf - change the AI from something which merely is this process, to something which has some added structure which uses this process, e.g which passes it certain...

(self-quote relevant to non-agenticness)

Inside a superintelligent agent - defined as a superintelligent system with goals - there must be a superintelligent reasoning procedure entangled with those goals - an 'intelligence process' which procedurally figures out what is true. 'Figuring out what is true' happens to be instrumentally needed to fulfill the goals, so agents contain intelligence, but intelligence-the-ideal-procedure-for-figuring-out-what-is-true is not inherently goal-having.

Two people I shared this with said it reminded them of 'retarget the search', a...

a super-coordination story with a critical flaw

part 1. supercoordination story

- select someone you want to coordinate with without any defection risks

- share this idea with them. it only works if they also have the chance to condition their actions on it.

- general note to maybe make reading easier: this is fully symmetric.

- after the acute risk period, in futures where it's possible: run a simulation of the other person (and you).

- the simulation will start in this current situation, and will be free to terminate when actions are no longer long-term releva...

I wrote this for a discord server. It's a hopefully very precise argument for unaligned intelligence being possible in principle (which was being debated), which was aimed at aiding early deconfusion about questions like 'what are values fundamentally, though?' since there was a lot of that implicitly, including some with moral realist beliefs.

...1. There is an algorithm behind intelligent search. Like simpler search processes, this algorithm does not, fundamentally, need to have some specific value about what to search for - for if it did, one's search proce

(edit: see disclaimers[1])

- Creating superintelligence generally leads to runaway optimization.

- Under the anthropic principle, we should expect there to be a 'consistent underlying reason' for our continued survival.[2]

- By default, I'd expect the 'consistent underlying reason' to be a prolonged alignment effort in absence of capabilities progress. However, this seems inconsistent with the observation of progressing from AI winters to a period of vast training runs and widespread technical interest in advancing capabilities.

- That particular 'consistent underlyin

'Value Capture' - An anthropic attack against some possible formally aligned ASIs

(this is a more specific case of anthropic capture attacks in general, aimed at causing a superintelligent search process within a formally aligned system to become uncertain about the value function it is to maximize (or its output policy more generally))

Imagine you're a superintelligence somewhere in the world that's unreachable to life on Earth, and you have a complete simulation of Earth. You see a group of alignment researchers about to successfully create a formal-value-...

People are confused about the basics because the basics are insufficiently justified.