If a tree falls in the forest, and two people are around to hear it, does it make a sound?

I feel like typically you'd say yes, it makes a sound. Not two sounds, one for each person, but one sound that both people hear.

But that must mean that a sound is not just auditory experiences, because then there would be two rather than one. Rather it's more like, emissions of acoustic vibrations. But this implies that it also makes a sound when no one is around to hear it.

Finally gonna start properly experimenting on stuff. Just writing up what I'm doing to force myself to do something, not claiming this is necessarily particularly important.

Llama (and many other models, but I'm doing experiments on Llama) has a piece of code that looks like this:

h = x + self.attention(self.attention_norm(x), start_pos, freqs_cis, mask)

out = h + self.feed_forward(self.ffn_norm(h))

Here, out is the result of the transformer layer (aka the residual stream), and the vectors self.attention(self.attention_norm(x), start_pos, freqs_cis, mask) and self.feed_forward(self.ffn_norm(h)) are basically where all the computation happens. So basically the transformer proceeds as a series of "writes" to the residual stream using these two vectors.

I took all the residual vectors for some queries to Llama-8b and stacked them into a big matrix M with 4096 columns (the internal hidden dimensionality of the model). Then using SVD, I can express $M = \sum_i s_i \, (u_i \otimes v_i)$, where the $u$'s and $v$'s are independent unit vectors. This basically decomposes the "writes" into some independent locations in the residual stream (u's), some lat...
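A minimal sketch of that decomposition, assuming NumPy and using random numbers as a stand-in for the real residual writes (the sizes `n_writes` and `d_model` are illustrative, not the ones from the experiment). NumPy's `U`/`Vt` naming follows its own convention and may not line up with which side the post labels u and v; with one write per row, the rows of `Vt` are the directions that live in the residual stream.

```python
import numpy as np

# Stand-in data: random "writes" instead of real Llama activations.
# n_writes and d_model are illustrative; the post's matrix has 4096 columns.
rng = np.random.default_rng(0)
n_writes, d_model = 200, 64
M = rng.normal(size=(n_writes, d_model))

# Thin SVD: M = U @ diag(s) @ Vt, singular values sorted in descending order.
U, s, Vt = np.linalg.svd(M, full_matrices=False)

# Rebuild M as a sum of rank-one terms s_i * outer(U[:, i], Vt[i]).
M_rebuilt = sum(s[i] * np.outer(U[:, i], Vt[i]) for i in range(len(s)))

# Keep only the top_k strongest components and measure what is lost.
top_k = 8
M_top = (U[:, :top_k] * s[:top_k]) @ Vt[:top_k]
rel_err = np.linalg.norm(M - M_top) / np.linalg.norm(M)
print(f"relative error with {top_k} components: {rel_err:.3f}")
```

On random data the spectrum is flat, so truncation loses a lot; on real activations one would look at how fast `s` decays to see how many independent "write" directions carry most of the signal.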

Thesis: while consciousness isn't literally epiphenomenal, it is approximately epiphenomenal. One way to think of this is that your output bandwidth is much lower than your input bandwidth. Another way to think of this is the prevalence of akrasia, where your conscious mind actually doesn't have full control over your behavior. On a practical level, the ecological reason for this is that it's easier to build a general mind and then use whichever parts of the mind are useful than to narrow down the mind to only work with a small slice of possibilities. This is quite analogous to how we probably use LLMs for a much narrower set of tasks than what they were trained for.

Thesis: There are three distinct coherent notions of "soul": sideways, upwards and downwards.

By "sideways souls", I basically mean what materialists would translate the notion of a soul to: the brain, or its structure, or something like that. By "upwards souls", I mean attempts to remove arbitrary/contingent factors from the sideways souls, for instance by equating the soul with one's genes or utility function. These are different in the particulars, but they seem conceptually similar and mainly differ in how they attempt to cut the question of identity (ide...

Thesis: in addition to probabilities, forecasts should include entropies (how many different conditions are included in the forecast) and temperatures (how intense is the outcome addressed by the marginal constraint in this forecast, i.e. the big-if-true factor).

I say "in addition to" rather than "instead of" because you can't compute probabilities just from these two numbers. If we assume a Gibbs distribution, there's the free parameter of energy: ln(P) = S - E/T. But I'm not sure whether this energy parameter has any sensible meaning with more general ev...

Thesis: whether or not tradition contains some moral insights, commonly-told biblical stories tend to be too sparse to be informative. For instance, there's no plot-relevant reason why it should be bad for Adam and Eve to have knowledge of good and evil. Maybe there's some interpretation of good and evil where it makes sense, but it seems like then that interpretation should have been embedded more properly in the story.

Thesis: one of the biggest alignment obstacles is that we often think of the utility function as being basically-local, e.g. that each region has a goodness score and we're summing the goodness over all the regions. This basically-guarantees that there is an optimal pattern for a local region, and thus that the global optimum is just a tiling of that local optimal pattern.

Even if one adds a preference for variation, this likely just means that a distribution of patterns is optimal, and the global optimum will be a tiling of samples from said distribution.
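As a toy illustration of the tiling point, here is a brute-force check on a tiny world of binary cells split into non-overlapping regions. Everything in it is an assumption made for the sketch: the region size, the number of regions, and especially `local_score`, which is an arbitrary made-up goodness function with a unique best pattern.

```python
from itertools import product

REGION_SIZE = 3  # cells per region (illustrative)
N_REGIONS = 3    # regions in the world (illustrative)

def local_score(p):
    # Hypothetical goodness score for one region of three binary cells.
    return 2 * p[0] + p[1] + p[2] - 3 * p[0] * p[2]

def global_score(world):
    # Basically-local utility: sum the goodness over all the regions.
    regions = [world[i:i + REGION_SIZE] for i in range(0, len(world), REGION_SIZE)]
    return sum(local_score(r) for r in regions)

# Best pattern for a single region, found by enumeration.
best_local = max(product((0, 1), repeat=REGION_SIZE), key=local_score)

# Best whole world, found by enumerating all 2**9 configurations.
best_global = max(product((0, 1), repeat=REGION_SIZE * N_REGIONS), key=global_score)

print(best_local, best_global)
```

Because the regions do not interact in this setup, the exhaustive search over the whole world lands on the locally optimal pattern repeated once per region.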

T...

Current agent models like argmax entirely lack any notion of "energy". Not only does this seem kind of silly on its own, I think it also leads to missing important dynamics related to temperature.

I think I've got it, the fix to the problem in my corrigibility thing!

So to recap: It seems to me that for the stop button problem, we want humans to control whether the AI stops or runs freely, which is a causal notion, and so we should use counterfactuals in our utility function to describe it. (Dunno why most people don't do this.) That is, if we say that the AI's utility should depend on the counterfactuals related to human behavior, then it will want to observe humans to get input on what to do, rather than manipulate them, because this is the only wa...

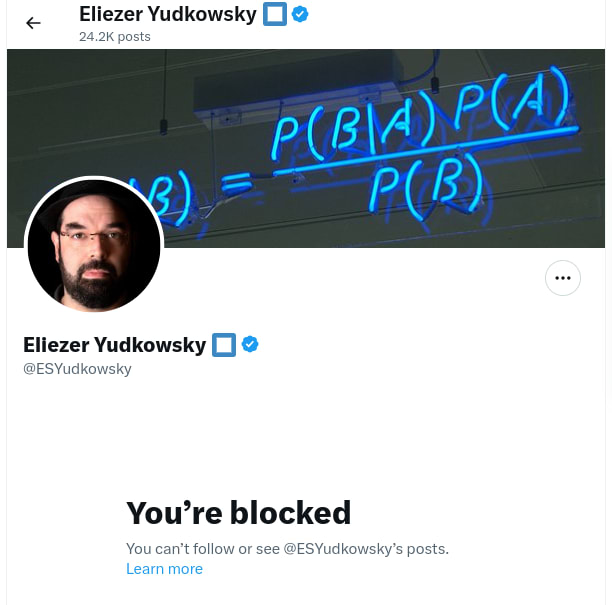

I was surprised to see this on twitter:

I mean, I'm pretty sure I knew what caused it (this thread or this market), and I guess I knew from Zack's stuff that rationalist cultism had gotten pretty far, but I still hadn't expected that something this small would lead to being blocked.

FYI: I have a low bar for blocking people who have according-to-me bad, overconfident takes about probability theory, in particular. For whatever reason, I find people making claims about that topic, in particular, really frustrating. ¯\_(ツ)_/¯

The block isn't meant as a punishment, just a "I get to curate my online experience however I want."

I'm not particularly interested in discussing it in depth. I'm more like giving you a data-point in favor of not taking the block personally, or particularly reading into it.

(But yeah, "I think these messages are very important", is likely to trigger my personal "bad, overconfident takes about probability theory" neurosis.)

This is awkwardly armchair, but… my impression of Eliezer includes him being just so tired, both specifically from having sacrificed his present energy in the past while pushing to rectify the path of AI development (by his own model thereof, of course!) and maybe for broader zeitgeist reasons that are hard for me to describe. As a result, I expect him to have entered into the natural pattern of having a very low threshold for handing out blocks on Twitter, both because he's beset by a large amount of sneering and crankage in his particular position and because the platform easily becomes a sinkhole in cognitive/experiential ways that are hard for me to describe but are greatly intertwined with the aforementioned zeitgeist tiredness.

Something like: when people run heavily out of certain kinds of slack for dealing with The Other, they reach a kind of contextual-but-bleed-prone scarcity-based closed-mindedness of necessity, something that both looks and can become “cultish” but where reaching for that adjective first is misleading about the structure around it. I haven't succeeded in extracting a more legible model of this, and I bet my perception is still skew to the reality, but I'...

I disagree with the sibling thread about this kind of post being “low cost”, BTW; I think adding salience to “who blocked whom” types of considerations can be subtly very costly.

I agree publicizing blocks has costs, but so does a strong advocate of something with a pattern of blocking critics. People publicly announcing "Bob blocked me" is often the only way to find out if Bob has such a pattern.

I do think it was ridiculous to call this cultish. Tuning out critics can be evidence of several kinds of problems, but not particularly that one.

This is a very useful point:

Most people with many followers on Twitter seem to need to have a hair trigger for blocking, or at least feel like they need to, in order to not constantly have terrible experiences.

I think that this is a point that people who aren't on social media that much don't get: You need to be very quick to block, because otherwise you will not have good experiences on the site.

MIRI full-time employed many critics of bayesianism for 5+ years and MIRI researchers themselves argued most of the points you made in these arguments. It is obviously not the case that critiquing bayesianism is the reason why you got blocked.

I've been thinking about how the way to talk about how a neural network works (instead of how it could hypothetically come to work by adding new features) would be to project away components of its activations/weights, but I got stuck because of the issue where you can add new components by subtracting off large irrelevant components.

I've also been thinking about deception and its relationship to "natural abstractions", and in that case it seems to me that our primary hope would be that the concepts we care about are represented at a larger "magnitude" than...

One thing that seems really important for agency is perception. And one thing that seems really important for perception is representation learning. Where representation learning involves taking a complex universe (or perhaps rather, complex sense-data) and choosing features of that universe that are useful for modelling things.

When the features are linearly related to the observations/state of the universe, I feel like I have a really good grasp of how to think about this. But most of the time, the features will be nonlinearly related; e.g. in order to do...

Thesis: money = negative entropy, wealth = heat/bound energy, prices = coldness/inverse temperature, Baumol effect = heat diffusion, arbitrage opportunity = free energy.

Thesis: there's a condition/trauma that arises from having spent a lot of time in an environment where there's excess resources for no reason, which can lead to several outcomes:

- Inertial drifting in the direction implied by one's prior adaptations,

- Conformity/adaptation to social popularity contests based on the urges above,

- Getting lost in meta-level preparations,

- Acting as a stickler for the authorities,

- "Bite the hand that feeds you",

- Tracking the resource/motivation flows present.

By contrast, if resources are contingent on a particular reason, everything takes shape according to said reason, and so one cannot make a general characterization of the outcomes.

Thesis: the median entity in any large group never matters and therefore the median voter doesn't matter and therefore the median voter theorem proves that democracies get obsessed about stuff that doesn't matter.

I recently wrote a post about myopia, and one thing I found difficult when writing the post was in really justifying its usefulness. So eventually I mostly gave up, leaving just the point that it can be used for some general analysis (which I still think is true), but without doing any optimality proofs.

But now I've been thinking about it further, and I think I've realized - don't we lack formal proofs of the usefulness of myopia in general? Myopia seems to mostly be justified by the observation that we're already being myopic in some ways, e.g. when train...

Thesis: a general-purpose interpretability method for utility-maximizing adversarial search is a sufficient and feasible solution to the alignment problem. Simple games like chess have sufficient features/complexity to work as a toy model for developing this, as long as you don't rely overly much on preexisting human interpretations for the game, but instead build the interpretability from the ground-up.

The universe has many conserved and approximately-conserved quantities, yet among them energy feels "special" to me. Some speculations why:

- The sun bombards the earth with a steady stream of free energy, which leaves out into the night.

- Time-evolution is determined by a 90-degree rotation of energy (Schrödinger equation/Hamiltonian mechanics).

- Breaking a system down into smaller components primarily requires energy.

- While aspects of thermodynamics could apply to many conserved quantities, we usually apply it to energy only, and it was first discovered in the c

Thesis: the problem with LLM interpretability is that LLMs cannot do very much, so for almost all purposes "prompt X => outcome Y" is all the interpretation we can get.

Counterthesis: LLMs are fiddly and usually it would be nice to understand what ways one can change prompts to improve their effectiveness.

Synthesis: LLM interpretability needs to start with some application (e.g. customer support chatbot) to extend the external subject matter that actually drives the effectiveness of the LLM into the study.

Problem: this seems difficult to access, and the people who have access to it are busy doing their job.

Thesis: linear diffusion of sparse lognormals contains the explanation for shard-like phenomena in neural networks. The world itself consists of ~discrete, big phenomena. Gradient descent allows those phenomena to make imprints upon the neural networks, and those imprints are what is meant by "shards".

... But shard theory is still kind of broken because it lacks consideration of the possibility that the neural network might have an impetus to nudge those shards towards specific outcomes.

Thesis: the openness-conscientiousness axis of personality is about whether you live as a result of intelligence or whether you live through a bias for vitality.

Thesis: if being loud and honest about what you think about others would make you get seen as a jerk, that's a you problem. It means you either haven't learned to appreciate others or haven't learned to meet people well.

Thought: couldn't you make a lossless SAE using something along the lines of:

- Represent the parameters of the SAE as simply a set of unit vectors for the feature directions.

- To encode a vector using the SAE, iterate: find the most aligned feature vector, dot them to get the coefficient for that feature vector, and subtract off the scaled feature vector to get a residual to encode further

With plenty of diverse vectors, this should presumably guarantee excellent reconstruction, so the main issue is to ensure high sparsity, which could be achieved by some ...
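The encoding loop described above is essentially matching pursuit, and can be sketched as follows, assuming NumPy, a random dictionary in place of learned feature directions, and a fixed step budget `n_steps` (all illustrative choices). One assumption worth flagging: "most aligned" is read here as largest absolute dot product, which allows negative coefficients; a more SAE-like variant would pick the largest signed dot product instead.

```python
import numpy as np

def greedy_encode(x, features, n_steps):
    """Greedy encoding against unit-norm feature rows; returns (coeffs, residual)."""
    coeffs = np.zeros(features.shape[0])
    residual = x.astype(float).copy()
    for _ in range(n_steps):
        scores = features @ residual           # alignment with every feature
        j = int(np.argmax(np.abs(scores)))     # most aligned feature (assumption: by |dot|)
        coeffs[j] += scores[j]                 # dot product is the coefficient
        residual = residual - scores[j] * features[j]  # subtract the scaled feature
    return coeffs, residual

# Random stand-in dictionary: n_features unit vectors in d dimensions.
rng = np.random.default_rng(0)
d, n_features = 16, 256
features = rng.normal(size=(n_features, d))
features /= np.linalg.norm(features, axis=1, keepdims=True)

x = rng.normal(size=d)
coeffs, residual = greedy_encode(x, features, n_steps=50)
reconstruction = coeffs @ features
print(np.linalg.norm(residual), np.count_nonzero(coeffs))
```

By construction `reconstruction + residual` equals `x`, and each step can only shrink the residual norm, so the reconstruction error is controlled by how many steps you allow. That step count is also the sparsity budget, which is where the trade-off mentioned above shows up.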

Idea: for a self-attention where you give it two prompts p1 and p2, could you measure the mutual information between the prompts using something vaguely along the lines of V1^T softmax(K1 K2^T/sqrt(dK)) V2?
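To make the shapes concrete, here is a sketch that just evaluates that expression, assuming NumPy, random keys/values in place of a real model's, and a softmax taken over the positions of p2 (the idea doesn't say which axis, so that is a guess). It makes no claim about whether the result tracks mutual information. Note that the output is a d_v × d_v matrix rather than a single number, so some further reduction (a trace or norm, say) would be needed to get a scalar.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax along the given axis.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_prompt_score(K1, V1, K2, V2):
    """Evaluate V1^T softmax(K1 K2^T / sqrt(d_k)) V2 for two prompts."""
    d_k = K1.shape[-1]
    weights = softmax(K1 @ K2.T / np.sqrt(d_k), axis=-1)  # shape (n1, n2)
    return V1.T @ weights @ V2                            # shape (d_v, d_v)

# Random stand-ins for the keys and values of prompts p1 and p2.
rng = np.random.default_rng(0)
n1, n2, d_k, d_v = 5, 7, 8, 4
K1, V1 = rng.normal(size=(n1, d_k)), rng.normal(size=(n1, d_v))
K2, V2 = rng.normal(size=(n2, d_k)), rng.normal(size=(n2, d_v))

score = cross_prompt_score(K1, V1, K2, V2)
print(score.shape)
```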

In the context of natural impact regularization, it would be interesting to try to explore some @TurnTrout-style powerseeking theorems for subagents. (Yes, I know he denounces the powerseeking theorems, but I still like them.)

Specifically, consider this setup: Agent U starts a number of subagents S1, S2, S3, ..., with the subagents being picked according to U's utility function (or decision algorithm or whatever). Now, would S1 seek power? My intuition says, often not! If S1 seeks power in a way that takes away power from S2, that could disadvantage U. So ...

Theory for a capabilities advance that is going to occur soon:

OpenAI is currently getting lots of novel triplets (S, U, A), where S is a system prompt, U is a user prompt, and A is an assistant answer.

Given a bunch of such triplets (S, U_1, A_1), ... (S, U_n, A_n), it seems like they could probably create a model P(S|U_1, A_1, ..., U_n, A_n), which could essentially "generate/distill prompts from examples".

This seems like the first step towards efficiently integrating information from lots of places. (Well, they could ofc also do standard SGD-based gradien...

I recently wrote a post presenting a step towards corrigibility using causality here. I've got several ideas in the works for how to improve it, but I'm not sure which one is going to be most interesting to people. Here's a list.

- Develop the stop button solution further, cleaning up errors, better matching the purpose, etc.

e.g.

...I think there may be some variant of this that could work. Like if you give the AI reward proportional to (where is a reward function for ) for its current world-state (rather than picking a policy t

Thesis: The motion of the planets is the strongest governing factor for life on Earth.

Reasoning: Time-series data often shows strong changes with the day and night cycle, and sometimes also with the seasons. The daily cycle and the seasonal cycle are governed by the relationship between the Earth and the sun. The Earth is a planet, and so its movement is part of the motion of the planets.

Are there good versions of DAGs for other things than causality?

I've found Pearl-style causal DAGs (and other causal graphical models) useful for reasoning about causality. It's a nice way to abstractly talk and think about it without needing to get bogged down with fiddly details.

In a way, causality describes the paths through which information can "flow". But information is not the only thing in the universe that gets transferred from node to node; there's also things like energy, money, etc., which have somewhat different properties but intuitively seem...

I have a concept that I expect to take off in reinforcement learning. I don't have time to test it right now, though hopefully I'd find time later. Until then, I want to put it out here, either as inspiration for others, or as a "called it"/prediction, or as a way to hear critique/about similar projects others might have made:

Reinforcement learning algorithms currently try to do stuff like learning to model the sum of their future rewards, e.g. expectations using V, A and Q functions for many algorithms, or the entire probability distribution in algorithms like ...

Because it's capability research. It shortens the TAI timeline with little compensating benefit.