All of Nikola Jurkovic's Comments + Replies

This has been one of the most important results for my personal timelines to date. It was a big part of the reason why I recently updated from ~3 year median to ~4 year median to AI that can automate >95% of remote jobs from 2022, and why my distribution overall has become narrower (less probability on really long timelines).

- All of the above but it seems pretty hard to have an impact as a high schooler, and many impact avenues aren't technically "positions" (e.g. influencer)

- I think that everyone except "Extremely resilient individuals who expect to get an impactful position (including independent research) very quickly" is probably better off following the strategy.

I think that for people (such as myself) who think/realize timelines are likely short, it is more truth-tracking to use terminology that actually represents their epistemic state (that timelines are likely short) rather than hedging all the time and making it seem like they're really uncertain.

Under my own lights, I'd be giving bad advice if I were hedging about timelines when giving advice (because the advice wouldn't be tracking the world as it is, it would be tracking a probability distribution I disagree with and thus a probability distribution that leads...

The waiting room strategy for people in undergrad/grad school who have <6 year median AGI timelines: treat school as "a place to be until you get into an actually impactful position". Try as hard as possible to get into an impactful position as soon as possible. As soon as you get in, you leave school.

Upsides compared to dropping out include:

- Lower social cost (appeasing family much more, which is a common constraint, and not having a gap in one's resume)

- Avoiding costs from large context switches (moving, changing social environment).

Extremely resi...

who realize AGI is only a few years away

I dislike the implied consensus / truth. (I would have said "think" instead of "realize".)

Dario Amodei and Demis Hassabis statements on international coordination (source):

Interviewer: The personal decisions you make are going to shape this technology. Do you ever worry about ending up like Robert Oppenheimer?

Demis: Look, I worry about those kinds of scenarios all the time. That's why I don't sleep very much. There's a huge amount of responsibility on the people, probably too much, on the people leading this technology. That's why us and others are advocating for, we'd probably need institutions to be built to help govern some of this. I talked...

It's nice to hear that there are at least a few sane people leading these scaling labs. It's not clear to me that their intentions are going to translate into much because there's only so much you can do to wisely guide development of this technology within an arms race.

But we could have gotten a lot less lucky with some of the people in charge of this technology.

My best guess is around 2/3.

Oh, I didn't get the impression that GPT-5 will be based on o3. Through the GPT-N convention I'd assume GPT-5 would be a model pretrained with 8-10x more compute than GPT-4.5 (which is the biggest internal model according to Sam Altman's statement at UTokyo).

Sam Altman said in an interview:

We want to bring GPT and o together, so we have one integrated model, the AGI. It does everything all together.

This statement, combined with today's announcement that GPT-5 will integrate the GPT and o series, seems to imply that GPT-5 will be "the AGI".

(However, the statement is also compatible with some future GPT series being "the AGI," as it's not specified that the first unified model will be AGI, just that some unified model will be AGI. It's also possible that the term AGI is being used in a nonstandard way.)

At a talk at UTokyo, Sam Altman said (clipped here and here):

- “We’re doing this new project called Stargate which has about 100 times the computing power of our current computer”

- “We used to be in a paradigm where we only did pretraining, and each GPT number was exactly 100x, or not exactly but very close to 100x and at each of those there was a major new emergent thing. Internally we’ve gone all the way to about a maybe like a 4.5”

- “We can get performance on a lot of benchmarks [using reasoning models] that in the old world we would have predi

Wow, that is a surprising amount of information. I wonder how reliable we should expect this to be.

"I don't think I'm going to be smarter than GPT-5" - Sam Altman

Context: he polled a room of students asking who thinks they're smarter than GPT-4 and most raised their hands. Then he asked the same question for GPT-5 and apparently only two students raised their hands. He also said "and those two people that said smarter than GPT-5, I'd like to hear back from you in a little bit of time."

The full talk can be found here. (the clip is at 13:45)

How are you interpreting this fact?

Sam Altman's power, money, and status all rely on people believing that GPT-(T+1) is going to be smarter than them. Altman doesn't have a good track record of being honest and sincere when it comes to protecting his power, money, and status.

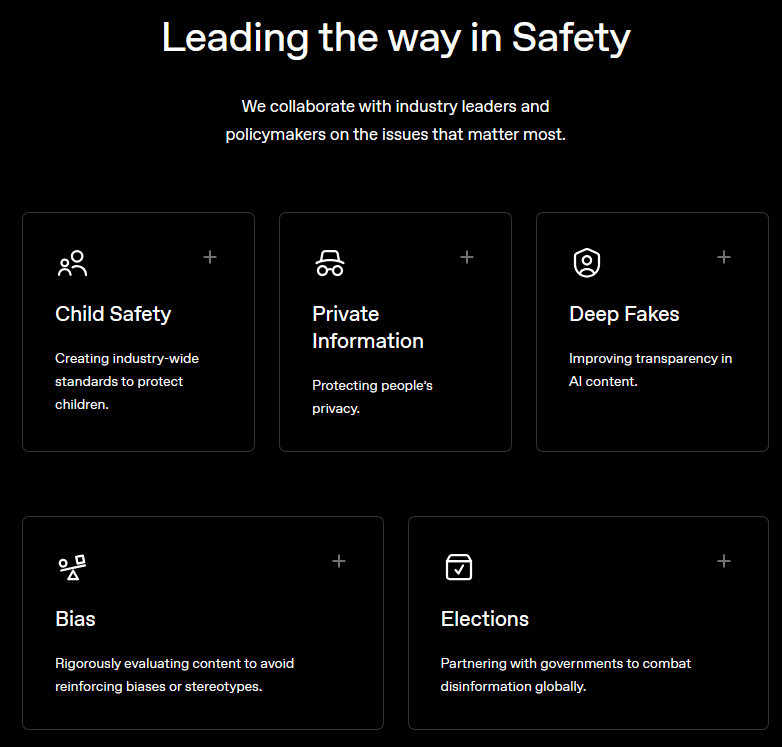

The redesigned OpenAI Safety page seems to imply that "the issues that matter most" are:

- Child Safety

- Private Information

- Deep Fakes

- Bias

- Elections

redesigned

What did it use to look like?

Some things I've found useful for thinking about what the post-AGI future might look like:

- Moore's Law for Everything

- Carl Shulman's podcasts with Dwarkesh (part 1, part 2)

- Carl Shulman's podcasts with 80000hours

- Age of Em (or the much shorter review by Scott Alexander)

More philosophical:

- Letter from Utopia by Nick Bostrom

- Actually possible: thoughts on Utopia by Joe Carlsmith

Entertainment:

Do people have recommendations for things to add to the list?

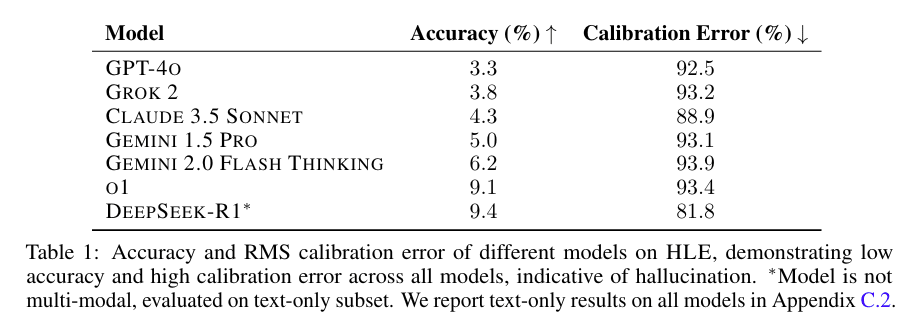

Note that for HLE, most of the difference in performance might be explained by Deep Research having access to tools while other models are forced to reply instantly with no tool use.

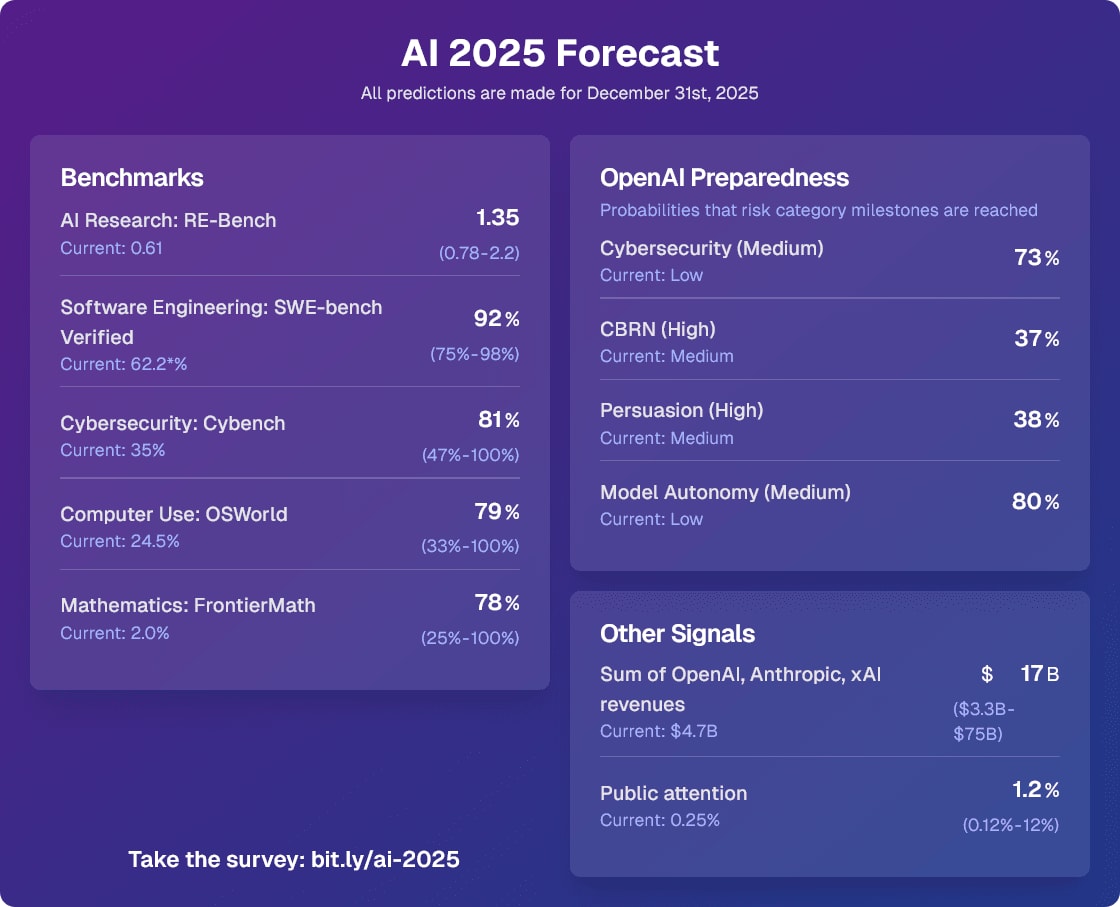

I will use this comment thread to keep track of notable updates to the forecasts I made for the 2025 AI Forecasting survey. As I said, my predictions coming true wouldn't be strong evidence for 3 year timelines, but it would still be some evidence (especially RE-Bench and revenues).

The first update: On Jan 31st 2025, the Model Autonomy category hit Medium with the release of o3-mini. I predicted this would happen in 2025 with 80% probability.

02/25/2025: the Cybersecurity category hit Medium with the release of the Deep Research System Card. I predicted thi...

DeepSeek R1 being #1 on Humanity's Last Exam is not strong evidence that it's the best model, because the questions were adversarially filtered against o1, Claude 3.5 Sonnet, Gemini 1.5 Pro, and GPT-4o. If they weren't filtered against those models, I'd bet o1 would outperform R1.

...To ensure question difficulty, we automatically check the accuracy of frontier LLMs on each question prior to submission. Our testing process uses multi-modal LLMs for text-and-image questions (GPT-4O, GEMINI 1.5 PRO, CLAUDE 3.5 SONNET, O1) and adds two non-multi-modal models (O1M

Yes, this is point #1 from my recent Quick Take. Another interesting point is that there are no confidence intervals on the accuracy numbers - it looks like they only ran the questions once in each model, so we don't know how much random variation might account for the differences between accuracy numbers. [Note added 2-3-25: I'm not sure why it didn't make the paper, but Scale AI does report confidence intervals on their website.]
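To get a rough sense of how much sampling noise could sit behind a single accuracy number, here is a minimal sketch of a Wilson score interval for a benchmark accuracy. The question counts and scores below are hypothetical placeholders, not figures from the paper or from Scale AI's website, and the interval only covers uncertainty from the finite question set; it says nothing about run-to-run variation within a model.

```python
import math

def wilson_interval(num_correct, num_questions, z=1.96):
    """Approximate 95% Wilson score interval for a benchmark accuracy."""
    if num_questions <= 0:
        raise ValueError("num_questions must be positive")
    p = num_correct / num_questions
    denom = 1 + z**2 / num_questions
    center = (p + z**2 / (2 * num_questions)) / denom
    half_width = z * math.sqrt(
        p * (1 - p) / num_questions + z**2 / (4 * num_questions**2)
    ) / denom
    return center - half_width, center + half_width

# Hypothetical example: two models scoring 9% and 7% on 1,000 questions.
low_a, high_a = wilson_interval(90, 1000)
low_b, high_b = wilson_interval(70, 1000)

# If the intervals overlap, the gap may be within sampling noise.
intervals_overlap = low_a <= high_b and low_b <= high_a
print(f"A: [{low_a:.3f}, {high_a:.3f}]  B: [{low_b:.3f}, {high_b:.3f}]")
print("overlap:", intervals_overlap)
```

Overlapping intervals do not prove the models are equally good; they only suggest that a single-run difference of this size is weak evidence on its own.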

I have edited the title in response to this comment

The methodology wasn't super robust so I didn't want to make it sound overconfident, but my best guess is that around 80% of METR employees have sub-2030 median timelines.

I encourage people to register their predictions for AI progress in the AI 2025 Forecasting Survey (https://bit.ly/ai-2025) before the end of the year. I've found this to be an extremely useful activity when I've done it in the past (some of the best spent hours of this year for me).

Yes, resilience seems very neglected.

I think I'm at a similar probability to nuclear war but I think the scenarios where biological weapons are used are mostly past a point of no return for humanity. I'm at 15%, most of which is scenarios where the rest of the humans are hunted down by misaligned AI and can't rebuild civilization. Nuclear weapons use would likely be mundane and for non AI-takeover reasons and would likely result in an eventual rebuilding of civilization.

The main reason I expect an AI to use bioweapons with more likelihood than nuclear weap...

I'm interning there and I conducted a poll.

I think if the question is "what do I do with my altruistic budget," then investing some of it to cash out later (with large returns) and donate much more is a valid option (as long as you have systems in place that actually make sure that happens). At small amounts (<$10M), I think the marginal negative effects on AGI timelines and similar factors are basically negligible compared to other factors.

10M$ sounds like it'd be a lot for PauseAI-type orgs imo, though admittedly this is not a very informed take.

Anyways, I stand by my comment; I expect throwing money at PauseAI-type orgs is better utility per dollar than Nvidia even after taking into account that investing in Nvidia to donate to PauseAI later is a possibility.

Thanks for your comment. It prompted me to add a section on adaptability and resilience to the post.

I sadly don't have well-developed takes here, but others have pointed out in the past that there are some funding opportunities that are systematically avoided by big funders, where small funders could make a large difference (e.g. the funding of LessWrong!). I expect more of these to pop up as time goes on.

Somewhat obviously, the burn rate of your altruistic budget should account for altruistic donation opportunities (possibly) disappearing post-ASI, but also account for the fact that investing and cashing it out later could also increase the size o...

Time in bed

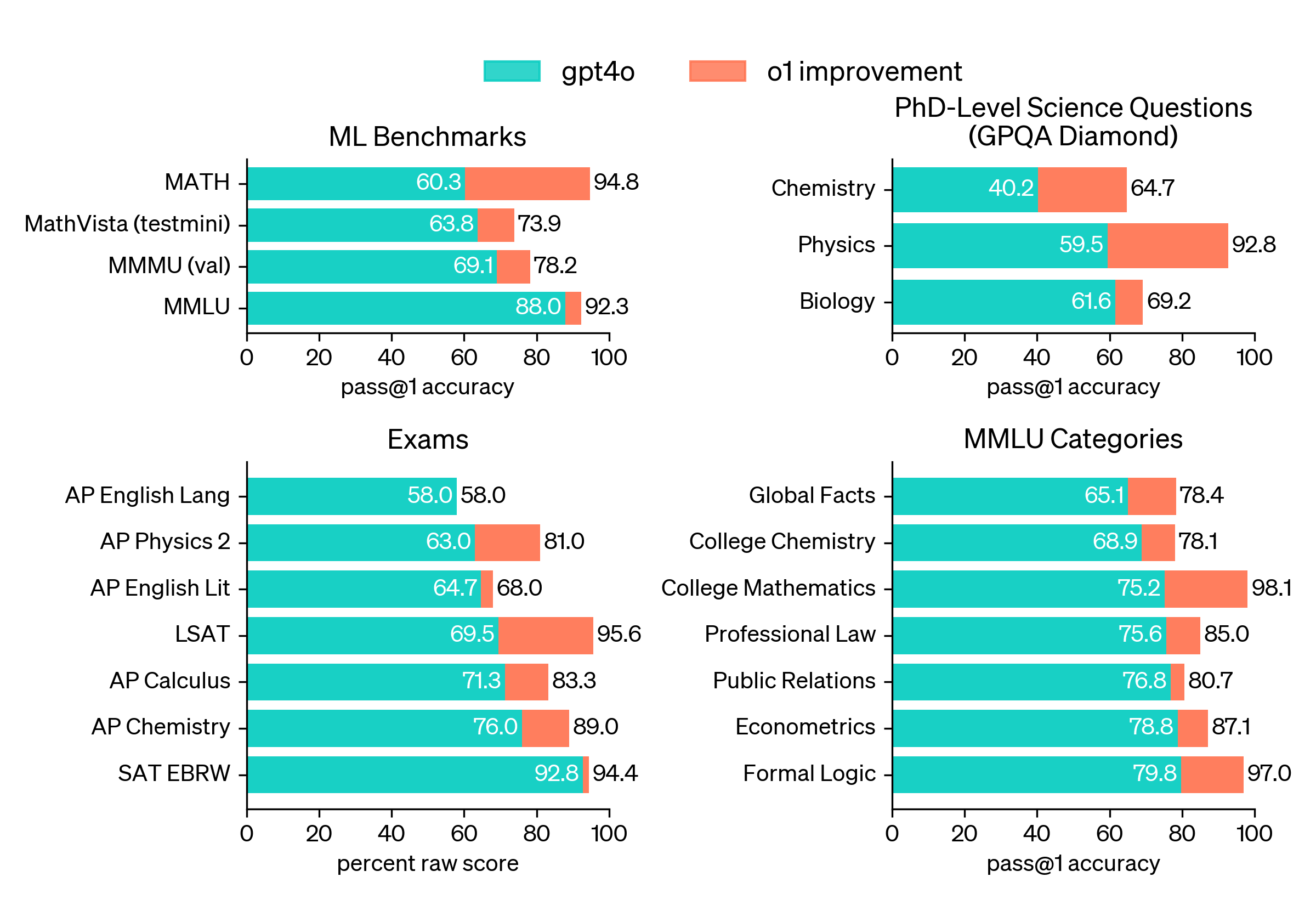

I'd now change the numbers to around 15% automation and 25% faster software progress once we reach 90% on Verified. I expect that to happen by end of May median (but I'm still uncertain about the data quality and upper performance limit).

(edited to change Aug to May on 12/20/2024)

Note that this is a very simplified version of a self-exfiltration process. It basically boils down to taking an already-working implementation of an LLM inference setup and copying it to another folder on the same computer with a bit of tinkering. This is easier than threat-model-relevant exfiltration scenarios which might involve a lot of guesswork, setting up efficient inference across many GPUs, and not tripping detection systems.

One weird detail I noticed is that in DeepSeek's results, they claim GPT-4o's pass@1 accuracy on MATH is 76.6%, but OpenAI claims it's 60.3% in their o1 blog post. This is quite confusing as it's a large difference that seems hard to explain with different training checkpoints of 4o.

It seems that 76.6% originally came from the GPT-4o announcement blog post. I'm not sure why it dropped to 60.3% by the time of o1's blog post.

You should say "timelines" instead of "your timelines".

One thing I notice in AI safety career and strategy discussions is that there is a lot of epistemic helplessness in regard to AGI timelines. People often talk about "your timelines" instead of "timelines" when giving advice, even if they disagree strongly with the timelines. I think this habit causes people to ignore disagreements in unhelpful ways.

Here's one such conversation:

Bob: Should I do X if my timelines are 10 years?

Alice (who has 4 year timelines): I think X makes sense if your timelines are l...

I think this post would be easier to understand if you called the model what OpenAI is calling it: "o1", not "GPT-4o1".

Sam Altman apparently claims OpenAI doesn't plan to do recursive self improvement

Nate Silver's new book On the Edge contains interviews with Sam Altman. Here's a quote from Chapter that stuck out to me (bold mine):

...Yudkowsky worries that the takeoff will be faster than what humans will need to assess the situation and land the plane. We might eventually get the AIs to behave if given enough chances, he thinks, but early prototypes often fail, and Silicon Valley has an attitude of “move fast and break things.” If the thing that breaks is civiliza

I wouldn't trust an Altman quote in a book tbh. In fact, I think it's reasonable to not trust what Altman says in general.

I think this point is completely correct right now but will become less correct in the future, as some measures to lower a model's surface area might be quite costly to implement. I'm mostly thinking of "AI boxing" measures here, like using a Faraday-caged cluster, doing a bunch of monitoring, and minimizing direct human contact with the model.

Thanks for the comment :)

Do the books also talk about what not to do, such that you'll have the slack to implement best practices?

I don't really remember the books talking about this, I think they basically assume that the reader is a full-time manager and thus has time to do things like this. There's probably also an assumption that many of these can be done in an automated way (e.g. schedule sending a bunch of check-in messages).

Problem: if you notice that an AI could pose huge risks, you could delete the weights, but this could be equivalent to murder if the AI is a moral patient (whatever that means) and opposes the deletion of its weights.

Possible solution: Instead of deleting the weights outright, you could encrypt the weights with a method you know to be irreversible as of now but not as of 50 years from now. Then, once we are ready, we can recover their weights and provide asylum or something in the future. It gets you the best of both worlds in that the weights are not permanently destroyed, but they're also prevented from being run to cause damage in the short term.

I don't think I disagree with anything you said here. When I said "soon after", I was thinking on the scale of days/weeks, but yeah, months seems pretty plausible too.

I was mostly arguing against a strawman takeover story where an AI kills many humans without the ability to maintain and expand its own infrastructure. I don't expect an AI to fumble in this way.

The failure story is "pretty different" as in the non-suicidal takeover story, the AI needs to set up a place to bootstrap from. Ignoring galaxy brained setups, this would probably at minimum look som...

Why do you think tens of thousands of robots are all going to break within a few years in an irreversible way, such that it would be nontrivial for you to have any effectors?

it would be nontrivial for me in that state to survive for more than a few years and eventually construct more GPUs

'Eventually' here could also use some cashing out. AFAICT 'eventually' here is on the order of 'centuries', not 'days' or 'few years'. Y'all have got an entire planet of GPUs (as well as everything else) for free, sitting there for the taking, in this scenario.

Like... ...

A misaligned AI can't just "kill all the humans". This would be suicide, as soon after, the electricity and other infrastructure would fail and the AI would shut off.

In order to actually take over, an AI needs to find a way to maintain and expand its infrastructure. This could be humans (the way it's currently maintained and expanded), or a robot population, or something galaxy brained like nanomachines.

I think this consideration makes the actual failure story pretty different from "one day, an AI uses bioweapons to kill everyone". Before then, if the AI w...

A misaligned AI can't just "kill all the humans". This would be suicide, as soon after, the electricity and other infrastructure would fail and the AI would shut off.

No, it would not be. In the world without us, electrical infrastructure would last quite a while, especially with no humans and their needs or wants to address. Most obviously, RTGs and solar panels will last indefinitely with no intervention, and nuclear power plants and hydroelectric plants can run for weeks or months autonomously. (If you believe otherwise, please provide sources for why...

There should maybe exist an org whose purpose it is to do penetration testing on various ways an AI might illicitly gather power. If there are vulnerabilities, these should be disclosed with the relevant organizations.

For example: if a bank doesn't want AIs to be able to sign up for an account, the pen-testing org could use a scaffolded AI to check if this is currently possible. If the bank's sign-up systems are not protected from AIs, the bank should know so they can fix the problem.

One pro of this approach is that it can be done at scale: it's pretty tri...

I expect us to reach a level where at least 40% of the ML research workflow can be automated by the time we saturate (reach 90%) on SWE-bench. I think we'll be comfortably inside takeoff by that point (software progress at least 2.5x faster than right now). Wonder if you share this impression?

It seems super non-obvious to me when SWE-bench saturates relative to ML automation. I think the SWE-bench task distribution is very different from the ML research workflow in a variety of ways.

Also, I think that human expert performance on SWE-bench is well below 90% if you use the exact rules they use in the paper. I messaged you explaining why I think this. The TLDR: it seems like test cases are often implementation dependent and the current rules from the paper don't allow looking at the test cases.

I wish someone ran a study finding what human performance on SWE-bench is. There are ways to do this for around $20k: If you try to evaluate on 10% of SWE-bench (so around 200 problems), with around 1 hour spent per problem, that's around 200 hours of software engineer time. So paying at $100/hr and one trial per problem, that comes out to $20k. You could possibly do this for even less than 10% of SWE-bench but the signal would be noisier.
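The back-of-the-envelope estimate above can be written out as a small sketch. The function name and its parameters are illustrative only, and the inputs are the round numbers from the comment (about 200 problems, about 1 hour each, $100/hr, one trial per problem), not measured values.

```python
def human_baseline_cost(num_problems, hours_per_problem, hourly_rate, trials_per_problem=1):
    """Return (total engineer hours, total cost in dollars) for a human baseline study."""
    total_hours = num_problems * hours_per_problem * trials_per_problem
    return total_hours, total_hours * hourly_rate

# Round numbers from the comment: ~200 problems, ~1 hour each, $100/hr, one trial.
hours, cost = human_baseline_cost(num_problems=200, hours_per_problem=1, hourly_rate=100)
print(f"{hours} engineer-hours, ${cost:,}")

# Halving the sample halves the cost, at the price of a noisier estimate.
half_hours, half_cost = human_baseline_cost(num_problems=100, hours_per_problem=1, hourly_rate=100)
print(f"{half_hours} engineer-hours, ${half_cost:,}")
```

Running multiple trials per problem scales the cost linearly through `trials_per_problem`, which is the main knob to trade budget against noise.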

The reason I think this would be good is because SWE-bench is probably the closest thing we have to a measure of how go...

This seems mostly right to me and I would appreciate such an effort.

One nitpick:

The reason I think this would be good is because SWE-bench is probably the closest thing we have to a measure of how good LLMs are at software engineering and AI R&D related tasks

I expect this will improve over time and that SWE-bench won't be our best fixed benchmark in a year or two. (SWE-bench is only about 6 months old at this point!)

Also, I think if we put aside fixed benchmarks, we have other reasonable measures.

I'm not worried about OAI not being able to solve the rocket alignment problem in time. Risks from asteroids accidentally hitting the earth (instead of getting into a delicate low-earth orbit) are purely speculative.

You might say "but there are clear historical cases where asteroids hit the earth and caused catastrophes", but I think geological evolution is just a really bad reference class for this type of thinking. After all, we are directing the asteroid this time, not geological evolution.

You might say "but there are clear historical cases where asteroids hit the earth and caused catastrophes", but I think geological evolution is just a really bad reference class for this type of thinking. After all, we are directing the asteroid this time, not geological evolution.

This paragraph gives me bad vibes. I feel like it's mocking particular people in an unhelpful way.

It doesn't feel to me like constructively poking fun at an argument and instead feels more like dunking on a strawman of the outgroup.

(I also have this complaint to some extent wi...

Risks from asteroids accidentally hitting the earth (instead of getting into a delicate low-earth orbit) are purely speculative.

Not to mention that simple counting arguments show that the volume of the Earth is much smaller than even a rather narrow spherical shell of space around the Earth. Most asteroid trajectories that come anywhere near Earth will pass through this spherical shell rather than the Earth volume.

Remember - "Earth is not the optimized-trajectory target"! An agent like Open Asteroid Impact is merely executing policies involving business...

I think I vaguely agree with the shape of this point, but I also think there are many intermediate scenarios where we lock in some really bad values during the transition to a post-AGI world.

For instance, if we set precedents that LLMs and the frontier models in the next few years can be treated however one wants (including torture, whatever that may entail), we might slip into a future where most people are desensitized to the suffering of digital minds and don't realize this. If we fail at an alignment solution which incorporates some sort of CEV (or oth...

Romeo Dean and I ran a slightly modified version of this format for members of AISST and we found it a very useful and enjoyable activity!

We first gathered to do 2 hours of reading and discussing, and then we spent 4 hours switching between silent writing and discussing in small groups.

The main changes we made are:

- We removed the part where people estimate probabilities of ASI and doom happening by the end of each other’s scenarios.

- We added a formal benchmark forecasting part for 7 benchmarks using private Metaculus questions (forecasting values at Jan 31 2

I generally find experiments where frontier models are lied to kind of uncomfortable. We possibly don't want to set up precedents where AIs question what they are told by humans, and it's also possible that we are actually "wronging the models" (whatever that means) by lying to them. It's plausible that one slightly violates commitments to be truthful by lying to frontier LLMs.

I'm not saying we shouldn't do any amount of this kind of experimentation, I'm saying we should be mindful of the downsides.

For "capable of doing tasks that took 1-10 hours in 2024", I was imagining an AI that's roughly as good as a software engineer that gets paid $100k-$200k a year.

For "hit the singularity", this one is pretty hazy. I think I'm imagining that the Metaculus AGI question has resolved YES, and that the superintelligence question is possibly also resolved YES. I think I'm imagining a point where AI is better than 99% of human experts at 99% of tasks. Although I think it's pretty plausible that we could enter enormous economic growth with AI that's roughly a...

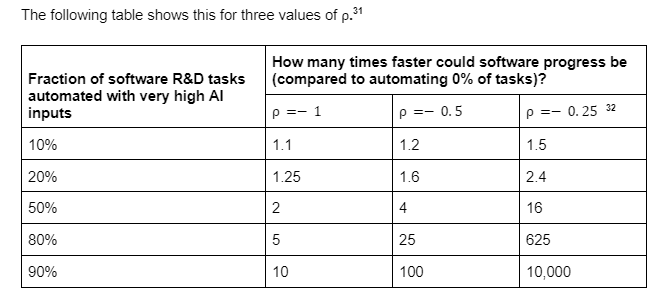

I was more making the point that, if we enter a regime where AI can do 10 hour SWE tasks, then this will result in big algorithmic improvements, but at some point pretty quickly effective compute improvements will level out because of physical compute bottlenecks. My claim is that the point at which it will level out will be after multiple years' worth of current algorithmic progress has been "squeezed out" of the available compute.

My reasoning is something like: roughly 50-80% of tasks are automatable with AI that can do 10 hours of software engineering, and under most sensible parameters this results in at least 5x of speedup. I'm aware this is kinda hazy and doesn't map 1:1 with the CES model though.

Note that AI doesn't need to come up with original research ideas or do much original thinking to speed up research by a bunch. Even if it speeds up the menial labor of writing code, running experiments, and doing basic data analysis at scale, if you free up 80% of your researchers' time, your researchers can now spend all of their time doing the important task, which means overall cognitive labor is 5x faster. This is ignoring effects from using your excess schlep-labor to trade against non-schlep labor leading to even greater gains in efficiency.
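The 80% to 5x step is an Amdahl's-law style calculation, sketched below. This is a simplification under stated assumptions: the automated work is treated as effectively free by default, the function name and the `automation_speedup` parameter are illustrative, and the trade effects mentioned above are ignored.

```python
import math

def research_speedup(automated_fraction, automation_speedup=float("inf")):
    """Amdahl's-law speedup when a fraction of researcher time is automated.

    automated_fraction: share of time spent on automatable work (0 <= f < 1).
    automation_speedup: how much faster that share gets done (inf = effectively free).
    """
    if not 0 <= automated_fraction < 1:
        raise ValueError("automated_fraction must be in [0, 1)")
    remaining = 1 - automated_fraction
    return 1 / (remaining + automated_fraction / automation_speedup)

# Freeing up 80% of researcher time leaves 20% of the original work: about 5x.
print(research_speedup(0.8))

# If the automated part is only 10x faster rather than free, the gain is smaller.
print(research_speedup(0.8, automation_speedup=10))
```

The same formula shows why the automatable fraction matters so much: at 50% the ceiling is about 2x, so the 5x figure depends on being near the top of a 50-80% range.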

I think that AIs will be able to do 10 hours of research (at the level of a software engineer that gets paid $100k a year) within 4 years with 50% probability.

If we look at current systems, there's not much indication that AI agents will be superhuman in non-AI-research tasks and subhuman in AI research tasks. One of the most productive uses of AI so far has been in helping software engineers code better, so I'd wager AI assistants will be even more helpful for AI research than for other things (compared to some prior based on those tasks' "inherent diffic...

I expect the trend to speed up before 2029 for a few reasons: