(Status: another point I find myself repeating frequently.)

One of the reasons I suspect we need a lot of serial time to solve the alignment problem is that alignment researchers don't seem to me to "stack", where "stacking" means something like: quadrupling the size of your team of highly skilled alignment researchers lets you finish the job in ~1/4 of the time.

It seems to me that whenever somebody new and skilled arrives on the alignment scene, with the sort of vision and drive that lets them push in a promising direction (rather than just doing incremental work that has little chance of changing the strategic landscape), they push in a new direction relative to everybody else. Eliezer Yudkowsky and Paul Christiano don't have any synergy between their research programs. Adding John Wentworth doesn't really speed up either of them. Adding Adam Shimi doesn't really speed up any of the previous three. Vanessa Kosoy isn't overlapping with any of the other four.

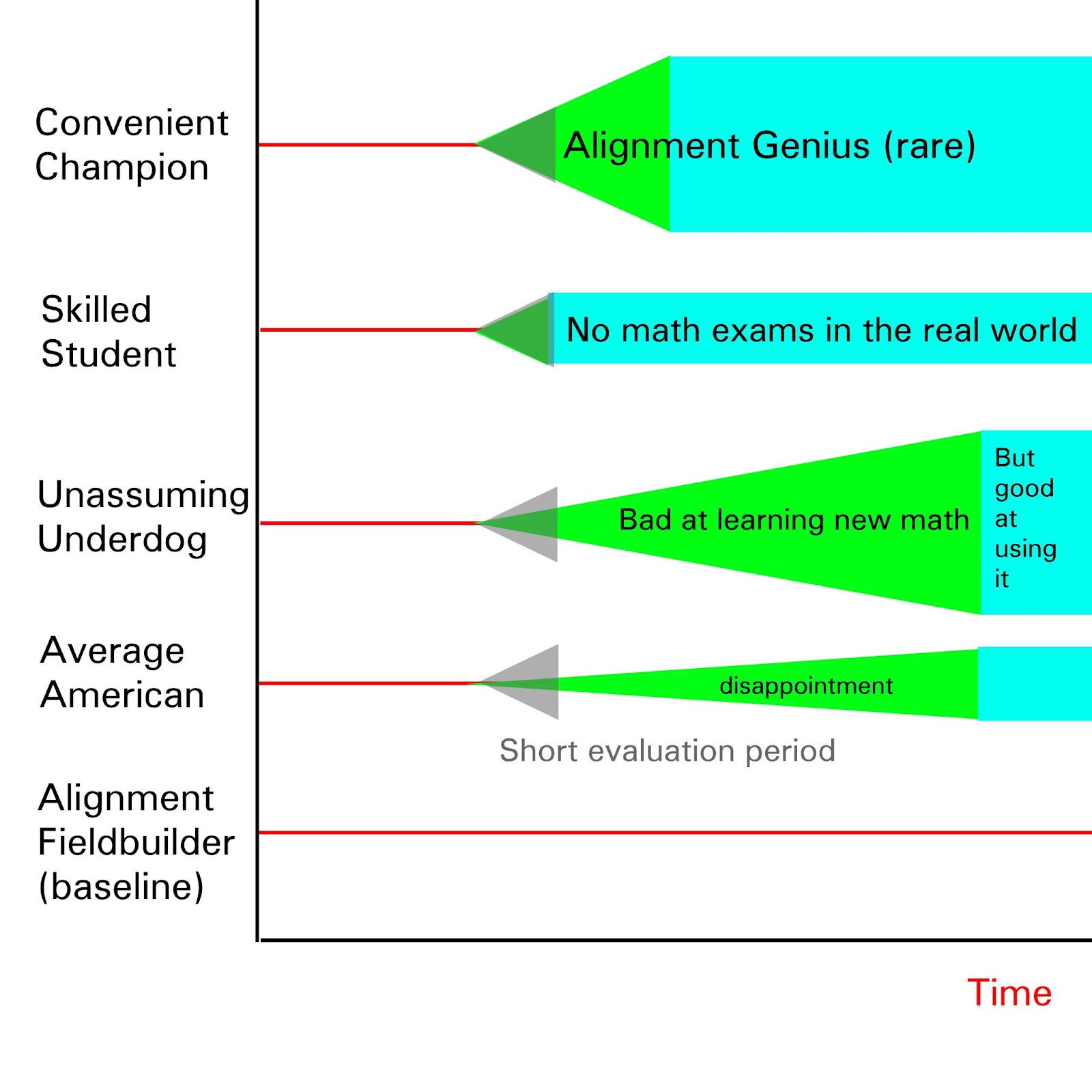

Sure, sometimes one of our visionary alignment-leaders finds a person or two that sees sufficiently eye-to-eye with them and can speed things along (such as Diffractor with Vanessa, it seems to me from a distance). And with ops support and a variety of other people helping out where they can, it seems possible to me to take one of our visionaries and speed them up by a factor of 2 or so (in a simplified toy model where we project ‘progress’ down to a single time dimension). But new visionaries aren't really joining forces with older visionaries; they're striking out on their own paths.

And to be clear, I think that this is fine and healthy. It seems to me that this is how fields are often built, with individual visionaries wandering off in some direction, and later generations following the ones who figured out stuff that was sufficiently cool (like Newton or Laplace or Hamilton or Einstein or Grothendieck). In fact, the phenomenon looks even more wide-ranging than that, to me: When studying the Napoleonic Wars, I was struck by the sense that Napoleon could have easily won if only he'd been everywhere at once; he was never able to find other generals who shared his spark. Various statesmen (Bismarck comes to mind) proved irreplaceable. Steve Jobs never managed to find a worthy successor, despite significant effort.

Also, I've tried a few different ways of getting researchers to "stack" (i.e., of getting multiple people capable of leading research, all leading research in the same direction, in a way that significantly shortens the amount of serial time required), and have failed at this. (Which isn't to say that you can't succeed where I failed!)

I don't think we're doing something particularly wrong here. Rather, I'd say: the space to explore is extremely broad; humans are sparsely distributed in the space of intuitions they're able to draw upon; people who have an intuition they can follow towards plausible alignment-solutions are themselves pretty rare; most humans don't have the ability to make research progress without an intuition to guide them. Each time we find a new person with an intuition to guide them towards alignment solutions, it's likely to guide them in a whole new direction, because the space is so large. Hopefully at least one is onto something.

But, while this might not be an indication of an error, it sure is a reason to worry. Because if each new alignment researcher pursues some new pathway, and can be sped up a little but not a ton by research-partners and operational support, then no matter how many new alignment visionaries we find, we aren't much decreasing the amount of time it takes to find a solution.

Like, as a crappy toy model, if every alignment-visionary's vision would ultimately succeed, but only after 30 years of study along their particular path, then no amount of new visionaries added will decrease the amount of time required from “30y since the first visionary started out”.

And of course, in real life, different paths have different lengths, and adding new people decreases the amount of time required at least a little in expectation. But not necessarily very much, and not linearly.
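For concreteness, here is a rough Python sketch of that toy model. Everything in it is an illustrative assumption rather than a claim about the real world: the function names are made up, all visionaries are assumed to start at the same time, the "different lengths" case draws path lengths uniformly between 20 and 40 years, and the `speedup` parameter stands in for the factor-of-2-or-so boost from research partners and ops support. The "stacked" baseline is the hypothetical world where N researchers finish a 30-year job in 30/N years.

```python
import random


def time_to_solution(path_lengths, speedup=1.0):
    """Years until the first path finishes, assuming every visionary
    starts at the same time and works only on their own path.

    `speedup` is a hypothetical uniform boost from partners and ops support.
    """
    return min(path_lengths) / speedup


def expected_time(n_visionaries, trials=10_000, low=20.0, high=40.0, seed=0):
    """Monte Carlo estimate of the expected time to the first solution when
    each path length is drawn uniformly from [low, high] years.

    The uniform distribution and its bounds are assumptions chosen purely
    for illustration.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        paths = [rng.uniform(low, high) for _ in range(n_visionaries)]
        total += time_to_solution(paths)
    return total / trials


def stacked_time(n_researchers, base_years=30.0):
    """Counterfactual in which researchers *do* stack: N people finish
    a base_years job in base_years / N."""
    return base_years / n_researchers


if __name__ == "__main__":
    # Every path takes exactly 30 years: headcount makes no difference.
    print(time_to_solution([30.0] * 1), time_to_solution([30.0] * 100))

    # Paths of varying length: more visionaries help, but far less than
    # stacking would.
    for n in (1, 4, 16):
        print(n, round(expected_time(n), 1), round(stacked_time(n), 1))
```

Under these assumptions the expected time can never drop below the shortest possible path (here, 20 years) however many visionaries are added, whereas the stacked baseline keeps shrinking in proportion to headcount.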

(And all this is to say nothing of how the workable paths might not even be visible to the first generation of visionaries; the intuitions that lead one to a solution might be the sort of thing that you can only see if you've been raised with the memes generated by the partial-successes and failures of failed research pathways, as seems-to-me to have been the case with mathematics and physics regularly in the past. But I digress.)

I think it's mostly right, in the sense that any given novel research artifact produced by Visionary A is unlikely to be useful for whatever research is currently pursued by Visionary B. But I think there's a more diffuse speed-up effect from scale, based on the following already happening:

The one thing all the different visionaries pushing in different directions do accomplish is mapping out the problem domain. If you're just prompted with the string "ML research is an existential threat", and you know nothing else about the topic, there's a plethora of obvious-at-first-glance lines of inquiry you can go down. Would prosaic alignment somehow not work, and if yes, why? How difficult would it be to interpret an ML model's internals? Can we prevent an ML model from becoming an agent? Is there some really easy hack to sidestep the problem? Would intelligence scale so sharply that the first AGI failure kills us all? If all you have to start with is just "ML research is an existential threat", all of these look... maybe not equally plausible, but not like something you can dismiss without at least glancing in that direction. And each glance takes up time.

On the other hand, if you're entering the field late, after other people have looked in these directions already, surveying the problem landscape is as easy as consuming their research artifacts. Maybe you disagree with some of them, but you can at least see the general shape of the thing, and every additional bit of research clarifies that shape even further. Said "clarity" allows you to better evaluate the problem, and even if you end up disagreeing with everyone else's priorities, the clearer the vision, the better you should be able to triangulate your own path.

So every bit of research probabilistically decreases the "distance" between the solution and the point at which a new visionary starts. Orrr, maybe not decreases the distance, but allows a new visionary to plot a path that looks less like a random walk and more like a straight line.