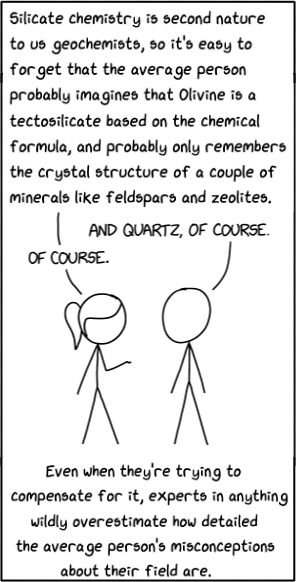

This point feels fairly obvious, yet seems worth stating explicitly.

Those of us familiar with the field of AI after the deep-learning revolution know perfectly well that we have no idea how our ML models work. Sure, we have an understanding of the dynamics of training loops and SGD's properties, and we know how ML models' architectures work. But we don't know what specific algorithms ML models' forward passes implement. We have some guesses, and some insights painstakingly mined by interpretability advances, but nothing even remotely like a full understanding.
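To make that concrete, here is a minimal sketch of the gap between "we wrote the training loop" and "we know what the trained network computes". It is only an illustration under my own assumptions: the tiny 2-4-1 sigmoid network, the XOR task, the seed, the learning rate, and every name in it (`HIDDEN`, `forward`, `train`, `all_params`) are hypothetical choices, not anything taken from a real system. We can read every line of the training code, and we can print every parameter afterwards, but the printout is just a list of floats rather than a legible statement of the algorithm, even when the target function is as simple as XOR.

```python
import math
import random

random.seed(0)  # arbitrary seed, used only so reruns give the same numbers

# XOR: a function whose "algorithm" fits in one line of ordinary code.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
HIDDEN = 4  # hypothetical hidden-layer width


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Parameters of a 2-HIDDEN-1 network, randomly initialised.
w1 = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b2 = 0.0


def forward(x):
    """Return (hidden activations, output) for one input pair."""
    h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(HIDDEN)]
    y = sigmoid(sum(w2[j] * h[j] for j in range(HIDDEN)) + b2)
    return h, y


def train(steps=5000, lr=0.5):
    """Plain per-example gradient descent on squared error."""
    global b2
    for _ in range(steps):
        for x, target in DATA:
            h, y = forward(x)
            # Error signal at the output, pushed back through the sigmoid.
            dy = (y - target) * y * (1.0 - y)
            for j in range(HIDDEN):
                # Hidden-unit error, computed before w2[j] is updated.
                dh = dy * w2[j] * h[j] * (1.0 - h[j])
                w2[j] -= lr * dy * h[j]
                w1[j][0] -= lr * dh * x[0]
                w1[j][1] -= lr * dh * x[1]
                b1[j] -= lr * dh
            b2 -= lr * dy


def all_params():
    """Flatten every learned number into one list."""
    return [w for row in w1 for w in row] + b1 + w2 + [b2]


train()
# Everything the model "knows" is in this list of floats.
print("parameters:", [round(p, 2) for p in all_params()])
print("outputs:   ", [round(forward(x)[1], 2) for x, _ in DATA])
```

Whether or not this particular run ends up fitting XOR well, the point stands: having full access to the parameters is not the same as having a readable description of what the forward pass does, and real models have billions of such numbers rather than seventeen.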

And we most certainly wouldn't automatically know how a fresh model works when it was trained on a novel architecture and has only just been spat out by the training loop.

We're all used to this state of affairs. It's implicitly assumed, shared background knowledge. But it's actually pretty unusual, when you first learn of it.

And...

I'm pretty sure that the general public doesn't actually know that. I don't have hard data, but it's my strong impression, based on reading AI-related online discussions in communities not focused around tech, talking to people uninterested in AI advances, and so on.[1]

They still think in GOFAI terms. They still believe that all of an AI's functionality has been deliberately programmed, not trained, into it. That behind every single thing ChatGPT can do, there's a human who implemented that functionality and understands it.

Or, at the very least, that it's written in a legible, human-readable and human-understandable format, and that we can intervene on it in order to cause precise, predictable changes.

Polls already show concern about AGI. If the fact that we don't know what these systems are actually thinking were widely known and properly appreciated? If there weren't the implicit assurance of "someone understands how it works and why it can't go catastrophically wrong"?

Well, I'd expect much more concern. Which might serve as a pretty good foundation for further pro-AI-regulation messaging. A way to acquire some political currency you can spend.

So if you're doing any sort of public appeals, I suggest putting the proliferation of this information on the agenda. You get about five words (per message) to the public, and "Powerful AIs Are Black Boxes" seems like a message worth sending out.[2]

[1] If you do have some hard data on that, that would be welcome.

[2] There's been some pushback on the "black box" terminology. I maintain that it's correct: ML models are black boxes relative to us, in the sense that by default, we don't have much more insight into what algorithms they execute than we'd have by looking at a homomorphically-encrypted computation to which we don't have the key, or by looking at the activity of a human brain using neuroimaging. There's been a nonzero amount of interpretability research, but this is still largely the case, and it would be almost fully the case for models produced by novel architectures.

ML models are not black boxes relative to SGD, yes. The algorithm can "see" all the computations happening, and tightly intervene on them. But that seems like a fairly counter-intuitive use of the term, and I maintain that "AIs are black boxes" conveys all the correct intuitions.

This fits with my experience talking to people unfamiliar with the field. Many do seem to think it's closer to GOFAI, explicitly programmed, maybe with a big database of stuff scraped from the internet that gets mixed-and-matched depending on the situation.

Examples include:

I think most people who have more than a very passing interest in the topic have a better understanding than that, though. And I suspect that many completely non-technical people have such a vague understanding of what "programmed" means that it could apply equally to training an LLM or to explicitly coding an algorithm. But I do think this is a real misunderstanding that is reasonably widespread.